Incorporating Global Visual Features into Attention-based ... · 2 Attention-based NMT In this...

Transcript of Incorporating Global Visual Features into Attention-based ... · 2 Attention-based NMT In this...

Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 992–1003Copenhagen, Denmark, September 7–11, 2017. c©2017 Association for Computational Linguistics

Incorporating Global Visual Features intoAttention-Based Neural Machine Translation

Iacer CalixtoADAPT Centre

Dublin City UniversitySchool of ComputingGlasnevin, Dublin 9

Qun LiuADAPT Centre

Dublin City UniversitySchool of ComputingGlasnevin, Dublin 9

Abstract

We introduce multi-modal, attention-based Neural Machine Translation (NMT)models which incorporate visual featuresinto different parts of both the encoderand the decoder. Global image featuresare extracted using a pre-trained convo-lutional neural network and are incorpo-rated (i) as words in the source sentence,(ii) to initialise the encoder hidden state,and (iii) as additional data to initialisethe decoder hidden state. In our exper-iments, we evaluate translations into En-glish and German, how different strategiesto incorporate global image features com-pare and which ones perform best. Wealso study the impact that adding syntheticmulti-modal, multilingual data brings andfind that the additional data have a posi-tive impact on multi-modal models. Wereport new state-of-the-art results and ourbest models also significantly improve ona comparable Phrase-Based Statistical MT(PBSMT) model trained on the Multi30kdata set according to all metrics evaluated.To the best of our knowledge, it is the firsttime a purely neural model significantlyimproves over a PBSMT model on all met-rics evaluated on this data set.

1 Introduction

Neural Machine Translation (NMT) has recentlybeen proposed as an instantiation of the sequenceto sequence (seq2seq) learning problem (Kalch-brenner and Blunsom, 2013; Cho et al., 2014b;Sutskever et al., 2014). In this problem, each train-ing example consists of one source and one tar-get variable-length sequence, with no prior infor-mation regarding the alignments between the two.

A model is trained to translate sequences in thesource language into corresponding sequences inthe target. This framework has been successfullyused in many different tasks, such as handwrittentext generation (Graves, 2013), image descriptiongeneration (Hodosh et al., 2013; Kiros et al., 2014;Mao et al., 2014; Elliott et al., 2015; Karpathy andFei-Fei, 2015; Vinyals et al., 2015), machine trans-lation (Cho et al., 2014b; Sutskever et al., 2014)and video description generation (Donahue et al.,2015; Venugopalan et al., 2015).

Recently, there has been an increase in the num-ber of natural language generation models thatexplicitly use attention-based decoders, i.e. de-coders that model an intra-sequential mapping be-tween source and target representations. For in-stance, Xu et al. (2015) proposed an attention-based model for the task of Image DescriptionGeneration (IDG) where the model learns to at-tend to specific parts of an image (the source) as itgenerates its description (the target). In MT, onecan intuitively interpret this attention mechanismas inducing an alignment between source and tar-get sentences, as first proposed by Bahdanau et al.(2015). The common idea is to explicitly frame alearning task in which the decoder learns to attendto the relevant parts of the source sequence whengenerating each part of the target sequence.

We are inspired by recent successes in usingattention-based models in both IDG and NMT.Our main goal in this work is to propose end-to-end multi-modal NMT models which effectivelyincorporate visual features in different parts of theattention-based NMT framework. The main con-tributions of our work are:

• We propose novel attention-based multi-modal NMT models which incorporate visualfeatures into the encoder and the decoder.

• We discuss the impact that adding synthetic

992

multi-modal and multilingual data brings tomulti-modal NMT.

• We show that images bring useful informa-tion to an NMT model and report state-of-the-art results.

One additional contribution of our work is thatwe corroborate previous findings by Vinyals et al.(2015) that suggested that using image features di-rectly as additional context to update the hiddenstate of the decoder (at each time step) preventslearning.

The remainder of this paper is structured as fol-lows. In §1.1 we briefly discuss relevant previousrelated work. We then revise the attention-basedNMT framework and further expand it into differ-ent multi-modal NMT models (§2). In §3 we intro-duce the data sets we use in our experiments. In §4we detail the hyperparameters, parameter initiali-sation and other relevant details of our models. Fi-nally, in §6 we draw conclusions and provide someavenues for future work.

1.1 Related work

Attention-based encoder-decoder models for MThave been actively investigated in recent years.Some researchers have studied how to improve at-tention mechanisms (Luong et al., 2015; Tu et al.,2016) and how to train attention-based models totranslate between many languages (Dong et al.,2015; Firat et al., 2016).

However, multi-modal MT has only recentlybeen addressed by the MT community in the formof a shared task (Specia et al., 2016). We note thatin the official results of this first shared task nosubmissions based on a purely neural architecturecould improve on the Phrase-Based SMT (PB-SMT) baseline. Nevertheless, researchers haveproposed to include global visual features in re-ranking n-best lists generated by a PBSMT sys-tem or directly in a purely NMT framework withsome success (Caglayan et al., 2016; Calixto et al.,2016; Libovicky et al., 2016; Shah et al., 2016).The best results achieved by a purely NMT modelin this shared task are those of Huang et al. (2016),who proposed to use global and regional imagefeatures extracted with the VGG19 (Simonyan andZisserman, 2014) and the RCNN (Girshick et al.,2014) convolutional neural networks (CNNs).

Similarly to one of the three models we pro-

pose,1 Huang et al. (2016) extract global featuresfor an image, project these features into the vectorspace of the source words and then add it as a wordin the input sequence. Their best model improvesover a strong NMT baseline and is comparableto results obtained with a PBSMT model trainedon the same data, although not significantly bet-ter. For that reason, their models are used as base-lines in our experiments. Next, we point out somekey differences between the work of Huang et al.(2016) and ours.

Architecture Their implementation is based onthe attention-based model of Luong et al. (2015),which has some differences to that of Bahdanauet al. (2015), used in our work (§2.1). Their en-coder is a single-layer unidirectional LSTM andthey use the last hidden state of the encoder to ini-tialise the decoder’s hidden state, therefore indi-rectly using the image features to do so. We usea bi-directional recurrent neural network (RNN)with GRU (Cho et al., 2014a) as our encoder, bet-ter encoding the semantics of the source sentence.

Image features We include image features sep-arately either as a word in the source sen-tence (§2.2.1) or directly for encoder (§2.2.2)or decoder initialisation (§2.2.3), whereas Huanget al. (2016) only use it as a word. We also show itis better to include an image exclusively for the en-coder or the decoder initialisation (Tables 1 and 2).

Data Huang et al. (2016) use object detectionsobtained with the RCNN of Girshick et al. (2014)as additional data, whereas we study the impactthat additional back-translated data brings.

Performance All our models outperform Huanget al. (2016)’s according to all metrics evaluated,even when they use additional object detections.If we use additional back-translated data, the dif-ference becomes even larger.

2 Attention-based NMT

In this section, we briefly revise the attention-based NMT framework (§2.1) and expand it intoa multi-modal NMT framework (§2.2).

2.1 Text-only attention-based NMT

We follow the notation of Bahdanau et al. (2015)and Firat et al. (2016) throughout this section.

1This idea has been developed independently by both re-search groups.

993

Given a source sequence X = (x1, x2, · · · , xN )and its translation Y = (y1, y2, · · · , yM ), an NMTmodel aims at building a single neural networkthat translates X into Y by directly learning tomodel p(Y |X). Each xi is a row index in a sourcelookup matrix Wx ∈ R|Vx|×dx (the source wordembeddings matrix) and each yj is an index in atarget lookup matrix Wy ∈ R|Vy |×dy (the targetword embeddings matrix). Vx and Vy are sourceand target vocabularies and dx and dy are sourceand target word embeddings dimensionalities, re-spectively.

A bidirectional RNN with GRU is used asthe encoder. A forward RNN

−→Φ enc reads X

word by word, from left to right, and gener-ates a sequence of forward annotation vectors(−→h 1,−→h 2, · · · ,−→h N ) at each encoder time step

i ∈ [1, N ]. Similarly, a backward RNN←−Φ enc reads

X from right to left, word by word, and gener-ates a sequence of backward annotation vectors(←−h 1,←−h 2, · · · ,←−h N ), as in (1):−→

hi =−→Φ enc

(Wx[xi],

−→h i−1

),

←−hi =

←−Φ enc

(Wx[xi],

←−h i+1

). (1)

The final annotation vector for a given time step iis the concatenation of forward and backward vec-tors hi =

[−→hi;←−hi

].

In other words, each source sequence X isencoded into a sequence of annotation vectorsh = (h1,h2, · · · ,hN ), which are in turn used bythe decoder: essentially a neural language model(LM) (Bengio et al., 2003) conditioned on the pre-viously emitted words and the source sentence viaan attention mechanism.

At each time step t of the decoder, we computea time-dependent context vector ct based on theannotation vectors h, the decoder’s previous hid-den state st−1 and the target word yt−1 emitted bythe decoder in the previous time step.2

We follow Bahdanau et al. (2015) and use asingle-layer feed-forward network to compute anexpected alignment et,i between each source anno-tation vector hi and the target word to be emittedat the current time step t, as in (2):

et,i = vaT tanh(Uast−1 + Wahi). (2)

In Equation (3), these expected alignments are

2At training time, the correct previous target word yt−1

is known and therefore used instead of yt−1. At test or in-ference time, yt−1 is not known and yt−1 is used instead.Bengio et al. (2015) discussed problems that may arise fromthis difference between training and inference distributions.

normalised and converted into probabilities:

αt,i =exp (et,i)∑N

j=1 exp (et,j), (3)

where αt,i are called the model’s attentionweights, which are in turn used in computing thetime-dependent context vector ct =

∑Ni=1 αt,ihi.

Finally, the context vector ct is used in computingthe decoder’s hidden state st for the current timestep t, as shown in Equation (4):

st = Φdec(st−1,Wy[yt−1], ct), (4)where st−1 is the decoder’s previous hidden state,Wy[yt−1] is the embedding of the word emitted inthe previous time step, and ct is the updated time-dependent context vector.

We use a single-layer feed-forward neural net-work to initialise the decoder’s hidden state s0 attime step t = 0 and feed it the concatenation of thelast hidden states of the encoder’s forward RNN(−→Φ enc) and backward RNN (

←−Φ enc), as in (5):

s0 = tanh(Wdi[

←−h 1;−→hN ] + bdi

), (5)

where Wdi and bdi are model parameters. SinceRNNs normally better store information aboutrecent inputs in comparison to more distantones (Hochreiter and Schmidhuber, 1997; Bah-danau et al., 2015), we expect to initialise the de-coder’s hidden state with a strong source sentencerepresentation, i.e. a representation with a strongfocus on both the first and the last tokens in thesource sentence.

2.2 Multi-modal NMT (MNMT)

Our models can be seen as expansions of theattention-based NMT framework described in §2with the addition of a visual component to incor-porate image features.

Simonyan and Zisserman (2014) trained andevaluated an extensive set of deep ConvolutionalNeural Networks (CNNs) for classifying imagesinto one out of the 1000 classes in ImageNet (Rus-sakovsky et al., 2015). We use their 19-layer VGGnetwork (VGG19) to extract image feature vec-tors for all images in our dataset. We feed animage to the pre-trained VGG19 network and usethe 4096D activations of the penultimate fully-connected layer FC73 as our image feature vector,henceforth referred to as q.

We propose three different methods to incor-porate images into the attentive NMT framework:

3We use the activations of the FC7 layer, which encodeinformation about the entire image, of the VGG19 network(configuration E) in Simonyan and Zisserman (2014)’s paper.

994

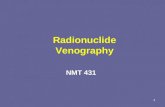

(a) An encoder bidirectional RNN thatuses image features as words in thesource sequence.

(b) Using an image to initialise the en-coder hidden states.

(c) Image as additional data to initialisethe decoder hidden state s0.

Figure 1: Multi-modal neural machine translation models IMGW, IMGE, and IMGD.

using an image as words in the source sentence(§2.2.1), using an image to initialise the sourcelanguage encoder (§2.2.2) and the target languagedecoder (§2.2.3).

We also evaluated a fourth mechanism to incor-porate images into NMT, namely to use an imageas one of the different contexts available to the de-coder at each time step of the decoding process.We added the image features directly as an addi-tional context, in addition to Wy[yt−1], st−1 andct, to compute the hidden state st of the decoderat a given time step t. We corroborate previousfindings by Vinyals et al. (2015) in that adding theimage features as such prevents the model fromlearning.4

2.2.1 Images as source words: IMGW

One way we propose to incorporate images intothe encoder is to project an image feature vectorinto the space of the words of the source sentence.We use the projected image as the first and/or lastword of the source sentence and let the attentionmodel learn when to attend to the image represen-tation. Specifically, given the global image featurevector q ∈ R4096, we compute (6):

d = W 2I · (W 1

I · q + b1I) + b2

I , (6)where W 1

I ∈ R4096×4096 and W 2I ∈ R4096×dx are

image transformation matrices, b1I ∈ R4096 and

b2I ∈ Rdx are bias vectors, and dx is the source

words vector space dimensionality, all trained withthe model. We then directly use d as words in thesource words vector space: as the first word only(model IMG1W), and as the first and last words of

4Outputs would typically consist of sets of 2-5 words re-peated many times, usually without any syntax. For com-parison, translations for the translated Multi30k test set(described in §3) achieve just 3.8 BLEU (Papineni et al.,2002), 15.5 METEOR (Denkowski and Lavie, 2014) and 93.0TER (Snover et al., 2006).

the source sentence (model IMG2W).An illustration of this idea is given in Figure 1a,

where a source sentence that originally containedN tokens, after including the image as sourcewords will contain N + 1 tokens (model IMG1W)or N + 2 tokens (model IMG2W). In modelIMG1W, the image is projected as the first sourceword only (solid line in Figure 1a); in modelIMG2W, it is projected into the source words spaceas both first and last words (both solid and dashedlines in Figure 1a).

Given a sequence X = (x1, x2, · · · , xN ) in thesource language, we concatenate the transformedimage vector d to Wx[X] and apply the forwardand backward encoder RNN passes, generatinghidden vectors as in Figure 1a. When comput-ing the context vector ct (Equations (2) and (3)),we effectively make use of the transformed imagevector, i.e. the αt,i attention weight parameterswill use this information to attend or not to the im-age features.

By including images into the encoder in mod-els IMG1W and IMG2W, our intuition is that (i) byincluding the image as the first word, we propa-gate image features into the source sentence vectorrepresentations when applying the forward RNN−→Φ enc (vectors

−→hi), and (ii) by including the image

as the last word, we propagate image features intothe source sentence vector representations whenapplying the backward RNN

←−Φ enc (vectors

←−hi).

2.2.2 Images for encoder initialisation: IMGE

In the original attention-based NMT model de-scribed in §2, the hidden state of the encoder isinitialised with the zero vector

#»0 . Instead, we

propose to use two new single-layer feed-forwardneural networks to compute the initial states of theforward RNN

−→Φ enc and the backward RNN

←−Φ enc,

995

respectively, as illustrated in Figure 1b.Similarly to §2.2.1, given a global image feature

vector q ∈ R4096, we compute a vector d usingEquation (6), only this time the parameters W 2

I

and b2I project the image features into the same di-

mensionality as the textual encoder hidden states.The feed-forward networks used to initialise the

encoder hidden state are computed as in (7):←−h init = tanh

(Wfd + bf

),

−→h init = tanh

(Wbd + bb

), (7)

where Wf and Wb are multi-modal projectionmatrices that project the image features d into theencoder forward and backward hidden states di-mensionality, respectively, and bf and bb are biasvectors.

2.2.3 Images for decoder initialisation:IMGD

To incorporate an image into the decoder, we in-troduce a new single-layer feed-forward neuralnetwork to be used instead of the one described inEquation 5. Originally, the decoder’s initial hid-den state was computed using the concatenationof the last hidden states of the encoder forwardRNN (

−→Φ enc) and backward RNN (

←−Φ enc), respec-

tively−→hN and

←−h 1.

Our proposal is that we include the image fea-tures as additional input to initialise the decoderhidden state at time step t = 0, as in (8):

s0 = tanh(Wdi[

←−h 1;−→hN ] + Wmd + bdi

), (8)

where Wm is a multi-modal projection matrix thatprojects the image features d into the decoder hid-den state dimensionality and Wdi and bdi are thesame as in Equation (5).

Once again we compute d by applying Equa-tion (6) onto a global image feature vectorq ∈ R4096, only this time the parameters W 2

I andb2

I project the image features into the same dimen-sionality as the decoder hidden states. We illus-trate this idea in Figure 1c.

3 Data set

Our multi-modal NMT models need bilingual sen-tences accompanied by one or more images astraining data. The original Flickr30k data set con-tains 30K images and 5 English sentence descrip-tions for each image (Young et al., 2014). Weuse the translated and the comparable Multi30kdatasets (Elliott et al., 2016), henceforth referredto as M30kT and M30kC, respectively, which aremultilingual expansions of the original Flickr30k.

For each of the 30K images in the Flickr30k,the M30kT has one of its English descriptionsmanually translated into German by a professionaltranslator. Training, validation and test sets con-tain 29K, 1014 and 1K images, respectively, eachaccompanied by one sentence pair (the originalEnglish sentence and its German translation). Foreach of the 30K images in the Flickr30k, theM30kC has five descriptions in German collectedindependently of the English descriptions. Train-ing, validation and test sets contain 29K, 1014 and1K images, respectively, each accompanied by 5English and 5 German sentences.

We use the scripts in Moses (Koehn et al., 2007)to normalise, truecase and tokenize English andGerman descriptions and we also convert space-separated tokens into subwords (Sennrich et al.,2016b). All models use a common vocabularyof ∼83K English and ∼91K German subword to-kens. If sentences in English or German are longerthan 80 tokens, they are discarded.

We use the entire M30kT training set for train-ing, its validation set for model selection withBLEU, and its test set to evaluate our models.In order to study the impact that additional train-ing data brings to the models, we use the base-line model described in §2 trained on the textualpart of the M30kT data set (German→English andEnglish→German) without the images to buildback-translation models (Sennrich et al., 2016a).We back-translate the 145K German (English) de-scriptions in the M30kC into English (German)and include the triples (synthetic English descrip-tion, German description, image) as additionaltraining data when translating into German, and(synthetic German description, English descrip-tion, image) when translating into English.

We train models to translate from English intoGerman and from German into English, and re-port evaluation of cased, tokenized sentences withpunctuation.

4 Experimental setup

Our encoder is a bidirectional RNN with GRU(one 1024D single-layer forward RNN and one1024D single-layer backward RNN). Source andtarget word embeddings are 620D each and bothare trained jointly with our model. All non-recurrent matrices are initialised by sampling froma Gaussian (µ = 0, σ = 0.01), recurrent matricesare orthogonal and bias vectors are all initialised

996

to zero. Our decoder RNN also uses GRU and isa neural LM (Bengio et al., 2003) conditioned onits previous emissions and the source sentence bymeans of the source attention mechanism.

Image features are obtained by feeding im-ages to the pre-trained VGG19 network of Si-monyan and Zisserman (2014) and using the ac-tivations of the penultimate fully-connected layerFC7. We apply dropout with a probability of 0.2in both source and target word embeddings andwith a probability of 0.5 in the image features (inall MNMT models), in the encoder and decoderRNNs inputs and recurrent connections, and be-fore the readout operation in the decoder RNN.We follow Gal and Ghahramani (2016) and applydropout to the encoder bidirectional RNN and de-coder RNN using the same mask in all time steps.

Our models are trained using stochastic gra-dient descent with Adadelta (Zeiler, 2012) andminibatches of size 40 for improved generalisa-tion (Keskar et al., 2017), where each training in-stance consists of one English sentence, one Ger-man sentence and one image. We apply early stop-ping for model selection based on BLEU scores,so that if a model does not improve on the valida-tion set for more than 20 epochs, training is halted.

We evaluate our models’ translation qual-ity quantitatively in terms of BLEU4 (Papineniet al., 2002), METEOR (Denkowski and Lavie,2014), TER (Snover et al., 2006), and chrF3scores5 (Popovic, 2015) and we report statisti-cal significance for the three first metrics us-ing approximate randomisation computed withMultEval (Clark et al., 2011).

As our main baseline we train an attention-based NMT model (§2) in which only the tex-tual part of M30kT is used for training. We alsotrain a PBSMT model built with Moses on thesame English→German (German→English) data,where the LM is a 5–gram LM with modifiedKneser-Ney smoothing (Kneser and Ney, 1995)trained on the German (English) of the M30kTdataset. We use minimum error rate training (Och,2003) for tuning the model parameters for BLEUscores. Our third baseline (English→German), isthe best comparable multi-modal model by Huanget al. (2016) and also their best model with addi-tional object detections: respectively models m1(image at head) and m3 in the authors’ paper. Fi-nally, our fourth baseline (German→English) is

5We specifically compute character 6-gram F3 scores.

BLEU4↑ METEOR↑ TER↓ chrF3↑English→German

PBSMT 32.9 54.1 45.1 67.4NMT 33.7 52.3 46.7 64.5Huang 35.1 52.2 — —+ RCNN 36.5 54.1 — —

IMG1W 37.1†‡ (↑ 3.4) 54.5†‡ (↑ 0.4) 42.7†‡ (↓ 2.4) 66.9 (↓ 0.5)IMG2W 36.9†‡ (↑ 3.2) 54.3†‡ (↑ 0.2) 41.9†‡ (↓ 3.2) 66.8 (↓ 0.6)IMGE 37.1†‡ (↑ 3.4) 55.0†‡ (↑ 0.9) 43.1†‡ (↓ 2.0) 67.6 (↑ 0.2)IMGD 37.3†‡ (↑ 3.6) 55.1†‡ (↑ 1.0) 42.8†‡ (↓ 2.3) 67.7 (↑ 0.3)IMG2W+D 35.7†‡ (↑ 2.0) 53.6†‡ (↓ 0.5) 43.3†‡ (↓ 1.8) 66.2 (↓ 1.2)IMGE+D 37.0†‡ (↑ 3.3) 54.7†‡ (↑ 0.6) 42.6†‡ (↓ 2.5) 67.2 (↓ 0.2)

German→English

PBSMT 32.8 34.8 43.9 61.8NMT 38.2 35.8 40.2 62.8

IMG2W 39.5†‡ (↑ 1.3) 37.1†‡ (↑ 1.3) 37.1†‡ (↓ 3.1) 63.8 (↑ 1.0)IMGE 41.1†‡ (↑ 2.9) 37.7†‡ (↑ 1.9) 37.9†‡ (↓ 2.3) 65.7 (↑ 2.9)IMGD 41.3†‡ (↑ 3.1) 37.8†‡ (↑ 2.0) 37.9†‡ (↓ 2.3) 65.7 (↑ 2.9)IMG2W+D 39.9†‡ (↑ 1.7) 37.2†‡ (↑ 1.4) 37.0†‡ (↓ 3.2) 64.4 (↑ 1.6)IMGE+D 41.9†‡ (↑ 3.7) 37.9†‡ (↑ 2.1) 37.1†‡ (↓ 3.1) 66.0 (↑ 3.2)

Table 1: BLEU4, METEOR, chrF3 (higher is bet-ter) and TER scores (lower is better) on the M30kTtest set for the two text-only baselines PBSMTand NMT, the two multi-modal NMT models byHuang et al. (2016) (English→German only) andour MNMT models that: (i) use images as wordsin the source sentence (IMG1W, IMG2W), (ii) useimages to initialise the encoder (IMGE), and (iii)use images as additional data to initialise the de-coder (IMGD). Best text-only baselines are un-derscored and best overall results appear in bold.We highlight in parentheses the improvementsbrought by our models compared to the best cor-responding text-only baseline score. Results differsignificantly from PBSMT baseline (†) or NMTbaseline (‡) with p = 0.05.

the best-performing model in the WMT’16 multi-modal MT shared task (Shah et al., 2016), hence-forth PBSMT+. It uses image features as well asadditional data from WordNet (Miller, 1995) to re-rank n-best lists.

4.1 Results

The Multi30K dataset contains images and bilin-gual descriptions. Overall, it is a small datasetwith a small vocabulary whose sentences havesimple syntactic structures and not much ambigu-ity (Elliott et al., 2016). This is reflected in thefact that even the simplest baselines perform fairlywell on it, i.e. the smallest BLEU scores of 32.9for translating into German, which are still reason-ably good results.

997

BLEU4↑ METEOR↑ TER↓ chrF3↑English→German

original training dataIMG2W 36.9 54.3 41.9 66.8IMGE 37.1 55.0 43.1 67.6IMGD 37.3 55.1 42.8 67.7

+ back-translated training dataPBSMT 34.0 55.0 44.7 68.0NMT 35.5 53.4 43.3 65.3

IMG2W 36.7†‡ (↑ 1.2) 54.6†‡ (↓ 0.4) 42.0†‡ (↓ 1.3) 66.8 (↓ 1.2)IMGE 38.5†‡ (↑ 3.0) 55.7†‡ (↑ 0.9) 41.4†‡ (↓ 1.9) 68.3 (↑ 0.3)IMGD 38.5†‡ (↑ 3.0) 55.9†‡ (↑ 1.1) 41.6†‡ (↓ 1.7) 68.4 (↑ 0.4)

German→English

PBSMT+ 42.5 39.5 35.6 68.7

original training dataIMG2W 39.5 37.1 37.1 63.8IMGE 41.1 37.7 37.9 65.7IMGD 41.3 37.8 37.9 65.7

+ back-translated training dataNMT 42.6 38.9 36.1 67.6

IMG2W 42.4†‡ (↓ 0.2) 39.0†‡ (↑ 0.1) 34.7†‡ (↓ 1.4) 67.6 (↑ 0.0)

IMGE 43.9†‡ (↑ 1.3) 39.7†‡ (↑ 0.8) 34.8†‡ (↓ 1.3) 68.7 (↑ 1.1)IMGD 43.4†‡ (↑ 0.8) 39.3†‡ (↑ 0.4) 35.2†‡ (↓ 0.9) 67.8 (↑ 0.2)

Improvements (original vs. + back-translated)English→German / German→English

IMG2W ↓ 0.2 / ↑ 2.9 ↑ 0.1 / ↑ 1.9 ↑ 0.1 / ↓ 2.4 ↑ 0.0 / ↑ 3.8IMGE ↑ 1.4 / ↑ 2.8 ↑ 0.7 / ↑ 2.0 ↓ 1.8 / ↓ 3.1 ↑ 0.7 / ↑ 2.9IMGD ↑ 1.2 / ↑ 2.1 ↑ 0.8 / ↑ 1.5 ↓ 1.2 / ↓ 2.7 ↑ 0.7 / ↑ 2.1

Table 2: BLEU4, METEOR, TER and chrF3scores on the M30kT test set for models trained onoriginal and additional back-translated data. Besttext-only baselines are underscored and best over-all results in bold. We highlight in parenthesesthe improvements brought by our models com-pared to the best baseline score. Results differsignificantly from PBSMT baseline (†) or NMTbaseline (‡) with p = 0.05. We also show theimprovements each model yields in each metricwhen only trained on the original M30kT trainingset vs. also including additional back-translateddata. PBSMT+ is the best model in the multi-modal MT shared task (Specia et al., 2016).

Multi30k In Table 1, we show resultsfor translating from English→German andGerman→English. When translating into Ger-man, our multi-modal models perform well, withmodels IMGE and IMGD improving on bothbaselines according to all metrics analysed. Wealso note that all models but IMG2W+D performconsistently better than the strong multi-modalNMT baseline of Huang et al. (2016), even whenthis model has access to more data (+RCNNfeatures).6 Combining image features in the

6In fact, model IMG2W+D still improves on the multi-modal baseline of Huang et al. (2016) when trained on the

encoder and the decoder at the same time does notseem to improve results compared to using theimage features in only the encoder or the decoderwhen translating into German. To the best ofour knowledge, it is the first time a purely neuralmodel significantly improves over a PBSMTmodel in all metrics on this data set.

When translating into English, all multi-modalmodels significantly improve over the NMTbaseline, with the only exception being modelIMG2W’s BLEU scores. In this scenario, modelIMGE+D is the best performing one according toall but one metric. However, differences betweenmulti-modal models are not statistically signifi-cant, i.e. all multi-modal models but IMG2W andIMG2W+D perform comparably.

Additional back-translated data Arguably, themain downside of applying multi-modal NMT ina real-world scenario is the small amount of pub-licly available training data (∼30K entries). Forthat reason, we back-translated the German andEnglish sentences in the M30kC and created twosets of 145K synthetic triples, one for each trans-lation direction, as described in §3.

In Table 2, we present results for some ofthe models evaluated in Table 1 but when alsotrained on the additional data. In order to addmore data to our PBSMT baseline, we simplyadded the German sentences in the M30kC to trainthe LM.7 We also include results for PBSMT+,which uses image features as well as additionalfeatures extracted using WordNet (Shah et al.,2016). When translating into German, both ourmodels IMGE and IMGD that use global im-age features to initialise the encoder and the de-coder, respectively, significantly improve accord-ing to BLEU, METEOR and TER with the ad-ditional back-translated data, and also achievedbetter chrF3 scores. When translating into En-glish, IMGE is the only model to significantly im-prove over both baselines according to all met-rics with the additional back-translated data, alsoimproving chrF3 scores. We highlight that ourbest-performing model IMGE significantly outper-forms PBSMT+ according to BLEU and TER, andall our other multi-modal models perform com-parably to it. This is a noteworthy finding, since

same data.7Adding the synthetic sentence pairs to train the baseline

PBSMT model, as we did with all neural MT models, deteri-orated the results.

998

Multi30k test set (English→German)Ensemble? BLEU4 ↑ METEOR↑ TER↓ chrF3↑

IMGD × 37.3 55.1 42.8 67.7

IMGD + IMGE X 40.1 (↑ 2.8) 58.5 (↑ 3.4) 40.7 (↓ 2.1) 68.1 (↑ 0.4)

IMGD + IMGE + IMG2W X 41.0 (↑ 3.7) 58.9 (↑ 3.8) 39.7 (↓ 3.1) 68.3 (↑ 0.6)

IMGD + IMGE + IMG2W + IMGD X 41.3 (↑ 4.0) 59.2 (↑ 4.1) 39.5 (↓ 3.3) 68.5 (↑ 0.8)

Table 3: Results for different combinations of multi-modal models, all trained on the original M30kTtraining data only, evaluated on the M30kT test set.

PBSMT+ uses image features as well as additionaldata from WordNet and, to the best of our knowl-edge, is the best published model in this languagepair and data set to date.

Ensemble decoding We now report on how canensemble decoding be used to improve transla-tions obtained with our multi-modal NMT mod-els. In order to do that, we use different combi-nations of models trained on the original M30kTtraining set to translate from English into German.We built ensembles of different models by start-ing with our best performing multi-modal modelon this language pair and data set, IMGD, and byadding new models to the ensemble one by one,until we reach a maximum of four independentmodels, all of which are trained separately and onthe original M30kT training data only. In Table 3,we show results when translating the M30kT’s testset. These models were also evaluated in our re-cent participation in the WMT 2017 multi-modalMT shared task (Calixto et al., 2017a).

We first note that to add more models to the en-semble seems to always improve translations, andby a considerable margin (∼ 3 BLEU/METEORpoints). Adding model IMG2W to the ensemblealready consisting of models IMGE and IMGD im-proves translations according to all metrics evalu-ated. This is an interesting result, since comparedto these other two multi-modal models, modelIMG2W performs poorly according to BLEU, ME-TEOR and chrF3. Regardless of that fact, ourbest results are obtained with an ensemble of fourdifferent multi-modal models, including modelIMG2W.

By using an ensemble of four different multi-modal NMT models trained on the translatedMulti30k training data, we were able to obtaintranslations comparable to or even better thanthose obtained with the strong multi-modal NMTmodel of Calixto et al. (2017b), which is pre-trained on large amounts of English–German data

and uses local image features. Finally, we haverecently participated in the WMT 2017 multi-modal MT shared task, and our system submis-sions ranked among the best performing systemsunder the constrained data regime (Calixto et al.,2017a). We note that our models performed par-ticularly well on the ambiguous MSCOCO testset (Elliott et al., 2017), which indicate its abil-ity to use the image information in disambiguatingdifficult source sentences into their correct transla-tions.

5 Error Analysis

In Table 4 we show translations into German gen-erated by different models for one entry of theM30k test set. In this example, the last three multi-modal models extrapolate the reference+imageand describe “ceremony” as a “wedding cere-mony” (IMG2W) and as an “Olympics ceremony”(IMGE and IMGD). This could be due to thefact that the training set is small, depicts a smallvariation of different scenes and contains differentforms of biasses (van Miltenburg, 2015).

In Table 5, we draw attention to an examplewhere some models generate what seems to benovel visual terms. Neither the source Germansentence nor the English reference translation con-tained the translated units “having fun” or “Mex-ican restaurant”, although both could have beeninferred at least partially from the image. In thisexample, the visual term “having fun” is also gen-erated by the baseline NMT model, making it clearthat at times what seems like a translation ex-tracted exclusively from the image may have beenlearnt from the training text data. However, noneof the two baselines translated “MexikanischenSetting” as “Mexican restaurant”, but four out ofthe five multi-modal models did. The multi-modalmodels also had problems translating the German“trinkt Shots” (drinking shots). We observe trans-lations such as “having drinks” (IMG2W), which

999

src. a woman with long hair is at a graduation ceremony .ref. eine Frau mit langen Haaren bei einer Abschluss Feier.

NMT eine Frau mit langen Haaren ist an einer StaZeremonie.PBSMT eine Frau mit langen Haaren steht an einem AbschlussIMG1W eine Frau mit langen Haaren ist an einer warmen Zeremonie teilIMG2W eine Frau mit langen Haaren steht bei einer Hochzeit Feier.IMGE eine lang haarige Frau bei einer olympischen Zeremonie.IMGD eine lang haarige Frau bei einer olympischen Zeremonie.

Table 4: Translations for the 668th example in the M30k test set.

src. eine Gruppe junger Menschen trinkt Shots in einem Mexikanischen Setting .ref. a group of young people take shots in a Mexican setting .

NMT a group of young people are having fun in an auditorium .PBSMT a group of young people drinking at a Shots Mexikanischen Setting .IMG2W a group of young people having drinks in a Mexican restaurant .IMGE a group of young people drinking apples in a Mexican restaurant .IMGD a group of young people drinking food in a Mexican restaurant .IMG2W+D a group of young people having fun in a Mexican room .IMGE+D a group of young people drinking dishes in a Mexican restaurant .

Table 5: Translations for 300th example in the M30k test set.

although not a novel translation is still correct, butalso “drinking apples” (IMGE), “drinking food”(IMGD), and “drinking dishes” (IMGE+D), whichare clearly incorrect.

6 Conclusions and future work

In this work, we introduced models that incor-porate images into state-of-the-art attention-basedNMT, by using images as words in the source sen-tence, to initialise the encoder’s hidden state andas additional data in the initialisation of the de-coder’s hidden state. The intuition behind our ef-fort is to use images to visually ground transla-tions, and consequently increase translation qual-ity. We demonstrate with extensive experimentsthat adding global image features into NMT sig-nificantly improves the translations of image de-scriptions compared to text-only NMT and PB-SMT. It also improves significantly on the previ-ous state-of-the-art model of Huang et al. (2016)(English→German), and performs comparably tothe best published results of Shah et al. (2016)(German→English). Overall, we note that usingimages as words in the source sequence (IMG1W,IMG2W), an idea similarly entertained by Huanget al. (2016), does not fare as well as to directlyincorporate the image either in the encoder or thedecoder (IMGE and IMGD), independently of thetarget language. The fact that multi-modal NMTmodels can benefit from back-translated data is

also an interesting finding.In future work, we will conduct a more sys-

tematic study on the impact that synthetic back-translated data brings to multi-modal NMT, andrun an error analysis to identify what particulartypes of errors our models make (and prevent).

Acknowledgments

This project has received funding from ScienceFoundation Ireland in the ADAPT Centre for Dig-ital Content Technology (www.adaptcentre.ie) at Dublin City University funded underthe SFI Research Centres Programme (Grant13/RC/2106) co-funded under the European Re-gional Development Fund and the EuropeanUnion Horizon 2020 research and innovation pro-gramme under grant agreement 645452 (QT21).

References

Dzmitry Bahdanau, Kyunghyun Cho, and YoshuaBengio. 2015. Neural Machine Translation byJointly Learning to Align and Translate. In Inter-national Conference on Learning Representations,ICLR 2015. San Diego, California.

Samy Bengio, Oriol Vinyals, Navdeep Jaitly, andNoam M. Shazeer. 2015. Scheduled Sampling forSequence Prediction with Recurrent Neural Net-works. In Advances in Neural Information Process-ing Systems, NIPS. http://arxiv.org/abs/1506.03099.

1000

Yoshua Bengio, Rejean Ducharme, Pascal Vincent, andChristian Janvin. 2003. A Neural Probabilistic Lan-guage Model. J. Mach. Learn. Res. 3:1137–1155.http://dl.acm.org/citation.cfm?id=944919.944966.

Ozan Caglayan, Walid Aransa, Yaxing Wang,Marc Masana, Mercedes Garcıa-Martınez, FethiBougares, Loıc Barrault, and Joost van de Weijer.2016. Does Multimodality Help Human andMachine for Translation and Image Captioning? InProceedings of the First Conference on MachineTranslation. Berlin, Germany, pages 627–633.http://www.aclweb.org/anthology/W/W16/W16-2358.

Iacer Calixto, Koel Dutta Chowdhury, and Qun Liu.2017a. DCU System Report on the WMT 2017Multi-modal Machine Translation Task. In Proceed-ings of the Second Conference on Machine Transla-tion. Copenhagen, Denmark.

Iacer Calixto, Desmond Elliott, and Stella Frank. 2016.DCU-UvA Multimodal MT System Report. InProceedings of the First Conference on MachineTranslation. Berlin, Germany, pages 634–638.http://www.aclweb.org/anthology/W/W16/W16-2359.

Iacer Calixto, Qun Liu, and Nick Campbell. 2017b.Doubly-Attentive Decoder for Multi-modal NeuralMachine Translation. In Proceedings of the 55thConference of the Association for ComputationalLinguistics: Volume 1, Long Papers. Vancouver,Canada (Paper Accepted).

Kyunghyun Cho, Bart van Merrienboer, Dzmitry Bah-danau, and Yoshua Bengio. 2014a. On the proper-ties of neural machine translation: Encoder–decoderapproaches. Syntax, Semantics and Structure in Sta-tistical Translation page 103.

Kyunghyun Cho, Bart van Merrienboer, Caglar Gul-cehre, Dzmitry Bahdanau, Fethi Bougares, Hol-ger Schwenk, and Yoshua Bengio. 2014b. Learn-ing Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. InProceedings of the 2014 Conference on Em-pirical Methods in Natural Language Process-ing (EMNLP). Doha, Qatar, pages 1724–1734.http://www.aclweb.org/anthology/D14-1179.

Jonathan H. Clark, Chris Dyer, Alon Lavie, andNoah A. Smith. 2011. Better Hypothesis Testingfor Statistical Machine Translation: Control-ling for Optimizer Instability. In Proceedingsof the 49th Annual Meeting of the Associa-tion for Computational Linguistics: HumanLanguage Technologies: Short Papers - Vol-ume 2. Portland, Oregon, HLT ’11, pages 176–181.http://dl.acm.org/citation.cfm?id=2002736.2002774.

Michael Denkowski and Alon Lavie. 2014. MeteorUniversal: Language Specific Translation Evalua-tion for Any Target Language. In Proceedings of theEACL 2014 Workshop on Statistical Machine Trans-lation.

Jeff Donahue, Lisa Anne Hendricks, Sergio Guadar-rama, Marcus Rohrbach, Subhashini Venugopalan,Trevor Darrell, and Kate Saenko. 2015. Long-term Recurrent Convolutional Networks for VisualRecognition and Description. In Computer Visionand Pattern Recognition (CVPR), 2015 IEEE Con-ference on. Boston, US, pages 2625–2634.

Daxiang Dong, Hua Wu, Wei He, Dianhai Yu, andHaifeng Wang. 2015. Multi-Task Learning for Mul-tiple Language Translation. In Proceedings of the53rd Annual Meeting of the Association for Compu-tational Linguistics and the 7th International JointConference on Natural Language Processing (Vol-ume 1: Long Papers). Beijing, China, pages 1723–1732. http://www.aclweb.org/anthology/P15-1166.

Desmond Elliott, Stella Frank, Loıc Barrault, FethiBougares, and Lucia Specia. 2017. Findings ofthe Second Shared Task on Multimodal MachineTranslation and Multilingual Image Description. InProceedings of the Second Conference on MachineTranslation. Copenhagen, Denmark.

Desmond Elliott, Stella Frank, and Eva Hasler.2015. Multi-language image description withneural sequence models. CoRR abs/1510.04709.http://arxiv.org/abs/1510.04709.

Desmond Elliott, Stella Frank, Khalil Sima’an,and Lucia Specia. 2016. Multi30K: Multilin-gual English-German Image Descriptions. InProceedings of the 5th Workshop on Visionand Language, VL@ACL 2016. Berlin, Ger-many. http://aclweb.org/anthology/W/W16/W16-3210.pdf.

Orhan Firat, Kyunghyun Cho, and Yoshua Bengio.2016. Multi-Way, Multilingual Neural MachineTranslation with a Shared Attention Mechanism. InProceedings of the 2016 Conference of the NorthAmerican Chapter of the Association for Com-putational Linguistics: Human Language Tech-nologies. San Diego, California, pages 866–875.http://www.aclweb.org/anthology/N16-1101.

Yarin Gal and Zoubin Ghahramani. 2016. A Theoreti-cally Grounded Application of Dropout in RecurrentNeural Networks. In Advances in Neural Informa-tion Processing Systems, NIPS, Barcelona, Spain,pages 1019–1027. http://papers.nips.cc/paper/6241-a-theoretically-grounded-application-of-dropout-in-recurrent-neural-networks.pdf.

Ross Girshick, Jeff Donahue, Trevor Darrell, and Ji-tendra Malik. 2014. Rich Feature Hierarchies forAccurate Object Detection and Semantic Segmen-tation. In Proceedings of the 2014 IEEE Confer-ence on Computer Vision and Pattern Recognition.Washington, DC, USA, CVPR ’14, pages 580–587.https://doi.org/10.1109/CVPR.2014.81.

Alex Graves. 2013. Generating Sequences With Re-current Neural Networks. CoRR abs/1308.0850.http://arxiv.org/abs/1308.0850.

1001

Sepp Hochreiter and Jurgen Schmidhuber. 1997. LongShort-Term Memory. Neural Comput. 9(8):1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735.

Micah Hodosh, Peter Young, and Julia Hock-enmaier. 2013. Framing Image DescriptionAs a Ranking Task: Data, Models and Evalu-ation Metrics. J. Artif. Int. Res. 47(1):853–899.http://dl.acm.org/citation.cfm?id=2566972.2566993.

Po-Yao Huang, Frederick Liu, Sz-Rung Shiang,Jean Oh, and Chris Dyer. 2016. Attention-basedMultimodal Neural Machine Translation. InProceedings of the First Conference on MachineTranslation. Berlin, Germany, pages 639–645.http://www.aclweb.org/anthology/W/W16/W16-2360.

Nal Kalchbrenner and Phil Blunsom. 2013. RecurrentContinuous Translation Models. In Proceedings ofthe 2013 Conference on Empirical Methods in Nat-ural Language Processing, EMNLP 2013. Seattle,USA, pages 1700–1709.

Andrej Karpathy and Li Fei-Fei. 2015. Deep visual-semantic alignments for generating image descrip-tions. In Proceedings of the IEEE Conference onComputer Vision and Pattern Recognition, CVPR2015. Boston, Massachusetts, pages 3128–3137.

Nitish Shirish Keskar, Dheevatsa Mudigere, Jorge No-cedal, Mikhail Smelyanskiy, and Ping Tak PeterTang. 2017. On Large-Batch Training for DeepLearning: Generalization Gap and Sharp Minima.In International Conference on Learning Represen-tations, ICLR 2017. Toulon, France.

Ryan Kiros, Ruslan Salakhutdinov, and Richard S.Zemel. 2014. Unifying visual-semantic embeddingswith multimodal neural language models. CoRRabs/1411.2539. http://arxiv.org/abs/1411.2539.

Reinhard Kneser and Hermann Ney. 1995. Improvedbacking-off for m-gram language modeling. In InProceedings of the IEEE International Conferenceon Acoustics, Speech and Signal Processing. De-troit, Michigan, volume I, pages 181–184.

Philipp Koehn, Hieu Hoang, Alexandra Birch, ChrisCallison-Burch, Marcello Federico, Nicola Bertoldi,Brooke Cowan, Wade Shen, Christine Moran,Richard Zens, Chris Dyer, Ondrej Bojar, AlexandraConstantin, and Evan Herbst. 2007. Moses: OpenSource Toolkit for Statistical Machine Translation.In Proceedings of the 45th Annual Meeting of theACL on Interactive Poster and Demonstration Ses-sions. Association for Computational Linguistics,Prague, Czech Republic, ACL ’07, pages 177–180.http://dl.acm.org/citation.cfm?id=1557769.1557821.

Jindrich Libovicky, Jindrich Helcl, Marek Tlusty,Ondrej Bojar, and Pavel Pecina. 2016. CUNISystem for WMT16 Automatic Post-Editingand Multimodal Translation Tasks. In Pro-ceedings of the First Conference on Machine

Translation. Berlin, Germany, pages 646–654.http://www.aclweb.org/anthology/W/W16/W16-2361.

Thang Luong, Hieu Pham, and Christopher D. Man-ning. 2015. Effective Approaches to Attention-based Neural Machine Translation. In Proceed-ings of the 2015 Conference on Empirical Methodsin Natural Language Processing (EMNLP). Lisbon,Portugal, pages 1412–1421.

Junhua Mao, Wei Xu, Yi Yang, Jiang Wang,and Alan L. Yuille. 2014. Explain Imageswith Multimodal Recurrent Neural Networks.http://arxiv.org/abs/1410.1090.

George A. Miller. 1995. Wordnet: A lexicaldatabase for english. Commun. ACM 38(11):39–41.https://doi.org/10.1145/219717.219748.

Franz Josef Och. 2003. Minimum Error Rate Train-ing in Statistical Machine Translation. In Pro-ceedings of the 41st Annual Meeting on Asso-ciation for Computational Linguistics - Volume1. Sapporo, Japan, ACL ’03, pages 160–167.https://doi.org/10.3115/1075096.1075117.

Kishore Papineni, Salim Roukos, Todd Ward, andWei-Jing Zhu. 2002. BLEU: A Method for Au-tomatic Evaluation of Machine Translation. InProceedings of the 40th Annual Meeting on As-sociation for Computational Linguistics. Philadel-phia, Pennsylvania, ACL ’02, pages 311–318.https://doi.org/10.3115/1073083.1073135.

Maja Popovic. 2015. chrf: character n-gram f-score for automatic mt evaluation. In Proceed-ings of the Tenth Workshop on Statistical Ma-chine Translation. Lisbon, Portugal, pages 392–395.http://aclweb.org/anthology/W15-3049.

Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause,Sanjeev Satheesh, Sean Ma, Zhiheng Huang, An-drej Karpathy, Aditya Khosla, Michael Bernstein,Alexander C. Berg, and Li Fei-Fei. 2015. Ima-geNet Large Scale Visual Recognition Challenge.International Journal of Computer Vision (IJCV)115(3):211–252. https://doi.org/10.1007/s11263-015-0816-y.

Rico Sennrich, Barry Haddow, and Alexandra Birch.2016a. Improving Neural Machine TranslationModels with Monolingual Data. In Proceed-ings of the 54th Annual Meeting of the Asso-ciation for Computational Linguistics (Volume 1:Long Papers). Berlin, Germany, pages 86–96.http://www.aclweb.org/anthology/P16-1009.

Rico Sennrich, Barry Haddow, and Alexandra Birch.2016b. Neural Machine Translation of RareWords with Subword Units. In Proceedingsof the 54th Annual Meeting of the Associa-tion for Computational Linguistics (Volume 1:Long Papers). Berlin, Germany, pages 1715–1725.http://www.aclweb.org/anthology/P16-1162.

1002

Kashif Shah, Josiah Wang, and Lucia Specia.2016. SHEF-Multimodal: Grounding Ma-chine Translation on Images. In Proceed-ings of the First Conference on MachineTranslation. Berlin, Germany, pages 660–665.http://www.aclweb.org/anthology/W/W16/W16-2363.

K. Simonyan and A. Zisserman. 2014. Very Deep Con-volutional Networks for Large-Scale Image Recog-nition. CoRR abs/1409.1556.

Matthew Snover, Bonnie Dorr, Richard Schwartz, Lin-nea Micciulla, and John Makhoul. 2006. A studyof translation edit rate with targeted human annota-tion. In In Proceedings of Association for MachineTranslation in the Americas. Cambridge, MA, pages223–231.

Lucia Specia, Stella Frank, Khalil Sima’an, andDesmond Elliott. 2016. A Shared Task onMultimodal Machine Translation and Crosslin-gual Image Description. In Proceedings ofthe First Conference on Machine Translation,WMT 2016. Berlin, Germany, pages 543–553. http://aclweb.org/anthology/W/W16/W16-2346.pdf.

Ilya Sutskever, Oriol Vinyals, and Quoc V Le. 2014.Sequence to Sequence Learning with Neural Net-works. In Advances in Neural Information Process-ing Systems. Montreal, Canada, pages 3104–3112.

Zhaopeng Tu, Zhengdong Lu, Yang Liu, Xiao-hua Liu, and Hang Li. 2016. Modeling Cov-erage for Neural Machine Translation. In Pro-ceedings of the 54th Annual Meeting of the As-sociation for Computational Linguistics (Volume1: Long Papers). Berlin, Germany, pages 76–85.http://www.aclweb.org/anthology/P16-1008.

Emiel van Miltenburg. 2015. Stereotyping and bias inthe flickr30k dataset. In Proceedings of the Work-shop on Multimodal Corpora, MMC-2016. Por-toroz, Slovenia, pages 1–4.

Subhashini Venugopalan, Marcus Rohrbach, JeffreyDonahue, Raymond Mooney, Trevor Darrell, andKate Saenko. 2015. Sequence to Sequence - Videoto Text. In Proceedings of the IEEE InternationalConference on Computer Vision. Santiago, Chile,pages 4534–4542.

Oriol Vinyals, Alexander Toshev, Samy Bengio, andDumitru Erhan. 2015. Show and tell: A neural im-age caption generator. In IEEE Conference on Com-puter Vision and Pattern Recognition, CVPR 2015.Boston, Massachusetts, pages 3156–3164.

Kelvin Xu, Jimmy Ba, Ryan Kiros, KyunghyunCho, Aaron Courville, Ruslan Salakhudinov, RichZemel, and Yoshua Bengio. 2015. Show, at-tend and tell: Neural image caption genera-tion with visual attention. In Proceedings ofthe 32nd International Conference on Machine

Learning (ICML-15). JMLR Workshop and Confer-ence Proceedings, Lille, France, pages 2048–2057.http://jmlr.org/proceedings/papers/v37/xuc15.pdf.

Peter Young, Alice Lai, Micah Hodosh, and JuliaHockenmaier. 2014. From image descriptions tovisual denotations: New similarity metrics for se-mantic inference over event descriptions. Transac-tions of the Association for Computational Linguis-tics 2:67–78.

Matthew D. Zeiler. 2012. ADADELTA: An Adap-tive Learning Rate Method. CoRR abs/1212.5701.http://arxiv.org/abs/1212.5701.

1003

![Untitled-1 [salvichem.com]salvichem.com/assets/SCI-IRON-SALTS-BOOKLET.pdf · NMT. 1 Oppm NMT 0.3% No turbidty is produced Wthin 5 minutes NMT 10ppm 16.5 to 18.5% FCC Passes Test NMT](https://static.fdocuments.us/doc/165x107/5f10a47d7e708231d44a1ca8/untitled-1-nmt-1-oppm-nmt-03-no-turbidty-is-produced-wthin-5-minutes-nmt.jpg)