[IEEE Sixth International Conference on Intelligent Systems Design and Applications - Jian, China...


Reinforcement Learning with Hierarchical Decision-Making

Shahar Cohen, Oded Maimon, Evgeni Khmlenitsky

Department of Industrial Engineering, Tel Aviv University, Israel


Abstract

This paper proposes a simple, hierarchical decision-making approach to reinforcement learning, under the framework of Markov decision processes. According to the approach, the choice of an action at every time stage is made through a successive elimination of actions and sets of actions from the underlying action space, until a single action is decided upon. Based on the approach, the paper defines a hierarchical Q-function and shows that this function can be the basis for an optimal policy. A hierarchical reinforcement learning algorithm is then proposed. The algorithm, which can be shown to converge to the hierarchical Q-function, provides new opportunities for state abstraction.

1. Introduction

Reinforcement Learning (RL) is the process by which an artificial agent gradually learns a desired behavior through interaction with its surrounding environment ([9], [14]). The interaction can often be described by a Markov Decision Process (MDP) whose mean-reward and state-transition functions are not provided to the agent.

In real-life decision-making scenarios, actions are often decided upon hierarchically. For example, a couple planning a rendezvous will first decide whether to go to the cinema or to the theater, and only then choose a specific movie or show. The formalism of MDPs, however, does not include the agent's decision-making mechanism. In the absence of such a mechanism, the literature on MDPs often assumes that, when observing a state, the agent considers the entire action space as a single, flat set.

RL with hierarchical decision-making mechanisms has been addressed by several authors (e.g., [5], [6], [10], [15]). The basic idea in hierarchical RL is an agent that makes decisions and learns at several levels of abstraction. In most cases, hierarchical RL involves some sort of temporal abstraction ([1]). Hierarchical methods have been shown to significantly increase learning speed, but, on the other hand, they may converge to a sub-optimal solution.

This paper proposes a hierarchical decision-making approach to RL. Unlike the currently available hierarchical methods, the proposed approach executes every action within a single time stage, so there is no temporal abstraction. Building on the approach, extensions of flat RL algorithms (e.g., Q-Learning) can be shown to converge to an optimal policy. The hierarchical approach is shown to provide new opportunities for state abstraction and, as a result, increased learning speed.

The rest of this paper is organized as follows: Section 2 provides a brief introduction to several RL essentials. Section 3 describes the new, hierarchical decision-making mechanism. Section 4 defines a hierarchical Q-function, and shows that this function can be the basis for an optimal policy. Section 5 proposes an RL algorithm, which can be shown to converge to the hierarchical Q-function. The section also shows that owing to the hierarchical decision-making mechanism it becomes possible to employ state abstraction. Section 6 uses a simple navigation task to demonstrate the main idea of the work and its contribution. Finally, Section 7 concludes the work.

2. Reinforcement Learning

RL is often based on the model of an MDP ([11]). An MDP is defined by the tuple <S,A,R,P>, where S is a state space, A is an action space, R: S×A → ℝ is a mean-reward function, and P: S×A×S → [0,1] is a state-transition function. The MDP is controlled by an agent through a discrete sequence of stages. At the tth stage, the agent observes a state st∈S and chooses an action at∈A. As a result, it receives an immediate, bounded, real-valued reward rt, whose mean value is R(st,at), and is presented with the next state st+1∈S with probability P(st,at,st+1).

Proceedings of the Sixth International Conference on Intelligent Systems Design and Applications (ISDA'06) 0-7695-2528-8/06 $20.00 © 2006

The agent searches for an optimal policy. A policy is a mechanism that tells the agent which action to choose, based on the state it observes. In this paper we assume that the objective of the agent is to maximize the expected, infinite, discounted sum of the rewards it receives, that is:

$$E\left[\sum_{t=1}^{\infty}\gamma^{t-1}\cdot r_t\right]\rightarrow\max,$$

where γ∈[0,1) is a discount factor. The literature on MDPs often focuses on deterministic policies. A deterministic policy is a degenerate policy that maps each state to a single action.

The agent's expected future value of choosing each action in each state is represented by the Q-function Q*: S×A → ℝ, which is defined for all s∈S and a∈A by:

$$Q^*(s,a)=R(s,a)+\gamma\cdot\sum_{s'\in S}P(s,a,s')\cdot\max_{a'\in A}Q^*(s',a').$$

If the values Q*(s,a) are known for all s∈S and a∈A, then an optimal deterministic policy π*: S → A can be calculated by:

$$\pi^*(s)=\arg\max_{a\in A}Q^*(s,a),\quad\forall s\in S.$$

Dynamic Programming (DP) is often employed to find an optimal policy for a given MDP. Employing DP algorithms, such as Value Iteration or Policy Iteration ([3], [8]), requires explicit knowledge of both the mean-reward and the state-transition functions. Although RL problems are usually modeled as MDPs, they need to be solved without explicit knowledge of these functions. Several generalizations of DP to RL have been proposed in the literature ([2], [7], [12], [13], [16], [17]).
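When R and P are known, the Bellman equation for Q* can be solved by Value Iteration, and π* can then be read off greedily. The sketch below is a minimal pure-Python illustration, not the exact procedure of [3] or [8]. It assumes a small tabular MDP stored as nested lists R[s][a] and P[s][a][s']. The function names and the two-state example MDP are hypothetical.

```python
from typing import List

def value_iteration_q(R: List[List[float]], P: List[List[List[float]]],
                      gamma: float, tol: float = 1e-10,
                      max_iter: int = 100_000) -> List[List[float]]:
    """Repeatedly apply Q(s,a) <- R(s,a) + gamma * sum_s' P(s,a,s') * max_a' Q(s',a')."""
    n_s, n_a = len(R), len(R[0])
    Q = [[0.0] * n_a for _ in range(n_s)]
    for _ in range(max_iter):
        V = [max(row) for row in Q]  # max_{a'} Q(s', a') for every s'
        Q_new = [[R[s][a] + gamma * sum(P[s][a][s2] * V[s2] for s2 in range(n_s))
                  for a in range(n_a)] for s in range(n_s)]
        delta = max(abs(Q_new[s][a] - Q[s][a]) for s in range(n_s) for a in range(n_a))
        Q = Q_new
        if delta < tol:  # the backup is a gamma-contraction, so delta shrinks geometrically
            break
    return Q

def greedy_policy(Q: List[List[float]]) -> List[int]:
    """pi*(s) = argmax_a Q*(s, a)."""
    return [max(range(len(row)), key=row.__getitem__) for row in Q]

# Hypothetical two-state MDP: in state 0, action 1 moves to state 1;
# in state 1, action 0 stays there and earns reward 1; everything else earns 0.
R = [[0.0, 0.0], [1.0, 0.0]]
P = [[[1.0, 0.0], [0.0, 1.0]],
     [[0.0, 1.0], [1.0, 0.0]]]
Q = value_iteration_q(R, P, gamma=0.9)
print(greedy_policy(Q))  # [1, 0]
```

In this example the optimal values are Q*(0,·) = (8.1, 9) and Q*(1,·) = (8.1, 10). The greedy policy therefore moves from state 0 to state 1 and then stays there.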

Q-Learning ([16], [17]) is a widely accepted RL algorithm. A Q-Learning agent successively estimates its Q-function from the experience it collects. The agent begins with arbitrary initial estimates Q1(s,a), for all s∈S and a∈A. Given the experience <st,at,rt,st+1> gathered at the tth stage, the agent updates its tth estimates, for all s∈S and a∈A, by:

$$Q_{t+1}(s,a)=\left(1-\alpha_t(s,a)\right)\cdot Q_t(s,a)+\alpha_t(s,a)\cdot\left(r_t+\gamma\cdot\max_{a'\in A}Q_t(s_{t+1},a')\right),$$

where αt(s,a) is the learning rate corresponding to s and a at stage t. It is zero for every pair other than (st,at), so only the visited pair is actually updated. Watkins and Dayan ([17]) proved that, under several assumptions, the Q-Learning agent's estimates converge to its Q-function with probability 1. To ensure convergence, the agent must update the estimate for each state-action pair infinitely often (i.e., the agent needs to follow a continually exploring policy).
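The Q-Learning update can be sketched as follows. This is an illustrative example under stated assumptions:

- a hypothetical deterministic two-state MDP;
- an ε-greedy exploration policy, so that every pair keeps being updated;
- a fixed learning rate α = 0.1.

A fixed rate does not satisfy the usual decaying-rate conditions, but it is adequate for this deterministic example. All names are hypothetical.

```python
import random
from typing import List, Tuple

def q_learning_update(Q: List[List[float]], s: int, a: int, r: float,
                      s_next: int, alpha: float, gamma: float) -> None:
    """One Q-Learning step; only the visited pair (s, a) is changed."""
    target = r + gamma * max(Q[s_next])
    Q[s][a] = (1 - alpha) * Q[s][a] + alpha * target

def epsilon_greedy(Q: List[List[float]], s: int, eps: float, rng: random.Random) -> int:
    """Explore uniformly with probability eps; otherwise act greedily."""
    if rng.random() < eps:
        return rng.randrange(len(Q[s]))
    return max(range(len(Q[s])), key=Q[s].__getitem__)

def toy_step(s: int, a: int) -> Tuple[float, int]:
    """Hypothetical deterministic MDP: (reward, next state)."""
    if s == 0:
        return 0.0, (1 if a == 1 else 0)    # action 1 leads to state 1
    return (1.0, 1) if a == 0 else (0.0, 0)  # action 0 stays and earns 1

def run_q_learning(n_steps: int = 20_000, alpha: float = 0.1, gamma: float = 0.9,
                   eps: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    Q = [[0.0, 0.0], [0.0, 0.0]]  # arbitrary initial estimates Q_1(s, a)
    s = 0
    for _ in range(n_steps):
        a = epsilon_greedy(Q, s, eps, rng)  # continual exploration
        r, s_next = toy_step(s, a)
        q_learning_update(Q, s, a, r, s_next, alpha, gamma)
        s = s_next
    return Q

Q = run_q_learning()
```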

3. Hierarchical Decision-Making

The hierarchical decision-making approach relies on the notion of action-hierarchy.

Definition 1: The finite collection of k sets of actions, H={A1,A2,…,Ak}, is termed an action-hierarchy with respect to the MDP M=<S,A,R,P> if there exists an index j∈{1,2,…,k} such that Aj=A, (A1∪A2∪…∪Aj-1∪Aj+1∪…∪Ak)=A, and H\Aj is composed of mutually exclusive sub-collections. Each sub-collection either consists of a single set containing just a single action (such sub-collections will henceforth be referred to as actions) or is itself an action-hierarchy.

An action-hierarchy can be represented by a tree. The terminals of the tree represent single actions, and the root represents the distinct action set Aj (see Definition 1). For ease of exposition, instead of referring to the tree that represents H, we will treat H itself as if it were a tree. Moreover, we will write v∈H to denote that v is a node in H. The following example demonstrates the notion of an action-hierarchy and its representation as a tree.

Example 1: Consider the task of planning your way to work on an arbitrary Monday morning. Assume that you insist on having a detailed (possibly tentative) plan before you leave, and that there are six general options: walking, riding your bicycle, driving your car, taking a taxi, taking a bus or taking the subway. To count as a plan, the taxi option (for example) needs to be translated into practical terms. You can phone to order a taxi, go down to the street and try to catch one, or walk to the nearest main road and catch one there. The alternatives (actions) under a given option can be further grouped. For example, if you decide to walk all the way to work, you must plan the exact route. There can easily be a few dozen reasonable routes, and these can be grouped, for example, by the key junctions they pass through. The space of all possible detailed plans (actions) can be thought of as an action-hierarchy. The root of the hierarchy consists of all possible plans. The root has six descendants (one for each general option). The descendant that represents the walking option, for example, consists of all the plans for this option, and so on. Each of the hierarchy's terminals consists of a single (detailed) plan.

When observing a state, the agent in the proposed approach makes a sequence of sub-choices, which correspond to the nodes in H, starting from the root, v0. As a result of each sub-choice, the agent moves to one of the immediate descendants of the currently visited node, until it eventually reaches a terminal. The action represented by the terminal is executed against the environment; consequently, a reward is generated and the state transitions. Let the sets of terminal and non-terminal nodes in H be denoted by HT and HN, respectively. For any v∈HN, denote the set of immediate descendants of v by D(v), and for all v∈HT, let a(v) be the action represented by v.

The agent in the proposed approach therefore chooses actions by elimination. When moving from a node to one of its descendants, the agent removes from the set of candidate actions all the actions that are included in the node but not in the descendant.
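The notions of H, HT, HN, D(v) and a(v), and the choice of an action by elimination, can be made concrete with a small tree structure. The following Python sketch shows one possible representation, not the paper's. The class name, the helper names and the action labels (a fragment of Example 1) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

@dataclass
class Node:
    """A node v of an action-hierarchy H."""
    name: str
    children: List["Node"] = field(default_factory=list)  # D(v); empty for terminals
    action: Optional[str] = None                            # a(v); set only for terminals

    def is_terminal(self) -> bool:  # v in H_T
        return not self.children

    def actions_below(self) -> List[str]:
        """Actions still available once the agent has reached this node."""
        if self.is_terminal():
            return [self.action]
        return [a for child in self.children for a in child.actions_below()]

def leaf(action: str) -> Node:
    return Node(action, action=action)

def descend(root: Node, choose: Callable[[Node], Node]) -> str:
    """Sub-choose from the root down to a terminal; each step eliminates the
    actions under the current node that are not under the chosen child."""
    v = root
    while not v.is_terminal():
        v = choose(v)
    return v.action

# Hypothetical fragment of Example 1 (two of the six general options).
root = Node("all plans", [
    Node("walk", [leaf("walk: route A"), leaf("walk: route B")]),
    Node("taxi", [leaf("taxi: phone"), leaf("taxi: street"), leaf("taxi: main road")]),
])
print(len(root.actions_below()))                # 5
print(descend(root, lambda v: v.children[-1]))  # taxi: main road
```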

4. The Hierarchical Q-Function

In the (typical) flat decision-making approach, the Q-function Q*: S×A → ℝ maps any state-action pair to its future value. Under the optimality criterion of this paper (see Section 2), the Q-function can be written, for all s∈S and a∈A, as:

$$Q^*(s,a)=\max_{\pi\in\Pi}E_\pi\left[\sum_{t=1}^{\infty}\gamma^{t-1}\cdot r_t\;\middle|\;s_1=s,\,a_1=a\right],$$

where Π is the policy space and Eπ is the expectation given that actions are chosen according to the policy π. In the hierarchical decision-making approach, the agent makes multiple sub-choices at each stage, so there should be a Q-value for each pair of state and sub-choice. For all s∈S and v∈HT (recall that terminal nodes represent actions), the hierarchical Q-function is defined as QH*(s,v)=Q*(s,a(v)), and for all s∈S and v∈HN, it is recursively defined as:

$$QH^*(s,v)=\max_{v'\in D(v)}QH^*(s,v').$$

The hierarchical Q-function can serve as the basis for an optimal policy, as stated by the following proposition. Intuitively, the child node with the highest QH* value must have underneath it the action with the highest Q* value. Moving to this node therefore does not forfeit the opportunity of reaching an optimal action. However, recall that in RL the functions R and P are not provided to the agent, and therefore the hierarchical Q-function cannot be calculated analytically.

Proposition 1: A hierarchical policy that, in each s∈S and v∈HN, chooses to move to the node v'∈D(v) for which the corresponding QH* value is maximal is an optimal policy.

Proof: By the definition of the flat Q-function, the optimal choice in a state s∈S is to take an action for which Q* is maximal. Consider a particular state s, and let a be the action that results from the proposed hierarchical policy. Assume, by contradiction, that a is sub-optimal (i.e., that there exists a'∈A such that Q*(s,a')>Q*(s,a)). Without loss of generality, assume that a' is an optimal action for the state s, and focus on the last sub-choice of the proposed approach (given s). Since Q*(s,a')≥Q*(s,a'') for all a''∈A (a' is an optimal action), the node v∈HT for which a'=a(v) will be reached, given that the agent reaches the parent of v. For the purpose of this proof, denote the parent of v by p(v). By definition, QH*(s,p(v))=QH*(s,v)=Q*(s,a')≥QH*(s,v') for every v' in H. Hence the agent will choose to move to p(v), given that it is currently in the parent of p(v). Repeating the same argument up to the hierarchy's root shows that the hierarchical policy reaches a'. This contradicts the initial assumption; hence a is an optimal action.

Q.E.D
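Proposition 1 can also be checked numerically. The sketch below computes QH* for a fixed state directly from given Q* values, using random numbers as stand-ins for Q*(s,·). It uses the three-level hierarchy over the 125 joint moves described in Section 6 and confirms that the hierarchical greedy descent reaches the flat maximizer. Representing terminals as tuples and non-terminals as lists is an assumption made for brevity, and the function names are hypothetical.

```python
import itertools
import random
from typing import Dict, List, Tuple, Union

Action = Tuple[str, str, str]
Tree = Union[Action, List["Tree"]]  # a terminal is an action tuple, a non-terminal a list

def qh_star(q: Dict[Action, float], v: Tree) -> float:
    """QH*(s, v) for a fixed state s, given q[a] = Q*(s, a)."""
    if isinstance(v, tuple):                      # v in H_T: QH*(s, v) = Q*(s, a(v))
        return q[v]
    return max(qh_star(q, child) for child in v)  # v in H_N: max over D(v)

def hierarchical_greedy(q: Dict[Action, float], root: Tree) -> Action:
    """The policy of Proposition 1: always move to the child with maximal QH*."""
    v = root
    while isinstance(v, list):
        v = max(v, key=lambda child: qh_star(q, child))
    return v

MOVES = ["N", "S", "E", "W", "DN"]
# Three-level hierarchy: robot 1's move, then robot 2's, then robot 3's.
root = [[[(m1, m2, m3) for m3 in MOVES] for m2 in MOVES] for m1 in MOVES]

rng = random.Random(0)
q = {a: rng.random() for a in itertools.product(MOVES, repeat=3)}  # stand-in for Q*(s, .)
assert hierarchical_greedy(q, root) == max(q, key=q.get)  # same action as the flat argmax
assert qh_star(q, root) == max(q.values())
```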

5. Hierarchical Reinforcement-Learning Algorithm

This section presents a hierarchical RL algorithm. The algorithm (see Figure 1) uses the hierarchical decision-making mechanism and converges to the hierarchical Q-function presented in the previous section. Accordingly, in the algorithm's initialization phase, an arbitrary estimate is generated for each pair of state and sub-choice. At each stage, the agent begins its decision-making at the root of the underlying hierarchy and sequentially sub-chooses to move from nodes to their descendants, until it reaches a terminal and executes the action that this terminal represents. The stack L (see Figure 1) contains the nodes whose Q-estimates need to be updated at the current stage. The reached terminal is pushed to L, together with the non-terminals from which all the subsequent sub-choices were made greedily. The update equation of the algorithm is much like that of Q-Learning, with the root estimate QHt(st+1,v0) taking the place of the maximum over actions. Notice that the agent attributes the reward and the state transition of the stage, in turn, to every sub-choice in L.


The algorithm can be shown to converge to the hierarchical Q-function, as stated by the following proposition. The proposition can be proven by applying Proposition 4.5 of Bertsekas and Tsitsiklis ([4]) together with a bottom-up induction on the depth of the hierarchy. The proof is omitted due to space limitations.

Proposition 2: QHt(s,v) converges to QH*(s,v) for all s∈S and v∈H as t increases, with probability 1, if: (a) the reward function is bounded, (b) the agent continues to choose each branch of the tree infinitely often, and (c) the learning rates αt have an infinite sum but a finite sum of squares.

    Initialization: for all s∈S and v∈H, set QH1(s,v) arbitrarily;
                    set s1 to be the first visited state.
    for t = 1, 2, …:
        L = an empty stack; v = v0
        while v∈HN:
            choose w, one of the descendants of v, according to an
                exploration policy derived from QHt
            if w = argmax_{v'∈D(v)} QHt(st, v') then push v into L
            else clear L
            end (if)
            v = w
        end (while)
        push v into L
        execute a(v); observe the reward rt and the next state st+1
        while L is not empty:
            v = pop(L)
            QHt+1(st, v) = (1 − αt)·QHt(st, v) + αt·(rt + γ·QHt(st+1, v0))
        end (while)
        st = st+1

Figure 1. The hierarchical RL algorithm.

As the approximation QHt approaches the hierarchical Q-function QH*, it becomes possible to use it to construct a policy. The approximation is treated as if it were the true function, following the discussion in the previous section. Such a policy is optimal in the limit.
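The algorithm of Figure 1 can be sketched in Python as follows. This is a minimal illustration under assumptions that the paper does not fix:

- a lookup table keyed by (state, node), with zero initial estimates;
- ε-greedy exploration applied independently at every node;
- ties treated as greedy sub-choices;
- a continuing (non-episodic) task;
- a fixed learning rate, which, unlike condition (c) of Proposition 2, does not decay but suffices for this deterministic toy.

The names `Node`, `hierarchical_q_learning`, `greedy_action` and `toy_step`, and the four-action MDP, are hypothetical.

```python
import random
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, Hashable, List, Optional, Tuple

@dataclass(eq=False)  # eq=False keeps identity hashing, so nodes can key the table
class Node:
    children: List["Node"] = field(default_factory=list)  # D(v); empty for terminals
    action: Optional[Hashable] = None                       # a(v) for terminals

def greedy_action(QH: Dict, s: Hashable, root: Node) -> Hashable:
    """Descend by always moving to the child with the largest estimate."""
    v = root
    while v.children:
        v = max(v.children, key=lambda c: QH[(s, c)])
    return v.action

def hierarchical_q_learning(step: Callable[[Hashable, Hashable], Tuple[float, Hashable]],
                            s: Hashable, root: Node, n_stages: int,
                            alpha: float = 0.1, gamma: float = 0.9,
                            eps: float = 0.2, seed: int = 0) -> Dict:
    rng = random.Random(seed)
    QH = defaultdict(float)  # QH_1(s, v) = 0 for every state and node
    for _ in range(n_stages):
        L, v = [], root
        while v.children:  # while v is in H_N
            greedy = max(v.children, key=lambda c: QH[(s, c)])
            w = rng.choice(v.children) if rng.random() < eps else greedy
            if QH[(s, w)] >= QH[(s, greedy)]:  # w is an argmax: keep v for updating
                L.append(v)
            else:                              # exploratory sub-choice: clear the stack
                L.clear()
            v = w
        L.append(v)                            # the reached terminal
        r, s_next = step(s, v.action)          # execute a(v)
        target = r + gamma * QH[(s_next, root)]  # bootstrap from the root estimate
        while L:
            u = L.pop()
            QH[(s, u)] = (1 - alpha) * QH[(s, u)] + alpha * target
        s = s_next
    return QH

def toy_step(s, a):
    """Hypothetical deterministic MDP with states 0, 1 and actions a0, a1, b0, b1."""
    if s == 0:
        return 0.0, (1 if a == "b1" else 0)
    return (1.0, 1) if a == "a0" else (0.0, 0)

root = Node([Node([Node(action="a0"), Node(action="a1")]),
             Node([Node(action="b0"), Node(action="b1")])])
QH = hierarchical_q_learning(toy_step, 0, root, n_stages=50_000)
print(greedy_action(QH, 0, root), greedy_action(QH, 1, root))  # b1 a0
```

The target is computed once, before the stack is emptied, so every node in L is updated toward the same QHt-based value. The node that makes an exploratory sub-choice, and its ancestors, are left out of L, so non-terminal estimates are updated only along greedy paths.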

Compared with the flat Q-Learning algorithm, the proposed algorithm has three considerable advantages. First, the hierarchical decision-making approach is more comprehensible to humans, especially when the number of actions is large. Second, the time required to search for an action can be reduced from linear to logarithmic in the number of actions. In a balanced hierarchy with branching factor b, a greedy choice among |A| actions examines b·log_b|A| candidates instead of |A|. Finally (and probably most significantly), the hierarchical approach enables the agent to incorporate state abstraction.
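The second advantage amounts to simple arithmetic. The sketch below assumes a perfectly balanced hierarchy with a fixed branching factor, which is the only case it handles. Under that assumption, a greedy choice examines branching × depth candidates instead of all actions. The function name is hypothetical.

```python
import math
from typing import Tuple

def greedy_choice_cost(n_actions: int, branching: int) -> Tuple[int, int]:
    """Candidates examined for one greedy choice: (flat, hierarchical).

    Assumes n_actions == branching ** depth, so the hierarchical search makes
    `depth` sub-choices of `branching` candidates each.
    """
    depth = round(math.log(n_actions, branching))
    if branching ** depth != n_actions:
        raise ValueError("this sketch only handles perfectly balanced hierarchies")
    return n_actions, branching * depth

print(greedy_choice_cost(125, 5))   # (125, 15): the 5 x 5 x 5 hierarchy of Section 6
print(greedy_choice_cost(1024, 2))  # (1024, 20)
```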

When the agent chooses actions from a flat set, it must consider all the aspects of the current state (irrelevant aspects are not included in the model in the first place). However, the consequences of a certain sub-choice may depend on only some of the state's aspects, since a sub-choice is only part of the eventual action. In such a case, the irrelevant aspects can be ignored. With lookup-table estimates, ignoring aspects of the state means that several table entries can be merged into a single estimate. Reducing the number of distinct estimates not only reduces memory consumption but can also significantly increase the learning speed.
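Merging table entries can be sketched by keying each estimate on an abstracted state. In the hypothetical Python sketch below, a per-node function `phi(v)` drops the state aspects irrelevant to node v, so states that differ only in those aspects share one entry. This `phi` interface is an assumption for illustration and does not come from the paper.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Tuple

class AbstractedTable:
    """Lookup table in which the estimate for (s, v) is stored under (phi(v)(s), v)."""

    def __init__(self, phi: Callable[[Hashable], Callable[[Hashable], Hashable]]):
        self.phi = phi
        self.values: Dict[Tuple[Hashable, Hashable], float] = defaultdict(float)

    def _key(self, s: Hashable, v: Hashable) -> Tuple[Hashable, Hashable]:
        return (self.phi(v)(s), v)  # abstracted state, node

    def get(self, s: Hashable, v: Hashable) -> float:
        return self.values[self._key(s, v)]

    def set(self, s: Hashable, v: Hashable, value: float) -> None:
        self.values[self._key(s, v)] = value

# Hypothetical state: positions of three robots; node "robot2" ignores robot 3.
def phi(v):
    if v == "robot2":
        return lambda s: (s[0], s[1])
    return lambda s: s  # no abstraction at other nodes

table = AbstractedTable(phi)
s_a = ((0, 0), (1, 1), (2, 2))
s_b = ((0, 0), (1, 1), (5, 5))   # differs from s_a only in robot 3's position
table.set(s_a, "robot2", 0.5)
print(table.get(s_b, "robot2"))  # 0.5 (merged entry)
print(table.get(s_b, "robot1"))  # 0.0 (separate entry)
```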

6. An Illustrative Example

This section uses a navigation task that involves three robots, to illustrate the advantages of the hierarchical decision-making approach, and the proposed algorithm. In particular, the section focuses on the aspects of state abstraction and its contribution.

Consider three robots situated on an n×n grid world, trying to coordinate a meeting within as few moves as possible. Each episode of the task begins with an arbitrary joint position of the three robots and ends when all three robots occupy the same position. There are n⁶ possible joint positions, each of which is represented by a distinct state.

At each stage, the three robots observe the current state and choose a move (moves are actions; each action specifies three sub-moves, one for each robot). There are five possible sub-moves for each robot, denoted N, S, E, W and DN, standing for a move to the North, South, East or West and for no move at all, respectively. There are therefore 5³ = 125 actions altogether. It is assumed that every robot moves deterministically in the direction dictated to it by the chosen action, unless the move would take it outside the world boundary, in which case the robot does not move at all.
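The task dynamics described above can be sketched as a step function. The following are assumptions of this illustration:

- the coordinate convention, in which N increases y and E increases x;
- the function names;
- the reward of 1 when the robots meet and 0 otherwise, which is taken from the experimental setup described below.

```python
from itertools import product
from typing import Tuple

Pos = Tuple[int, int]
# Assumed coordinate convention: x grows to the East, y grows to the North.
MOVES = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0), "DN": (0, 0)}
ACTIONS = list(product(MOVES, repeat=3))  # one sub-move per robot: 5 ** 3 = 125

def move(p: Pos, m: str, n: int) -> Pos:
    """Deterministic sub-move; a move that would leave the n x n world is void."""
    x, y = p[0] + MOVES[m][0], p[1] + MOVES[m][1]
    return (x, y) if 0 <= x < n and 0 <= y < n else p

def step(state: Tuple[Pos, Pos, Pos], action: Tuple[str, str, str], n: int):
    """Returns (reward, next_state, done); reward 1 only when the robots meet."""
    nxt = tuple(move(p, m, n) for p, m in zip(state, action))
    done = nxt[0] == nxt[1] == nxt[2]
    return (1.0 if done else 0.0), nxt, done

print(len(ACTIONS))                                          # 125
print(step(((0, 0), (0, 1), (1, 0)), ("S", "S", "W"), n=6))  # (1.0, ((0, 0), (0, 0), (0, 0)), True)
```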

When the action space is treated as a flat set, each stage requires the manipulation of 125 actions, and a flat RL algorithm (e.g., Q-Learning) requires 125n⁶ estimates. Consider instead the action-hierarchy in which the 1st sub-choice determines the move of the 1st robot, the 2nd sub-choice determines the move of the 2nd robot, and the 3rd sub-choice determines the move of the 3rd robot. Using the hierarchical approach with this action-hierarchy, each robot can be seen as an autonomous decision-maker. Moreover, each sub-choice requires the manipulation of a set of only five sub-actions, and there are only three sub-choices. Only 15 candidates are therefore examined per stage, instead of 125.

In this task, implementing the hierarchical decision-making approach (with the specified hierarchy) creates an opportunity for state abstraction. Notice that the three robots meet if and only if the 1st robot meets the 2nd robot at the same time that it meets the 3rd robot. Namely, the task can be broken down into two sub-tasks: the 1st robot meeting the 2nd, and the 1st robot meeting the 3rd. The 2nd and the 3rd robots do not share a sub-task. When the 2nd robot decides on its move, it only considers the 1st sub-task and can therefore ignore the position of the 3rd robot, and vice versa. Therefore, the position of the 3rd robot can be ignored in the 2nd level of the hierarchy, and the position of the 2nd robot can be ignored in the 3rd level of the hierarchy. The overall number of Q-estimates is reduced from 125n⁶ to 5n⁶+150n⁴.
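The stated estimate counts can be reproduced as follows. The breakdown by level is our reading of the text, and in particular it assumes that the root's own estimate is not counted. With n = 6 it gives roughly a 13.6-fold reduction. The function names are hypothetical.

```python
def flat_estimates(n: int) -> int:
    """One estimate per (state, action): 125 actions x n**6 joint positions."""
    return 125 * n ** 6

def abstracted_estimates(n: int) -> int:
    """Assumed breakdown: 5 depth-1 nodes see the full state (n**6 each);
    25 depth-2 nodes ignore robot 3 and 125 terminals ignore robot 2 (n**4 each).
    The root's own estimate is not counted, matching 5n^6 + 150n^4."""
    return 5 * n ** 6 + (25 + 125) * n ** 4

print(flat_estimates(6), abstracted_estimates(6))  # 5832000 427680
```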

A policy for the task was first learned in the flat approach, by implementing flat Q-Learning for the case n=6 (n was chosen so as to limit the number of states to a manageable 6⁶ = 46,656). Thereafter, the hierarchical decision-making approach was implemented (by the algorithm from Section 5), once without and once with state abstraction. To encourage optimal choices, a unit reward was given in response to any action that terminated the task, and a zero reward was given otherwise. A discount factor of 0.9 was used to motivate the agent to terminate the task (coordinate a meeting) as soon as possible. An ε-greedy exploration policy was used in all three solutions to keep their performance comparable. In flat Q-Learning, an ε-greedy policy is specified by a single parameter (the probability ε). In the two hierarchical solutions, however, a distinct parameter needs to be set for each node. Let εj denote the exploration probability for the nodes in the jth level of the hierarchy. To keep the solutions comparable, the same amount of exploration was used in all three. The specification ε=0.2 was used in the flat Q-Learning algorithm, and accordingly ε1=ε2=ε3=1−(1−ε)^(1/3) was used in the hierarchical algorithm. With this choice, the probability that no level explores, (1−ε1)(1−ε2)(1−ε3), equals the flat probability 1−ε. For simplicity, a fixed learning rate of 0.1 was used in all the update equations.
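The per-level exploration probability follows from requiring that the chance of three greedy sub-choices equal the chance of a greedy flat choice. A short Python check is given below; the function name is hypothetical.

```python
def per_level_epsilon(eps: float, levels: int = 3) -> float:
    """Per-level exploration probability eps_j such that the chance that no
    level explores, (1 - eps_j) ** levels, equals the flat chance 1 - eps."""
    return 1.0 - (1.0 - eps) ** (1.0 / levels)

for eps in (0.2, 0.03):
    print(eps, round(per_level_epsilon(eps), 4))  # 0.2 0.0717, then 0.03 0.0101
```

For ε = 0.2 this gives εj ≈ 0.0717. For the evaluation setting ε = 0.03 used for Figure 2, it gives εj ≈ 0.0101.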

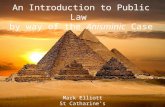

Figure 2 shows the learning curves obtained by the three solutions in the first 100 learning episodes. Experience is expressed by the number of episodes so far, and performance is measured by the number of steps required to complete one more episode. Each point on the curves represents the average performance measure over 50 independent repetitions. The measurements were taken with ε lowered to 0.03 (and ε1, ε2 and ε3 adjusted accordingly) at the different experience levels. Lowering ε brings the measured behavior closer to greedy choice, while some exploration was still maintained to avoid deadlocks. Each episode was initiated with a random joint position.

All implementations converged to equally good policies. Figure 2 only presents the first 100 episodes; curves 1 and 2, however, stabilize only after around 4000 episodes, reaching an average of about 5 stages per episode. The learning curves obtained from flat Q-Learning and from the hierarchical solution without state abstraction are similar (but recall that the per-stage time consumption of the hierarchical solution is lower). However, the hierarchical solution that uses state abstraction (Curve 3) required considerably less experience to approach the optimal policy. The boost in learning speed can be explained by the reduced number of estimates: the fewer the estimates, the less experience is required to make them accurate.

7. Conclusion

This paper proposed a hierarchical decision-making approach and an RL algorithm that uses the approach and converges to an optimal policy. The hierarchical approach can create opportunities for state abstraction. A navigation task showed that, with state abstraction, the hierarchical algorithm is likely to increase the learning speed.

Due to the hierarchical form, each learning stage can be completed with several simple searches instead of a single complex search. This computational advantage holds relative to any algorithm that treats the action space as a flat set.

The paper extends state-of-the-art research on hierarchical RL. Specifically, compared with current hierarchical approaches, the proposed approach provides opportunities for state abstraction and offers no temporal abstraction, and its implementations can converge to an optimal policy.

The contribution of this work was demonstrated relative to lookup-table-based Q-Learning. The lookup-table representation simplified the paper and enabled the strong convergence result. Q-Learning was chosen as a widely accepted, standard algorithm. However, the approach is likely to be advantageous in more complex setups as well. We suggest examining this assumption in future research.

References

[1] A.G. Barto and S. Mahadevan, "Recent Advances in Hierarchical Reinforcement Learning", Discrete Event Dynamic Systems: Theory and Applications 13, 2003, pp. 341-379.

[2] A.G. Barto, S.J. Bradtke, and S.P. Singh, "Learning to Act Using Real-Time Dynamic Programming", Artificial Intelligence 72, 1995, pp. 81-138.

[3] R. Bellman, Dynamic Programming, Princeton University Press, 1957.

[4] D.P. Bertsekas and J.N. Tsitsiklis, Neuro-Dynamic Programming, Athena Scientific, Belmont, Massachusetts, 1996.

[5] P. Dayan and G.E. Hinton, "Feudal Reinforcement Learning", Proceedings of Advances in Neural Information Processing Systems 5, 1993, pp. 271-278.

[6] T.G. Dietterich, "Hierarchical Reinforcement Learning with the MAXQ Value Function Decomposition", Journal of Artificial Intelligence Research 13, 2000, pp. 227-303.

[7] V. Gullapalli and A.G. Barto, "Convergence of Indirect Adaptive Asynchronous Value Iteration Algorithms", Proceedings of Advances in Neural Information Processing Systems 6, 1994, pp. 695-702.

[8] R.A. Howard, Dynamic Programming and Markov Processes, MIT Press, 1960.

[9] L.P. Kaelbling, M.L. Littman, and A.W. Moore, "Reinforcement Learning: A Survey", Journal of Artificial Intelligence Research 4, 1996, pp. 237-285.

[10] R. Parr and S. Russell, "Reinforcement Learning with Hierarchies of Machines", Proceedings of Advances in Neural Information Processing Systems 10, 1997, pp. 1043-1049.

[11] M.L. Puterman, Markov Decision Processes: Discrete Stochastic Dynamic Programming, John Wiley & Sons, 1994.

[12] S.P. Singh, T. Jaakkola, M.L. Littman, and C. Szepesvári, "Convergence Results for Single-Step On-Policy Reinforcement-Learning Algorithms", Machine Learning 38, 2000, pp. 287-308.

[13] R.S. Sutton, "Integrated Architectures for Learning, Planning and Reacting Based on Approximating Dynamic Programming", Proceedings of the 7th International Conference on Machine Learning, 1990, pp. 216-224.

[14] R.S. Sutton and A.G. Barto, Reinforcement Learning: An Introduction, MIT Press, 1998.

[15] R.S. Sutton, D. Precup, and S. Singh, "Between MDPs and Semi-MDPs: A Framework for Temporal Abstraction in Reinforcement Learning", Artificial Intelligence 112, 1999, pp. 181-211.

[16] C.J.C.H. Watkins, Learning from Delayed Rewards, Ph.D. thesis, Cambridge University, Cambridge, England, 1989.

[17] C.J.C.H. Watkins and P. Dayan, "Technical Note: Q-Learning", Machine Learning 8, 1992, pp. 279-292.

Figure 2. The learning curves, obtained from using flat Q-Learning (Curve 1), the hierarchical algorithm without state abstraction (Curve 2), and the hierarchical algorithm with state abstraction (Curve 3).
