Human action recognition based on skeleton splitting

Transcript of Human action recognition based on skeleton splitting

Expert Systems with Applications 40 (2013) 6848–6855

Contents lists available at SciVerse ScienceDirect

Expert Systems with Applications

journal homepage: www.elsevier .com/locate /eswa

Human action recognition based on skeleton splitting q

0957-4174/$ - see front matter � 2013 Elsevier Ltd. All rights reserved.http://dx.doi.org/10.1016/j.eswa.2013.06.024

q This work has started at the Interactive Graphics Systems Group, TechnischeUniversität Darmstadt, Germany and was finalized at Kookmin University, Korea.⇑ Corresponding author.

E-mail addresses: [email protected] (S.M. Yoon), [email protected], [email protected] (A. Kuijper).

Sang Min Yoon a, Arjan Kuijper b,⇑a School of Computer Science, Kookmin University, 77 Jeongneung-ro, Sungbuk-gu, Seoul 136-702, Republic of Koreab Technische Universität Darmstadt & Fraunhofer IGD, Fraunhoferstr. 5, Darmstadt, Germany

a r t i c l e i n f o

Keywords:Human action recognitionDiffusion tensor fieldsSkeleton

a b s t r a c t

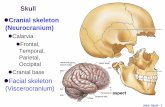

Human action recognition, defined as the understanding of the human basic actions from video streams,has a long history in the area of computer vision and pattern recognition because it can be used for var-ious applications. We propose a novel human action recognition methodology by extracting the humanskeletal features and separating them into several human body parts such as face, torso, and limbs to effi-ciently visualize and analyze the motion of human body parts.

Our proposed human action recognition system consists of two steps: (i) automatic skeletal featureextraction and splitting by measuring the similarity between neighbor pixels in the space of diffusiontensor fields, and (ii) human action recognition by using multiple kernel based Support Vector Machine.Experimental results on a set of test database show that our proposed method is very efficient and effec-tive to recognize the actions using few parameters.

� 2013 Elsevier Ltd. All rights reserved.

1. Introduction

A human action analysis and recognition system is defined toclassify the unlabeled human motions into the basic human actionssuch as jogging, walking, and boxing from image sequences. In thearea of computer vision and pattern recognition, human action rec-ognition gained significant concerns by numerous researchers tounderstand the intention/attention of humans (Fernández-Cabal-lero, Castillo, & Rodríguez-Sánchez, 2012).

The interest in this topic is encouraged by a multitude of appli-cations, like automated surveillance systems, smart home applica-tions (Diraco, Leone, & Siciliano, 2013), video indexing andbrowsing, virtual reality, human–computer interaction, analysisof sports events, and assisted living (Chaaraoui, Climent-Pérez, &Flórez-Revuelta, 2012).

For efficient human action recognition, researchers tried tounderstand the motion of humans in a single camera or multiplecamera environment (Lin & Wang, 2012). In particular, there is ahuge literature on the human action understanding from a singlecamera, but still a challenging issue due to partial occlusion, clut-ter, dependence of viewpoint, and pose ambiguity of users.

An important issue of human action recognition, which can beinterpreted as one kind of object recognition and retrieval, ishow to extract the reliable features from the deformable objectsthat have a high degree of freedom, and to automatically assess

the similarity between any pair of human motions based on a suit-able notion of similarity.

In this paper, we propose to solve these problems of human ac-tion recognition by focusing on the adequate automatic skeletalfeature extraction and separating human body into several humanbody parts like head, torso and limbs by analyzing the the Normal-ized Gradient Vector Flow (NGVF) in the space of Diffusion TensorFields (DTF). In particular, the human action recognition using seg-mented human skeleton features are very efficient because theyare very familiar to human visual perception using one dimensionaldata. Second, we adapt a Multiple Kernel-Support Vector Machine(MK-SVM) approach to efficiently classify the unlabeled humanmovement into human basic actions. Preliminary results were pre-sented in Yoon and Kuijper (2010a, 2010b).

Fig. 1 shows the total flowchart of our proposed method howwe extract and split the skeletal features for motion analysis andfeature classification for human action understanding.

In this paper, we will explain the details how we extract andvisualize the motion of deformable objects which have high degreeof freedom by extracting its skeletal feature in the topologicalspace of diffusion tensor fields. The main contribution of this papercan be classified as follows:

� We extract the skeletal features by analyzing the NGVF in thespace of diffusion tensor fields. The eigenvectors and eigen-values which come from DTF are mainly used to measure thedissimilarity between neighboring pixels to automaticallymerge and split the skeleton.� The split skeletal features will be connected to MK-SVM which

is very efficient to classify the unlabeled human motions whichhave high degree of freedom.

Fig. 1. Total flowchart of our proposed human action recognition by using skeletal feature extraction and classification.

S.M. Yoon, A. Kuijper / Expert Systems with Applications 40 (2013) 6848–6855 6849

� The experimental results show that our proposed methodologyfor human action recognition system is very independent oncamera viewpoints, and partial occlusion of the human body part.

This paper presents a combination of methods that approachthe task of human motion analysis and action recognition. Section 2presents a brief summary of related work on motion interpretationusing skeletal features: the overview of skeleton extraction ofdeformable objects and its splitting that create an image and briefsurvey of the previous remarkable methods for human action rec-ognition and diffusion tensor fields. The main contribution byusing our proposed approach is explained in Section 3. Section 3.1describes the theory which is used as a basis for an analyzing thedeformable object by segmenting the image into several sub-re-gions which have similar characteristics. The features which areextracted by analyzing the segmented human body parts are usedfor recognizing the basic human actions like walking, jogging, andboxing, etc. The experimental results which are presented in Sec-tion 4 show that our proposed method is very reliable and efficientto understanding the ambiguous human motions. Lastly, Section 5concludes the work with a summary and a discussion of the pre-sented approach and possibilities for extensions.

2. Related work

This paper for human action recognition is closely related toseveral areas in computer vision and pattern recognition includingskeleton extraction from binarized foreground object and auto-matic skeleton splitting and segmentation and diffusion tensorfields. In this section, we survey the previous remarkable workson human action analysis and its methodologies.

2.1. Skeleton extraction and splitting

The structural analysis of deformable objects is one of impor-tant issues in computer vision and pattern recognition because itcan be used in many applications, including shape matching,computer animation, and object registration and visualization. In

particular, the skeleton which is precisely defined as a Medial AxisTransform (MAT) in the continuum was given by Blum (1967), is acompact one-dimensional representation of complex and deform-able objects which have high degree of freedom. It also describesan object’s geometry and topology by using few data. Since the firststudy of skeleton technique through estimating the MAT, the skel-eton extraction from the deformable objects has attracted atten-tion from many researchers in various application domains.Previous skeleton computing methodologies can be roughly classi-fied into three categories by approaches: topological thinning(Borgefors, Nyström, & Di Baja, 1999), distance transform basedskeleton extraction (Bitter, Kaufman, & Sato, 2001; Kuijper & Olsen,2005), and geometric methods (Kuijper, 2007; Ma, Wu, & Ouh-young, 2003). However, existing skeleton extraction and splittingalgorithms are still weak because previous methods are very ori-ented to their applications and dependent on high computationalcomplexity, noise sensitivity, and partial occlusions within a singu-lar region of a given shape.

The deformable object’s appearance representation methodolo-gies using local features like SIFT, color, texture, shape, depth mapfrom stereo image, and skeleton can have a significant impact onthe effectiveness of motion analysis strategy. A successful recogni-tion technique has to be robust to visual transformations likearticulation has to effectively capture the variations in the shapeof the target object due to these transformations. In previous shaperepresentation and analysis methodologies, the objects arerepresented as curves, point sets or feature sets, and skeletons.Sebastian and Kimia (2001) compare two techniques for matchingshapes, one is based on matching their outline curves and thesecond based on matching their skeletons. They provided thatthe skeleton based shape representation and analysis methodwas better than curve based representation methods. As theapplications of motion analysis, human action recognition fromimage sequences/volume data and sketch based image detectionand retrieval provide numerous literature. The experimentalresults from Sebastian and Kimia (2001) encourage us to compli-cate the drawbacks of previous works and overcome the state ofthe art.

6850 S.M. Yoon, A. Kuijper / Expert Systems with Applications 40 (2013) 6848–6855

2.2. Human action recognition

Some of the recent work which is done in the area of human ac-tion recognition can be largely separated into four categories:structural methods (Bregler & Malik, 1998; Deutscher, Blake, &Reid, 2000; Gavrila & Davis, 1995; Rohr, 1997), appearance meth-ods using motion templates (Bobick, Davis, Society, & Society,2001; Hoey & Little, 2000; Li, Dettmer, & Shah, 1997), statisticalappearance-based methods (Doretto, Cremers, Favaro, & Soatto,2003; Efros, Berg, Berg, Mori, & Malik, 2003; Irani, An, & Cohen,1999; Polana & Nelsons, 1992), and event-based motion interpre-tation methods (Engel & Rubin, 1986; Rao, Yilmaz, & Shah, 2002;Rubin & Richards, 1985; Zelnik-manor & Irani, 2001).

The structural methods use parameterized models describinggeometric configurations and relative motions of parts in the mo-tion patterns. The structural motion analysis and recognition pro-vides the explicit locations of parts which lead to advantages forapplication of Human Computer Interaction and motion animation,but this approach requires a large number of free parameters thathave to be estimated. An appearance-based methods usingtemplates features need a lower degree of freedom than structuralapproach, but it relies on either spatial alignment, or spatial–temporal registration of image sequences prior to reconstruction.A statistical approach is proposed to overcome the difficulty offinding corresponding features between models and structure intest images of structural and appearance based methods. Eventbased human action recognition methods are suffered from thelack of information about the motion. Most of the above studiesare based on computing local space–time gradient or other inten-sity based features and thus may be unreliable in the cases of lowquality video, motion discontinuities and motion aliasing.

2.3. Diffusion tensor fields

In medical applications, Diffusion Magnetic Resonance Imaging(MRI) is introduced as a powerful way to map white matter fibersin vivo images of biological tissues weighted with local micro-structural characteristics of water diffusion (Hsu, Muzikant, Matu-levicius, Penland, & Henriquez, 1998; Kniss, Kindlmann, & Hansen,2002; Scollan, Holmes, Winslow, & Forder, 1998; Taylor & Bushell,1985). Diffusion MRI methods are separated into two large catego-ries: one is the Diffusion Weighted Imaging (DWI) (Hsu et al.,1998; Taylor & Bushell, 1985) and the other is Diffusion TensorImaging (DTI) (Hsu et al., 1998; Scollan et al., 1998).

The Diffusion Tensor Imaging technique takes advantage of themicroscopic diffusion of water molecules, which is less restrictedalong the direction aligned with the internal structure than alongits traverse direction. The measured ratio of water diffusion willdiffer depending on the direction from which an observer is look-ing. In DT imaging, each pixel/voxel has one or more pairs ofparameters: a ratio of diffusion and a preferred direction ofdiffusion for which parameter is valid. The properties of each

Fig. 2. Normalized Gradient Vecto

pixel/voxel can be calculated by vector, each obtained with a dif-ferent orientation of the diffusion sensitizing gradients. Histori-cally, Moseley (Liu, Acar, & Moseley, 2004) reported that waterdiffusion in white matter is varied dependent on the orientationof tracts relative to the orientation of the diffusion gradient appliedby image scanner and described in tensor. Basser, Mattiello, and Bi-han (1994) showed the classical ellipsoid tensor formulation couldbe deployed to analyze diffusion MR data.

3. Skeleton extraction and splitting in diffusion tensor fields

In this section, we explain the methodology for skeleton extrac-tion and splitting in the space of two dimensional second-orderdiffusion tensor fields. In a black and white image from the clutterimage sequence, we first convert it to the vertical and horizontalgradient vectors. Beased on the gradient vector from an image,we extract the normalized gradient vector flow.

3.1. Normalized Gradient Vector Flow of image

Originally, the Gradient Vector Flow (GVF) field was proposedto solve the problem of initialization and poor convergence toboundary concave objects yielding a traditional snake form Kass,Witkin, and Terzopoulos (1988).

The traditional GVF is a vector diffusion approach on Partial Dif-ferential Equations (PDEs). It converges towards the object bound-ary when very near to the boundary, but varies smoothly overhomogeneous image regions extending to the image border. Themain advantage of traditional GVF fields is that it is able to capturea snake from a long range and could force it onto the concave re-gions. Mathematically defined, the traditional GVF is the vectorfield v that minimizes the following energy functional;

e ¼Z Z

l u2x þ u2

y þ v2x þ v2

y

� �þ krfk2kv �rfk2dxdy ð1Þ

where v = [u(x,y),v(x,y)], and the initial value of v(x,y) is determinedbyrf(x,y).rf(x,y) is the gradient image derived from a given image.l is a regularization parameter to be set on the basis of noise pres-ent in image. Minimizing this energy will force v(x,y) nearly equalto the gradient of the edge map where krf(x,y)k is large. Neverthe-less, the general GVF method cannot efficiently extract the medialaxis as a weak vector has very little impact on its neighbors thathave much stronger magnitudes.

A Normalized Gradient Vector Flow (NGVF) can tremendouslyaffect a strong vector, both in magnitude and in orientation by nor-malizing the vectors over the image domain during each diffusioniteration (Yu, Bajaj, & Bajaj, 2002). The NGVF also reduce the noiseswhen the edges are disconnected.

Fig. 2 shows the NGVF field from a given image. The traditionalGVF has difficulty preventing the vectors on the boundary frombeing significantly influenced by the nearby boundaries and thuscauses a problem such that the ‘‘snake’’ may move out of the

r Flow from a simple image.

Fig. 4. Ellipsoidal representation of each pixel by using eigenvalues and eigenvec-tors which come from diffusion tensor fields.

S.M. Yoon, A. Kuijper / Expert Systems with Applications 40 (2013) 6848–6855 6851

boundary gap. NGVF avoids this problem. We can see the detail ofthe NGVF in the vector around the boundary gap point.

3.2. Skeleton extraction in second order diffusion tensor field

In this section, we will explain an automatic skeleton extractionand refinement by a topological analysis in NGVF fields.

The diffusion tensor field, which is defined as a topological rep-resentation of a 2D symmetric, second-order tensor field, is givenby:

Tð�xÞ ¼T11ðx; yÞ T12ðx; yÞT21ðx; yÞ T22ðx; yÞ

� �ð2Þ

Tð�xÞ is fully equivalent to two orthogonal eigenvectors

Tð�xÞ ¼ kið�xÞ�eið�xÞ ð3Þ

where i = 1,2. kið�xÞ are the eigenvalues of Tð�xÞ and �eið�xÞ define theunit eigenvectors. The topology of a tensor field Tð�xÞ is the topologyof its eigenvector fields �v ið�xÞ.

According to Pajevic and Pierpaoli (1999), we can build a topo-logical analysis of diffusion tensor fields from the concept ofdegenerated points, which play the role of critical points in vectorfields. Streamlines in vector fields never cross each other except atcritical points and hyperstreamlines in tensor fields meet eachother only at degenerated points. Thus, the degenerated pointsare the basic singularities underlying the topology of tensor fields.Mathematically, those points are defined as the two eigenvalues ofTð�xÞ which are equal to each other. Degenerated points in tensorfields are the basic constituents of critical points in vector fields.There are various types of critical points – such as nodes, foci, cen-ters, and saddle points – that correspond to different local patternsof the neighboring streamlines. Delmarcelle and Hesselink (1994)has proven that the local classification of line fields or degeneratepoints can be determined by constraints.

From the degenerated point, x0, the partial derivatives are eval-uated according to

a ¼ 12@ðT11 � T22Þ

@xb ¼ 1

2@ðT11 � T22Þ

@y

c ¼ @ðT12Þ@x

d ¼ @ðT12Þ@y

ð4Þ

Fig. 3. Skeleton extraction in

An important quantity for the characterization of degeneratedpoints is

d ¼ ad� bc ð5Þ

So a simple point topologically should be classified into twotypes: trisector if d < 0, and wedge if d > 0. Within the target object,these points are assumed as trisector.

Thinning the skeleton, connected by continuous degeneratedpoints, can be very efficiently done by using the fact that a pointwithin the object which has not at least one background point asan immediate neighbor cannot be removed, since this would createa hole. Therefore, the only potentially removable points are at theborder of the object. Once a border point is removed, only itsneighbors may become removable. Fig. 3 shows the extracted skel-etal features from human motions.

3.3. Automatic skeleton splitting using diffusion tensor similaritymeasure

After obtaining a skeleton of human body parts, the skeleton issplit into several branches by analyzing its tensorial characteris-tics. Within an extracted skeleton, we can separate the elementsby using following definition.

diffusion tensor fields.

Fig. 5. Skeleton extraction in diffusion tensor fields.

Fig. 6. Skeleton extraction and splitting of human body parts.

6852 S.M. Yoon, A. Kuijper / Expert Systems with Applications 40 (2013) 6848–6855

� branch point is the pixel inside the skeleton that connects eachbranch.� end point is the pixel inside the skeleton with only one neighbor.� joint point is the pixel inside a branch that separate the

neighbor.

End points can be interpreted as the polar points in the space ofdiffusion tensor fields and branch points are also can be under-stand as the combination of various eigenvalues in neighbor pixels.

Within a branch, we split the branch into several parts withsimilarity measure between neighbor skeletal elements. To sepa-rate the human skeletal features into several human body parts,we first analyze its skeletal features into two-dimensional ellipsoi-dal model which is shown in Fig. 4. The scale and rotation of twodimensional ellipsoidal model is determined by its eigenvaluesand eigenvectors which follow from the diffusion tensor field.

For each pixel Ii which is recognized as the skeleton, we mea-sure the dissimilarity between neighbor skeleton pixel elementsand measure the dissimilarity using tensorial dissimilarity func-tion. Given two tensors Ti and Tj, there are some dissimilarity mea-

sures that might be used to compare them. The tensor can berepresented by an ellipsoid, where the main axis lengths are pro-portional to the square roots of the tensor eigenvalues k1 andk2(k1 > k2) and their direction correspond to the respective eigen-vectors. With this properties, we can measure the dissimilarity be-tween neighbor elements. The simplest one is the tensor dotproduct:

d1ðTi; TjÞ ¼X2

i

X2

j

k1i k

2j e1

i � e2j

� �2ð6Þ

It uses not only the principal eigenvector direction, but the fulltensor information. Another dissimilarity measure that uses thefull tensor information is the Frobenius norm:

d2ðTi; TjÞ ¼ffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiTraceððTi � TjÞ2Þ

qð7Þ

The dissimilarity measure between two elements is the multi-plication of d1 and d2. Joint points are determined by comparingthe similarity measure between neighbor points and joint points

Tabl

e1

2Dhu

man

acti

onre

cogn

itio

nra

tio

ofH

uman

Eva

Dat

aset

.In

bold

face

:th

eac

tion

tobe

reco

gniz

ed.

Act

ion

Box

Wal

kJo

gB

oxW

alk

Jog

Box

Wal

kJo

gB

oxW

alk

Jog

Box

Wal

kJo

gB

oxW

alk

Jog

Box

Wal

kJo

g

(a)

Our

prop

osed

hum

anac

tion

reco

gnit

ion

for

diff

eren

t7

view

poin

tsB

ox95

32

972

193

43

877

697

21

935

285

105

Wal

k3

889

683

111

927

388

93

8710

588

74

839

Jog

25

934

1185

27

914

1086

612

826

985

69

85

Act

ion

Box

Wal

kJo

gG

estu

reB

oxW

alk

Jog

Ges

ture

Box

Wal

kJo

gG

estu

reB

oxW

alk

Jog

Ges

ture

Box

Wal

kJo

gG

estu

reB

oxW

alk

Jog

Ges

ture

(b)

2Dhu

man

acti

onre

cogn

itio

nra

tio

usin

gCo

ndit

iona

lR

ando

mFi

elds

for

6m

odel

s(N

ing

etal

.,20

08)

Box

79.4

0.4

10.9

9.3

75.0

016

.28.

892

.50.

95.

80.

889

.21.

08.

61.

289

.50.

86.

53.

279

.52.

27.

610

.7W

alk

0.1

954.

90

096

.33.

70

097

.80.

22.

00

94.8

0.5

4.7

095

.60.

73.

70.

495

.52.

21.

9Jo

g3.

94.

389

.72.

11.

40

97.3

1.3

0.2

0.4

99.4

00.

40.

299

.40

00.

599

.40.

10.

94.

594

.60

Ges

ture

25.0

1.6

073

.421

.40.

20

77.4

7.9

2.4

0.1

89.6

11.8

2.9

0.3

85.1

10.9

00.

688

.511

.35.

50.

482

.8

S.M. Yoon, A. Kuijper / Expert Systems with Applications 40 (2013) 6848–6855 6853

are decided when the direction of NGVF changes and scale of mainand sub eigenvalue is over the threshold. In Fig. 5, we display theextracted skeleton using ellipsoid representation method. Theend points are painted red, the branch points green, and the jointpoints blue, according to the tensorial dissimilarity measure.

Using the ellipsoidal representation of the human model, wesegment the human model according to its tensorial characteristicswhich are shown in Fig. 6. It shows the splitting areas of the targetobject and its ellipsoidal representation of the segmented regionsfrom the image. The eigenvalues and eigenvectors of the seg-mented skeletons from the images will be used as the input datafor human action recognition.

3.4. Human action recognition

We describe how the Support Vector Machines (SVM) is usedfor efficient classification of highly variant human motions. SVMsupports the classification tasks and handles the multiple continu-ous and categorical variables. The performance of different classi-fiers applied in object detection and recognition systems havebeen evaluated and compared in the area of pattern recognitionand data learning. Bazzani et al. (2001) concluded that the SVMperforms better than the Multi-Layer Perception (MLP) for a smallnumber of training data (Bazzani et al., 2001). Having evaluatedthe SVM, Kernel Fisher Discriminant (KFD), Relevant Vector Ma-chine (RVF), Feedforward Neural Network (FNN), and committeemachines, Wei, Yang, Nishikawa, and Jiang (2005) concluded thatthe Kernel-based classification like SVM yielded the bestperformance.

4. Experiments

For human action recognition, we used public image data suchas the HumanEva database1 and the KTH human action dataset2 tocompare our method to other proposed human action recognitionapproaches. The HumanEva dataset is separated into boxing, walk-ing, and jogging, while the KTH human action database is morespecifically separated into 6 human actions like hand-clapping,hand-waving, jogging, running, walking, and boxing. In the MultipleKernel Support Vector Machine methodology, various kernels likeRadial Basis Function (RBF), quadric, and linear kernels are used fora robust action recognition system.

Table 1 shows the human action recognition results for theHumanEva database. Table 1(a) shows the our proposed methodol-ogy from the 7 different viewpoints and Table 1(b) is the humanaction recognition using Conditional Random Fields (CRF) for 6models (Ning, Xu, Gong, & Huang, 2008). Unfortunately, it is diffi-cult to directly compare the our proposed method to the CRF basedhuman action recognition, but it can clearly be seen that our pro-posed method has a more balanced acceptance ratio than the ap-proach by Ning et al. (2008). In particular, the variations of theboxing action recognition ratio are larger than other human actionslike walking and jogging, because the boxing action is moredependent on the viewpoints. In the boxing action, the acceptanceratio of camera views 4 and 7 is less than other camera view-points, because we were not able to extract the features whichare closely related to boxing actions as the hands were not dis-tinctly visible. We also applied to our proposed skeletal featuresto KNN and single kernel Support Vector Machine to comparethe acceptance ratio in Table 2. In a single kernel based SupportVector Machine, Radial Basis Function (RBF) is used for kernel inour experiments.

1 http://vision.cs.brown.edu/humaneva/2 http://www.nada.kth.se/cvap/actions/

Table 22D human action recognition ratio of the KTH Dataset using different classification methods. In bold face: the action to be recognized.

Action Box Walk Jog Run Hand-clap Hand-wave Box Walk Jog Run Hand-clap Hand-wave

(a) our approach (b) Wang and Mori (2009)Box 95 1 3 1 0 0 91 3 2 3 0 1Walk 3 81 9 5 2 0 2 80 11 5 0 2Jog 1 3 89 7 0 0 2 17 53 28 0 1Run 0 1 6 92 0 1 2 12 28 55 1 3Hand-clap 1 1 2 3 87 6 0 0 1 1 97 2Hand-wave 0 1 3 4 2 90 0 1 0 1 1 96

(c) Roth et al. (2009) (d) Danafar and Gheissari (2007)Box 93 0 0 0 6 1 86 0 0 0 14 0Walk 1 89 4 5 1 0 0 89 0 11 0 0Jog 0 20 56 24 0 0 0 92 8 0 0 0Run 0 3 13 84 0 0 0 0 8 92 0 0Hand-clap 22 0 0 1 76 1 22 0 0 0 78 0Hand-wave 7 0 0 1 4 88 13 0 0 0 12 75

(e) Niebles et al. (2006) (f) Yeo et al. (2006)Box 100 0 0 0 0 0 86 0 0 0 14 0Walk 1 79 14 1 0 0 0 89 0 11 0 0Jog 0 11 52 37 0 1 0 2 98 0 0 0Run 0 1 11 88 0 0 0 0 8 92 0 0Hand-clap 6 0 0 0 93 1 22 0 0 0 78 0Hand-wave 23 0 0 0 0 77 13 0 0 0 12 75

6854 S.M. Yoon, A. Kuijper / Expert Systems with Applications 40 (2013) 6848–6855

The KTH human action data is less dependent on camera view-point than the HumanEva dataset, but its image resolution is lessthan the HumanEva dataset and has noise in the background. Re-sults for the HumanEva presented in Table 2 show that our pro-posed method (Table 2(a)) is again more balanced in recognizingthe various human motions than other methods (Table 2(b–f))which are based on local features, spatio–temporal features oroptical flow (Danafar & Gheissari, 2007; Niebles, Wang, & Fei-fei,2006; Roth, Mauthner, Khan, & Bischof, 2009; Wang & Mori,2009; Yeo, Ahammad, Ramch, & Sastry, 2006). This is becauseour method is very robust against noise, clutter, pose ambiguityand illumination. We also effectively classify the features by mea-suring the dissimilarity between actions using tensorial features.

Generally, there are many reasons for not successfully recogniz-ing human actions, like shadows, viewpoints, and the ambiguity ofhuman actions. In our approach, we were only not able to extractthe meaningful features when the arms were located in the torso.

5. Discussion

In this paper we presented a novel human action recognitiontechnique whose properties come from the segmented humanbody parts’ eigenvalues and eigenvectors, derived using diffusiontensor fields. These properties are used in a multiple kernel SVMapproach yielding a efficient and effective human action recogni-tion system. Experiments on publicly available data sets show thatour method results in recognition rates that are better and morestable than those from other approaches.

Future work will focus on efforts to extract the robust featuresfrom a target object to effectively understand human behaviors,and extensions to 3D models.

Acknowledgement

S.M. Yoon was funded by Weather Information Service Engine(WISE) project of CATER (Center for Atmosphere and EarthquakeResearch) sponsored by the Korea Meteorological Administration.

References

Basser, P., Mattiello, J., & Bihan, D. L. (1994). Estimation of the effective self-diffusiontensor from the nmr spin echo. Journal of Magnetic Resonance B, 103(3),247–254.

Bazzani, A., Bevilacqua, A., Bollini, D., Bevilacquaz, R., Brancaccio, R., Lanconelli,N., et al. (2001). An svm classifier to separate false signals frommicrocalcifications in digital mammograms. Physics in Medicine and Biology,1651–1663.

Bazzani, A., Bevilacqua, A., Bollini, D., Bevilacquaz, R., Brancaccio, R., Lanconelli,N., et al. (2001). An svm classifier to separate false signals frommicrocalcifications in digital mammograms. Physics in Medicine and Biology,46, 1651–1663.

Bitter, I., Kaufman, A. E., & Sato, M. (2001). Penalized-distance volumetric skeletonalgorithm. IEEE Transactions on Visualization and Computer Graphics, 7, 195–206.

Blum, H. (1967). A transformation for extracting new descriptors of shape. In W.Wathen-Dunn (Ed.), Models for the perception of speech and visual form(pp. 362–380). Cambridge: MIT Press.

Bobick, A. F., Davis, J. W., Society, I. C., & Society, I. C. (2001). The recognition ofhuman movement using temporal templates. IEEE Transactions on PatternAnalysis and Machine Intelligence, 23, 257–267.

Borgefors, G., Nyström, I., & Di Baja, S. G. (1999). Computing skeletons in threedimensions. Pattern Recognition, 32(7), 1225–1236<http://dx.doi.org/10.1016/S0031-3203(98)00082-X> .

Bregler, C., & Malik, J. (1998). Tracking people with twists and exponential maps. InProceedings. 1998 IEEE computer society conference on computer vision andpattern recognition, 1998 (pp. 8–15). http://dx.doi.org/10.1109/CVPR.1998.698581

Chaaraoui, A. A., Climent-Pérez, P., & Flórez-Revuelta, F. (2012). A review on visiontechniques applied to human behaviour analysis for ambient-assisted living.Expert Systems with Applications, 39(12), 10873–10888.

Danafar, S., & Gheissari, N. (2007). Action recognition for surveillance applicationsusing optic flow and svm. In Proceedings of ACCV (pp. 457–466).

Delmarcelle, T., & Hesselink, L. (1994). The topology of symmetric, second-ordertensor fields. In Proceedings of the conference on visualization ’94 (pp. 140–147).Los Alamitos, CA, USA: IEEE Computer Society Presshttp://portal.acm.org/citation.cfm?id=951087.951115.

Deutscher, J., Blake, A., & Reid, I. (2000). Articulated body motion capture byannealed particle filtering. In Proceedings. IEEE conference on computer vision andpattern recognition, 2000 (Vol. 2, pp. 126–133). http://dx.doi.org/10.1109/CVPR.2000.854758

Diraco, G., Leone, A., & Siciliano, P. (2013). Human posture recognition with a time-of-flight 3d sensor for in-home applications. Expert Systems with Applications,40(2), 744–751.

Doretto, G., Cremers, D., Favaro, P., & Soatto, S. (2003). Dynamic texturesegmentation. In IEEE International conference on computer vision 2003 (pp.1236–1242).

Efros, A. A., Berg, A. C., Berg, E. C., Mori, G., & Malik, J. (2003). Recognizing action at adistance. In ICCV 2003 (pp. 726–733).

Engel, S., & Rubin, J. (1986). Detecting visual motion boundaries. WMRA, 86,107–111.

Fernández-Caballero, A., Castillo, J. C., & Rodríguez-Sánchez, J. M. (2012). Humanactivity monitoring by local and global finite state machines. Expert Systemswith Applications, 39(8), 6982–6993.

Gavrila, D. M., & Davis, L. S. (1995). Towards 3-d model-based tracking andrecognition of human movement: a multi-view approach. In internationalworkshop on automatic face- and gesture-recognition 1995 (pp. 272–277). IEEEComputer Society.

Hoey, J., & Little, J.J. (2000). Representation and recognition of complex humanmotion. In Proceedings of the IEEE conference on computer vision and patternrecognition (CVPR) (Vol. 1, pp. 752–759).

S.M. Yoon, A. Kuijper / Expert Systems with Applications 40 (2013) 6848–6855 6855

Hsu, E. W., Muzikant, A. L., Matulevicius, S. A., Penland, R. C., & Henriquez, C. S.(1998). Magnetic resonance myocardial fiber-orientation mapping withdirect histological correlation. American Journal of Physiology, 274(5 pt 2),1627–1634.

Irani, M., An, P., & Cohen, M. (1999). Direct recovery of planar-parallax frommultiple frames. In ICCV99 workshop: Vision algorithms 99 (pp. 1528–1534).Corfu: Springer-Verlag.

Kass, M., Witkin, A., & Terzopoulos, D. (1988). Snakes: Active contour models.International Journal of Computer Vision, 1(4), 321–331.

Kniss, J., Kindlmann, G., & Hansen, C. (2002). Multidimensional transfer functionsfor interactive volume rendering. IEEE Transactions on Visualization andComputer Graphics, 8, 270–285http://doi.ieeecomputersociety.org/10.1109/TVCG.2002.1021579 .

Kuijper, A. (2007). Deriving the medial axis with geometrical arguments for planarshapes. Pattern Recognition Letters, 28(15), 2011–2018.

Kuijper, A., & Olsen, O. (2005). Geometrical skeletonization using the symmetry set.In IEEE proceedings of the international conference on image processing – ICIP2005, Genova, Italy, 11–13 September, 2005 (Vol. I, pp. 497–500).

Li, N., Dettmer, S., & Shah, M. (1997). Visually recognizing speech usingeigensequences. In M. Shah & R. Jain (Eds.), Motion-based recognition(pp. 345–371). Kluwer Academic Publishing.

Lin, Y.-L., & Wang, M.-J. J. (2012). Constructing 3d human model from front and sideimages. Expert Systems with Applications, 39(5), 5012–5018.

Liu, C., Acar, B., & Moseley, M. E. (2004). Characterizing non-Gaussian diffusion byusing generalized diffusion tensors. Magnetic Resonance in Medicine, 51(5),924–937.

Ma, W. C., Wu, F. C., & Ouhyoung, M. (2003). Skeleton extraction of 3d objects withradial basis functions. In Proceedings of shape modeling international 2003 (pp.207–215).

Niebles, J. C., Wang, H., & Fei-fei, L. (2006). Unsupervised learning of human actioncategories using spatial–temporal words. In Proceedings of the british machinevision conference 2006 Edinburgh, UK, September 4–7 (pp. 1249–1258).

Ning, H., Xu, W., Gong, Y., & Huang, T. (2008). Latent pose estimator for continuousaction recognition. In Computer vision – ECCV 2008, 10th european conference oncomputer vision, Marseille, France, October 12–18, 2008, proceedings, Part II.Lecture notes in computer science (Vol. 5303, pp. 419–433). Springer.

Pajevic, S., & Pierpaoli, C. (1999). Color schemes to represent the orientation ofanisotropic tissues from diffusion tensor data: application to white matter fibertract mapping in the human brain. Magnetic Resonance in Medicine, 42(3),526–540.

Polana, R., & Nelson, R. C. (1992). Recognition of motion from temporal texture. InProc. computer vision and pattern recognition 1992 (pp. 129–134).

Rao, C., Yilmaz, A., & Shah, M. (2002). View-invariant representation and recognitionof actions. International Journal of Computer Vision, 50, 203–226. http://dx.doi.org/10.1023/A:1020350100748<http://dx.doi.org/10.1023/A:1020350100748> .

Rohr, K. (1997). Human movement analysis based on explicit motion models. In M.Shah & R. Jain (Eds.), Motion-based recognition. Computational imaging and vision(Vol. 9, pp. 171–198). Netherlands: Springer.

Roth, P., Mauthner, T., Khan, I., & Bischof, H. (2009). Efficient human actionrecognition by cascaded linear classifcation. In 2009 IEEE 12th internationalconference on computer vision workshops (ICCV Workshops) (pp. 546–553).http://dx.doi.org/10.1109/ICCVW.2009.5457655.

Rubin, J. M., & Richards, W. A. (1985). Boundaries of visual motion, Tech. Rep., MITAI Lab.

Scollan, D. F., Holmes, A., Winslow, R., & Forder, J. (1998). Histological validation ofmyocardial microstructure obtained from diffusion tensor magnetic resonanceimaging. American Journal of Physiology, 275(6 pt 2), 2308–2318.

Sebastian, T. B., & Kimia, B. B. (2001). Curves vs skeletons in object recognition. InIEEE international conference of image processing 2001 (pp. 247–263).

Taylor, D. G., & Bushell, M. C. (1985). The spatial mapping of translational diffusioncoefficients by the nmr imaging technique. Physics in Medicine and Biology,30(4), 345<http://stacks.iop.org/0031-9155/30/i=4/a=009> .

Wang, Y., & Mori, G. (2009). Max-margin hidden conditional random fields forhuman action recognition. In 2009 IEEE computer society conference on computervision and pattern recognition (CVPR 2009) 20–25 June 2009, Miami, Florida, USA(pp. 872–879).

Wei, L., Yang, Y., Nishikawa, R. M., & Jiang, Y. (2005). A study on several machine-learning methods for classification of malignant and benign clusteredmicrocalcifications. IEEE transaction on Medial Imaging, 371–380.

Yeo, C., Ahammad, P., Ramch, K., & Sastry, S. S. (2006). Compressed domain real-time action recognition. In Proceedings of 8th IEEE international workshop onmultimedia signal processing (MMSP) (pp. 33–36).

Yoon, S. M., & Kuijper, A. (2010). 3D human action recognition using modelsegmentation. In International conference on image analysis and recognition –ICIAR 2010 (June 21–23, 2010, Povoa de Varzim, Portugal). LNCS (Vol. 6111, pp.189–199).

Yoon, S. M., & Kuijper, A. (2010a). Human action recognition using segmentedskeletal features. In 20th International conference on pattern recognition –ICPR2010 (Istanbul, Turkey, August 23–26, 2010) (pp. 3740–3743). IEEE.

Yu, Z., Bajaj, C., & Bajaj, R. (2002). Normalized gradient vector diffusion and imagesegmentation. In Proceedings of ECCV (pp. 517–530). Springer.

Zelnik-manor, L., & Irani, M. (2001). Event-based analysis of video. In Proc. CVPR2001 (pp. 123–130).