Hidden Markov Models I Biology 162 Computational Genetics Todd Vision 14 Sep 2004.


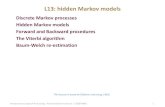

Hidden Markov Models I

Biology 162 Computational Genetics

Todd Vision, 14 Sep 2004

Hidden Markov Models I

• Markov chains
• Hidden Markov models
  – Transition and emission probabilities
  – Decoding algorithms
    • Viterbi
    • Forward
    • Forward and backward
  – Parameter estimation
    • Baum-Welch algorithm

Markov Chain

• A particular class of Markov process
  – Finite set of states
  – Probability of being in state i at time t+1 depends only on state at time t (Markov property)
• Can be described by
  – Transition probability matrix
  – Initial probability distribution π0

Markov Chain

[Figure: Markov chain with three states (1, 2, 3), self-transitions a11, a22, a33, and transitions a12, a21, a23, a32, a13, a31 between the states]

Transition probability matrix

• Square matrix with dimensions equal to the number of states

• Describes the probability of going from state i to state j in the next step

• Sum of each row must equal 1

$$A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}, \qquad \sum_j a_{ij} = 1$$

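Multistep transitions

• Probability of a 2-step transition from i to j is the sum, over every intermediate state k, of the product of the two 1-step transition probabilities
• And so on for n steps

$$a_{ij}^{(2)} = \sum_k a_{ik}\, a_{kj}$$

$$A^{(2)} = A^2, \qquad A^{(n)} = A^n$$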
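The multistep rule can be checked numerically. The following is a minimal sketch in plain Python; the 3-state matrix `A` is a made-up illustration (not taken from the slides), and `mat_mul` and `mat_pow` are hypothetical helper names.

```python
def mat_mul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(A, n):
    """Return the n-step transition matrix A^n by repeated multiplication."""
    size = len(A)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, A)
    return result


# Illustrative transition matrix (assumed values); each row sums to 1.
A = [[0.7, 0.2, 0.1],
     [0.3, 0.5, 0.2],
     [0.2, 0.3, 0.5]]

A2 = mat_pow(A, 2)
# Entry (1, 3) of A^2 is the sum over intermediate states k of a_1k * a_k3.
print(A2[0][2])
```

Each row of `A2` should still sum to 1, since it is again a transition probability matrix.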

Stationary distribution

• A vector of frequencies that exists if chain
  – Is irreducible: each state can eventually be reached from every other
  – Is aperiodic: state sequence does not necessarily cycle

$$\pi' = \pi' A$$

$$A^{(n)} \xrightarrow[n \to \infty]{} \begin{bmatrix} \pi'_1 & \pi'_2 & \cdots & \pi'_N \\ \pi'_1 & \pi'_2 & \cdots & \pi'_N \\ \vdots & \vdots & & \vdots \end{bmatrix}, \qquad \sum_i \pi'_i = 1$$
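One simple way to approximate the stationary distribution is to apply the transition matrix repeatedly until the distribution stops changing. The sketch below assumes an irreducible, aperiodic chain; the 2-state matrix and the function names `step` and `stationary` are illustrative assumptions, not part of the original slides.

```python
def step(pi, A):
    """One step of the chain: return the row vector pi * A."""
    n = len(A)
    return [sum(pi[i] * A[i][j] for i in range(n)) for j in range(n)]


def stationary(A, tol=1e-12, max_iter=10000):
    """Iterate pi <- pi * A from a uniform start until the change is below tol."""
    n = len(A)
    pi = [1.0 / n] * n
    for _ in range(max_iter):
        nxt = step(pi, A)
        if max(abs(a - b) for a, b in zip(pi, nxt)) < tol:
            return nxt
        pi = nxt
    return pi


# Illustrative 2-state chain (assumed values); each row sums to 1.
A = [[0.9, 0.1],
     [0.5, 0.5]]

pi = stationary(A)
print(pi)  # approximately [5/6, 1/6] for this matrix
```

For this matrix the result can be verified by hand: (5/6)(0.9) + (1/6)(0.5) = 5/6, so the vector is unchanged by one more step.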

Reducibility

Periodicity

Applications

• Substitution models
  – PAM
  – DNA and codon substitution models

• Phylogenetics and molecular evolution

• Hidden Markov models

Hidden Markov models: applications

• Alignment and homology search
• Gene finding
• Physical mapping
• Genetic linkage mapping
• Protein secondary structure prediction

Hidden Markov models

• Observed sequence of symbols
• Hidden sequence of underlying states
• Transition probabilities still govern transitions among states
• Emission probabilities govern the likelihood of observing a symbol in a particular state

Hidden Markov models

Let π represent the state and x represent the symbol

Transition probabilities: $a_{kl} = P(\pi_i = l \mid \pi_{i-1} = k)$

Emission probabilities: $e_k(b) = P(x_i = b \mid \pi_i = k)$

A coin flip HMM

• Two coins
  – Fair: 50% Heads, 50% Tails
  – Loaded: 90% Heads, 10% Tails

What is the probability for each of these sequences assuming one coin or the other?

A: HHTHTHTTHT
B: HHHHHTHHHH

$$P_{A,F} = (0.5)^{10} \approx 1 \times 10^{-3} \qquad P_{A,L} = (0.9)^5 (0.1)^5 \approx 6 \times 10^{-6}$$

$$P_{B,F} = (0.5)^{10} \approx 1 \times 10^{-3} \qquad P_{B,L} = (0.9)^9 (0.1)^1 \approx 4 \times 10^{-2}$$
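The single-coin probabilities are just products of per-flip emission probabilities. A minimal sketch, using the emission values given for the two coins; the names `FAIR`, `LOADED`, and `sequence_probability` are hypothetical.

```python
FAIR = {"H": 0.5, "T": 0.5}
LOADED = {"H": 0.9, "T": 0.1}


def sequence_probability(seq, emission):
    """Probability of seq when every flip uses the same coin."""
    p = 1.0
    for symbol in seq:
        p *= emission[symbol]
    return p


SEQ_A = "HHTHTHTTHT"
SEQ_B = "HHHHHTHHHH"

for name, seq in (("A", SEQ_A), ("B", SEQ_B)):
    print(name,
          "fair:", sequence_probability(seq, FAIR),
          "loaded:", sequence_probability(seq, LOADED))
```

The fair coin assigns the same probability to any 10-flip sequence, so only the loaded coin distinguishes A from B.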

A coin flip HMM

• Now imagine the coin is switched with some probability

Symbol: HTTHHTHHHTHHHHHTHHTHTTHTTHTTH
State:  FFFFFFFLLLLLLLLFFFFFFFFFFFFFL

Symbol: HHHHTHHHTHTTHTTHHTTHHTHHTHHHHHHHTTHTT
State:  LLLLLLLLFFFFFFFFFFFFFFLLLLLLLLLLFFFFF

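The formal model

[Figure: two-state HMM. State F emits H with probability 0.5 and T with probability 0.5; state L emits H with probability 0.9 and T with probability 0.1. Transitions: aFF, aFL, aLF, aLL]

where aFF, aLL > aFL, aLF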

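Probability of a state path

Symbol: T H H H
State:  F F L L

$$P(x, \pi) = a_{0F}\, e_F(T)\, a_{FF}\, e_F(H)\, a_{FL}\, e_L(H)\, a_{LL}\, e_L(H)$$

Symbol: T H H H
State:  L L F F

$$P(x, \pi) = a_{0L}\, e_L(T)\, a_{LL}\, e_L(H)\, a_{LF}\, e_F(H)\, a_{FF}\, e_F(H)$$

Generally

$$P(x, \pi) = a_{0\pi_1} \prod_{i=1}^{L} e_{\pi_i}(x_i)\, a_{\pi_i \pi_{i+1}}$$

(with the final factor $a_{\pi_L \pi_{L+1}}$ taken as 1 when no end state is modelled, as in the two examples)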
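The joint probability of a symbol sequence and a state path is a running product of transition and emission terms. The sketch below evaluates the two example paths; the slide gives no numeric transition values at this point, so it borrows aFF=aLL=0.7, aFL=aLF=0.3 and a0=(0.5, 0.5) from the Viterbi coin example later in the deck, and it assumes no end state. The names `INIT`, `TRANS`, `EMIT`, and `joint_probability` are hypothetical.

```python
INIT = {"F": 0.5, "L": 0.5}                      # a_0k
TRANS = {"F": {"F": 0.7, "L": 0.3},              # a_kl
         "L": {"F": 0.3, "L": 0.7}}
EMIT = {"F": {"H": 0.5, "T": 0.5},               # e_k(b)
        "L": {"H": 0.9, "T": 0.1}}


def joint_probability(symbols, states):
    """P(x, pi) for one symbol sequence and one state path (no end state)."""
    p = INIT[states[0]] * EMIT[states[0]][symbols[0]]
    for i in range(1, len(symbols)):
        p *= TRANS[states[i - 1]][states[i]] * EMIT[states[i]][symbols[i]]
    return p


print(joint_probability("THHH", "FFLL"))
print(joint_probability("THHH", "LLFF"))
```

With these assumed parameters the path F F L L is roughly nine times more probable than L L F F, mainly because the loaded coin rarely emits the initial T.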

HMMs as sequence generators

• An HMM can generate an infinite number of sequences
  – There is a probability associated with each one
  – This is unlike regular expressions
• With a given sequence
  – We might want to ask how often that sequence would be generated by a given HMM
  – The problem is there are many possible state paths even for a single HMM
• Forward algorithm
  – Gives us the summed probability of all state paths
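To make the "sequence generator" view concrete, here is a minimal sketch that samples symbols and hidden states from the two-coin HMM. The transition and initial probabilities are assumptions borrowed from the Viterbi coin example in this deck, and `sample_hmm` is a hypothetical name.

```python
import random

INIT = {"F": 0.5, "L": 0.5}
TRANS = {"F": {"F": 0.7, "L": 0.3},
         "L": {"F": 0.3, "L": 0.7}}
EMIT = {"F": {"H": 0.5, "T": 0.5},
        "L": {"H": 0.9, "T": 0.1}}


def sample_hmm(length, seed=0):
    """Draw a symbol sequence and its hidden state path from the HMM."""
    rng = random.Random(seed)  # seeded so the draw is reproducible
    symbols, states = [], []
    state = rng.choices(list(INIT), weights=list(INIT.values()))[0]
    for _ in range(length):
        states.append(state)
        emit = EMIT[state]
        symbols.append(rng.choices(list(emit), weights=list(emit.values()))[0])
        nxt = TRANS[state]
        state = rng.choices(list(nxt), weights=list(nxt.values()))[0]
    return "".join(symbols), "".join(states)


symbols, states = sample_hmm(30)
print("Symbol:", symbols)
print("State: ", states)
```

Different seeds give different sequences, each with its own probability under the model.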

Decoding

• How do we infer the “best” state path?
  – We can observe the sequence of symbols
  – Assume we also know
    • Transition probabilities
    • Emission probabilities
    • Initial state probabilities
• Two ways to answer that question
  – Viterbi algorithm - finds the single most likely state path
  – Forward-backward algorithm - finds the probability of each state at each position
  – These may give different answers

Viterbi algorithm

We use dynamic programming again

Maximum likelihood path: $\pi^* = \arg\max_\pi P(x, \pi)$

Assume we know the probability of the most probable path ending in state k at position i: $v_k(i)$

We can recursively find the probability of the most probable path ending in state l at the next position:

$$v_l(i+1) = e_l(x_{i+1}) \max_k \big( v_k(i)\, a_{kl} \big)$$

Viterbi with coin example

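• Let aFF=aLL=0.7, aFL=aLF=0.3, a0=(0.5, 0.5)

| State | (start) | T | H | H | H |
|---|---|---|---|---|---|
| B | 1 | 0 | 0 | 0 | 0 |
| F | 0 | 0.25* | 0.0875 | 0.0306 | 0.0107 |
| L | 0 | 0.05 | 0.0675* | 0.0425* | 0.0268* |

• * = F L L L (the most probable state path)
• Better to use log probabilities!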
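The recursion and traceback can be sketched in log space, as the last bullet recommends. This is an illustrative implementation under the parameters of the coin example, not necessarily how the lecture computed the table; `viterbi`, `INIT`, `TRANS`, and `EMIT` are hypothetical names.

```python
import math

INIT = {"F": 0.5, "L": 0.5}
TRANS = {"F": {"F": 0.7, "L": 0.3},
         "L": {"F": 0.3, "L": 0.7}}
EMIT = {"F": {"H": 0.5, "T": 0.5},
        "L": {"H": 0.9, "T": 0.1}}


def viterbi(symbols):
    """Return the most probable state path and its joint probability."""
    states = list(INIT)
    # v[i][k]: log probability of the best path ending in state k at position i.
    v = [{k: math.log(INIT[k]) + math.log(EMIT[k][symbols[0]]) for k in states}]
    back = []  # back[i][l]: best predecessor of state l at position i + 1
    for x in symbols[1:]:
        scores, pointers = {}, {}
        for l in states:
            best = max(states, key=lambda k: v[-1][k] + math.log(TRANS[k][l]))
            pointers[l] = best
            scores[l] = (v[-1][best] + math.log(TRANS[best][l])
                         + math.log(EMIT[l][x]))
        v.append(scores)
        back.append(pointers)
    # Trace back from the best final state.
    last = max(states, key=lambda k: v[-1][k])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    path.reverse()
    return "".join(path), math.exp(v[-1][last])


path, prob = viterbi("THHH")
print(path, prob)
```

For the sequence T H H H this recovers the path F L L L with probability of about 0.0268.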

Forward algorithm

• Gives us the sum of all paths through the model

• Recursion similar to Viterbi but with a twist
  – Rather than taking the maximum over states k at position i, we take the sum over all possible states k at i

$$f_k(i) = P(x_1 .. x_i, \pi_i = k)$$

$$f_l(i+1) = e_l(x_{i+1}) \sum_k f_k(i)\, a_{kl}$$

Forward with coin example

• Let aFF=aLL=0.7, aFL=aLF=0.3, a0=(0.5, 0.5)

• eL(H)=0.9

| State | (start) | T | H | H | H |
|---|---|---|---|---|---|
| B | 1 | 0 | 0 | 0 | 0 |
| F | 0 | 0.25 | 0.095 | ? | ? |
| L | 0 | 0.05 | 0.099 | ? | ? |
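The forward recursion for the coin example can be sketched as follows. It uses the parameters stated for this example; the names `forward`, `INIT`, `TRANS`, and `EMIT` are hypothetical, and no scaling is applied, so it is only suitable for short sequences.

```python
INIT = {"F": 0.5, "L": 0.5}
TRANS = {"F": {"F": 0.7, "L": 0.3},
         "L": {"F": 0.3, "L": 0.7}}
EMIT = {"F": {"H": 0.5, "T": 0.5},
        "L": {"H": 0.9, "T": 0.1}}


def forward(symbols):
    """Return the forward table f[i][k] and the total probability P(x)."""
    states = list(INIT)
    f = [{k: INIT[k] * EMIT[k][symbols[0]] for k in states}]
    for x in symbols[1:]:
        prev = f[-1]
        # Sum over all predecessor states k instead of taking the maximum.
        f.append({l: EMIT[l][x] * sum(prev[k] * TRANS[k][l] for k in states)
                  for l in states})
    return f, sum(f[-1].values())


table, total = forward("THHH")
for i, column in enumerate(table, start=1):
    print(i, column)
print("P(x) =", total)
```

Running it prints every column of the table, including the entries left as "?" for the reader.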

Forward-Backward algorithm

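We wish to calculate $P(\pi_i = k \mid x)$

$$P(x, \pi_i = k) = P(x_1 .. x_i, \pi_i = k)\, P(x_{i+1} .. x_L \mid \pi_i = k) = f_k(i)\, b_k(i)$$

$$P(\pi_i = k \mid x) = \frac{f_k(i)\, b_k(i)}{P(x)}$$

where $b_k(i)$ is the backward variable and $P(x)$ is the total probability of the sequence, obtained from the forward algorithm

We calculate $b_k(i)$ like $f_k(i)$, but starting at the end of the sequence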
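A minimal sketch of the forward and backward passes and the resulting posterior state probabilities, using the coin-example parameters from this deck and assuming no end state (so the backward variable is initialised to 1 at the last position). All function and variable names are hypothetical, and no scaling is applied.

```python
INIT = {"F": 0.5, "L": 0.5}
TRANS = {"F": {"F": 0.7, "L": 0.3},
         "L": {"F": 0.3, "L": 0.7}}
EMIT = {"F": {"H": 0.5, "T": 0.5},
        "L": {"H": 0.9, "T": 0.1}}
STATES = list(INIT)


def forward(symbols):
    """f[i][k] = P(x_1..x_i, pi_i = k)."""
    f = [{k: INIT[k] * EMIT[k][symbols[0]] for k in STATES}]
    for x in symbols[1:]:
        prev = f[-1]
        f.append({l: EMIT[l][x] * sum(prev[k] * TRANS[k][l] for k in STATES)
                  for l in STATES})
    return f


def backward(symbols):
    """b[i][k] = P(x_{i+1}..x_L | pi_i = k), filled in from the end."""
    b = [{k: 1.0 for k in STATES}]
    for x in reversed(symbols[1:]):
        nxt = b[0]
        b.insert(0, {k: sum(TRANS[k][l] * EMIT[l][x] * nxt[l] for l in STATES)
                     for k in STATES})
    return b


def posteriors(symbols):
    """P(pi_i = k | x) = f_k(i) * b_k(i) / P(x) for every position i."""
    f, b = forward(symbols), backward(symbols)
    px = sum(f[-1].values())
    return [{k: f[i][k] * b[i][k] / px for k in STATES}
            for i in range(len(symbols))]


post = posteriors("THHH")
for i, column in enumerate(post, start=1):
    print(i, column)
```

At every position the posterior probabilities over states should sum to 1, which is a convenient sanity check.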

Posterior decoding

• We can use the forward-backward algorithm to define a simple state sequence, as in Viterbi
• Or we can use it to look at ‘composite states’
  – Example: a gene prediction HMM
  – Model contains states for UTRs, exons, introns, etc. versus noncoding sequence
  – A composite state for a gene would consist of all the above except for noncoding sequence
  – We can calculate the probability of finding a gene, independent of the specific match states

$$\hat{\pi}_i = \arg\max_k P(\pi_i = k \mid x)$$

Parameter estimation

• Design of model (specific to application)
  – What states are there?
  – How are they connected?
• Assigning values to
  – Transition probabilities
  – Emission probabilities

Model training

• Assume the states and connectivity are given
• We use a training set from which our model will learn the parameters θ
  – An example of machine learning
  – The likelihood is the probability of the data given the model
  – Calculate likelihood assuming the n sequences $x^j$, j=1..n, in the training set are independent

$$l(x^1, .., x^n \mid \theta) = \log P(x^1, .., x^n \mid \theta) = \sum_{j=1}^{n} \log P(x^j \mid \theta)$$

When state sequence is known

• Maximum likelihood estimators
• Adjusted with pseudocounts

$A_{kl}$ = observed number of transitions from k to l

$E_k(b)$ = observed number of emissions of symbol b in state k

$$\hat{a}_{kl} = \frac{A_{kl}}{\sum_{l'} A_{kl'}} \qquad \hat{e}_k(b) = \frac{E_k(b)}{\sum_{b'} E_k(b')}$$
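When the state path is labelled, estimation reduces to counting and normalising. The sketch below adds a constant pseudocount to every count before normalising, which is one common convention and an assumption here; the training pair is the first labelled coin sequence shown earlier in the deck, and `estimate_parameters` is a hypothetical name.

```python
from collections import Counter


def estimate_parameters(symbols, states, pseudocount=1.0,
                        state_names="FL", alphabet="HT"):
    """Estimate a_kl and e_k(b) from one labelled sequence by counting."""
    # A[(k, l)]: observed transitions k -> l; E[(k, b)]: emissions of b in k.
    A = Counter(zip(states, states[1:]))
    E = Counter(zip(states, symbols))
    a_hat, e_hat = {}, {}
    for k in state_names:
        a_total = sum(A[(k, l)] + pseudocount for l in state_names)
        e_total = sum(E[(k, b)] + pseudocount for b in alphabet)
        a_hat[k] = {l: (A[(k, l)] + pseudocount) / a_total for l in state_names}
        e_hat[k] = {b: (E[(k, b)] + pseudocount) / e_total for b in alphabet}
    return a_hat, e_hat


symbols = "HTTHHTHHHTHHHHHTHHTHTTHTTHTTH"
states = "FFFFFFFLLLLLLLLFFFFFFFFFFFFFL"
a_hat, e_hat = estimate_parameters(symbols, states)
print(a_hat)
print(e_hat)
```

Setting `pseudocount=0.0` gives the plain maximum likelihood estimators, at the cost of zero probabilities for anything unseen in the training data (and a division by zero if a state never occurs).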

When state sequence is unknown

• Baum-Welch algorithm
  – Example of a general class of EM (Expectation-Maximization) algorithms
  – Initialize with a guess at akl and ek(b)
  – Iterate until convergence
    • Calculate likely paths with current parameters
    • Recalculate parameters from likely paths
  – Akl and Ek(b) are calculated from posterior decoding (i.e. forward-backward algorithm) at each iteration
  – Can get stuck on local optima
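The iteration can be sketched for a single short training sequence as follows. This is a simplified illustration rather than a production implementation: it uses no scaling or pseudocounts, keeps the initial distribution fixed, and starts from an arbitrary guess. The training sequence is the first coin sequence shown earlier in the deck, and all names and starting values are assumptions.

```python
import math


def forward(x, init, trans, emit):
    f = [{k: init[k] * emit[k][x[0]] for k in init}]
    for s in x[1:]:
        f.append({l: emit[l][s] * sum(f[-1][k] * trans[k][l] for k in init)
                  for l in init})
    return f


def backward(x, init, trans, emit):
    b = [{k: 1.0 for k in init}]
    for s in reversed(x[1:]):
        b.insert(0, {k: sum(trans[k][l] * emit[l][s] * b[0][l] for l in init)
                     for k in init})
    return b


def baum_welch_step(x, init, trans, emit):
    """One EM iteration; returns new parameters and the old log likelihood."""
    f = forward(x, init, trans, emit)
    b = backward(x, init, trans, emit)
    px = sum(f[-1].values())
    # Expected counts A_kl and E_k(b) under the current parameters.
    A = {k: {l: 0.0 for l in init} for k in init}
    E = {k: {s: 0.0 for s in emit[k]} for k in init}
    for i, s in enumerate(x):
        for k in init:
            E[k][s] += f[i][k] * b[i][k] / px
            if i + 1 < len(x):
                for l in init:
                    A[k][l] += (f[i][k] * trans[k][l] * emit[l][x[i + 1]]
                                * b[i + 1][l] / px)
    # Re-estimate parameters by normalising the expected counts.
    new_trans = {k: {l: A[k][l] / sum(A[k].values()) for l in init} for k in init}
    new_emit = {k: {s: E[k][s] / sum(E[k].values()) for s in E[k]} for k in init}
    return new_trans, new_emit, math.log(px)


x = "HTTHHTHHHTHHHHHTHHTHTTHTTHTTH"
init = {"F": 0.5, "L": 0.5}
trans = {"F": {"F": 0.8, "L": 0.2}, "L": {"F": 0.2, "L": 0.8}}   # initial guess
emit = {"F": {"H": 0.5, "T": 0.5}, "L": {"H": 0.7, "T": 0.3}}    # initial guess
log_likelihoods = []
for _ in range(20):
    trans, emit, ll = baum_welch_step(x, init, trans, emit)
    log_likelihoods.append(ll)
print(log_likelihoods[0], log_likelihoods[-1])
```

The log likelihood should never decrease from one iteration to the next, but a different starting guess may converge to a different local optimum.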

Preview: Profile HMMs

Reading assignment

• Continue studying:
  – Durbin et al. (1998) pgs. 46-79 in Biological Sequence Analysis