GraphBolt: Streaming Graph Approximations on Big Data · vertex centrality used to rank websites in...

Transcript of GraphBolt: Streaming Graph Approximations on Big Data · vertex centrality used to rank websites in...

GraphBolt: Streaming Graph Approximations on Big DataMiguel E. Coimbra

INESC-ID/IST, Universidade de Lisboa

Lisbon, Portugal

Renato Rosa

INESC-ID/IST, Universidade de Lisboa

Lisbon, Portugal

Sérgio Esteves

INESC-ID/IST, Universidade de Lisboa

Lisbon, Portugal

Alexandre P. Francisco

INESC-ID/IST, Universidade de Lisboa

Lisbon, Portugal

Luís Veiga

INESC-ID/IST, Universidade de Lisboa

Lisbon, Portugal

AbstractGraphs are found in a plethora of domains, including online social

networks, theWorldWideWeb and the study of epidemics, to name

a few. With the advent of greater volumes of information and the

need for continuously updated results under temporal constraints,

it is necessary to explore novel approaches that further enable

performance improvements.

In the scope of stream processing over graphs, we research the

trade-offs between result accuracy and the speedup of approximate

computation techniques. We believe this to be a natural path to-

wards these performance improvements. Herein we present Graph-

Bolt, through which we conducted our research. It is an innovative

model for approximate graph processing, implemented in ApacheFlink.

We analyze our model and evaluate it with the case study of

the PageRank algorithm [21], perhaps the most famous measure of

vertex centrality used to rank websites in search engine results. In

light of our model, we discuss the challenges driven by relations

between result accuracy and potential performance gains.

Our experiments show that GraphBolt can reduce computational

time by over 50% while achieving result quality above 95% when

compared to results of the traditional version of PageRank without

any summarization or approximation techniques.

1 IntroductionWorking with graphs in the context of stream processing is a sig-

nificant shift in paradigm when compared to traditional graph

processing. One must consider incoming graph updates, such as

vertex and edge additions and removals. Conceptually, the graph

itself could be modeling dynamic systems such as social networks,

recommendation systems or the monitoring of the movement of

people and vehicles, where the ability to quickly react to change

would allow for useful detection of trends.

There are contexts in which approximate results, under specific

error bounds, would be as equally acceptable as exact results (of-

ten better if returned more promptly), for application users and

decision makers. Approximate results may allow for considerable

improvements in speed (e.g., reduced latency and processing time,

increased throughput) and, equally important, resource efficiency

(e.g., lower resource usage, cloud computing costs, energy foot-

print). Traditionally, this has been addressed in the literature via

techniques such as:

• Graph sampling [14]: has many purposes, among which main-

taining a summarization of the graph based on a sample. This is

relevant both in case the complete graph is known (the sample

is representative of the graph in this case) or its representation

is incomplete (for which the sampling serves as an enabler of

exploration).

• Task dropping [23], [13]: consists in dropping parts of a parti-

tioned global processing task list and estimating the introduced

error. Some instances of task dropping use statistical models to

extrapolate results with a predefined maximum error bound.

• Load shedding [2]: requires assuming a specific shedding coor-

dination scheme in distributed systems [28], and may also be em-

ployed in scenarios such as data warehouses and databases [27].

Analyzing and developing novel techniques for approximate

graph processing can strongly benefit many systems and applica-

tions. This would pave the road for refined high-level optimizations,

in the form of Service-Level Agreements (SLAs) for graph process-

ing, with different tiers of accuracy and resource efficiency. In

this paper, we explore the trade-offs between result accuracy and

speedup in graph processing, leveraging approximate computation

to obtain gains in speed and resource efficiency.

We analyze the potential advantages of our model by evaluat-

ing it (in the context of our implementation) with a relevant and

representative case study: the PageRank algorithm [21]. In light of

our model, we discuss the challenges driven by relations between

result accuracy and potential performance gains.

The rest of the paper is organized as follows. Section 2 provides

information on the particular method of PageRank we used for our

analysis and evaluation. An overview of the GraphBolt model is

provided in Sec. 3. Section 4 describes the architecture. In Sec. 5

we present the experimental evaluation, followed by an analysis

of the improvements brought about by our model. Section 6 an-

alyzes existing state-of-the-art techniques to achieve the several

sub-functionalities that we employ. Lastly, we summarize our con-

tribution as well as a future research direction in Sec. 7.

2 PageRank through the power methodThe PageRank algorithm [21] initializes all vertices with the same

value. We focus on a vertex-centric implementation of PageRank,

where for each iteration, each vertex u sends its value (divided by

its outgoing degree) through each of its outgoing edges. A vertex vdefines its score as the sum of values received from its incoming

edges, multiplied by a constant factor β and then summed with a

constant value (1 − β) with 0 ≤ β ≤ 1. PageRank, based on the

random surfer model, uses β as a dampening factor. If the PageRank

of a vertex (also referred to as the score or rank of a vertex) is the

probability that a web surfer would visit the page represented by the

arX

iv:1

810.

0278

1v1

[cs

.DC

] 5

Oct

201

8

vertex, then the β factor is the chance of the surfer visiting another

random page. Iterative versions of PageRank usually terminate

when a maximum number of iterations has been reached, or when

the values have converged within a predefined limit [9].

PageRank has been built on previous definitions of vertex cen-

trality. It has been used to determine the relevance of vertices

representing web pages and its concept has been applied to other

graphs such as social networks. In our use-case, we consider the

computation of PageRank through the power method [6], which is

in itself an approximated PageRank algorithm. For our work, this

means that whether one considers one-time offline processing or

online processing over a stream of graph updates, the underlying

computation of PageRank is an approximate numerical version well

known in the literature. This distinction is important, for when we

state GraphBolt enables approximate computing, we are consid-

ering a potential for applicability to a scope of graph algorithms,

such as algorithms for computing eigenvector based centralities

and optimization algorithms for finding communities/clusters in

networks. Whether the specific graph algorithm itself incurs nu-

merical approximations (such as the power method) or not, that isorthogonal to our model, which remains a valid contribution in

either case.

3 GraphBolt ModelOurmodel aims to establish a computationally-conservative scheme.

The goal is to enable different approximate computation strategies

in exchange for result accuracy. When processing a stream of new

edges, the parameters of our model highlight a subset K of the

graph’s vertices, known as hot vertices. The aim of this set is to re-

duce the number of processed vertices as close as possible to O(K).These vertices are to be used for updating current graph algorithm

values. In Sec. 5 we go over our results showing that the PageRank

algorithm benefits from restricting computation around this set of

hot vertices.

3.1 Not all vertices are equalIn order to use only a subset K of the vertices, one will necessarily

employ approximate computing techniques which make use of a

fraction of the total data. For our case, we implemented in GraphBolt

a summarized version [17] of the aforementioned PageRank powermethod. In this version, there is an aggregating vertex B. We refer

to B as the big vertex – it is a single vertex which represents all

the vertices outside K (our model considers outside vertices are not

worth recomputing). For the original graphG = (V ,E) and vertex

setK , we define a summary graph G = (V, E), whereV = K∪{B}.We define E = EK ∪EB , where EK = {(u,v) ∈ E : u,v ∈ K}, whichis the set of edges with both source and target vertices contained

in K . Lastly, we define EB = {(w, z) ∈ E : w < K , z ∈ K} asthe set of edges with sources contained inside B and target in K .Conceptually, this consists in replacing all vertices of G which are

not in K by a single big vertex B and adapting edges accordingly.

The summary graph G does not contain vertices outside of K .In its numerical version, the PageRank algorithm normally ex-

ecutes a certain number of iterations. In each iteration, the score

of each vertex is updated. It is relevant to retain that for each iter-

ation, the rank of a vertex v depends on ranks received through

its incoming edges. By definition, the rank of the big vertex B is

irrelevant – B represents all vertices whose rank is not expected

to change in a significant way. With this scheme, the contribution

of each vertex v < K (and therefore represented in B) is constantbetween iterations, so it can be registered initially and used after-

ward. As a consequence, the summary graph G does not contain

edges targeting vertices represented in B. However, their existencemust be recorded: even if the outgoing edges of K are irrelevant

for the computation, they still matter for the vertex degree, which

influences the emitted scores of the vertices in K . Despite the factthose edges are being discarded when building G, the summarized

computation must occur as if nothing had happened conceptually.

To ensure correctness pertaining this fact, for each edge (u,v) ∈ EK ,we store val((u,v)) = 1/dout (u) with dout (u) as the out-degree ofu before discarding.

It is also necessary to record the contribution of all the vertices

fused together in B. To do so, for each edge whose source w is

represented in B and target z is in K , we store the rank that would

originally be sent fromw as val((w, z)) = ws/dout (w) wherews is

the rank of w and the out-degree of w is defined as dout (w). Therank contribution of B as a single vertex in G is then represented

as Bs and defined as:

Bs =∑w

val((w, z)), (w, z) ∈ EB (1)

This way, the fusion of vertices into B is performed while pre-

serving the global influence of ranks emitted from vertices in B to

vertices in K .Our model embodies the intuition that vertices which receive

more updates have a greater probability of having their measured

score1changed in between execution points [3]. Their neighboring

vertices are also likely to incur changes, but as we consider vertices

further and further away from K , ranks are likely to remain the

same [2, 8] (as far as we know, this applies to PageRank and most

likelymany other vertex-centered algorithms like randomwalk [26]

and greedy clustering methods).

GraphBolt aims to enable approximate computing on a stream Sof incoming edge updates, in this case tested with the PageRank

power method algorithm. While some of this centrality algorithm’s

details had to be considered to employ it with our model, Graph-

Bolt’s model has the potential to be applied to other classes of graph

algorithms using the same principles.

Our contribution strikes a balance between two opposite graph

processing strategies for when a query is to be served. Conceptually,

they are: a) recomputing the whole graph; b) returning a previous

query result without incurring any type of additional computation.

While the former is obviously much more time-consuming, it has

the property of maintaining result accuracy. The latter, on the

other hand, would quickly lower the accuracy of the algorithm’s

results. Throughout this document, we use the term query to state

that a graph algorithm’s results (PageRank as our case study) are

required. So when it is said that a query is executed, it means that

the algorithm (PageRank) was executed, independently of it being

the complete or summarized version.

1Rank in the case of PageRank, but potentially-different metrics in the case of other

graph processing algorithms.

2

3.2 Incrementally building the modelThe model is based on techniques such as defining and determining

a confidence threshold for error in the calculation [1], graph sam-

pling [14] and other hybrid approaches in order to find an effective

and efficient mix. GraphBolt registers updates as they arrive for

both statistical and processing purposes. Vertex and edge changes

are kept until updates are formally applied to the graph. Until they

are applied, statistics such as the total change in number of ver-

tices and edges (with respect to accumulated updates) are readily

available. When applying the generic concepts of our technique,

a useful insight is that most likely, not all vertex scores will need

to be recalculated (as shown later in our results). In our model,

generating the subset K of a graph G = (V ,E) depends on three

parameters (r ,n,∆) used in a two-step procedure. In this execution

model, from the client perspective, we consider a query to be an

updated view of the algorithm information pertaining G (in our

use-case, the information is the ranking of vertices). As each indi-

vidual query represents an important instant as far as computation

is concerned, we refer to each query as a measurement point t .

1. Update ratio threshold r. This parameter defines the minimal

amount of change in a vertex u’s degree in order to include uin K . Parameters r and n are parameters of GraphBolt’s generic

model to harness a graph algorithm’s heuristics to approximate

a result. In the case of PageRank, we use the vertex degree.

We adopt the notation where the set of neighbors of vertex

u in a directed graph at measurement instance t is written as

Nt (u) = {v ∈ V : (u,v) ∈ E}. We further write that the de-

gree of vertex u in measurement instance t (during GraphBolt’s

processing) is written as dt (u) = |Nt (u) |. The function d (u,v)represents the length (number of edges) of the minimum path

between vertices u and v . If it is subscripted with t , we havedt (u,v) which represents the same concept but at measurement

instance t . Note that this does not imply maintaining shortest

paths between vertices (that would be a whole different problem).

Our technique with respect to this is based on a vertex-centric

breadth-first neighborhood expansion. Let us define as Kr theset of vertices which satisfy parameter r , where dt (u) is thedegree of vertex u, t represents the current measurement point

and t − 1 is the previous measurement point:2

Kr = {u : | dt (u)dt−1 (u)

− 1| > r } (2)

2. Neighborhood diameter n. This parameter is used as an ex-

pansion around the neighborhood of the vertices in Kr . It aims

to capture the locality in graph updates, i.e., those vertices neigh-

boring the ones beyond the threshold, and as such still likely to

suffer relevant modifications when vertices inK are recalculated

(attenuating as distance increases). For every vertex u ∈ Kr , wewill expandKr to include every additional vertexv satisfying pa-

rameter n, which is essentially an upper limit on the expansion

diameter:

Kn = {v : dt (u,v) ≤ n,u ∈ Kr ,v ∈ Vt \ Kr } (3)

Vt is the set of vertices of the graph at measurement point t .3. Vertex-specific neighborhood extension ∆. This last param-

eter allows users to extend the functionality of n by fine-tuning

2A new vertex does not have a defined previous rank – it is included in K in that case.

neighborhood size on a per-vertex basis. This is achieved by

accounting for specific underlying algorithm’s properties which

may be used as heuristics. This further allows to update the

vertex ranks around those vertices that while not included by

Eq. 2 or Eq. 3, are neighbors to vertices subject to change. In the

context of PageRank, we use the relative change of rank in a

vertex between the two consecutive measurement points t andt − 1. We thus have an extension of the expansion diameter set

based on ∆:

K∆ = {v : dt (u,v) ≤ f∆ (v) ,u ∈ Kn ,v ∈ Vt \ {Kr ∪ Kn }} (4)

where f∆ (v) represents the ∆ expansion function

f∆ (v) =1

logdlog

(n +

d vs∆dt (v)

)(5)

and vs is the score3of vertex v and d the average degree of the

currently accumulated vertices with respect to stream S . This al-lows us to have a fine-grained neighborhood expansion starting

at v and limited by ∆ on the maximum contribution of the score

of v . The intuition here is that vertex v would contribute to the

PageRank of its immediate (away from v by 1 hop) neighbors

(by virtue of its outgoing edges) with a value ofvs

dout (v) . For itsneighbors’ neighbors (away from v by 2 hops), the influence of

v would be further diminished (because the score contribution

would now bevs

dout (v)dout (u) where u is a direct neighbor of v).

Additional expansions would further dilute the contribution that

v could possibly have. For example, when evaluating GraphBolt

with a bound of ∆ = 0.1, we keep considering further neighbor-

hood expansion hops fromv until the contribution fromv drops

below 10% of its score.

As a result, we then have a set of hot vertices K = Kr ∪Kn ∪K∆

which is used as part of the graph summary construct [17], written

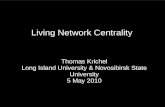

here as G = (V, E)).An example of how the parameters influence the selection of

vertices is depicted in Fig. 1. The left side represents a zoom of a

small portion ofG (which may be composed of millions of vertices).

Part a) shows the vertices whose amount of change satisfied the

threshold ratio r , leading to Kr : in this case, only the top vertex was

included, for which its contour is a solid line with a gray fill. Part

b) then shows the usage of the neighborhood expansion parameter

n = 1 over the vertex of a): note how the two middle vertices are

now gray as well (the one included in the previous part is colored

black). This makes up Kr ∪ Kn . Lastly, part c) accounts for the per-vertex neighborhood extension parameter ∆ and represents the set

of hot vertices (for a very close zoom over a graph with potentially

millions of vertices) K = Kr ∪Kn ∪K∆. This is depicted by coloring

the bottom vertex in gray and the remaining ones in black (they

are already part of K ). Some dashed arrows remain to illustrate that

the vertices on the other end of the edges were not included in K .

4 GraphBolt ArchitectureThe architecture of GraphBolt was designed while taking in account

three information types which are inherent to the work flow. A vi-

sual depiction of the work flow is presented in Fig. 2. The GraphBolt

module will constantly monitor one or more streams of data and

3PageRank score in this case, it can depend on the algorithm we are approximating.

3

Algorithm 1 GraphBolt execution structure

1: OnStart

2: repeat3: msg← TakeMessage(stream)

4: if msg is Add then RegisterAddEdge(msg)

5: else if msg is Remove then RegisterRemoveEdge(msg)

6: else if msg is Query then7: update?← BeforeUpdates(graphUpdates, graphUpdateStatistics)

8: if update? then9: ApplyUpdates(graphUpdates)

10: end if11: response← OnQuery(id, msg, graph, updates, statistics, infoMap, config)

12: if response = Repeat-last-answer then13: newRanks← previousRanks

14: else if response = Compute-approximate then15: newRanks← ComputeApproximate(graph, previousRanks)

16: else if response = Compute-exact then17: newRanks← ComputeExact(graph)

18: end if19: OutputResult(newRanks)

20: OnQueryResult(id, msg, response, graph, summaryGraph, newRanks, jobStatistics)

21: end if22: until stopped23: OnStop

∅a)Kr

b)Kr ∪ Kn

c)Kr ∪ Kn ∪ K∆

Figure 1. From left to right: evolution of K due to GraphBolt model

parameters.

track the changes made to the graph. When a client requests (query)the data (in this case, PageRank results), the GraphBolt module will

execute the request, which will consist of submitting a Flink jobto a cluster of machines. Local execution on the GraphBolt module

machine is also possible (e.g. a single high-capacity machine). In

our experiments, we trigger the incorporation of updates into the

graph (and then produce PageRank results) whenever a client query

arrives.

The main elements of the flow of information in an execution

scenario are:

• Initial graph G. It represents the original state of the problem

domain, upon which updates and queries will be performed. Gwill thus require intensive computations.

• Stream of updates S. Our model of updates could be the re-

moval e− or addition e+ of edges and the same for vertices

(v−,v+). We make as little assumptions as possible regarding

S : the data supplied needs not respect any defined order. To eval-

uate our hypothesis, however, we restricted our problem space

to one composed exclusively of the edge addition operation e+.• Result R. Information produced by the system as an answer to

the queries received in S . With PageRank as a case study of our

module, the results are a ranking R of vertices.

Figure 2. A user’s conceptual interaction with GraphBolt. Com-

putation could occur on a remote Flink cluster or locally on the

user’s machine

We aim to enable programmers to define fine-grained logic for

the approximate computation, if necessary and/or desired. This is

achieved through the usage of User-Defined Functions (UDFs)provided in GraphBolt. As a design decision, there are five distinct

UDFs which are articulated in a fixed program structure compris-

ing GraphBolt. The API of GraphBolt consists of these five ordered

UDFs which specify the execution logic that will guide the approxi-

mate processing. These UDFs are key points in execution where

important decisions should take place (e.g., how to apply updates,

how to perform monitoring tasks). Users who need the additional

behavior control need only customize the model by implementing

their own UDF(s). Overall, this approach has the advantage of ab-

stracting away the API’s complexity, while still empowering power

users who wish to create fine-tuned policies. We present in Alg. 1

these different UDFs and the structures and the workflow when

serving updates and queries to the graph. For simple rules, these

functions don’t need to be programmed, as we supply the imple-

mentation with parameters for the simplest rules such as threshold

comparisons, fixed values, intervals and change ratios.

4

1. OnStart. A preparatory function with the goal of setting up

resources such as files, databases or performing other tasks.

2. BeforeUpdates. Executed after a query q is received, but before

graph updates are applied. Its purpose as a UDF is to enable

programmers to choose how to process the graph updates as

a function of the magnitude of their impact. It exposes: the

sequence of graph operations which were pending since the last

computation; statistics such as the number of changed vertices

and the total amount of vertices and edges in the graph.

3. OnQuery. This function is called every time a query q arrives.

Each call is uniquely identified throughout GraphBolt’s lifetime.

The query is served after any processing that may have taken

place in BeforeUpdates. This UDF is defined in the API to re-

turn an action indicator that will dictate how the query is to be

served. It could be done by: a) by returning the last calculated re-sult; b) performing an approximation of the result and returning

it; c) providing an exact answer after a complete recalculation

of the result.

4. OnQueryResult. Invoked after q’s response has been processed.This UDF is aware of the action indicator returned by OnQuery.

It has access to the response’s results, execution statistics (such

as total execution time, physical space, network traffic, among

others) and details specific to the approximation technique used

(in the case of PageRank, the graph summary over which an

approximation was computed).

5. OnStop. Symmetrical to OnStart, it is responsible for (if nec-

essary) proper resource clearing and post-processing.

To employ ourmodule, the user can express the algorithm execution

using Flink dataflow programming primitives. They will be fed

the updated graph and the processing infrastructure of Flink.

Implementation. Apache Flink [15] is a framework built for dis-

tributed stream processing4. It supports many APIs, among which

Gelly, its official graph processing library. The framework fea-

tures algorithms such as PageRank, Single-Source Shortest Paths

and Community Detection, among others. Overall, it empowers

researchers and engineers to express graph algorithms in familiar

ways such as the gather-sum-apply or the vertex-centric approachof Google Pregel [19], while providing a powerful abstraction with

respect to the underlying distributed computations. We employ

Flink’s mechanism for efficient dataflow iterations [16] with in-

termediate result usage.5

5 EvaluationExperiments were performed on an SMPmachine with 256 GB RAM

and 8 Intel(R) Xeon(R) CPU E7- 4830 @ 2.13GHz with eight cores

each. Each dataset execution was performed with a parallelism of

one6and with either 4096MB or 8192MB of memory, depending

on the dataset. This was done to simulate the conditions offered

by commodity cloud [25] providers such as Amazon, Azure or

Google and so that any overhead introduced by GraphBolt is not

masked away by network latency or transfer times as it could be a

distributed deployment of less powerful instances.

4https://flink.apache.org/5https://ci.apache.org/projects/flink/flink-docs-stable/dev/batch/iterations.html#delta-iterate-operator6https://ci.apache.org/projects/flink/flink-docs-stable/dev/parallel.html

Our execution scenario is based on computing an initial PageR-

ank (not summarized) and then processing a stream S of chunks of

incoming edge additions in GraphBolt. For each chunk received in

GraphBolt we: 1) integrate the new edges in the graph; 2) compute

the summarized graph G = (V, E) as described in Sec. 3) and exe-

cute PageRank over it. Henceforth, we say that we are processing

a query when a PageRank version (summarized or complete) is

executed after integrating a chunk of updates.

To reduce the variability in our experiments, they were set up

so that the stream S of edge additions is such that the number Q of

queries for each dataset and parameter combination is always the

same: fifty (Q = 50). For each dataset and stream size, we defined

(offline) a tab-separated file containing the stream of edge additions.

Additionally, for each dataset, streams were generated by uniformly

sampling from the edges in the original dataset file. Different stream

edge counts were used: |S| = {5000, 10000, 20000, 40000}. This has the

consequence of allowing for scenarios with different edge densities

|S |/Q (for 5000 edges there are 100 edges per update, for 20000

there are 400 and so on).

For each dataset and stream S of size Q , each combination of

parameters (r ,n,∆) is tested against a replay of the same stream.

Essentially, each execution (representing a unique combination of

parameters) will begin with a complete PageRank execution fol-

lowed by Q = 50 summarized PageRank executions. This initial

computation represents the real-world situation where the results

have already been calculated for the whole graph. In such a situa-

tion, one is focused on the incoming updates. For each dataset and

stream S , we also execute a scenario which does not use the pa-

rameters: it starts likewise with a complete execution of PageRank,

but the complete PageRank is executed for all Q queries. This is to

generate ground-truth PageRank results against which to measure

accuracy and performance of the summarized implementation on

the GraphBolt model.

It is important to note that many datasets such as web graphs

are usually provided in an incidence model [5]. In this model, the

out-edges of a vertex are provided together sequentially. This may

lead to an unrealistically favorable scenario, as it is a property that

will not necessarily hold in online graphs and which may benefit

performance measurements. To account for this fact, we tested the

same parameter combinations with a previous shuffling of stream S(a single shuffle was performed offline a priori so that the random-

ized stream is the same for different parameter combinations). This

increases the entropy and allows us to validate our model under

fewer assumptions.

5.1 DatasetsThe datasets’ vertex and edge counts are shown in Table 1, along

with the associated stream size of the corresponding figures herein

presented. We evaluate results over three four types of dataset: web

graphs, social networks, a publication citation network and a single

ego network. The web graphs and social networks were obtained

from the Laboratory for Web Algorithmics [4], [5]. CitHep-Ph [18]is the high energy physics phenomenology citation graph. The

single Facebook-ego network [29] we used for testing was made

of user-to-user links of the Facebook New Orleans network. This

suite of datasets ensured a heterogeneous representation of real-

world network examples.

5

Dataset |V| |E| |S|

cnr-20001 325,557 3,216,152 40,000

eu-20051 862,664 19,235,140 20,000

Cit-HepPh2 [18] 34,546 421,576 40,000

enron2 69,244 276,143 40,000

dblp-20102 326,186 1,615,400 40,000

amazon-20082 735,323 5,158,388 20,000

Facebook-ego [29] 63,731 1,545,686 40,000

Table 1. Except Cit-HepPh and Facebook-ego, all datasets were re-trieved from the Laboratory for Web Algorithmics [5]. Web graphs

are indicated by1and social networks by

2.

5.2 Assessment MetricsWe measure the results of our approach in terms of: a) obtainedexecution speedup; reduction in number of processed b) verticesand c) edges; d) ability to delay computation in light of result accu-

racy. Accuracy in our case takes on special importance and requires

additional attention to detail. The PageRank score itself is a mea-

sure of importance and we wish to compare summarized execution

rankings against the complete version’s ranks. As such, what is

desired is a technique to compare rankings.

Rank comparison can incur different pitfalls. If we order the list

of PageRank results in decreasing order, only a set of top-vertices

is relevant. After a given index in the ranking, the centrality of the

vertices is so low that they are not worth considering for compar-

ative purposes. But where to define the truncation? The decision

to truncate at a specific position of the rank is arbitrary and leads

to the list being incomplete. Furthermore, the contention between

ranking positions is not constant. Competition is much more in-

tense between the first and second-ranked vertices than between

the two-hundred and two-hundred and first.

We employed Rank-Biased-Overlap (RBO) [30] as a useful eval-

uation metric (representing relative accuracy) developed to deal

with these inherent issues of rank comparison. RBO has useful

properties such as weighting higher ranks more heavily than lower

ranks, which is a natural match for PageRank as a vertex centrality

measure. It can also handle rankings of different lengths. This is in

tune with the output of a centrality algorithm such as PageRank.

The RBO value obtained from two rank lists is a scalar in the inter-

val [0, 1]. It is zero if the lists are completely disjoint and one if they

are completely equal. While more recent comparison metrics have

been proposed [20], we believe they go beyond the scope of what

is required in our comparisons. Effectively, the quality of our result

accuracy is itself the result of a comparison (between sequences of

rank lists). As far as we know, and due to the specificity of our eval-

uation, we believe there is no established baseline in the literature

against which to compare our own ranking comparison results.

We chose parametrization values r = {0.10, 0.20, 0.30}, n ={0, 1} and ∆ = {0.01, 0.1, 0.9}, leading to 18 parameter combina-

tions for each pair of dataset and update stream. Performance-wise,

we test values of r associated to different levels of sensitivity to

vertex degree change (the higher the number, the less expected ob-

jects to process per query). With n = 0, we minimize the expansion

around the set Kr . For n = 1, we are taking a more conservative

approach regarding result accuracy. The higher the value of n is,

the higher the RBO (this is demonstrated in our results). The ∆values were chosen to evaluate individual different weight schemes

applied to vertex score changes.

Additionally, regarding evaluation of the rank list RBOs, for an

update density lower or equal to 200 edges per update, we used the

top 1000 ranks. Above the 200 edge density, we used the top 4000

ranks. Using a higher number of ranks for the RBO evaluation favors

a comparison of calculated ranks which has greater resolution, as

more vertices are being compared.

5.3 ResultsFor each dataset we present a mix of five or six results, as relevant,

to highlight upper and lower bounds of GraphBolt’s performance,

across relevant metrics as detailed next. These were drawn from

all the 18 experiments carried out varying all parameters, as de-

scribed earlier. This aims to demonstrate how GraphBolt is able to

consistently achieve very relevant speedups, while ensuring high

accuracy, and, at the lower end, its overhead is so small that it is

never worst than baseline Flink Gelly.Regarding the results in detail, they are tailored to illustrate

each of the most relevant metrics regardng GraphBolt operation:

a) summary graph vertices as a percentage of the original graph’s;

b) summary graph edges as a percentage of the original graph’s; c)RBO evolution as the number of executions increases (it starts with a

value of 1 as the complete version of PageRank is executed initially

whether we use the parameters r ,n and ∆ or not); d) obtainedspeedup for each execution (compared against the execution over

the complete graph).

For each of these four, the plots shown for each metric were

ordered by quality of the metric’s average value. The horizontalaxis is always the same: it is the sequence of queries from 1 up

to Q = 50. Furthermore, we note that all figures have an amount of

plots equal to the number of shown labels. Due to how the param-

eter combinations interact with the datasets, some combinations

produced extremely similar values, leading to overlapping plots.

An example of this is seen on the figures for dataset cnr-2000.cnr-2000: we evaluate this dataset under an entropy-intensive

scenario: not only do we shuffle the stream, we also set it to the

maximum size out of the possible values we contemplated. With

the exception of the eu-2005 and amazon-2008 datasets, all streamsizes were set to |S | = 40, 000. As shown in Figures 3 and 4 , there

was a tendency for decreasing size of the vertices and edges of the

summary graph G, albeit with some fluctuations on the number of

edges. The parameter combinations were the same for the lowest

three vertex and edge summary ratios for this dataset. The same

occurred with respect to the worst three vertex and edge summary

ratios. The RBO continuously decreased as shown in Fig. 5. Overall,

the different parameters led to different summarized graph sizes.

On this dataset, the worst speedup was above 4 as seen in Fig. 6.

eu-2005: For this dataset we focus on parameter combinations

for a fixed r = 0.10. It is interesting to observe in Fig. 7 and 8 the

two largest summary graph sizes: while n = 1 plays an impor-

tant role, the lower value of delta boosted the inclusion in K of

neighboring vertices. Figure 9 presents the evolution of RBO. As

expected, n = 1 led to higher RBOs. Overall, there was a tendency

for RBO decrease, which represents an accumulation of error of the

summarized version with respect to the complete executions. The

worst speedups obtained for this dataset were greater than 3 (and

6

5 10 15 20 25 30 35 40 45 5010.00%

0.50%

1.00%

1.50%

2.00%

2.50%

3.00%

Summaryvertex

coun

tas

afraction

oftotalvertices

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 3. cnr-2000 best three and worst three average vertex ra-

tios.

5 10 15 20 25 30 35 40 45 5010.0%

1.0%

2.0%

3.0%

4.0%

5.0%

6.0%

Summaryedge

coun

tas

afraction

oftotaledg

es

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 4. cnr-2000 best three and worst three average edge ratios.

5 10 15 20 25 30 35 40 45 501

95.49%

96.24%

96.99%

97.74%

98.50%

99.25%

100.00%

PageRan

ksimilarity

measure

RBO

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

Figure 5. cnr-2000 best three and worst three average RBOs.

this was obtained in the context of an accuracy-oriented parameter

configuration), as can be seen in Fig. 10.

enron: as can be seen in Figures 11 and 12, the most summa-

rized combinations of parameters all depended on n = 0 (it is a

5 10 15 20 25 30 35 40 45 501

5.00

6.00

7.00

8.00

9.00

10.00

11.00

12.00

Summarized

PageRan

kexecutionspeedu

p

r = 0.30, n = 0, ∆ = 0.900

r = 0.30, n = 1, ∆ = 0.900

r = 0.30, n = 1, ∆ = 0.100

r = 0.20, n = 0, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.100

Figure 6. cnr-2000 best three and worst three average speedups.

5 10 15 20 25 30 35 40 45 5010.00%

0.50%

1.00%

1.50%

2.00%

2.50%

3.00%

Summaryvertex

coun

tas

afraction

oftotalvertices r = 0.10, n = 0, ∆ = 0.900

r = 0.10, n = 0, ∆ = 0.100

r = 0.10, n = 0, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.100

r = 0.10, n = 1, ∆ = 0.010

Figure 7. eu-2005 subset of parameter combination average vertex

ratios.

5 10 15 20 25 30 35 40 45 5010.00%

0.50%

1.00%

1.50%

2.00%

2.50%

3.00%

Summaryedge

coun

tas

afraction

oftotaledg

es

r = 0.10, n = 0, ∆ = 0.900

r = 0.10, n = 0, ∆ = 0.100

r = 0.10, n = 0, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.100

r = 0.10, n = 1, ∆ = 0.010

Figure 8. eu-2005 subset of parameter combination average edge

ratios.

7

5 10 15 20 25 30 35 40 45 501

99.38%

99.49%

99.59%

99.69%

99.79%

99.90%

100.00%

PageRan

ksimilarity

measure

RBO

r = 0.10, n = 1, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.100

r = 0.10, n = 0, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.100

r = 0.10, n = 0, ∆ = 0.900

Figure 9. eu-2005 subset of parameter combination average RBOs.

5 10 15 20 25 30 35 40 45 501

3.00

4.00

5.00

6.00

7.00

8.00

Summarized

PageRan

kexecutionspeedu

p

r = 0.10, n = 0, ∆ = 0.100

r = 0.10, n = 0, ∆ = 0.900

r = 0.10, n = 0, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.100

Figure 10. eu-2005 subset of parameter combination average

speedups.

performance-oriented parameter value). It is interesting to note that

for this dataset, choosing a higher value of degree update ratio r isnot necessarily going to lead to a smaller summary graph G. Thesmaller the value of ∆, the more weight is given to individual vertex

score changes (as per Eq. 5). Figure 13 shows that the best RBOs,

achieved through conservative parameter combinations, were very

close to 100%, which is excellent regarding accuracy. Those same

parameter combinations led to extremely low speedup values as

seen in Fig. 14. This is positive, as it highlights the flexibility of our

model in terms of setting parameters for different strategies such

as optimizing result accuracy, or speedup, or trying to achieve a

balance.

Cit-HepPh: similar results compared to enron with respect to

the dimensions of the summary graph G, as shown in Figures 15

and 16. In Fig. 17 we see there was a far greater disparity in the

evolution of RBO between the best three and worst three parame-

ter combinations, with the three worst RBO parameter combina-

tions having similar values and an accentuated decline. In terms

of speedup, this dataset’s behavior matched our formulation – the

performance-oriented parameter combinations achieved the high-

est speedups. This is shown in Fig. 18.

5 10 15 20 25 30 35 40 45 50110%

20%

30%

40%

50%

60%

70%

80%

90%

Summaryvertex

coun

tas

afraction

oftotalvertices

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 11. enron best three and worst three average vertex ratios.

5 10 15 20 25 30 35 40 45 501

0%

20%

40%

60%

80%

100%

Summaryedge

coun

tas

afraction

oftotaledg

es

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 12. enron best three and worst three average edge ratios.

5 10 15 20 25 30 35 40 45 50194.3%

95.2%

96.2%

97.1%

98.1%

99.0%

100.0%

PageRan

ksimilarity

measure

RBO

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

Figure 13. enron best three and worst three average RBOs.

dblp-2010: this dataset showed the sharpest decline tendency

in the summary graph’s size as the stream progressed, out of all

the datasets. We present this in Fig. 19 and 20. Still, it achieved

very good results with a relative RBO above 95% (error under 5%),

as shown in Fig. 21 for both the best and the worst RBO results.

8

5 10 15 20 25 30 35 40 45 501

1.00

2.00

3.00

4.00

5.00

6.00

Summarized

PageRan

kexecutionspeedu

p

r = 0.10, n = 0, ∆ = 0.100

r = 0.20, n = 0, ∆ = 0.100

r = 0.10, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 14. enron best three and worst three average speedups.

5 10 15 20 25 30 35 40 45 501

20%

30%

40%

50%

60%

70%

80%

Summaryvertex

coun

tas

afraction

oftotalvertices

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 15. Cit-HepPh best three and worst three average vertex

ratios.

5 10 15 20 25 30 35 40 45 501

0%

20%

40%

60%

80%

Summaryedge

coun

tas

afraction

oftotaledg

es

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 16. Cit-HepPh best three and worst three average edge

ratios.

5 10 15 20 25 30 35 40 45 50189.1%

90.9%

92.8%

94.6%

96.4%

98.2%

100.0%

PageRan

ksimilarity

measure

RBO

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

Figure 17. Cit-HepPh best three and worst three average RBOs.

5 10 15 20 25 30 35 40 45 501

1.00

2.00

3.00

4.00

5.00

6.00

Summarized

PageRan

kexecutionspeedu

p

r = 0.10, n = 0, ∆ = 0.100

r = 0.20, n = 0, ∆ = 0.100

r = 0.10, n = 0, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.900

r = 0.30, n = 1, ∆ = 0.100

r = 0.30, n = 1, ∆ = 0.010

Figure 18. Cit-HepPh best three andworst three average speedups.

5 10 15 20 25 30 35 40 45 5010.00%

0.50%

1.00%

1.50%

2.00%

2.50%

3.00%

Summaryvertex

coun

tas

afraction

oftotalvertices r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 19. dblp-2010 best three and worst three average vertex

ratios.

Like the previous dataset, the speedup behaved as described in our

model (Fig. 22).

amazon-2008: in Fig. 23 and Fig. 24 we see replicated the be-

havior of the smallest summary graph size being associated with

9

5 10 15 20 25 30 35 40 45 5010.00%

0.50%

1.00%

1.50%

2.00%

2.50%

3.00%

3.50%

4.00%

Summaryedge

coun

tas

afraction

oftotaledg

es

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 20. dblp-2010 best three and worst three average edge

ratios.

5 10 15 20 25 30 35 40 45 501

95.30%

96.09%

96.87%

97.65%

98.43%

99.22%

100.00%

PageRan

ksimilarity

measure

RBO

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.900

r = 0.20, n = 1, ∆ = 0.900

r = 0.30, n = 1, ∆ = 0.900

Figure 21. dblp-2010 best three and worst three average RBOs.

5 10 15 20 25 30 35 40 45 501

5.50

6.00

6.50

7.00

7.50

8.00

8.50

9.00

9.50

Summarized

PageRan

kexecutionspeedu

p

r = 0.30, n = 1, ∆ = 0.900

r = 0.30, n = 1, ∆ = 0.100

r = 0.20, n = 0, ∆ = 0.010

r = 0.10, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

Figure 22. dblp-2010 best three andworst three average speedups.

performance-oriented parameters, and the biggest summary graph

sizes associated to accuracy-oriented parameters. It is interesting to

note, for this dataset, that parameter ∆ played a greater role in the

quality of RBO results. Effectively, the lowest value of ∆ = 0.010

5 10 15 20 25 30 35 40 45 501

0.000%

0.050%

0.100%

0.150%

0.200%

0.250%

0.300%

0.350%

Summaryvertex

coun

tas

afraction

oftotalvertices r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 23. amazon-2008 best three and worst three average vertexratios.

5 10 15 20 25 30 35 40 45 501

0.000%

0.050%

0.100%

0.150%

0.200%

Summaryedge

coun

tas

afraction

oftotaledg

esr = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 24. amazon-2008 best three and worst three average edge

ratios.

slowed down the decrease in RBO towards the final stages (around

execution 37) as seen in Fig. 25. It is thus an important parameter

associated with how conservatively one wishes to treat incoming

degree changes. On most parameter combinations for this dataset,

∆ was a determining factor for differencing summary graph size.

Regarding speedups, on average, the combinations with the highest

values for parameter r yielded the best results (Fig. 26) – this is due

to r raising the required vertex degree variation before a vertex is

to be included in the Kr set. The worst three speedup parameter

combinations produced speedups above 3.

Facebook-ego: this dataset is interesting, because despite beingstructured differently compared to other social networks, it obeyed

the same tendencies regarding parameters. We see in Fig. 27 that

overall, the summary vertex counts were higher for parametern = 1

than n = 0. The summary graph’s edges constituted a very small

portion of the original graph’s. As such, ∆ impacted the importance

of individual vertices more than the vertex degree variation cutoff

parameter r . That is shown in Fig. 28 in the fact that the combina-

tions with (∆ = 0.900) yielded less summary edges. This is in tune

with the RBO results shown in Fig. 29. We observe that, in general,

10

5 10 15 20 25 30 35 40 45 501

98.67%

98.89%

99.11%

99.34%

99.56%

99.78%

100.00%

PageRan

ksimilarity

measure

RBO

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

Figure 25. amazon-2008 best three and worst three average RBOs.

5 10 15 20 25 30 35 40 45 501

3.00

4.00

5.00

6.00

7.00

8.00

9.00

10.00

Summarized

PageRan

kexecutionspeedu

p

r = 0.20, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.010

r = 0.30, n = 0, ∆ = 0.900

r = 0.30, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.900

Figure 26. amazon-2008 best three and worst three average

speedups.

the greater the value of ∆, the greater the reduction in graph size

(and the lower the RBO value). Effectively, speedup is maximized

by combinations of parameters that either minimize n or maximize

∆ (Fig. 30).

For all the obtained graphs across different datasets, we gen-

erally obtained very small summary graph dimensions. In turn,

this enabled the high speedup values we presented, and associated

savings in computing. The obtained speedups are also indicative

that, as we test with larger datasets, the benefit of executing a

graph algorithm over just GraphBolt’s K set instead of the com-

plete graph, clearly outweighs the cost of computing that same set.

Due to space limitations, however, we cannot display additional

insights such as more detailed relations between the dataset topolo-

gies, the summary graph and the quality of results. Despite this,

we believe our method has achieved a very good trade-off between

result accuracy and reduction in total computation (be it in number

of processed graph elements or direct time comparison). The fact

that different datasets behaved similarly with respect to the sum-

marization mechanism could be interpreted as a positive indicator

that this technique may also behave uniformly for alternate graph

algorithms.

5 10 15 20 25 30 35 40 45 5010.0%

2.5%

5.0%

7.5%

10.0%

12.5%

15.0%

17.5%

20.0%

Summaryvertex

coun

tas

afraction

oftotalvertices r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 27. Facebook-links best three and worst three average

vertex ratios.

5 10 15 20 25 30 35 40 45 5010.0%

5.0%

10.0%

15.0%

20.0%

25.0%

30.0%

Summaryedge

coun

tas

afraction

oftotaledg

es

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 28. Facebook-links best three and worst three average

edge ratios.

5 10 15 20 25 30 35 40 45 501

98.25%

98.54%

98.83%

99.12%

99.42%

99.71%

100.00%

PageRan

ksimilarity

measure

RBO

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.900

r = 0.20, n = 0, ∆ = 0.900

r = 0.30, n = 0, ∆ = 0.900

Figure 29. Facebook-links best three and worst three average

RBOs.

11

5 10 15 20 25 30 35 40 45 501

2.00

3.00

4.00

5.00

6.00

7.00

8.00

Summarized

PageRan

kexecutionspeedu

p

r = 0.10, n = 0, ∆ = 0.010

r = 0.10, n = 0, ∆ = 0.900

r = 0.10, n = 1, ∆ = 0.100

r = 0.10, n = 1, ∆ = 0.010

r = 0.20, n = 1, ∆ = 0.010

r = 0.30, n = 1, ∆ = 0.010

Figure 30. Facebook-links best three and worst three average

speedups.

6 Related WorkThe multidisciplinary aspect of our work requires considering dif-

ferent aspects.

Batch Processing.MapReduce-inspired techniques divide the

data in many chunks to process them independently in a distributed

and scalable fashion. Graphs however, as an instance of big data,

have implicit interconnections which make this model hard to

employ. Furthermore, graph algorithms often have an iterative

nature. This conflicts with the acyclic dataflow assumption inherent

to the MapReduce model.

Iterative Processing. To retain the benefits of the MapReduce

computational model while adding higher-level programming op-

erators and supporting iterative computations over the same data,

new approaches emerged. Among themwe have Apache Spark [31],which has its Resilient Distributed Datasets abstraction (RDDs).

RDDs are parallel data structures which allow for keeping inter-

mediate results in memory in order to reuse and manipulate them

with different operators. A contender to Apache Spark is ApacheFlink [7], which is a platform for distributed stream and batch

processing. In Flink, all data is represented internally as streams

- the batch processing that Flink performs is defined internally

as a limited stream. Computations in Flink are expressed in user

programs which define a dataflow execution graph, whose oper-

ators are then distributed across a cluster of Flink nodes. Flinkalso supports iterative dataflows [11] and studies have been made

on their applications over graphs. We opted for Flink due to its

assumed goal of unifying batch and stream processing, its rapidly

growing community and due to its recent attention as a tool to

process large graphs [16].

Streams. Another limitation of the MapReduce paradigm is that

it aims to process static batches of huge data and not continuous

flows of data (streams). In an attempt to mitigate this disadvantage,

incremental MapReduce models emerged, such as Google Perco-

lator [22]. Stream processing systems usually receive information

from heterogeneous sources and establish the processing plan as a

dataflow graph (directed acyclic graph), which is then transformed

into an execution graph (Flink is an example of such a system). Due

to these properties, Apache Flink was chosen for implementation

and development of this work.

ExpressingGraphProcessing. It also remains relevant tomen-

tion known approaches to expressing graph processing programs.

Perhaps the most widespread model is what is known as think-like-a-vertex, described in Google Pregel [19], where computation is

expressed from the point of view of the vertex itself. In this model,

the user provides a function that will be executed in the context of

every vertex. Each vertex receives incoming messages, performs

user-specified logic and then, if necessary, produces output mes-

sages to its outgoing neighbors. This approach allows for the com-

putation to be parallelized across the vertices. Both Spark GraphXand Flink Gelly support this model. GraphBolt’s implementation

of the complete and summarized versions of PageRank is expressed

in this model. This model hides communication and coordination

details from the users, making it a powerful abstraction.

Another model that has been proposed is the edge-centric model,

debuted in X-Stream [24]. X-Stream exposes the scatter-gather pro-gramming model and was motivated by the lack of access locality

when traversing edges, which makes it difficult to obtain good

performance. State is maintained in vertices. This tool uses the

streaming partition, which works well with RAM and secondary

(SSD and Magnetic Disk) storage types. It does not provide any way

by which to iterate over the edges or updates of a specific vertex. A

sequential access to vertices leads to random access of edges which

decreases performance. X-Stream is relevant in the sense that it

explored the concept of enforcing sequential processing of edges

(edge-centric) in order to improve performance.

7 ConclusionHerein we presented and evaluated GraphBolt, a model and API

implementation for approximate graph processing over streams

that is both flexible and powerful. We designed the GraphBolt mod-

ule and evaluated its approximate computing capabilities over a

PageRank implementation for stream processing based on a graph

summary technique. The evaluated summarized technique is built

over the GraphBolt model and its parameters r ,n and ∆. At thesame time, it is flexible for those who wish to enrich the model

by providing a well-defined structure to incorporate their own ap-

proximate processing strategies (e.g, repeating the last results if

the updates were not deemed significant or performing an exact

computation if too much entropy has accumulated from the up-

date stream) and to choose between built-in behaviors. The effort

of incorporating additional algorithms will also benefit from the

model, as we already provide a structure which establishes an order

for tasks such as ingesting updates, keeping track of accumulated

operations and performing statistical analysis.

As we have shown, GraphBolt provides programmers an API

with a well-defined structure to fine-tune the balance between

computational effort, performance and result approximation. Our

experiments in the context of PageRank leads us to conclude that

GraphBolt is a viable basis for enabling efficient and configurable

graph processing on other algorithms.

Future work. A direct follow-up of our work is to analyze the

impact of using different stream operations (edge and vertex re-

movals) and also varying the nature of the stream. For example, one

variation could represent an edge stream corresponding to power-

law graph growth [12], another one could be generated through

the insights of the Erdős–Rényi model [10].

12

Orthogonally, we also aim to extend and reproduce GraphBolt’s

techniques to other problems such as maintaining online commu-

nities updated. It would be desirable to provide through our API a

set of built-in personalized graph approximation algorithms. We

are researching different approximation strategies based on the

statistical records, from a set of manually implemented policies to

automations based on machine learning.

References[1] Sameer Agarwal, Henry Milner, Ariel Kleiner, Ameet Talwalkar, Michael Jordan,

Samuel Madden, Barzan Mozafari, and Ion Stoica. 2014. Knowing when You’Re

Wrong: Building Fast and Reliable Approximate Query Processing Systems. In

Proceedings of the 2014 ACM SIGMOD International Conference on Managementof Data (SIGMOD ’14). ACM, New York, NY, USA, 481–492. https://doi.org/10.1145/2588555.2593667

[2] Brain Brian Babcock, Mayur Datar, Rajeev Motwani, Brian Babcock Mayur,

Brain Brian Babcock, Mayur Datar, and Rajeev Motwani. 2003. Load Shedding

Techniques for Data Stream Systems. In In Proc. of the 2003 Workshop on Man-agement and Processing of Data Streams (MPDS. 1–3. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.5.1941

[3] Bahman Bahmani, Ravi Kumar, Mohammad Mahdian, and Eli Upfal. 2012. Pager-

ank on an evolving graph. In Proceedings of the 18th ACM SIGKDD internationalconference on Knowledge discovery and data mining. ACM, 24–32.

[4] Paolo Boldi, Marco Rosa, Massimo Santini, and Sebastiano Vigna. 2011. Layered

Label Propagation: A MultiResolution Coordinate-Free Ordering for Compress-

ing Social Networks. In Proceedings of the 20th international conference on WorldWideWeb, Sadagopan Srinivasan, Krithi Ramamritham, Arun Kumar, M. P. Ravin-

dra, Elisa Bertino, and Ravi Kumar (Eds.). ACM Press, 587–596.

[5] Paolo Boldi and Sebastiano Vigna. 2004. The WebGraph Framework I: Com-

pression Techniques. In Proc. of the Thirteenth International World Wide WebConference (WWW 2004). ACM Press, Manhattan, USA, 595–601.

[6] Kurt Bryan and Tanya Leise. 2006. The $25,000,000,000 eigenvector: The linear

algebra behind Google. Siam Review 48, 3 (2006), 569–581.

[7] Paris Carbone, Asterios Katsifodimos, Stephan Ewen, Volker Markl, Seif Haridi,

and Kostas Tzoumas. 2015. Apache Flink: Stream and batch processing in a

single engine. Bulletin of the IEEE Computer Society Technical Committee on DataEngineering 36, 4 (2015).

[8] Steve Chien, Cynthia Dwork, Ravi Kumar, Daniel R Simon, and D Sivakumar.

2003. Link Evolutions: Analysis and Algorithms. Internet Math. 1, 3 (2003),

277–304. http://projecteuclid.org/euclid.im/1109190963[9] Easley David and Kleinberg Jon. 2010. Networks, Crowds, and Markets: Reasoning

About a Highly Connected World. Cambridge University Press, New York, NY,

USA.

[10] Paul Erdös and Alfréd Rényi. 1959. On random graphs, I. Publicationes Mathe-maticae (Debrecen) 6 (1959), 290–297.

[11] Stephan Ewen, Kostas Tzoumas, Moritz Kaufmann, and Volker Markl. 2012.

Spinning Fast Iterative Data Flows. Proc. VLDB Endow. 5, 11 (July 2012), 1268–

1279. https://doi.org/10.14778/2350229.2350245[12] Santo Fortunato, Alessandro Flammini, and Filippo Menczer. 2006. Scale-free

network growth by ranking. Physical review letters 96, 21 (2006), 218701.[13] Inigo Goiri, Ricardo Bianchini, Santosh Nagarakatte, and Thu D Nguyen. 2015.

ApproxHadoop: Bringing Approximations to MapReduce Frameworks. SIGPLANNot. 50, 4 (2015), 383–397. https://doi.org/10.1145/2775054.2694351

[14] Pili Hu andWing Cheong Lau. 2013. A Survey and Taxonomy of Graph Sampling.

CoRR abs/1308.5 (aug 2013). arXiv:1308.5865 https://arxiv.org/abs/1308.5865[15] Martin Junghanns, André Petermann, Niklas Teichmann, Kevin Gómez, and

Erhard Rahm. 2016. Analyzing Extended Property Graphs with Apache Flink.

In Proceedings of the 1st ACM SIGMOD Workshop on Network Data Analytics(NDA ’16). ACM, New York, NY, USA, Article 3, 8 pages. https://doi.org/10.1145/2980523.2980527

[16] Vasiliki Kalavri, Stephan Ewen, Kostas Tzoumas, Vladimir Vlassov, Volker Markl,

and Seif Haridi. 2014. Asymmetry in Large-Scale Graph Analysis, Explained. In

Proceedings of Workshop on GRAph Data Management Experiences and Systems(GRADES’14). ACM, New York, NY, USA, Article 4, 7 pages. https://doi.org/10.1145/2621934.2621940

[17] Amy Nicole Langville and Carl Dean Meyer. 2004. Updating Pagerank with

Iterative Aggregation. In Proceedings of the 13th International World Wide WebConference on Alternate Track Papers &Amp; Posters (WWW Alt. ’04). ACM, New

York, NY, USA, 392–393. https://doi.org/10.1145/1013367.1013491[18] Jure Leskovec, Jon Kleinberg, and Christos Faloutsos. 2005. Graphs over Time:

Densification Laws, Shrinking Diameters and Possible Explanations. In Pro-ceedings of the Eleventh ACM SIGKDD International Conference on KnowledgeDiscovery in Data Mining (KDD ’05). ACM, New York, NY, USA, 177–187.

https://doi.org/10.1145/1081870.1081893

[19] Grzegorz Malewicz, Matthew H. Austern, Aart J.C Bik, James C. Dehnert, Ilan

Horn, Naty Leiser, and Grzegorz Czajkowski. 2010. Pregel: A System for Large-

scale Graph Processing. In Proceedings of the 2010 ACM SIGMOD InternationalConference on Management of Data (SIGMOD ’10). ACM, New York, NY, USA,

135–146. https://doi.org/10.1145/1807167.1807184[20] Alistair Moffat. 2018. Computing Maximized Effectiveness Distance for Recall-

Based Metrics. IEEE Transactions on Knowledge and Data Engineering 30, 1 (2018),198–203.

[21] Lawrence Page, Sergey Brin, Rajeev Motwani, and Terry Winograd. 1999. ThePageRank Citation Ranking: Bringing Order to the Web. Technical Report 1999-66.Stanford InfoLab. http://ilpubs.stanford.edu:8090/422/

[22] Daniel Peng and Frank Dabek. 2010. Large-scale Incremental Processing Using

Distributed Transactions and Notifications. In Proceedings of the 9th USENIXConference on Operating Systems Design and Implementation (OSDI’10). USENIXAssociation, Berkeley, CA, USA, 251–264. http://dl.acm.org/citation.cfm?id=1924943.1924961

[23] Martin Rinard. 2006. Probabilistic Accuracy Bounds for Fault-tolerant Com-

putations That Discard Tasks. In Proceedings of the 20th Annual InternationalConference on Supercomputing (ICS ’06). ACM, New York, NY, USA, 324–334.

https://doi.org/10.1145/1183401.1183447[24] Amitabha Roy, Ivo Mihailovic, and Willy Zwaenepoel. 2013. X-stream: Edge-

centric graph processing using streaming partitions. In Proceedings of the Twenty-Fourth ACM Symposium on Operating Systems Principles. ACM, 472–488.

[25] Matthew NO Sadiku, Sarhan M Musa, and Omonowo D Momoh. 2014. Cloud

computing: opportunities and challenges. IEEE potentials 33, 1 (2014), 34–36.[26] Frank Spitzer. 2013. Principles of random walk. Vol. 34. Springer Science &

Business Media.

[27] Liwen Sun, Michael J Franklin, Sanjay Krishnan, and Reynold S Xin. 2014. Fine-

grained partitioning for aggressive data skipping. In Proceedings of the 2014 ACMSIGMOD international conference on Management of data - SIGMOD ’14 (SIGMOD’14). ACM Press, New York, New York, USA, 1115–1126. https://doi.org/10.1145/2588555.2610515

[28] Nesime Tatbul, Uğur Çetintemel, and Stan Zdonik. 2007. Staying FIT: Efficient

Load Shedding Techniques for Distributed Stream Processing. In Proceedings ofthe 33rd International Conference on Very Large Data Bases (VLDB ’07). VLDBEndowment, 159–170. http://dl.acm.org/citation.cfm?id=1325851.1325873

[29] Bimal Viswanath, Alan Mislove, Meeyoung Cha, and Krishna P Gummadi. 2009.

On the Evolution of User Interaction in Facebook. In Proceedings of the 2Nd ACMWorkshop on Online Social Networks (WOSN ’09). ACM, New York, NY, USA,

37–42. https://doi.org/10.1145/1592665.1592675 Dataset: http://socialnetworks.mpi-sws.org/data-wosn2009.html.

[30] WilliamWebber, Alistair Moffat, and Justin Zobel. 2010. A Similarity Measure for

Indefinite Rankings. ACM Trans. Inf. Syst. 28, 4, Article 20 (Nov. 2010), 38 pages.https://doi.org/10.1145/1852102.1852106

[31] Matei Zaharia, Reynold S. Xin, Patrick Wendell, Tathagata Das, Michael Arm-

brust, Ankur Dave, Xiangrui Meng, Josh Rosen, Shivaram Venkataraman,

Michael J. Franklin, Ali Ghodsi, Joseph Gonzalez, Scott Shenker, and Ion Stoica.

2016. Apache Spark: A Unified Engine for Big Data Processing. Commun. ACM59, 11 (Oct. 2016), 56–65. https://doi.org/10.1145/2934664

13

![Centrality Estimation in Large Networks - uni-konstanz.de · 2016. 3. 30. · centrality by the maximum distance to any other vertex [Harary and Hage 1995], or subtract each distance](https://static.fdocuments.us/doc/165x107/60e42fcf76d36177f6748fa6/centrality-estimation-in-large-networks-uni-2016-3-30-centrality-by-the.jpg)