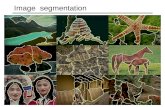


Evaluation of Image Segmentation · 2010-08-17 · Validation of Image Segmentation • Comparison...


Computational Radiology Laboratory Harvard Medical School www.crl.med.harvard.edu

Children’s Hospital Department of Radiology Boston Massachusetts

Evaluation of Image Segmentation

Simon K. Warfield, Ph.D. Associate Professor of Radiology Harvard Medical School

ComputationalRadiologyLaboratory. Slide 2

Segmentation

• Segmentation
  – Identification of structure in images.
  – Many different algorithms and a wide range of principles upon which they are based.
• Segmentation is used for:
  – Quantitative image analysis
  – Image guided therapy
  – Visualization
• Evaluation: how do we know when we have a good segmentation?

ComputationalRadiologyLaboratory. Slide 3

Validation of Image Segmentation

• Spectrum of accuracy versus realism in reference standard.
• Digital phantoms.
  – Ground truth known accurately.
  – Not so realistic.
• Acquisitions and careful segmentation.
  – Some uncertainty in ground truth.
  – More realistic.
• Autopsy/histopathology.
  – Addresses pathology directly; resolution.
• Clinical data?
  – Hard to know ground truth.
  – Most realistic model.

ComputationalRadiologyLaboratory. Slide 4

Validation of Image Segmentation

• Comparison to digital and physical phantoms:
  – Excellent for testing the anatomy, noise and artifact that are modeled.
  – Typically lacks the range of normal or pathological variability encountered in practice.

MRI of brain phantom from Styner et al. IEEE TMI 2000

ComputationalRadiologyLaboratory. Slide 5

Comparison To Higher Resolution

Panels: MRI; photograph; MRI.

Provided by Peter Ratiu and Florin Talos.

ComputationalRadiologyLaboratory. Slide 6

Comparison To Higher Resolution

Panels: photograph; MRI; photograph; microscopy.

Provided by Peter Ratiu and Florin Talos.

ComputationalRadiologyLaboratory. Slide 7

Comparison to Autopsy Data

• Neonate gyrification index
  – Ratio of length of cortical boundary to length of smooth contour enclosing brain surface
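As a rough illustration of this ratio, the sketch below computes a 2D gyrification index for one closed contour. It assumes the cortical boundary is available as an ordered list of (x, y) vertices and uses the convex hull as the smooth enclosing contour. Both choices, and the function names, are assumptions made for illustration, not the procedure used in the study.

```python
import math

def perimeter(points):
    """Length of the closed polygon through `points` (ordered vertices)."""
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))

def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half(seq):
        chain = []
        for p in seq:
            # Drop points that would make a clockwise or straight turn.
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]

    return half(pts) + half(reversed(pts))

def gyrification_index(contour):
    """Boundary length divided by the length of its convex hull (assumed smooth contour)."""
    return perimeter(contour) / perimeter(convex_hull(contour))

# A square has no folding; a square with one inward fold has a larger index.
square = [(0, 0), (4, 0), (4, 4), (0, 4)]
folded = [(0, 0), (4, 0), (4, 4), (2, 2), (0, 4)]
gi_square = gyrification_index(square)
gi_folded = gyrification_index(folded)
```

An index of 1 means the boundary is as short as its enclosing contour; deeper folds lengthen the boundary and raise the index.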

ComputationalRadiologyLaboratory. Slide 8

Staging

Panels: Stage 3, Stage 4, Stage 5, Stage 6.

• Stage 3: at 28 weeks GA, shallow indentations of the inferior frontal and superior temporal gyrus (1 infant at 30.6 weeks GA; normal range: 28.6 ± 0.5 weeks GA).
• Stage 4: at 30 weeks GA, 2 indentations divide the frontal lobe into 3 areas; superior temporal gyrus clearly detectable (3 infants, 30.6 ± 0.4 weeks GA; normal range: 29.9 ± 0.3 weeks GA).
• Stage 5: at 32 weeks GA, frontal lobe clearly divided into three parts: superior, middle and inferior frontal gyrus (4 infants, 32.1 ± 0.7 weeks GA; normal range: 31.6 ± 0.6 weeks GA).
• Stage 6: at 34 weeks GA, temporal lobe clearly divided into 3 parts: superior, middle and inferior temporal gyrus (8 infants, 33.5 ± 0.5 weeks GA; normal range: 33.8 ± 0.7 weeks GA).

“Assessment of cortical gyrus and sulcus formation using MR images in normal fetuses”, Abe S. et al., Prenatal Diagn 2003

ComputationalRadiologyLaboratory. Slide 9

Neonate GI: MRI Vs Autopsy

ComputationalRadiologyLaboratory. Slide 10

GI Increase Is Proportional to Change in Age.

ComputationalRadiologyLaboratory. Slide 11

GI Versus Qualitative Staging

ComputationalRadiologyLaboratory. Slide 12

Neonate Gyrification

ComputationalRadiologyLaboratory. Slide 13

Validation of Image Segmentation

• STAPLE (Simultaneous Truth and Performance Level Estimation):
  – An algorithm for estimating performance and ground truth from a collection of independent segmentations.
  – Warfield, Zou, Wells, IEEE TMI 2004.
  – Warfield, Zou, Wells, PTRSA 2008.
  – Commowick and Warfield, IEEE TMI 2010.

ComputationalRadiologyLaboratory. Slide 14

Validation of Image Segmentation

• Comparison to expert performance; to other algorithms.
• Why compare to experts?
  – Experts are currently doing the segmentation tasks that we seek algorithms for:
    • Surgical planning.
    • Neuroscience research.
    • Response to therapy assessment.
• What is the appropriate measure for such comparisons?

ComputationalRadiologyLaboratory. Slide 15

Measures of Expert Performance

• Repeated measures of volume
  – Intra-class correlation coefficient
• Spatial overlap
  – Jaccard: Area of intersection over union.
  – Dice: increased weight of intersection.
  – Vote counting: majority rule, etc.
• Boundary measures
  – Hausdorff, 95% Hausdorff.
• Bland-Altman methodology:
  – Requires a reference standard.
• Measures of correct classification rate:
  – Sensitivity, specificity ( Pr(D=1|T=1), Pr(D=0|T=0) )
  – Positive predictive value and negative predictive value (posterior probabilities Pr(T=1|D=1), Pr(T=0|D=0) )
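Several of these measures follow directly from the four voxel counts of a binary comparison. The sketch below is a minimal illustration, assuming a binary decision image D and a binary reference T of the same shape, each containing both classes. The function name and the dictionary keys are hypothetical.

```python
import numpy as np

def overlap_measures(decision, truth):
    """Compare a binary segmentation D against a binary reference T.

    Assumes both foreground and background occur in D and in T,
    so that no denominator is zero.
    """
    d = np.asarray(decision, dtype=bool)
    t = np.asarray(truth, dtype=bool)
    tp = np.sum(d & t)      # D=1, T=1
    tn = np.sum(~d & ~t)    # D=0, T=0
    fp = np.sum(d & ~t)     # D=1, T=0
    fn = np.sum(~d & t)     # D=0, T=1
    return {
        "jaccard": tp / (tp + fp + fn),        # intersection over union
        "dice": 2 * tp / (2 * tp + fp + fn),   # intersection counted twice
        "sensitivity": tp / (tp + fn),         # Pr(D=1 | T=1)
        "specificity": tn / (tn + fp),         # Pr(D=0 | T=0)
        "ppv": tp / (tp + fp),                 # Pr(T=1 | D=1)
        "npv": tn / (tn + fn),                 # Pr(T=0 | D=0)
    }

truth = [1, 1, 1, 0, 0, 0, 0, 0]
decision = [1, 1, 0, 1, 0, 0, 0, 0]
m = overlap_measures(decision, truth)
```

Jaccard and Dice ignore true negatives, which is why they are less affected by a large background than specificity or negative predictive value.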

ComputationalRadiologyLaboratory. Slide 16

Measures of Expert Performance

• Our new approach:
• Simultaneous estimation of hidden "ground truth" and expert performance.
• Enables comparison between and to experts.
• Can be easily applied to clinical data exhibiting range of normal and pathological variability.

ComputationalRadiologyLaboratory. Slide 17

How to judge segmentations of the peripheral zone?

Panels: 1.5T MR of prostate; peripheral zone and segmentations.

ComputationalRadiologyLaboratory. Slide 18

Estimation Problem

• Complete data density: $f(D, T \mid p, q)$
• Binary ground truth $T_i$ for each voxel $i$.
• Expert $j$ makes segmentation decisions $D_{ij}$.
• Expert performance characterized by sensitivity $p$ and specificity $q$.
  – We observe expert decisions $D$. If we knew ground truth $T$, we could construct maximum likelihood estimates for each expert's sensitivity (true positive fraction) and specificity (true negative fraction):
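With the truth known, these estimates are the usual empirical fractions. The display below is a reconstruction in the slide's notation, assuming $T_i$ and $D_{ij}$ take the values 0 or 1. It is not copied from the original slide.

```latex
\hat{p}_j = \frac{\sum_i T_i \, D_{ij}}{\sum_i T_i},
\qquad
\hat{q}_j = \frac{\sum_i (1 - T_i)(1 - D_{ij})}{\sum_i (1 - T_i)}
```

The numerator of $\hat{p}_j$ counts true-foreground voxels that expert $j$ also marked as foreground. The numerator of $\hat{q}_j$ does the same for background.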

ComputationalRadiologyLaboratory. Slide 19

Expectation-Maximization

• General procedure for estimation problems that would be simplified if some missing data were available.
• Key requirements are specification of:
  – The complete data.
  – Conditional probability density of the hidden data given the observed data.
• Observable data $D$
• Hidden data $T$, prob. density $f(T \mid D, \hat\theta)$
• Complete data $(D, T)$

ComputationalRadiologyLaboratory. Slide 20

Expectation-Maximization

• Solve the incomplete-data log likelihood maximization problem
• E-step: estimate the conditional expectation of the complete-data log likelihood function.
  $Q(\theta \mid \hat\theta) = E\left[\ln f(D, T \mid \theta) \mid D, \hat\theta\right]$
• M-step: estimate parameter values
  $\arg\max_\theta Q(\theta \mid \hat\theta)$

ComputationalRadiologyLaboratory. Slide 21

Expectation-Maximization

• Since we don't know ground truth $T$, treat $T$ as a random variable, and solve for the expert performance parameters that maximize:
  $Q(\theta \mid \hat\theta) = E\left[\ln f(D, T \mid \theta) \mid D, \hat\theta\right]$
• Parameter values $\theta_j = [p_j \; q_j]^T$ that maximize the conditional expectation of the log-likelihood function are found by iterating two steps:
  – E-step: Estimate probability of hidden ground truth $T$ given a previous estimate of the expert quality parameters, and take the expectation.
  – M-step: Estimate expert performance parameters by comparing $D$ to the current estimate of $T$.

ComputationalRadiologyLaboratory. Slide 22

STAPLE

• Consider binary labels:
  – foreground.
  – background.
• Spatial correlation of the unknown true segmentation can be modelled with a Markov Random Field.

ComputationalRadiologyLaboratory. Slide 23

To Solve for Expert Parameters:

ComputationalRadiologyLaboratory. Slide 24

True Segmentation Estimate
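The formula for this slide did not survive extraction. In the binary STAPLE formulation of Warfield, Zou and Wells (IEEE TMI 2004), the E-step weight takes the following form, up to notation. The symbols $W_i$, $a_i$, $b_i$ and the voxelwise prior $f(T_i)$ are written here from that formulation and should be checked against the paper.

```latex
W_i = \Pr(T_i = 1 \mid D_i, \hat\theta) = \frac{a_i}{a_i + b_i},
\qquad
a_i = f(T_i = 1) \prod_{j : D_{ij} = 1} \hat{p}_j \prod_{j : D_{ij} = 0} (1 - \hat{p}_j),
\qquad
b_i = f(T_i = 0) \prod_{j : D_{ij} = 0} \hat{q}_j \prod_{j : D_{ij} = 1} (1 - \hat{q}_j)
```

Each expert contributes a factor to $a_i$ or $b_i$ according to its decision at voxel $i$, so decisions from experts with high estimated sensitivity and specificity move $W_i$ the most.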

ComputationalRadiologyLaboratory. Slide 25

Expert Performance Estimate

Now we seek an expression for the conditional expectation of the complete-data log likelihood function that we can maximize.

ComputationalRadiologyLaboratory. Slide 26

Expert Performance Estimate

Now, consider each expert separately:

Differentiate this with respect to $p_j$, $q_j$ and solve for zero.

ComputationalRadiologyLaboratory. Slide 27

Expert Performance Estimate

p (sensitivity, true positive fraction) : ratio of expert identified class 1 to total class 1 in the image.

q (specificity, true negative fraction) : ratio of expert identified class 0 to total class 0 in the image.
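The two EM steps for the binary case can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation. It assumes a single global prior Pr(T_i = 1) with no MRF term, initial values p = q = 0.9, and decisions arranged as a voxels-by-raters array. The function name `staple_binary` and its parameters are hypothetical.

```python
import numpy as np

def staple_binary(D, prior=None, p0=0.9, q0=0.9, max_iter=100, tol=1e-8):
    """EM estimate of per-voxel truth probability W and rater (p, q).

    D: (n_voxels, n_raters) array of 0/1 decisions.
    prior: assumed Pr(T_i = 1); defaults to the mean of D.
    """
    D = np.asarray(D, dtype=float)
    n_voxels, n_raters = D.shape
    g = D.mean() if prior is None else prior
    p = np.full(n_raters, p0)
    q = np.full(n_raters, q0)
    for _ in range(max_iter):
        # E-step: posterior probability that each voxel is foreground.
        a = g * np.prod(np.where(D == 1, p, 1 - p), axis=1)
        b = (1 - g) * np.prod(np.where(D == 1, 1 - q, q), axis=1)
        W = a / (a + b)
        # M-step: W-weighted fraction of class 1 (class 0) voxels that
        # each rater labelled as class 1 (class 0).
        p_new = (W[:, None] * D).sum(axis=0) / W.sum()
        q_new = ((1 - W)[:, None] * (1 - D)).sum(axis=0) / (1 - W).sum()
        converged = max(np.abs(p_new - p).max(), np.abs(q_new - q).max()) < tol
        p, q = p_new, q_new
        if converged:
            break
    return W, p, q
```

Thresholding `W` at 0.5 gives a hard estimate of the true segmentation, while `p` and `q` summarize each rater against that estimate.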

ComputationalRadiologyLaboratory. Slide 28

Extension to Several Tissue Labels

• Complete data density: $f(D, T \mid \theta)$
• True segmentation $T_i$ for each voxel $i$
  – May be binary
  – May be categorical
• Expert $j$ makes segmentation decisions $D_{ij}$
• Expert performance $\theta_{s's}$ characterizes probability of deciding label $s'$ when true label is $s$.

ComputationalRadiologyLaboratory. Slide 29

Probability Estimate of True Labels

ComputationalRadiologyLaboratory. Slide 30

Expert Performance Estimate

Now, consider each expert separately:

Note constraint on sum of parameters. Solve for maximum.

ComputationalRadiologyLaboratory. Slide 31

Parameter Estimation

Noting that

We can formulate the constrained optimization problem:

ComputationalRadiologyLaboratory. Slide 32

Parameter Estimation

Therefore

And noting that

We find that
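The equations for the multi-label case are missing from the extracted slides. The sketch below shows one way the categorical EM iteration can be written, assuming the standard result that each expert parameter is a weighted fraction: the estimate of Pr(D_ij = s' | T_i = s) is the sum of W_si over voxels where expert j decided s', divided by the sum of W_si over all voxels. The function name, the pooled-frequency prior, and the 0.9-diagonal initialization are assumptions for illustration.

```python
import numpy as np

def staple_multilabel(D, n_labels, max_iter=100, tol=1e-8):
    """EM for categorical labels (requires n_labels >= 2).

    D: (n_voxels, n_raters) integer labels in 0..n_labels-1.
    Returns W (n_voxels, n_labels) and theta (n_raters, n_labels, n_labels),
    where theta[j, s_dec, s_true] = Pr(D_ij = s_dec | T_i = s_true).
    """
    D = np.asarray(D)
    n_voxels, n_raters = D.shape
    onehot = np.eye(n_labels)[D]        # (n_voxels, n_raters, n_labels)
    prior = onehot.mean(axis=(0, 1))    # assumed prior: pooled label frequency
    # Start from mostly-correct raters: 0.9 on the diagonal, rest spread evenly.
    theta = np.full((n_raters, n_labels, n_labels), 0.1 / (n_labels - 1))
    theta[:, np.arange(n_labels), np.arange(n_labels)] = 0.9
    for _ in range(max_iter):
        # E-step: W[i, s] proportional to prior[s] * prod_j theta[j, D_ij, s].
        log_theta = np.log(np.clip(theta, 1e-12, None))
        log_w = np.log(np.clip(prior, 1e-12, None)) + sum(
            log_theta[j, D[:, j], :] for j in range(n_raters))
        log_w -= log_w.max(axis=1, keepdims=True)
        W = np.exp(log_w)
        W /= W.sum(axis=1, keepdims=True)
        # M-step: theta[j, s', s] = sum_{i: D_ij = s'} W[i, s] / sum_i W[i, s].
        new_theta = np.einsum("ijk,is->jks", onehot, W) / W.sum(axis=0)
        done = np.abs(new_theta - theta).max() < tol
        theta = new_theta
        if done:
            break
    return W, theta
```

For every rater and true label, the entries of `theta` sum to one over the decided label, which is the constraint the slides refer to.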

ComputationalRadiologyLaboratory. Slide 33

Results: Synthetic Experts

• Several experiments with known ground truth and known performance parameters.
• Goal:
  – Determine if STAPLE accurately identifies known ground truth.
  – Determine if STAPLE accurately determines known expert performance parameters.
  – Understand sensitivity of STAPLE with respect to changes in prior hyper-parameters; requirements for number of observations to enable good estimation; convergence characteristics.

ComputationalRadiologyLaboratory. Slide 34

Synthetic Experts

10 observations of segmentation by expert with p=q=0.99

Panels: four segmentations of ten shown; STAPLE ground truth.

STAPLE p,q estimates:
• mean p 0.990237, std. dev. p 0.000616
• mean q 0.990121, std. dev. q 0.00071

ComputationalRadiologyLaboratory. Slide 35

Synthetic Experts

10 segmentations by experts with p=0.95, q=0.90

Panels: four segmentations of ten shown; STAPLE ground truth.

STAPLE p,q estimates:
• mean p 0.950104, std. dev. p 0.001201
• mean q 0.900035, std. dev. q 0.001685
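The slides do not state how the synthetic experts were generated. One simple way to imitate such an experiment is to corrupt a known ground truth independently at each voxel, as sketched below. The independence assumption, the square test object, and the function name `simulate_rater` are illustrative choices, not details taken from the study.

```python
import numpy as np

def simulate_rater(truth, p, q, rng):
    """Draw one binary segmentation with sensitivity p and specificity q."""
    truth = np.asarray(truth, dtype=bool)
    u = rng.random(truth.shape)
    # Foreground voxels are kept with probability p;
    # background voxels are wrongly marked with probability 1 - q.
    return np.where(truth, u < p, u < 1 - q).astype(int)

rng = np.random.default_rng(0)
truth = np.zeros((128, 128), dtype=bool)
truth[32:96, 32:96] = True          # hypothetical square object
raters = [simulate_rater(truth, 0.95, 0.90, rng) for _ in range(10)]

# Empirical performance against the known truth, pooled over raters.
stack = np.array(raters)
sensitivity = stack[:, truth].mean()
specificity = 1 - stack[:, ~truth].mean()
```

Because the true labels and parameters are known here, the pooled empirical values give a reference against which any estimated p and q can be compared.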

ComputationalRadiologyLaboratory. Slide 36

Expert and Student Segmentations

Panels: test image; expert consensus; Student 1; Student 2; Student 3.

ComputationalRadiologyLaboratory. Slide 37

Phantom Segmentation

Panels: image; expert segmentation; student segmentations; voting; STAPLE.

ComputationalRadiologyLaboratory. Slide 38

Prostate Peripheral Zone

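Panels: frequency of selection by experts; STAPLE truth estimate.

| Expert | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| $p_j$ | .879 | .991 | .937 | .918 | .895 |
| $q_j$ | .998 | .994 | .999 | .999 | .999 |
| Dice | .913 | .951 | .967 | .955 | .944 |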

ComputationalRadiologyLaboratory. Slide 39

A Binary MRF Model for Spatial Homogeneity

Include a prior probability for the neighborhood configuration:
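The prior formula itself is missing from the extracted slide. A common choice for a binary MRF that encourages spatial homogeneity is an Ising-type prior. The form below, including the interaction strength $\beta > 0$, the neighborhood $N_i$ and the normalizing constant $Z$, is an assumed illustration and may differ from the one shown on the original slide.

```latex
\Pr(T) = \frac{1}{Z} \exp\Big( \beta \sum_{i} \sum_{i' \in N_i} \delta(T_i, T_{i'}) \Big),
\qquad
\delta(a, b) =
\begin{cases}
1 & \text{if } a = b \\
0 & \text{otherwise}
\end{cases}
```

Under such a prior, label configurations in which neighboring voxels agree receive higher probability, and larger $\beta$ produces smoother estimates.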

ComputationalRadiologyLaboratory. Slide 40

MAP Estimation With MRF Prior

ComputationalRadiologyLaboratory. Slide 41

Synthetic Experts

Only three segmentations by different quality experts.

Panels: three expert segmentations; STAPLE ground truth with MRF prior.

STAPLE p,q estimates:

| Expert | True p, q | Estimated p, q |
|---|---|---|
| 1 | 0.95, 0.95 | 0.9505, 0.9494 |
| 2 | 0.95, 0.90 | 0.9511, 0.8987 |
| 3 | 0.90, 0.90 | 0.9000, 0.8987 |

ComputationalRadiologyLaboratory. Slide 42

Cryoablation of Kidney Tumor

• Segmentations before training session with radiologist (panels: rater frequency; STAPLE with MRF).
• Segmentations after training session.

Based on the STAPLE performance assessment, we found the training session created a statistically significant increase in performance of the raters.

ComputationalRadiologyLaboratory. Slide 43

Newborn MRI Segmentation

ComputationalRadiologyLaboratory. Slide 44

Newborn MRI Segmentation

Summary of segmentation quality (posterior probability Pr(T=t|D=t) ) for each tissue type for repeated manual segmentations.

ComputationalRadiologyLaboratory. Slide 45

STAPLE Summary

• Key advantages of STAPLE:
  – Estimates "true" segmentation.
  – Assesses expert performance.
• Principled mechanism which enables:
  – Comparison of different experts.
  – Comparison of algorithm and experts.
• Extensions for the future:
  – Can we learn image features that lead to different levels of expert performance?

ComputationalRadiologyLaboratory. Slide 46

Acknowledgements

Colleagues contributing to this work:
• Neil Weisenfeld.
• Andrea Mewes.
• Petra Huppi.
• Olivier Clatz.
• William Wells.
• Olivier Commowick.
• Arne Hans.
• Heidelise Als.
• Lianne Woodward.
• Frank Duffy.
• Kelly Zou.

This study was supported by: Center for the Integration of Medicine and Innovative Technology, R01 RR021885, R01 GM074068 and R01 HD046855.