Early recognition of speech


Focus Article

Robert E. Remez∗ and Emily F. Thomas

Classic research on the perception of speech sought to identify minimal acoustic correlates of each consonant and vowel. In explaining perception, this view designated momentary components of an acoustic spectrum as cues to the recognition of elementary phonemes. This conceptualization of speech perception is untenable given the findings of phonetic sensitivity to modulation independent of the acoustic and auditory form of the carrier. The empirical key is provided by studies of the perceptual organization of speech, a low-level integrative function that finds and follows the sensory effects of speech amid concurrent events. These projects have shown that the perceptual organization of speech is keyed to modulation; fast; unlearned; nonsymbolic; indifferent to short-term auditory properties; and dependent on attention. The ineluctably multisensory nature of speech perception also imposes conditions that distinguish language among cognitive systems. © 2012 John Wiley & Sons, Ltd.

How to cite this article: WIREs Cogn Sci 2013, 4:213–223. doi: 10.1002/wcs.1213

INTRODUCTION

To understand an utterance, a perceiver must grasp its linguistic properties, the words, phrases and clauses, as well as the entailments of prior and looming events, spoken or not. Ordinarily, the attention of a perceiver is focused on a talker’s immediate meaning, but the linguistic constituents that represent meaning are arrayed at the fine grain by linguistically governed segments, the consonants and vowels that compose each utterance. So, to recognize a sequence of spoken words necessitates the perceptual resolution of phoneme contrasts, a small set of characteristic distinctions that indexes words by their form. Listening to English, for example, is a version of this challenge that is well bounded, for there are only a dozen and a half contrast features and fewer than four dozen phoneme segments. In practice, the speech encountered by a perceiver exhibits vastly greater variation. In small part, this is due to the coarticulation of each phoneme with preceding and following phonemes, conferring the influence of receding and anticipated contrasts on motor expression and the consequent acoustic and sensory effects. Although phoneme contrasts contribute to the structure of an utterance, talkers simply cannot produce a standard set of acoustic properties, due to differences in anatomy. This variation in scale affects articulation and the resulting properties of speech as a consequence of the physics of acoustic resonance.

∗Correspondence to: [email protected]

Department of Psychology and Program in Neuroscience & Behavior, Barnard College, Columbia University, New York, NY, USA

Anatomical differences aside, no talker simply speaks phonemes. An utterance expresses personal characteristics: a talker’s maturity, sex and gender, vitality, regional linguistic habits, and idiosyncrasies of diction, not to mention idiosyncrasies of dentition. In conversations, an utterance can be produced carefully or casually, and can express features of mood or motive, making the contribution of canonical phonemic form just one of the determinants of articulation. This convergence of causes takes the phoneme sequence that indexes the words and gives it personal and circumstantial shape. It is implausible to view these effects as a kind of normally distributed variation in the phonetics of consonants and vowels.1 Overall, the expressed form reflects this bottleneck in production, resulting in huge variation in the physical effects of any single consonant or vowel. For the perceiver to recognize words requires the phonemic grain, but this is available only as a compromise among linguistic structure, paralinguistic expression, and circumstance. Accordingly, the relation between the phoneme series of a word and the actual spoken expression is commonly described as a problem of invariance and variability, that is to say, invariance in a recognized phoneme contrast despite variability in its phonetic, paralinguistic, and situation-specific characteristics.

Volume 4, March/April 2013 © 2012 John Wiley & Sons, Ltd.

Focus Article wires.wiley.com/cogsci

The perceptual recognition of speech is not simply a topic of auditory concern, although it has been primarily so. Research on auditory sensitivity, whether psychoacoustic or physiological, has produced a description of the sensory effects of speech sounds, beginning in the cochlea and spanning the auditory nucleus to the primary auditory areas in vertebrates. Although these projects show the preservation of the acoustic features of speech, it is acknowledged2 that they offer a model of hearing speech sounds, not of listening to spoken language. The portrait of speech sounds provided by this research stops far short of an account of the resolution of linguistic properties of speech, and offers a description of the mere survival of the raw structure of the speech spectrum as it propagates along the auditory pathway. Acknowledging that recognition by features is inadequate both theoretically and descriptively to meet the challenge of invariance and variability, such accounts present a description of the audibility of speech sounds, and do not offer an account of speech perception.

From this perspective, it is sobering to note the persistence of the disposition to explain speech perception normatively, as if the resolution of phonemic properties obscured by paralinguistic and circumstantial influences were accomplished by sensitivity to distributions of sensory elements.3,4 This perennial theory of first and last resort must be false, however appealing it is in its simplicity. At the heart of the normative conceptualization is the premise that the perceptual essence of a phoneme can be found in momentary articulatory or acoustic characteristics of speech. However, the acoustic details of speech reflect linguistic properties shaped by the characteristics of unique individuals and communities. In consequence, a statistical description of acoustic cues (the physical constituents of speech and their auditory effects) adds nothing more than precision to a premise that conflates linguistic and personal causes of acoustic variation. The normative premise is hopeful but false. Apart from an argument in principle, empirical work shows this clearly, motivated by the problem of perceptual organization, a fundamental aspect of speech perception that establishes the conditions requisite for the analysis of linguistic properties.

PERCEPTUAL ORGANIZATION

How does a listener find a speech stream in sensory activity? Commonly, the environments in which speech occurs are acoustically busy, with several sources of sound in addition to a spoken source. Indoors, a reverberant enclosure sustains echoes of speech that exhibit spectral and temporal patterns similar to the perceived utterance. In social gatherings, several talkers speak concurrently. This central phenomenon confronting the scientific description of speech perception was well framed by Cherry5 as the ability to find and to follow a familiar if unpredictably varying signal within an acoustically lively setting similar in spectrum and pattern. Most descriptions of speech perception only begin after the auditory effects of speech are isolated from concurrent sound sources. This is readily seen in theoretical discussions of speech perception (see Refs 6–10; others reviewed by Klatt11). Indeed, whether or not an account is avowedly modular, asserting that special functions are required for the perception of speech, most are tacitly modular, explaining speech perception as if speech were the only sound a listener ever encountered, and as if only one talker ever spoke at once.12 Although this designation is easily met in a laboratory, it is not an adequate description of the ordinary perceptual challenges that confront a listener. Perceptual organization is the fundamental function presumed by such accounts: the means by which sensory effects of a spoken source are bound and rendered fit to analyze.

Technical research has produced two differing conceptualizations of perceptual organization. In one, direct measures of the perceptual organization of speech have been used to motivate a description.12–14 These studies reveal the action of a perceptual function that is fast, unlearned, nonsymbolic, sensitive to coordinate variation within the spectrum, indifferent to the detailed grain of sensation, and requiring attention. Although characteristics of this description continue to emerge in new findings, it offers a clear contrast to the portrait of perceptual organization given in a generic auditory account.15,16 This account derives chiefly from studies of acoustic patterns composed by design rather than sounds produced by natural sources.17 It elaborates the grouping principles noted by the Gestalt psychologist Wertheimer 90 years ago and expanded by Bregman15 and colleagues as an obligatory and automatic mechanism for sorting primitive elements of auditory sensation. According to this view, as the sensory elements of an auditory sample are individually resolved, they are rapidly and automatically arranged in segregated streams. Each stream is composed of similar sensory elements, and each stream is taken as the effect of a source of sound, whether the dimension of similarity along which binding occurs is temporal or spectral. This notion, recently reviewed by Darwin,16 asserts that grouping by similarity is an optimization produced by long-term evolutionary pressure on the auditory system, and that the perceptual organization of speech reduces to principles established in psychoacoustic studies of similarity grouping and their physiological counterparts, chiefly of neural comodulation and synchronization observed in studies of low-level hearing (see Refs 18 and 19). These two accounts, one based on direct examination of utterances and another on the psychoacoustics of arbitrarily constructed sound patterns, offer contrasting claims about the perceptual organization of speech. It cannot be very surprising that an account based on direct study of speech offers a decent estimate of some functions that apply in the early sensory processing of this kind of signal. More surprising is that the general auditory account fares so poorly in explaining the perceptual organization of speech despite assertions of its physiological plausibility.

WIREs Cognitive Science Early recognition of speech

Direct Investigations of Speech

Direct investigations of the perceptual organization of speech have included studies that examined the intimate as well as the boisterous cocktail party. In the intimate case, the organizational challenge to perception is to take the physically varied and intricately patterned acoustic effects of the speech of a single individual and to group these into a perceptual stream attributed to one source of sound. The boisterous counterpart is a competitive organizational challenge, namely, to isolate the speech stream of a single individual from the speech of other talkers, reverberation, and sounds produced by nonvocal events.

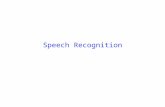

Modulation Sensitivity

In virtue of the indifference to short-term elementary acoustic characteristics, the organization of speech seems to depend instead on sensitivity to modulation directly. This has been observed in four independent cases: when time-varying sinusoids replicate the amplitude peaks of the center-frequencies of natural vocal resonances,20 when vocoded synthesis of speech in 1 kHz wide bands employs a carrier that is uniformly noisy13 or uniformly harmonic,21 and when the composition of the carrier varies arbitrarily,14 for instance, when the modulation characteristic of speech is imposed on a sample of sound produced by a collection of musical instruments (Figure 1).
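The first of these cases, sine-wave speech, can be illustrated with a minimal sketch. It assumes that frame-by-frame estimates of the center frequency and amplitude of each vocal resonance are already available; the function name `sine_wave_replica`, the frame length, the sample rate, and the three gliding tracks below are hypothetical values chosen for illustration, not measurements from the cited studies.

```python
import math

def sine_wave_replica(tracks, sample_rate=8000, frame_ms=10):
    """Sum one time-varying sinusoid per resonance track.

    tracks: list of tracks, one per resonance; each track is a list of
    (frequency_hz, amplitude) pairs, one pair per analysis frame.
    Phase is accumulated sample by sample so that each tone stays
    continuous when its frequency changes between frames.
    """
    samples_per_frame = sample_rate * frame_ms // 1000
    n_frames = len(tracks[0])
    phases = [0.0] * len(tracks)
    out = []
    for frame in range(n_frames):
        for _ in range(samples_per_frame):
            value = 0.0
            for k, track in enumerate(tracks):
                freq, amp = track[frame]
                phases[k] += 2.0 * math.pi * freq / sample_rate
                value += amp * math.sin(phases[k])
            out.append(value)
    return out

# Hypothetical tracks: two gliding tones and one steady tone (illustrative only).
n_frames = 50
tracks = [
    [(300 + 8 * i, 1.0) for i in range(n_frames)],
    [(2200 - 16 * i, 0.5) for i in range(n_frames)],
    [(2900, 0.25) for _ in range(n_frames)],
]
signal = sine_wave_replica(tracks)
```

The result contains no harmonic structure, no broadband noise, and no vocal timbre; only the pattern of frequency and amplitude change over time is carried by the tones.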

The finding that organization does not depend on the resolution and categorization of individual acoustic moments was actually anticipated by the observation that the constituents distributed through a speech spectrum are not unique to speech. Each loses its phonetic effect when excised from a speech signal and presented alone.22 An individual acoustic moment in a speech spectrum can be grouped with the physically diverse and discontinuous set of natural vocal products that issues from a talker (the whistles, clicks, hisses, buzzes, and hums that speech is made of) because its relation to the ongoing acoustic pattern is consistent with a dynamically deformable vocal origin. A corollary of this claim is that the perceptually critical attributes of the speech stream are its modulations, and not the individual vocal postures, nor moments of articulatory occlusion and release, nor the specific acoustic elements that these brief vocal acts produce. This indifference of organizational functions to the short-term spectrum of speech demotes the classically designated acoustic cue to a role of diminished importance in perception.
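The separation of a modulation from the carrier that bears it can be sketched in a deliberately simplified form. The example below treats a single band only: it estimates a slowly varying amplitude envelope by rectification and moving-average smoothing, then imposes that envelope on two unrelated carriers, one noisy and one harmonic. A vocoder of the kind described above would repeat this step in each frequency band so that the pattern across bands is preserved; the helper names `envelope` and `impose`, the window length, and the toy source signal are assumptions made for illustration.

```python
import math
import random

def envelope(signal, window):
    """Rectify the signal and smooth it with a trailing moving average."""
    rectified = [abs(x) for x in signal]
    out = []
    running = 0.0
    for i, x in enumerate(rectified):
        running += x
        if i >= window:
            running -= rectified[i - window]
        out.append(running / min(i + 1, window))
    return out

def impose(env, carrier):
    """Multiply a carrier by an envelope, sample by sample."""
    return [e * c for e, c in zip(env, carrier)]

sample_rate = 8000
n = 800

# Toy source: a 500 Hz tone that is on for the first half and silent after.
source = [math.sin(2 * math.pi * 500 * t / sample_rate) if t < n // 2 else 0.0
          for t in range(n)]
env = envelope(source, window=80)

# Two carriers with different short-term acoustic properties.
rng = random.Random(0)
noise_carrier = [rng.uniform(-1.0, 1.0) for _ in range(n)]
harmonic_carrier = [sum(math.sin(2 * math.pi * 100 * h * t / sample_rate)
                        for h in (1, 2, 3)) / 3.0
                    for t in range(n)]

noisy = impose(env, noise_carrier)
harmonic = impose(env, harmonic_carrier)
```

Both outputs rise and fall with the source even though their moment-to-moment spectra differ from the source and from each other. A single amplitude envelope is a much weaker description than the coordinated spectral modulation discussed in the text, so this is only a toy illustration of the carrier-independence idea.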

Research on modulation sensitivity in the auditory system in the past decade produced preliminary descriptions both of the physical modulation of speech spectra23 and of the modulation-contingent trigger features of neural populations.24 Although these projects have observed the capacity of auditory neurons from the thalamus to the cortex to follow spectral modulations in speech, overall the findings describe sensitivity to a broader range of acoustic properties than is typical of speech, chiefly at the rapidly changing end of spectrum modulation. Further, no study has sought to identify neural populations that are effective for an auditory contour that is criterial for speech. One significant obstacle to identifying this aspect of modulation sensitivity in any nonhuman auditory system is that there is little behavioral evidence in animals of integration of the acoustically diverse speech stream. Proof that a speech sample introduced to the auditory pathway of a ferret or a cat evokes consistent neural effects shows that its acoustic constituents are audible, but is not evidence of integration of diverse acoustic constituents into a perceptual stream, neither by sensitivity to modulation nor by other means. A recent study of spoken word recognition by a chimpanzee25 presents a single potential instance of a nonhuman animal sensitive to modulation independent of the characteristics of the carrier. If adequate control methods were to prove eventually that perceptual integration and spoken word identification do occur in this nonhuman instance, on ethical grounds the auditory characteristics of this chimp are no likelier to be calibrated physiologically than those of a human listener. The neural correlates of modulation sensitivity in the perceptual organization of speech might be identifiable grossly in humans,26 but the search for neural circuitry responsible for binding diverse components based on their characteristic modulation must wait for research methods to catch up to the question.

FIGURE 1 | Spectrographic representation (frequency against time) of four variants of the sentence, ‘Jazz and swing fans like fast music’. (a) Natural speech; (b) sine-wave speech; (c) noiseband vocoded speech; and (d) speech–music chimera.

Fast Pace of Integration

The physical modulations of speech correspond to the linguistic constituents of an utterance. Because linguistic constituents are nested, speech exhibits acoustic modulation ranging from fine to coarse temporal grain. At the slower end of this range, a breath group is typically 2 s in duration, and a clause within it about half as long. A phrase might last 500 ms, a syllable 200 ms, and a diphone (the concurrent production of a consonant and vowel) about 100 ms. It can be difficult to isolate the acoustic effects of individual segments, yet an estimate of 50 ms can be found, nonetheless, in the technical literature. Although each of these linguistic constituents must be resolved auditorily to apprehend the symbolic properties of speech, the psychoacoustic dynamic that applies is tied to the rapid fading of any auditory trace of sound. In this respect speech enjoys no special sensory status, persisting in its auditory form for 100 ms. Relative to almost every other kind of acoustic pattern, speech is familiar, which fosters a listener’s proficiency in recasting a fading trace into a more durable form. The rapid pace of sensory integration is set by this psychoacoustic limit, and a window of integration 100 ms wide is fast in comparison to the leisurely pace of entire utterances. Claims of fast integration based on acoustic analyses of speech27,28 are corroborated by a diverse set of direct measures.29–34 Although some reports favor a description of sensitivity at the leisurely pace of syllables,35–37 these findings are not consistent with the direct measures of performance, and therefore probably reflect integrative functions applied post-perceptually to linguistic properties already derived.38 The accretion of constituents superordinate to a segmental grain is an organizational function, but at this slow pace the ingredients to be integrated must already be resolved within a speech stream, much as a speech stream itself must already be resolved within an auditory scene to yield a coherent sensory sample fit to analyze.
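The durations quoted above can be set against the 100 ms window with a few lines of arithmetic. The sketch below converts each typical duration to a nominal rate, treating the rate as the reciprocal of the duration (a simplifying assumption, since real constituents vary widely in length), and lists which constituents fit within a single window. The clause is entered as 1000 ms on the reading that it is about half the 2 s breath group; the names `durations_ms`, `WINDOW_MS`, and `modulation_rate_hz` are introduced here for illustration.

```python
# Typical durations from the text, in milliseconds, coarse to fine.
durations_ms = {
    "breath group": 2000,
    "clause": 1000,   # "about half as long" as the breath group
    "phrase": 500,
    "syllable": 200,
    "diphone": 100,
    "segment": 50,
}

WINDOW_MS = 100  # width of the auditory integration window cited in the text

def modulation_rate_hz(duration_ms):
    """Nominal rate of a constituent, assuming rate = 1 / duration."""
    return 1000.0 / duration_ms

rates = {name: modulation_rate_hz(d) for name, d in durations_ms.items()}

# Constituents short enough to fall within one integration window.
within_window = [name for name, d in durations_ms.items() if d <= WINDOW_MS]

for name, d in durations_ms.items():
    label = "within window" if d <= WINDOW_MS else "exceeds window"
    print(f"{name:12s} {d:5d} ms  {rates[name]:5.1f} Hz  {label}")
```

On these figures only the diphone and the segment fall inside one window, which is consistent with the claim that syllable-paced and slower constituents must be assembled from material already resolved within the speech stream.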

Unlearned Sensitivity

Developmentally, the functions of perceptual organization are apparently unlearned, neither requiring nor admitting exposure to speech. In this respect, the grammatical functions in language, which require exposure to take shape, can be considered dissimilar from the perceptual functions that find and follow the sensory effects of speech. The youngest infants are attracted to speech before exhibiting sensitivity to linguistic structure.39 Indeed, an infant’s attention to the linguistic properties of speech rests on a developmentally prior perceptual facility to organize the sensory effects of utterances. This necessarily precedes mastery of the native language, whether the coarse grain of prosody or the fine grain of phonotactics is concerned. An infant (at 14 weeks40; at 20 weeks41) demonstrates adult-like modulation sensitivity to spatially and spectrally disaggregated acoustic constituents of the rapidly changing consonant onset of a speech signal40 despite evident indifference to phonemic properties of the native language. An infant at this age is capable of finding speech in the nursery, yet it is unable to distinguish utterances on linguistically governed criteria, for instance, the phonotactics and prosodic attributes that distinguish the native language from utterances in as yet unencountered languages. There is some evidence that this acuity to the coherence of speech signals is especially protective in noisy environments during childhood42 and dissipates with age. Such a developmental course is consistent with an explanation of perceptual organization that is native, or very nearly so.

Nonsymbolic

Direct measures reveal that integration occurs preliminary to phonetic analysis, for which reason its character can be understood as nonsymbolic. These methods have used competitive listening conditions, in which the perceptual organization of speech is disrupted by an acoustic lure, depending on its pattern. An extraneous acoustic signal that masks a speech stream in whole or in part can block the perception of speech, but this form of competition, which simply obscures significant portions of the spectrum, is not especially informative about integrating functions. When both speech and lures are well above threshold and resolvable, it is possible to determine whether the pattern of the lure can compete for attention without also masking the speech. Indeed, a competitor is most effective when its frequency variation imitates a vocal resonance, although it need not be intelligible itself to compete for organization with acoustic components that are capable of forming an intelligible utterance.43,44 Ineffective competitors have exhibited natural amplitude variation in the absence of natural frequency variation, or frequency variation inconsistent with a vocal source. A dose–response effect was also reported in which the more speechlike the frequency variation of a competitor, the more it drew attention from the components of a speech signal.45 The property shared by the effective cases was frequency modulation typical of speech, despite remaining unintelligible, phonetically. Although amplitude co-modulation has figured prominently in auditory treatments of sensory integration, modulation at the pace of syllables is apparently ineffective in promoting the coherence of speech.

Indifference to Short-Term Acoustic Characteristics

Perhaps the signature of the integrative challenge in the case of speech is the complexity and heterogeneity of the spectrotemporal pattern. Many natural sound sources exhibit nonlinear acoustic effects. Among these is the vocal apparatus, which is capable of issuing a wide range of acoustic properties. Modeling of acoustic phonetics46 has shown that small differences in configuration of the articulators can have large and discontinuous acoustic effects, producing spectrotemporal patterns in which resonances emerge and break up, for instance, when the release of an occluded tract produces a shock excitation, when narrowing produces frication, or when coupling of the oral and nasal pharynges produces discontinuous resonance and anti-resonance. Aperiodic effects are produced throughout the tract, from the larynx to the lips, and can be concentrated within narrow bands or spread across broad frequency ranges. Overall, speech is produced as a collection of different kinds of sounds rather than a single kind. Because the patterned spectrum of an utterance exhibits neither physical continuity, nor common modulation, nor a simple composite of similar acoustic elements, the organizational functions that find and follow speech must apprehend the coordinate if heterogeneous patterned variation. It is uncertain whether this is accomplished by a specific function that is reciprocal to the mechanics of vocalization. Speech is a well examined instance of mechanical sound production, yet no evidence suggests that the characteristics of the perceptual organization of speech must be unique among nonlinear sound sources. Whether these functions reflect a generic organizational function adequate for dynamically deformable sound sources, or a function unique to speech, can be answered by the relevant investigations, which have not yet occurred. Accordingly, there is little to report about the neural mechanisms that exhibit functional characteristics matched to these dynamic properties.

Requiring Attention

In ordinary listening, the sound of speech engages a listener’s attention by virtue of its vocal timbre. Naïvely, this might seem to occur without effort or intention, but this ease is misleading. Studies that have used sine-wave speech to decouple vocal timbre from the effectiveness of spectral modulation reveal that perceptual organization is neither automatic nor obligatory (see Ref 47), contradicting the description of the perceptual module hypothetically dedicated to speech.48 The finding that the perceptual organization of speech requires attention also opposes the conceptualization of perception by means of modular pandemonium49 and its variants. These models share an assertion of passive organization and analysis. Descriptions of attention have rested on the notions of selection and dedication of specific cognitive resources. Alternatively, automatic cognitive functions occur neither with selection, nor with commitment of specific functional resources, nor with effort.

In the perceptual organization of natural speech, an impression of vocal timbre typically evokes attention to the time-varying spectrum as sound issuing from a spoken source, and a listener attends to the evolving sound pattern as speech. When the resonances are replaced with sinusoids, this creates an initial impression in a naïve listener of unrelated tones varying asynchronously in pitch and loudness. The tonal auditory impression of sine-wave speech is insufficient to summon attention to the pattern as speech. In this instance, the requisite attentional set can be supplied extrinsically. An instruction to listen to sine-wave replicas of spoken words as a kind of synthetic speech permits a listener to group the tones into an effective if anomalous vocal stream. Neural measures show that this condition (a spectral pattern adequately structured to evoke a phonetic impression in combination with attention) differed from contrasting cases in which the acoustic structure was inadequate, phonetically, or when the acoustic structure was effective but listeners did not attend to the incident pattern as speech.

A Generic Auditory Account of Organization

Despite the evidence accumulating from direct studies of the perceptual organization of speech, a generic approach to auditory perceptual organization remains firmly established. At the heart of the conceptualization is the notion of similarity appropriated from Gestalt studies of visual and sometimes auditory grouping. In vision, the original demonstrations of grouping by similarity, or proximity, or closure, etc., relied on instances drafted with ink on paper. In audible examples of grouping, melodies were described as played on a musical instrument. More contemporary expressions of similarity grouping have invoked the motivation to understand the perceptual resolution of sound into streams, each issuing from an acoustic source in a worldly scene. In contrast to the lofty ideal, the empirical development of the generic auditory account of perceptual organization has relied on arbitrary test patterns produced with oscillators and noise generators, largely eschewing the direct analysis of auditory scenes produced by actual mechanical sources of sound. As a consequence of these empirical practices, the generic auditory account includes an automatic mechanism that imposes an analysis of the auditory spectrum into its low-level features, and a grouping function that sorts features into segregated streams of similar features. Binding occurs across frequency to group simultaneously occurring features, and binding occurs over time to fuse features into temporal streams. Physiological evidence of some kinds of grouping has conferred biological plausibility on this account.

However adequately this hypothetical explanation might fare in an ideal world of audiometric test patterns, an account devoted to similarity as the fundamental principle of grouping cannot be effective in explaining the perceptual coherence of a sound source as acoustically diverse as speech. Nonetheless, the generic account has been appealing because the hypothetical automaticity of its action and the simplicity of its grouping criteria seemed a good hypothetical fit to the low-level perceptual function of organization. Wholly apart from the claims of research on speech against the adequacy of this account, more specific counterevidence has undermined the description.

A serious challenge to the legitimacy of the generic auditory account of organization stems from a series of methodological investigations of the research paradigm used all but exclusively to establish the phenomena of grouping in human listeners. In these studies,50–53 the conventional method of inducing the formation of auditory groups was used, the cyclical repetition of a series of acoustic elements. For example, the perceptual evidence that organization tends to depend on the similarity in frequency among a set of tones is commonly observed in the subjective impressions of the order of a pattern of repeating tones. Evidence of perceptual organization of a single series of tones into two streams depended on the perceptual insensitivity to the intercalation order between streams, while within a stream of similar tones order was resolved veridically. These original findings led to a claim on general principle that grouping by similarity was automatic, fast and obligatory.

In contrast to the customary premise of the Gestalt-derived auditory account, recent projects found without exception that streams of reduplicated tones which formed along similarity criteria were slow to build. Converging measures exposed the fact that similarity-based streams were far from automatic in forming. When attention was drawn away from a stream that was already established, the perceptual state reset to an ungrouped form, indicating that cognitive effort is required to form streams even according to the similarity of arbitrarily composed elements. This disproved the long-standing claim that grouping by similarity is a primitive peripheral auditory mechanism acting rapidly, automatically, and effortlessly. Moreover, neurological patients exhibiting auditory spatial neglect did not establish auditory groups in the neglected hemifield. Overall, grouping failed to occur or was lost when attention was diverted from a tone stream by an audible or a visible distraction, or was simply unavailable due to neurological symptoms. In light of these reports, it is difficult to sustain the claim that perceptual organization includes a first step in which a fast, primitive, automatic function composes streams by auditory sensory similarity, whether for speech or other sounds. Direct performance measures of the perceptual organization of speech arguably offer a more realistic gauge of perceptual organization of complex sounds at this juncture.

Multimodal Perceptual Organization of Speech

Studies revealed long ago that intelligibility improves relative to a unimodal case when a talker is both visible and audible. From the perspective of organization, the audiovisual circumstance has been conceptualized in two distinct ways. In one, perceptual organization applies within each modality, independently resolving the auditory and visual samples of the source of speech, and analysis of the linguistic properties proceeds to conclusion before sensory combination or alignment occurs. This conceptualization applies well to the familiar phenomenon of the McGurk effect.54 In that circumstance, a single syllable is presented for identification audiovisually. A limited response set promotes low uncertainty, and the experiment varies the extent of discrepancy, phonemically, between the visible and the audible display. Under such circumstances, perceivers report compromise between, for instance, a visually presented [ga] and an auditorily presented [ba], reporting [da]. Often, the syllable evoked in conditions of audiovisual discrepancy cannot be distinguished from a syllable perceived in consistent conditions. In some cases, incomplete compromise between the audible and visible rivals is reported. Sometimes, the conflict between sensory streams results in an impression that even violates the phonotactics of the language ([pta]). Impressions evoked in such presentations are both irresistible and primary, that is, impossible to attribute, subjectively, to rivalry between visible and audible experience of the spoken syllable. Because of this, some55 have claimed that this testing paradigm expressed the impenetrability to belief and indifference to sensory qualities that define a specialized perceptual module devoted to speech.

Other empirical phenomena of audiovisual speech perception have required a different conceptualization. In these reports, audiovisual integration is necessary for analysis, for which reason this condition is not amenable to an account of organization of each sensory stream in isolation from the other.56

To take an example,57 when audible properties of speech were taken from electroglottograph signals, these provided an impression of variation in vocal pitch, but when combined with a visible face producing fluent speech, the same auditory samples offered correlates of the voicing contrast and perhaps a bit of emphatically expressive laryngealization. Presumably, the visible face was intelligible on its own only intermittently, especially considering that the listeners were not expert speechreaders. Nonetheless, the combination was readily intelligible, evidence that intersensory combination was a condition on perceptual analysis and, therefore, that primary organization necessarily included both senses.

When auditory and visual organization and analysis occur in parallel, the descriptive challenge of an account of intermodal organization is obviated. Unimodal organization is sufficient, because binding and phonetic perception occur independently in each modality.58 To explain the cases in which intermodal organization is requisite, though, the descriptive challenge is significant because of the incommensurate dimensionality of vision and hearing. Indeed, the only dimension common to visual and auditory samples is temporal, and empirical measures show clearly that temporal coincidence is neither necessary nor sufficient for intermodal binding to occur.59 This finding is a consistent observation whether the phenomenal objects are flashes of light and audible clicks, or speech. Although this research encourages a model of intermodal perceptual organization that includes a fast and nonsymbolic combination of sensory streams as a condition of phonetic analysis, neither the perceptual functions nor the functional neuroanatomy are well described.

CONCLUSION

Most accounts of speech perception begin with an analysis of a speech stream. In this assumption, the hypotheses that follow must neglect the fundamental function of perceptual organization. Before perceptual analysis can resolve sensory properties into the symbolic entities on which recognition and comprehension depend, a prior function must find speech and follow its evolving sensory effects. In so doing, organization provides a coherent sensory sample appropriate to analyze. A complete description of the comprehension of spoken messages depends, therefore, on the resolution of a speech stream from its characteristic modulation. Although this function is apparently nonsymbolic in nature, without its action perceptual analysis can only apply indiscriminately to auditory samples, without disaggregating the sensory effects of speech and other sound sources.

The characterization given here has portrayed organization as prior and analysis as consequent, although this logical contrast belies the concurrency of these functions in practice. Organization proceeds at a rapid pace tied to the narrow window of integration; analysis projects auditory types into the symbolic properties that compose phonemes, syllables, words, and larger constituents by which meaning is conveyed. By this dovetailed relation of organization and analysis, the fragile auditory properties of speech become durable. Although a faded sensory sample of speech is lost to subsequent reorganization or reanalysis, the readily memorable results of linguistic analysis persist. Reimagining a talker’s message must depend on this hardy representation of speech in the absence of a surviving trace of the original incident spectra.

What is the likely shape of an ultimate account of the early sensory functions in speech perception? The benchmarks from direct investigations of speech point to a perceptual resource that operates without much exposure, for it is evident in early infancy; it has a rapid pace, obliged by the decay of ephemeral sensory traces in a tenth of a second; it is widely tolerant of distortion, and apparently indifferent to the absence of short-term characteristics of natural vocalization; it requires attention, and is not characteristic of passive hearing; and, in the main, it is keyed to spectrotemporal modulation consistent with a deformable and nonlinear sound source. As with many accounts in contemporary research within cognitive neuroscience, the role of attention is a slumbering giant in this conceptualization. Many contemporary accounts of sensory functions and their cognitive effects presuppose attention, and portray a functional architecture within a perceiver’s undifferentiated effort. New research has the potential to identify different types of attention and to establish principled grounds for the observation that a listener’s belief determines whether a phonetically adequate sine-wave pattern is experienced as a set of contrapuntal tones or as an utterance. The contrast between hearing and listening also hinges on attention of some kind, and the validity of studies of passive exposure to speech, whether human or animal ears are enlisted, depends on an adequate account of attention no less than an account of the dimensions of audibility.

Sensitivity to complex spectrotemporal modulation also offers significant potential for investigation. Although psychoacoustics and electrophysiology identified sensitivity to simple forms of modulation long ago, the basis for integration across wide frequency spans typical in speech (at least 6 kHz) is not well understood. Neither is there a detailed account of rapid integration despite discontinuity and dissimilarity. Because this function does not require extensive exposure to language for its inception, it is doubtful that it reflects an aspect of perception that is dedicated to speech in a specific language. But the singular focus of auditory research on sounds produced by linear emitters as a surrogate for mechanical sound sources denies us a generic account of perceptual organization of broadband, physically heterogeneous sound streams. Extending empirical practice to include natural sound sources might provide the principles underlying the perceptual organization of speech.

REFERENCES

1. Remez RE. Spoken expression of individual identity and the listener. In: Morsella E, ed. Expressing Oneself/Expressing One’s Self: Communication Cognition Language and Identity. New York, NY: Psychology Press; 2010, 167–181.

2. Schnupp J, Nelken I, King A. Auditory Neuroscience: Making Sense of Sound. Cambridge, MA: MIT Press; 2011.

3. Liberman AM, Cooper FS. In search of the acoustic cues. In: Valdman A, ed. Papers in Linguistics and Phonetics to the Memory of Pierre Delattre. The Hague: Mouton; 1972, 329–338.

4. Idemaru K, Holt LL. Word recognition reflects dimension-based statistical learning. J Exp Psychol Hum Percept Perform 2011, 37:1939–1956.

5. Cherry EC. Some experiments on the recognition of speech with one and two ears. J Acoust Soc Am 1953, 25:975–979.

6. Goldstein L, Fowler CA. Articulatory phonology: A phonology for public language use. In: Meyer AS, Schiller NO, eds. Phonetics and Phonology in Language Comprehension and Production: Differences and Similarities. Berlin: Mouton de Gruyter; 2003, 1–53.

7. Holt LL, Lotto AJ. Speech perception within an auditory cognitive science framework. Curr Dir Psychol Sci 2008, 17:42–46.

8. Kuhl PK. Early language acquisition: phonetic and word learning neural substrates and a theoretical model. In: Moore B, Tyler L, Marslen-Wilson W, eds. The Perception of Speech: From Sound to Meaning. Oxford: Oxford University Press; 2009, 103–131.

9. Massaro DW. From multisensory integration to talking heads and language learning. In: Calvert GA, Spence C, Stein BE, eds. The Handbook of Multisensory Processes. Cambridge, MA: MIT Press; 2004, 153–176.

10. Poeppel D, Monahan PJ. Speech perception: Cognitive foundations and cortical implementation. Curr Dir Psychol Sci 2008, 17:80–85.

11. Klatt DH. Review of selected models of speech perception. In: Marslen-Wilson W, ed. Lexical Representation and Process. Cambridge, MA: MIT Press; 1989, 169–226.

12. Remez RE. Perceptual organization of speech. In: Pisoni DB, Remez RE, eds. The Handbook of Speech Perception. Oxford: Basil Blackwell; 2005, 28–50.

13. Shannon RV, Zeng F-G, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science 1995, 270:303–304.

14. Smith ZM, Delgutte B, Oxenham AJ. Chimaeric sounds reveal dichotomies in auditory perception. Nature 2002, 416:87–90.

15. Bregman AS. Auditory Scene Analysis. Cambridge, MA: MIT Press; 1990.

16. Darwin CJ. Listening to speech in the presence of other sounds. Philos Trans R Soc B 2008, 363:1011–1021.

17. Gaver WW. What in the world do we hear? An ecological approach to auditory event perception. Ecol Psychol 1993, 5:285–313.

18. Young ED, Sachs MB. Representation of steady-state vowels in the temporal aspects of the discharge patterns of populations of auditory-nerve fibers. J Acoust Soc Am 1979, 66:1381–1403.

19. Blackburn CC, Sachs MB. The representation of the steady-state vowel sound /e/ in the discharge patterns of cat anteroventral cochlear nucleus neurons. J Neurophysiol 1990, 63:1191–1212.

20. Remez RE, Rubin PE, Pisoni DB, Carrell TD. Speech perception without traditional speech cues. Science 1981, 212:947–950.

21. Dorman MF, Loizou PC, Rainey D. Speech intelligibility as a function of the number of channels of stimulation for signal processors using sine-wave and noise-band outputs. J Acoust Soc Am 1997, 102:2403–2411.

22. Rand TC. Dichotic release from masking for speech. J Acoust Soc Am 1974, 55:678–680.

23. Elliott TM, Theunissen FE. The modulation transfer function for speech intelligibility. PLoS Comput Biol 2009, 5:e1000302. doi:10.1371/journal.pcbi.1000302.

24. Miller LM, Escabi MA, Read HL, Schreiner CE. Spectrotemporal receptive fields in the lemniscal auditory thalamus and cortex. J Neurophysiol 2002, 87:516–527.


25. Heimbauer LA, Beran MJ, Owren MJ. A chimpanzee recognizes synthetic speech with significantly reduced acoustic cues to phonetic content. Curr Biol 2011, 21:1210–1214.

26. Liebenthal E, Binder JR, Piorkowski RL, Remez RE. Short-term reorganization of auditory analysis induced by phonetic experience. J Cogn Neurosci 2003, 15:549–558.

27. Lashley KS. The problem of serial order in behavior. In: Jeffress LA, ed. Cerebral Mechanisms in Behavior: The Hixon Symposium. New York, NY: John Wiley & Sons; 1951, 112–136.

28. Liberman AM, Cooper FS, Shankweiler DP, Studdert-Kennedy M. Perception of the speech code. Psychol Rev 1967, 74:421–461.

29. Baddeley AD. Working Memory. Oxford: Clarendon Press; 1986.

30. Fu Q-J, Galvin JJ, III. Recognition of spectrally asynchronous speech by normal-hearing listeners and Nucleus-22 cochlear implant users. J Acoust Soc Am 2001, 109:1166–1172.

31. Haggard M. Temporal patterning in speech: The implications of temporal resolution and signal-processing. In: Michelson A, ed. Time Resolution in Auditory Systems. Heidelberg: Springer Verlag; 1985, 215–237.

32. Huggins AWF. Distortion of the temporal pattern of speech: Interruption and alternation. J Acoust Soc Am 1964, 36:1055–1064.

33. Pisoni DB. Auditory and phonetic memory codes in the discrimination of consonants and vowels. Percept Psychophys 1973, 13:253–260.

34. Remez RE, Ferro DF, Wissig SC, Landau CA. Asynchrony tolerance in the perceptual organization of speech. Psychon Bull Rev 2008, 15:861–865.

35. Ghazanfar AA, Chandrasekaran C, Morrill RJ. Dynamic rhythmic facial expressions and the superior temporal sulcus of macaque monkeys: Implications for the evolution of audiovisual speech. Eur J Neurosci 2010, 31:1807–1817.

36. Greenberg S, Arai T, Grant K. The role of temporal dynamics in understanding spoken language. In: Divenyi P, Greenberg S, Meyer G, eds. Dynamics of Speech Production and Perception. Amsterdam: IOS Press; 2006, 171–190.

37. Saberi K, Perrott DR. Cognitive restoration of reversed speech. Nature 1999, 398:760.

38. Peelle JE, Gross J, Davis MH. Phase-locked responses to speech in human auditory cortex are enhanced during comprehension. Cerebral Cortex. Submitted for publication.

39. Houston DM. Speech perception in infants. In: Pisoni DB, Remez RE, eds. Handbook of Speech Perception. Oxford: Basil Blackwell; 2005, 417–448.

40. Eimas P, Miller J. Organization in the perception of speech by young infants. Psychol Sci 1992, 3:340–345.

41. Newman RS, Jusczyk PW. The cocktail party effect in infants. Percept Psychophys 1995, 58:1145–1156.

42. Nittrouer S, Tarr E. Coherence masking protection for speech in children and adults. Attention Percept Psychophys 2011, 73:2606–2623.

43. Remez RE, Rubin PE, Berns SM, Pardo JS, Lang JM. On the perceptual organization of speech. Psychol Rev 1994, 101:129–156.

44. Roberts B, Summers RJ, Bailey PJ. The perceptual organization of sine-wave speech under competitive conditions. J Acoust Soc Am 2010, 128:804–817.

45. Remez RE. The interplay of phonology and perception considered from the perspective of perceptual organization. In: Hume E, Johnson K, eds. The Role of Speech Perception in Phonology. San Diego, CA: Academic Press; 2001, 27–52.

46. Stevens KN. The quantal nature of speech: evidence from articulatory-acoustic data. In: David EE, Denes PB, eds. Human Communication. A Unified View. New York, NY: McGraw-Hill; 1972, 51–66.

47. Joseph JS, Chun MM, Nakayama K. Attentional requirements in a ‘preattentive’ feature search task. Nature 1997, 387:805–807.

48. Fodor JA. The Modularity of Mind. Cambridge, MA: MIT Press; 1983.

49. Zevin JD, Yang JF, Skipper JI, McCandliss BD. Domain general change detection accounts for “dishabituation” effects in temporal-parietal regions in fMRI studies of speech perception. J Neurosci 2010, 30:1110–1117.

50. Carlyon RP, Cusack R, Foxton JM, Robertson IH. Effects of attention and unilateral neglect on auditory stream segregation. J Exp Psychol Hum Percept Perform 2001, 27:115–127.

51. Carlyon RP, Plack CJ, Fantini DA, Cusack R. Cross-modal and non-sensory influences on auditory streaming. Perception 2003, 32:1393–1402.

52. Cusack R, Carlyon RP, Robertson IH. Auditory midline and spatial discrimination in patients with unilateral neglect. Cortex 2001, 37:706–709.

53. Cusack R, Deeks J, Aikman G, Carlyon RP. Effects of location frequency region and time course of selective attention on auditory scene analysis. J Exp Psychol Hum Percept Perform 2004, 30:643–656.

54. McGurk H, MacDonald J. Hearing lips and seeing voices. Nature 1976, 264:746–748.

55. Liberman AM. The relation of speech and writing. In: Frost R, Katz L, eds. Orthography Morphology and Meaning. New York, NY: Elsevier Science Publishers; 1992, 167–178.

56. Green KP. The use of auditory and visual information during phonetic processing: Implications for theories of speech perception. In: Campbell R, Dodd B, Burnham D, eds. Hearing by Eye Part II: Advances in the Psychology of Speech Reading and Audiovisual Speech. East Sussex: Psychology Press; 1998, 3–25.


57. Rosen SM, Fourcin AJ, Moore BCJ. Voice pitch as an aid to lipreading. Nature 1981, 291:150–152.

58. Massaro DW, Stork DG. Speech recognition and sensory integration: a 240 year old theorem helps explain how people and machines can integrate auditory and visual information to understand speech. Am Sci 1998, 86:236–244.

59. de Gelder B, Bertelson P. Multisensory integration perception and ecological validity. Trends Cogn Sci 2003, 7:460–467.

FURTHER READING

Pardo JS, Remez RE. The perception of speech. In: Traxler M, Gernsbacher MA, eds. The Handbook of Psycholinguistics, 2nd ed. New York, NY: Academic Press; 2005, 201–248.

Remez RE, Rubin PE, Berns SM, Pardo JS, Lang JM. On the perceptual organization of speech. Psychol Rev 1994, 101:129–156.
