DESCRIPTION: State the application’s broad, long-term...

Transcript of DESCRIPTION: State the application’s broad, long-term...

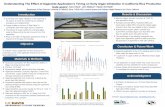

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.INTRODUCTION

Overview. This application is a first resubmission. The original application proposed three inter-related sub-projects concerning modeling and neuroimaging of (i) the brain regions involved in speech sound sequence generation, (ii) the neural processes underlying the learning of new sound sequences, and (iii) problems with this sequencing circuitry that may underlie stuttering. The reviewers of the original application generally agreed that the proposed research was highly innovative, was of high potential significance, and was theoret -ically well-motivated. We have therefore left the Background and Significance intact, as well as the theoretical description of the proposed model and the theoretical motivation for the fMRI experiments (with the exception of the removal of the stuttering component of the project, as described below). The reviewers’ primary con-cerns were that the application was too ambitious, and that (perhaps as a result of the large scope) important details concerning the experiments were lacking, as were descriptions of how the model simulations would be compared to the experimental results. Our revisions have therefore focused on improvements to these as-pects of the proposal, as detailed in the following paragraphs. Changes to the application text are indicated with vertical bars in the left margin of the Research Plan.

Project was too ambitious as originally proposed. The reviewers generally felt that the project as originally proposed was too ambitious. Reviewer 3 explicitly referred to the stuttering work, which formed over 1/3 of the original research plan, as overextending the project. To address these concerns, we have removed the stuttering component of the proposed project and used the resulting space to expand the remainder of the re -search design and methods. Removal of the stuttering component also addresses the concern of Reviewers 1 and 3 that this component may be confounded by treatment history and/or compensatory strategies of the stuttering subjects. Removal of the stuttering component included removal of three fMRI experiments and three modeling projects.

Simulating a BOLD signal from the model and comparing model activations to fMRI results. The re-viewers generally felt that the description of how the model simulations will be compared to the results of fMRI experiments was not sufficiently detailed. To address this concern, we have added a section entitled “Gener-ating simulated fMRI activations from model simulations” (Section C.2), in which we detail how we simulate fMRI data from the models. Our method is based on the most recent results concerning the relationship be -tween neural activity and the blood oxygen level dependent (BOLD) signal measured with fMRI, as detailed in this section. The section also includes a treatment of how inhibitory neurons are modeled in terms of BOLD effects, thus addressing Reviewer 3’s concern about this issue. Additional text describing how we will com-pare the model fMRI activations to the results of our fMRI experiments has been added after the descriptions of each of the fMRI experiments.

fMRI power analysis. Reviewer 2 expressed concern regarding how many subjects would be needed to ob-tain significant results in our fMRI experiments. To address this concern, we have added a subsection entitled “fMRI power analysis” at the beginning of section D that justifies the subject population sizes proposed in the fMRI experiments. In this analysis, we have considered the possibility that many trials may contain production errors such as insertions of extra phonemes, etc. (as pointed out by Reviewers 2 and 3). When determining the number of subjects needed to obtain significant results, we have very conservatively assumed that as many as 30% of the trials in the experiments in Section D.1 and 50% of the trials in the experiments in Sec-tion D.2 may need to be removed from the analysis due to such errors. These assumed error rates are much higher than those obtained in our previous fMRI experiment on sound sequencing described in Section C.4 (which had an average error rate of 14.4% for the most difficult utterances) and our pilot studies for the learn -ing experiments in D.2 (which had an average error rate of 21%).

Effective connectivity analyses. Reviewer 1 noted that effective connectivity analyses should be more em-phasized in the proposal since they may provide a valuable means for testing model predictions. We have accordingly added a sub-section called “Effective connectivity analysis” in Section D of the proposal. We have also added more explicit descriptions of how effective connectivity analysis will be used to test predictions in the fMRI experiments.

Hypothesis tests. The reviewers also felt that descriptions of how specific hypotheses would be tested were lacking. We have thus added text explicitly stating the hypotheses to be tested and the manner in which they will be tested. To address reviewer concerns that it was not clear what we would conclude if our hypotheses are not supported, we have added descriptions of alternative interpretations. It is important to note that, al -though most of the hypotheses to be tested are embodied by our proposed neural model, they are not simply our view, but instead reflect current hypotheses proposed by many other researchers studying non-speech

PHS 398 (Rev. 09/04) Page> 14

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.motor control, as detailed in the theoretical background portions of Section D. Indeed, these hypotheses were the primary forces that shaped the proposed model. Thus our experiments not only test our particular model, but they also test whether these non-speech motor control theories, which generally arise from the animal lit -erature, generalize to the neural processes underlying speech production in humans. If the hypotheses are supported by our experimental results, then we have gained important information regarding similarities be-tween the brain mechanisms underlying speech in humans and non-speech motor behaviors in animals. If the hypotheses are not supported, we have gained equally important information regarding how the neural bases of speech motor control differ from other forms of motor control.

Potential problems with the fMRI experiments of novel sound sequence learning. Two reviewers ex-pressed concern regarding the design of fMRI experiments involving novel speech sound sequence learning in Section D.2, in particular the experiment involving generation of novel sub-syllabic sequences that are phonotactically illegal or highly unlikely in English. Specifically, the reviewers pointed out that subjects might insert additional phonemes or change some consonant clusters into more familiar forms, which would make these utterances easier to produce. Reviewer 1 also noted that the original experiment, which involved learn -ing within a single fMRI scanning session, would necessarily be limited to very early stages of learning. To address these concerns, we have modified the design of the fMRI experiments in Section D.2. The experi -ments now involve learning in two training sessions that take place on different days, performed before the fMRI experiment. Furthermore, only subjects that show significant learning over the training sessions (as measured by improvements in error rate, duration, and reaction time) will be used in the corresponding fMRI experiment. Finally, all trials in the fMRI experiment will be checked for production errors, and any trials con-taining errors will be removed from subsequent data analysis.

Timing issues. Reviewer 3 stated that it was not clear how much priority is being given to modeling timing. We have provided text clarifying this in several places. The models to be developed will make systematic pre-dictions regarding latencies and other aspects of the timing of speech sequences. In particular, we have em-phasized that the modeling framework adopted (competitive queuing, CQ) is the only one that has been shown able to explain not just latency patterns for correct performances but also the latencies of errors in a recent comparison of four classes of sequencing models, conducted by Farrell & Lewandowsky (2004).

Computational framework. Reviewer 3 also expressed concern about missing details regarding software, hardware, etc. used to implement the proposed computational model. A sub-section called “Computational framework” has been added to the beginning of Section D to address this concern.

Miscellaneous concerns. Two reviewers were concerned with potential confounds in the jaw clench condi-tion in fMRI Experiment 1 in Section D.1. This condition has been removed in the revised experiment.

.

PHS 398 (Rev. 09/04) Page> 15

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.A. SPECIFIC AIMSThe primary aim of this project is to develop and experimentally test a neural model of the brain interactions underlying the production of speech sound sequences. In particular, we will focus on several brain regions thought to be involved in motor sequence production, including the lateral prefrontal cortex, lateral premotor cortex, supplementary motor area (SMA), pre-SMA, and associated subcortical structures (basal ganglia, cerebellum, and thalamus). Each of these brain regions will be modeled mathematically with equations gov -erning neuron activities, and the interactions between the regions will be modeled with equations governing synaptic strengths. The resulting model will be implemented in computer software and integrated with an ex -isting neural model of speech sound production, the DIVA1 model (Guenther, 1994, 1995; Guenther et al., 1998, in press), to allow generation of simulated articulator movements (along with corresponding acoustic signals) for producing speech sound sequences. The results of these computer simulations will be compared to existing behavioral and functional neuroimaging data to guide model development. We also propose 4 new functional magnetic resonance imaging (fMRI) experiments designed to test key hypotheses of the model, to test between the model and competing hypotheses, and to fill in gaps in the existing neuroimaging literature.

The project is divided into two separate but highly integrated subprojects whose aims are as follows:(1) Creating and testing a neural model of speech sequence production. The primary aim of this subpro-ject is to develop a model of the neural circuits involved in the properly ordered and properly timed production of speech sound sequences, such as a sequence of phonemes, syllables, and words making up a sentence. The model will be implemented in computer software and integrated with the DIVA model. The DIVA model describes the brain mechanisms responsible for producing individual speech sounds, whereas the model de-veloped in this proposal describes the “higher-level” brain mechanisms involved in representing a sequence of sounds and determining which sound in the sequence to produce next (sequencing), as well as when to produce it (initiation). Thus the output of the current model essentially acts as input to the DIVA model, which then commands the sequence of articulator movements needed to produce each sound. We also pro-pose two speech production fMRI experiments which test key hypotheses of our preliminary model: (i) the processing of syllable “frames” by the SMA/pre-SMA and “content” by lateral premotor areas, and (ii) the exis-tence of a working memory representation for speech sound sequences in the inferior frontal sulcus. Simula -tions of the model performing the same speech tasks as subjects in the fMRI experiments will be run, and the results of these simulations will be compared to results from the fMRI studies to test key aspects of the model.(2) Investigating the learning of new speech sequences. The primary aim of this subproject is to further develop the model created in Subproject 1 to incorporate the effects of practice on the neural circuits underly -ing speech sound sequence generation. In two modeling projects, we will model learning effects as changes in synaptic strengths in two subcortical structures: the basal ganglia and the cerebellum. This work will be guided by the existing literature on learning of motor sequences, and we propose two behavioral experiments and two corresponding fMRI experiments to test hypotheses concerning learning to quickly and accurately produce novel supra-syllabic sequences (multi-syllable utterances involving novel combinations of known syllables) and sub-syllabic sequences (new syllables consisting of infrequent combinations of phonemes).

We believe our integrated approach of computational neural modeling and functional brain imaging will provide a clearer, more mechanistic account of the neural processes underlying speech production in nor-mal speakers and individuals with disorders affecting speech sound initiation and sequencing. In the long term, we believe this improved understanding will aid in developing better treatments for these disorders. B. BACKGROUND AND SIGNIFICANCECombining neural models and functional brain imaging to understand the neural bases of speech. Re-cent years have witnessed a large number of functional brain imaging experiments studying speech and lan-guage, and much has been learned from these studies regarding the brain mechanisms underlying speech and its disorders. For example, functional magnetic resonance imaging (fMRI) studies have identified the cor-tical and subcortical areas involved in simple speech tasks (e.g., Hickok et al., 2000; Riecker et al, 2000a,b; Wise et al, 1999; Wildgruber et al., 2001) as well as more complex language tasks (e.g., Dapretto & Bookheimer, 1999; Kerns et al., 2004; Vingerhoets et al., 2003). However these imaging experiments do not, by themselves, answer the question of what important function, if any, a particular brain region may play in speech. For example, activity in the anterior insula has been identified in numerous speech neuroimaging studies (Wise et al, 1999; Hickok et al., 2000; Riecker et al., 2000a), but much controversy still exists con-cerning its particular role in the neural control of speech (Dronkers, 1996; Ackermann & Riecker 2004; Hillis et al, 2004; Shuster & Lemieux, 2005). A better understanding of the exact roles of different brain regions in speech requires the formulation of computational neural models whose components model the computa-

1 DIVA stands for Directions Into Velocities of Articulators, which describes a central aspect of the model’s control scheme.

PHS 398 (Rev. 09/04) Page> 16

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.tions performed by individual brain regions, as well as the interactions between these regions (see Horwitz & Braun, 2004; Husain et al., 2004; Tagamets & Horwitz, 1997; Fagg & Arbib, 1998 for other examples of this approach). In the past decade we have developed one such model of speech production called the DIVA model (Guenther, 1994, 1995; Guenther et al., 1998, in press). The model’s components correspond to re -gions of the cerebral cortex and cerebellum, and they consist of modeled neurons whose activities during speech tasks can be measured and compared to the brain activities of human subjects performing the same task (e.g., Guenther et al., in press, included in Appendix materials for this application). These neurons are connected by adaptive synapses that become tuned during a babbling process as well as with continued practice with a speech sound. Computer simulations of the model have been shown to provide a unified ac-count for a wide range of observations concerning speech acquisition and production, including data concern-ing the kinematics and acoustics of speech movements (Callan et al., 2000; Guenther, 1994, 1995; Guenther et al., 1998, in press; Guenther and Ghosh, 2003; Perkell et al., 2000) as well as the brain activities underly -ing speech production (Guenther et al., in press). The DIVA model computes articulator movement trajecto-ries for producing individual speech sounds that are presented to it by the modeler. Importantly, however, the DIVA model does not model the brain regions and computations that are responsible for initiating and se-quencing of speech sounds2. Understanding the computations performed by these brain regions could pro-vide important insight into a number of important communication disorders that are characterized by their ap-parent malfunctions.

In this application we propose to develop a new model that identifies the neural computations underly -ing the initiation and sequencing of speech sounds during production. For clarity, we will refer to this model as the sequence model in this grant application. This model addresses several brain regions not treated in the DIVA model, including the supplementary and pre-supplementary motor areas, ventrolateral prefrontal cortex, and basal ganglia. These brain regions are believed to be involved in the initiation and sequencing of motor actions and appear to functional abnormally in several communication disorders (see Sequencing and initia-tion communication disorders below). Furthermore we propose fMRI studies of speech initiation and sequenc-ing designed to test and refine this neural model. The “neural” nature of the model’s components makes pos -sible direct comparisons between the model’s cell activities and the results of neuroimaging experiments. The resulting model will provide a computational account of normal speech mechanisms, and it will serve as a the-oretical framework for investigating communication disorders involving malfunctions in speech initiation and sequencing, thus aiding in the development of better treatments/prostheses for these disorders.Sequencing in motor control and language. A sine qua non of speech production is our ability to learn and perform many sequences defined over a relatively small set of elements. Behaviorist theories postulated that sequences are produced by sequential chaining, in which associative links allowed early responses in a se-quence to elicit later ones. Recurrent neural network models (e.g., Elman, 1995; Dominey, 1998; Beiser & Houk, 1998) proposed revisions to the associative chaining theory, hypothesizing that an entire series of se-quence-specific cognitive states must be learned to mediate any sequence recall. Although this type of recur -rent net allows more than one sequence to be learned over the same alphabet of elements, there is no basis for performance of novel sequences, learning is often unrealistically slow with poor temporal generalization (Henson et al., 1996; Page, 2000; Wang, 1996), and internal recall of a sequence remains an iterative se-quential operation. In contrast, competitive queuing (CQ) models allow performance of novel sequences (including reuse of the same alphabet of elements), rapid learning, and internal recall of a sequence repre -sentation as a parallel operation. Since Lashley (1951), behavioral evidence has accumulated (cf. Rhodes et al., 2004) to support the idea that parallel representation of elements constituting a sequence underlies much of our learned serial behavior. From speech and typing errors, Lashley inferred that there must be an active “co-temporal” representation of the items constituting a forthcoming sequence. He also inferred that item-item associative links may be unnecessary in, and even a hindrance to, the learning of many sequences defined over a small finite “alphabet”. Left unanswered were questions of mechanism: What is the nature of the paral-lel representation? How is the relative priority of simultaneously active item representations “tagged”? What limitations are inherent in this representation? What mechanisms convert the parallel representation to serial action? All four questions have been addressed, without any reliance on item-item associations, in various CQ models (Grossberg, 1978a,b; Houghton, 1990; Bullock & Rhodes, 2003). These neural network models postulate that a standing parallel representation of all the items constituting a planned sequence exists in a motor working memory prior to initiating performance of the first item. As explained in Section C.2, this paral -lel representation works in tandem with an iterated choice process to generate a sequential performance.

To date, CQ-compatible neural models have been used to account for data in many domains of learned serial behavior, including: eye movements (Grossberg & Kuperstein, 1986); recall of novel lists

2 If presented with a sequence of sounds, the DIVA model can produce them in the order presented. However this “se -quencing” is external to the model; a computer program simply tells the model to produce the first sound, then the second sound, etc.

PHS 398 (Rev. 09/04) Page> 17

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.(Boardman & Bullock, 1991; Page & Norris, 1998) and highly practiced lists (Rhodes & Bullock, 2002); cur-sive handwriting (Bullock et al., 1993); working memory storage of sequential inputs (Bradski et al., 1994); word recognition and recall (Grossberg, 1986; Hartley & Houghton, 1996; Gupta & MacWhinney, 1997); lan-guage production (Dell et al., 1997; Ward, 1994); and music learning and performance (Mannes, 1994; Page, 1999). The stature of CQ as a neurobiological model has grown steadily due to an accumulation of directly pertinent neurophysiological observations (e.g., Averbeck et al., 2002, 2003; discussed in C.2). Section C.2 will summarize CQ theory and the evidence that led us to choose CQ circuitry as a core for a new model of sequential speech motor control. Communication disorders involving sequencing and/or initiation of speech sounds. A number of com-munication disorders, including aphasias, apraxia of speech (AOS), and stuttering, include deficits in the proper initiation and/or sequencing of speech sounds. Sequencing errors known as literal or phonemic para-phasias, in which “well-formed sounds or syllables are substituted or transposed in an otherwise recognizable target word” (Goodglass, 1993) exist in most aphasic patients, most commonly in conduction aphasics. Most of the common symptoms reported in AOS patients3 are selection and sequencing problems including articu-lation errors, phonemic mistakes, prosodic disturbances, difficulties initiating speech, and slowed speech (Mc-Neil and Doyle 2004; Dronkers 1996). Several brain areas have been implicated in AOS, including the left premotor cortex, Broca’s area, and the left anterior insula (Miller 2002). Models of speech production have largely been unable to inform the study of AOS because “theories of AOS encounter a dilemma in that they begin where the most powerful models of movement control end and end where most cognitive neurolinguis -tic models begin” (Ziegler 2002). The model proposed herein attempts to fill this gap between neurolinguistic models and movement control models.

Though different in many ways, stuttering, which affects approximately 1% of the adult population in the United States, shares with AOS the trait of improper initiation of speech motor programs without impair-ment of comprehension or damage to the peripheral speech neuromuscular system (Kent 2000; Dronkers 1996). Phenomenological, physiological, and psychological studies of developmental stuttering over the past several decades have produced a large body of data and spawned many theories regarding both its etiology (e.g., Geshwind & Galaburda, 1985; Starkweather, 1987; Travis, 1931; West, 1958) and its expression (e.g., Johnson & Knot, 1936; Mysak, 1960; Perkins et al., 1991; Postma & Kolk, 1993; Zimmerman, 1980). The ad-vent of structural and functional imaging technologies has provided a means to investigate the neural under-pinnings of developmental stuttering and greatly increased the available data while offering a new perspec -tive on the disorder. A proliferation of imaging studies prompted Peter Fox, whose group has conducted sev-eral imaging studies of stuttering (e.g. Fox et al., 1996, 2000), to describe the need for a model of the neural systems of speech, their breakdown in stuttering, and their normalization with treatment, in order to advance the study of developmental stuttering (Fox, 2003). The modeling work described here will provide a substrate for exploring the initiation and repetition behaviors in persons who stutter. Perhaps more importantly, it will provide a cohesive framework for the examination of available data and the assessment of theories of stutter-ing and other disorders (see also van der Merwe, 1997).

In addition to considering symptom-based diagnoses, it is important to consider the effects of lesions and pathological conditions involving particular brain regions on speech processes. Case studies in patients with lesions of the supplementary motor area (e.g. Jonas, 1981, 1987; Ziegler et al., 1997; Pai, 1999) or basal ganglia pathologies (e.g. Ho et al., 1998; Pickett et al., 1998) have shown that these areas provide spe -cific contributions to the sequencing and initiation of speech sounds. While pathological speech data are abundant, parsimonious explanations for differential syndromes remain elusive. Many authors have noted the importance of establishing well-specified models of normal and disordered speech to help provide differential diagnoses and treatment options for these conditions. In just this context, while describing the DIVA model of speech production, McNeil et al. (2004) write “While this model addresses phenomena that may be relevant in the differential diagnosis of motor speech disorders…in it’s current stage of development it has not been extended to make claims about the relationship between disrupted processing and speech errors in motor speech disorders” (p. 406). The work proposed here seeks to extend the DIVA model in this direction.C. PRELIMINARY STUDIESC.1. The DIVA model. Over the past decade our laboratory has developed and experimentally tested the DIVA model, a neural network model of the brain processes underlying speech acquisition and production (e.g., Guenther, 1994, 1995; Guenther et al., 1998; Guenther et al., in press). The model is able to produce speech sounds (including both articulator movements and a corresponding acoustic signal) by learning map-pings between articulator movements and their acoustic consequences, as well as auditory and somatosen-sory targets for individual speech sounds. It accounts for a number of speech production phenomena includ-3 Darley et al. (1975) describe AOS as a unique syndrome that affects motor speech production without diminished mus-cle strength. AOS has been associated with phoneme substitution errors similar to literal paraphasias (e.g. Wertz et al, 1984).

PHS 398 (Rev. 09/04) Page> 18

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.ing aspects of speech acquisition, coarticulation, contextual variability, motor equivalence, velocity/distance relationships, and speaking rate effects (see Guenther, 1995 and Guenther et al., 1998 in Appendix docu -ments). The latest version of the DIVA model is detailed in Guenther et al. (in press), in the Appendix docu -ments. Here we briefly describe the model with attention to aspects relevant for this proposal.

A schematic of the DIVA model is shown in Fig. 1. Each box in the diagram corresponds to a set of neurons in the model, and arrows correspond to synaptic projec-tions that form mappings from one type of neural repre-sentation to another. Several mappings in the network are tuned during a babbling phase in which semi-random articulator movements lead to auditory and somatosen-sory feedback; the model’s synaptic projections are ad-justed to encode sensory-motor relationships based on this combination of articulatory, auditory, and somatosen-sory information. The model posits additional forms of learning wherein (i) auditory targets for speech sounds are learned through exposure to the native language (Au-ditory Goal Region in Fig. 1), (ii) feedforward commands between premotor and motor cortical areas are learned during “practice” in which the model attempts to produce a learned sound (Feedforward Command), and (iii) so-matosensory targets for speech sounds are learned through practice (Somatosensory Goal Region).

In the model, production of a phoneme or syllable starts with activation of a Speech Sound Map cell corre-sponding to the sound to be produced. Speech sound map cells are hypothesized to lie in left lateral premotor cortex, specifically posterior Broca’s area (left Brodmann’s Area 44; ab-breviated as BA 44 herein). After the cell has been activated, signals project from the cell to the auditory and somatosensory cortical areas through tuned synapses that encode sensory ex-pectations for the sound, where they are compared to incoming sensory information. Any discrepancy between expected and actual sensory information constitutes a production error (Audi-tory Error and/or Somatosensory Error) which leads to correc-tive movements via projections from the sensory areas to the motor cortex (Auditory Feedback-based Command and So-matosensory Feedback-based Command). Additional synaptic projections from speech sound map cells to the motor cortex form a feedforward motor command; this command is tuned by monitoring the effects of the feedback control system and incor-porating corrective commands into the feedforward command.

Feedforward and feedback control signals are combined in the model’s motor cortex. Early in development the feedfor-ward command is inaccurate, and the model depends on feed-back control. Over time, however, the feedforward command becomes well tuned through monitoring of the movements con-trolled by the feedback subsystem. Once the feedforward sub-system is accurately tuned, the system can rely almost entirely on feedforward commands because no sen -sory errors are generated unless external perturbations are applied.

Of particular note for the current application are the BA 44 speech sound map cells that form the “in-put” to the DIVA model. In Section D we describe a new model of the sequencing and initiation of sound se -quences. Activation of the speech sound map cells in the DIVA model basically comprises the “output” of this new model, which will be integrated with the DIVA model to form a more complete description of the neural bases underlying speech. Also of note for the current application is the fact that the latest version of the DIVA model (Guenther et al., in press, in Appendix documents) incorporates realistic transmission delays between brain regions, including sensory feedback delays. This development has made it possible to more precisely simulate the timing of articulator movements during normal and perturbed speech. The results of these simu-lations closely approximate behavioral results (see Guenther et al., in press in Appendix). The modeling project proposed in Section D.1 extends this work to account for serial reaction time studies of sequence pro -

PHS 398 (Rev. 09/04) Page> 19

Fig. 2. Top: fMRI activation (white) measured during CV syllable production. [10 subjects; random effects analysis; p<0.001 uncorrected.] Bottom: Simulated fMRI activation when the DIVA model produces CV syllables. [For additional views, in color, see Guenther et al. (in press) in Appendix.]

fMRI Activations

Model Simulations

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.duction. Finally, a unique feature of the DIVA model is that each of the model’s components is associated with a particular neuroanatomical location based on the results of fMRI and PET studies of speech production and articulation (see Guenther et al., in press in Appendix documents for details). Since the model’s compo -nents correspond to groups of neurons, it is possible to generate simulated fMRI activations corresponding to model cell activities during a simulation (described further in Section C.2). Fig. 2 compares results from an fMRI study performed by our lab of single consonant-vowel (CV) syllable production to simulated fMRI data from the DIVA model in the same speech task. Comparison of the top and bottom panels of Fig. 2 indicates that the model accounts for most of the fMRI activations. The proposed research will extend this work to ac-count for additional fMRI activations that occur in more complex multi-syllabic speaking tasks.C.2 Generating simulated fMRI activations from model simulations. The relationship between the signal measured in blood oxygen level dependent (BOLD) fMRI and electrical activity of neurons has been studied by numerous investigators in recent years. It is well-known that the BOLD signal is relatively sluggish com-pared to electrical neural activity. That is, for a very brief burst of neural activity, the BOLD signal will begin to rise and continue rising well after the neural activity stops, peaking about 4-6 seconds after the neural activation burst before falling down somewhat below the starting level around 10-12 seconds after the neural burst and slowly rising back to the starting level. This hemodynamic response function (HRF) is schematized in the figure at right. We use such a response function, which is part of the SPM software package that we use for fMRI data analysis, to trans-form neural activities in our model cells into simulated fMRI activity. However there are different possible definitions of “neural activity”, and the exact nature of the neural activity that gives rise to the BOLD signal is still currently under debate (e.g., Caesar et al., 2003; Heeger et al., 2000; Logothetis et al., 2001; Logothetis and Pfeuffer, 2004; Rees et al., 2000; Tagamets and Horwitz, 2001).

In our modeling work, each model cell is hypothesized to correspond to a small population of neurons that fire together. The output of the cell corresponds to neural firing rate (i.e., the number of action potentials per second of the population of neurons). This output is sent to other cells in the network, where it is multi -plied by synaptic weights to form synaptic inputs to these cells. The activity level of a cell is calculated as the sum of all the synaptic inputs to the cell (both excitatory and inhibitory), and if the net activity is above zero, the cell’s output is proportional to the activity level. If the net activity is below zero, the cell’s output is zero. It has been shown that the magnitude of the BOLD signal typically scales proportionally with the average firing rate of the neurons in the region where the BOLD signal is mea-sured (e.g., Heeger et al., 2000; Rees et al., 2000). It has been noted elsewhere, however, that the BOLD signal actually correlates more closely with local field poten-tials, which are thought to arise pri-marily from averaged postsynaptic potentials (corresponding to the in-puts of neurons), than it does to the average firing rate of an area (Logothetis et al., 2001). In particu-lar, whereas the average firing rate may habituate down to zero with prolonged stimulation (greater than 2 sec), the local field potential and BOLD signal do not habituate com-pletely, maintaining non-zero steady state values with prolonged stimulation. In accord with this find-ing, the fMRI activations that we generate from our models are de-termined by convolving the total in-puts to our modeled neurons (i.e., the activity level as defined above), rather than the outputs4 (firing rates),

4 It is noteworthy, however, that total synaptic input correlates highly with firing rate, both physiologically and in our mod -els. Thus the two measures for estimating neural activity (firing rate vs. total input) are likely to produce similar results.

PHS 398 (Rev. 09/04) Page> 20

Fig. 4. Left: Locations of the cell types in the DIVA model. Right: Generating BOLD signals for a jaw-perturbed speech – unperturbed speech contrast. Left panels show cell activities (gray) and resulting BOLD signals (black) for two cell types in the model. The perturbation results in increased somatosensory error cell activation, but no increased auditory error cell activation. The corresponding BOLD signals are spatially smoothed and plotted on the standard single subject brain from the SPM software package (top right panel). The results of an fMRI experiment comparing perturbed to unperturbed speech are located in the bottom right panel.

Locations of DIVA Model Cells

fMRI Experimental Result

Model Simulation

Perturbed – Unperturbed Speech

Model Cell Activitiesand BOLD Response

Somatosensory Error Cells (S)

Auditory Error Cells ()

Time (s)

Bold

Signal

Neural

Actvity

Fig. 3. Typical hemodynamic response function (HRF).

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.with an idealized hemodynamic response function generated using default settings of the function ‘spm_hrf’ from the SPM toolbox (see Guenther et al., in press in Appendix for details).

In our models, an active inhibitory neuron has two effects on the BOLD signal: (i) the total input to the inhibitory neuron will have a positive effect on the local BOLD signal, and (ii) the output of the inhibitory neu -ron will act as an inhibitory input to excitatory neurons, thereby decreasing their summed input and, in turn, reducing the corresponding BOLD signal. Relatedly, it has been shown that inhibition to a neuron can cause a decrease in the firing rate of that neuron while at the same time causing an increase in cerebral blood flow, which is closely related to the BOLD signal (Caesar et al., 2003). Caesar et al. (2003) conclude that this cere-bral blood flow increase probably occurs as the result of excitation of inhibitory neurons, consistent with our model. They also note that the cerebral blood flow increase caused by combined excitatory and inhibitory in-puts is somewhat less than the sum of the increases to each input type alone; this is also consistent with our model since the increase in BOLD signal caused by the active inhibitory neurons is somewhat counteracted by the inhibitory effect of these neurons on the total input to excitatory neurons.

Figure 4 illustrates the process of generating fMRI activations from a model simulation and comparing the resulting activation to the results of an fMRI experiment designed to test a model prediction. The left panel of the figure illustrates the locations of the DIVA model components on the “standard” single subject brain from the SPM2 software package. The DIVA model predicts that unexpected perturbation of the jaw during speech will cause a mismatch between somatosensory targets and actual somatosensory inputs, causing activation of somatosensory error cells in higher-order somatosensory cortical areas in the supra-marginal gyrus of the inferior parietal cortex. The location of these cells is denoted by S in the left panel of the figure. Simulations of the DIVA model producing speech sounds with and without jaw perturbation were performed. The top middle panel indicates the neural activity (gray) of the somatosensory error signals in the perturbed condition minus activity in the unperturbed condition, along with the resulting BOLD signal (black). Since the somatosensory error cells are more active in the perturbed condition, a relatively large positive re -sponse is seen in the BOLD signal. Auditory error cells, on the other hand, show little differential activation in the two conditions since very little auditory error is created by the jaw perturbation (bottom middle panel), and thus the BOLD signal for the auditory error cells in the perturbed – unperturbed contrast is near zero. The de-rived BOLD signals are Gaussian smoothed spatially and plotted on the standard SPM brain in the top right panel. The bottom right panel shows the results of an fMRI study we carried out to compare perturbed and unperturbed speech (13 subjects, random effects analysis, false discovery rate = 0.05). In this case, the model correctly predicts the existence and location of somatosensory error cell activation, but additional acti -vation not explained by the model is found in the left frontal operculum region. C.3 Competitive queuing (CQ) models of motor sequencing. As described above, competitive queuing (CQ) models have been applied to many domains of learned serial behavior (see Background and Signifi-cance) and account for a number of behavioral and neurophysiological observations. An Investigator on the proposed project, Dr. Daniel Bullock, and his colleagues have published a series of articles describing CQ models and providing computer simulations verifying the ability of this class of models to account for se-quencing, timing, and kinematic phenomena in non-speech motor tasks (Boardman & Bullock, 1991; Bullock et al. 1993; Bullock et al., 1999; Rhodes & Bullock, 2002; Bullock & Rhodes, 2003; Brown et al., 2004; Bul-lock, 2004a,b; Rhodes et al., 2004). Here we describe the basic CQ mechanism as formulated in such stud -ies; further details are available in Bullock (2004a) and Rhodes et al. (2004) in the Appendix materials.

A schematic of a CQ network is shown in Fig. 5. A fundamental principle of CQ networks is the parallel representation of items in a motor sequence (e.g., a sequence of phonemes making up an utterance) in work-ing memory prior to initiation of movement. These items are represented by nodes in the model’s planning layer. The relative activations of these nodes determine the ordering of the segments within the sequence. Items in the planning layer compete via mutually inhibitory connections at the competitive choice layer. The “winning” item, typically the item with the highest activity in the planning layer, is selected by the “winner-take-all” (WTA) dynamics of the neural network making up the choice layer, such that only one node (sequence item) is active at the choice layer, and the motor program corresponding to that item is executed by down -stream mechanisms outside the CQ model. At this point the chosen item’s representation is extinguished at the planning layer, a new competition is run, and the item with the next highest activation is chosen. This cy-cle continues until all sequence items are performed.

Biologically plausible recurrent or feedforward competitive neural nets (e.g., Grossberg, 1978b; Durstewitz & Seamans, 2002) provide the types of interactions required in a CQ model. Because of its need to store an activity pattern, the planning layer is modeled as a normalized recurrent net in which the activity of each item is lessened as more items are added, and when an item is extinguished its share of activity auto-matically redistributes to the remaining items. Such networks afford parametric modulation of the competition, e.g., the rate at which the choice layer selects the most highly active node from the planning layer. Psychophysical studies of serial planning and performance support these properties of the CQ model. CQ models explain error patterns in verbal immediate serial recall (ISR) studies including primacy and recency ef-

PHS 398 (Rev. 09/04) Page> 21

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.fects (Henson, 1996) and transposition or “fill-in” errors (e.g, Page & Norris, 1998) that occur when noise causes two items with similar activation levels to be selected in the wrong order. They also account for word length effects found in ISR (Cowan, 1994) and sequence length effects on latency and interresponse inter-vals in typing tasks (e.g., Sternberg et al, 1978; Rosenbaum et al., 1984; Boardman & Bullock, 1991; Rhodes et al. 2004). CQ models are unique for their ability to explain both timing and error data. Farrell & Lewandowsky (2004) recently compared four major classes of sequencing models, and showed that three of the four classes made incorrect predictions regarding the latencies of transposition errors. Only the CQ model predicted both the correct distribution of transposition errors and the latencies of those errors in production.

Complementary to such CQ-consistent behavioral patterns are recent neural recordings that provide di-rect evidence of CQ-like processing in the frontal cor-tex of monkeys. These studies have strikingly sup-ported four key predictions of CQ models, as indi-cated by the neurophysiological data (top) and model simulations (bottom) in Figure 6. First, the primate electrophysiological studies of Averbeck et al. (2002; 2003) demonstrated that prior to initiating a serial act (using a cursor to draw a geometric form with a pre-scribed stroke sequence), there exists in prefrontal area 46 an active parallel (simultaneous) representa-tion of each of the strokes planned as components of the forthcoming sequence. Small pools of active neu-rons code each stroke. Second, the relative strength of activation of a stroke representation (neural pool) predicts its order of production, with higher activation level indicating earlier production. Third, as the se-quence is being produced, the initially simultaneous representations are serially deactivated in the order that the corresponding strokes are produced. Fourth, several studies (Averbeck et al., 2002; Basso & Wurtz, 1998; Cisek & Kalaska, 2002; Pellizer & Hedges, 2003) of neural planning sites also show partial activity normalization: the amount of activation that is spread among the plans grows more slowly than the number of plans in the sequence, and eventually stops grow-ing. This property, which is critical to the CQ planning layer, explains why the capacity of working memory to encode novel serial orders is limited. A simulation of the planning layer dynamics of a CQ model (Boardman & Bullock, 1991) is shown at the bottom of Fig. 6 for comparison with recording data from Averbeck et al. (2002). The simulation traces correspond remarkably well with empirical observations made a decade later. Taken together, the physiological evidence of CQ-like processing in the brain and the behavioral results ex-plained by the model provide a strong argument for choosing the CQ model as the basis of a sequencing mechanism for speech production.

In Section D.1 we propose the development of a model that effectively combines the CQ model with the DIVA model of speech production. CQ output will interface with the DIVA Speech Sound Map to enable serial performance of speech sound sequences. C.4 fMRI study of brain activations during syllable sequence production. As part of an existing grant concerning the DIVA model (R01 DC02852), we have conducted an fMRI experiment to explore brain activity underlying the sequencing of speech sounds. Here we present results from this experiment. The experiment and associated modeling work serve as the starting point for much of the work proposed herein5.

5 The research projects described herein do not duplicate any of the research in R01 DC02852, which focuses primarily on sensory-motor interactions in the production of speech sounds as embodied by the DIVA model. The current proposal, in contrast, focuses on the frontal cortical mechanisms involved in higher-level aspects of the planning of speech se -

PHS 398 (Rev. 09/04) Page> 22

Fig. 5. Schematic of a competitive queuing (CQ) network. All CQ models have at least two layers, a parallel planning layer and a competitive choice layer. The planning layer contains nodes representing possible sequence elements (in this example, planning layer nodes represent drawing stroke directions). To plan a sequence, a desired subset of these nodes is activated in parallel (e.g., the subset of nodes representing the strokes required to draw a square) and the relative amount of activation (signaled by the relative heights of bars placed above the nodes) specifies the relative order of performance. At the onset of a performance, a gating signal (not shown) causes competition within the planning layer for output via the choice layer to begin. Typically the most active planning layer node wins the competition and thereby generates a corresponding output from the choice layer, which initiates the action associated with that item. A second effect of this output, mediated by an inhibitory pathway from each output node to its corresponding planning layer node, is deletion of activity at whatever planning layer node has just won. Iteration of this choose-perform-delete cycle assures that an element’s initial relative activation level in the planning layer implicitly codes its relative order in the forthcoming sequence. In this example, the CQ network dynamics step through the segments required to draw a square.

PlanningLayer

ChoiceLayer

1. 2. 3. 4.

Order of sequence progression:

PlanningLayer

ChoiceLayer

1. 2. 3. 4.

Order of sequence progression:

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.This experiment was designed to elucidate the

roles of several brain regions in speech production, including the medial premotor cortex (the supple-mentary motor area proper (SMA), pre-supplemen-tary motor area (pre-SMA), and cingulate motor ar-eas), the peri- and intra-sylvian cortex (including the anterior insula, frontal operculum and inferior frontal gyrus), cerebellum, and basal ganglia. Clinical stud-ies have suggested that these areas may be impor-tant for sequencing in speech motor control (e.g. Dronkers, 1996; Jonas, 1981, 1987; Riva, 1998; Pickett et al., 1998). Only a small portion of the functional imaging work dedicated to speech and language has dealt with overt speech production, but the largest body of relevant studies comes from Ackermann, Riecker, and colleagues (reviewed in Dogil et al., 2002). Regarding sequencing, Riecker et al. (2000b) examined brain activations evoked by overt production of speech stimuli of varying com-plexity: CV’s, CCCV’s, CVCVCV sequences, and lexical items (words). These speech test materials failed to elicit activation of SMA, cerebellum, or an-terior insula. This finding contrasts with other stud-ies (e.g. Fiez, 2001; Indefrey & Levelt, 2004) as well as our own findings suggesting involvement of these areas even in simple mono-syllable produc-tion (Guenther et al., in press). This issue provided further motivation for the following experiment.

In this study we examined the differences in brain activations during preparation for and overt production of memory-guided non-lexical se-quences of three syllables. Two parameters deter-mined the stimulus content. The first, sub-syllabic complexity, varied the number of phoneme seg-ments that constituted each individual syllable (i.e. CV vs. CCCV). The second parameter, supra-syl-labic complexity, varied the number of unique sylla-bles comprising the three-syllable sequence (repeti-tion of the same syllable vs. three different sylla-bles). Each factor was varied between one of two values (simple or complex), yielding four stimulus types. Each of these types was presented either for vocal-ization (GO condition) or for preparation only (NOGO condition).

13 neurologically normal right-handed adult American English speakers participated. In a 3T Siemens Trio scanner, subjects were visually presented the syllable sequences. After 2.5s the syllables were removed from the projection screen and replaced by a white fixation cross. In the GO case, following a short random dura-tion (0.5 - 2.0s), the white cross turned green, signaling the subject to begin vocalization of the most recent sequence. During this production period, the scanner remained silent. In the NOGO case, the fixation cross remained white throughout. Following a 2.5s production period (or equivalent time in the NOGO case), the scanner was triggered (see fMRI experiment protocol in Section D for details) to acquire three full brain func-tional images (TR=2.5s, 30 slices, 5mm thickness). Following the third volume, the fixation cross disap-peared, and the next stimulus was presented. The subjects’ vocal responses were recorded using an MRI-compatible microphone and checked offline for accuracy. Functional image volumes were realigned, coregis-tered to a high-resolution structural series acquired for each subject, normalized into stereotactic space, and smoothed using a Gaussian kernel with full-width at half-maximum of 12mm. Analysis was performed using a random effects model with SPM2 (http://www.fil.ion.ucl.ac.uk/spm/).

Fig. 7 shows statistically significant cortical activations during overt production (Go condition) of complex sequences of complex syllables compared to baseline (p < 0.05). Overt production activated a wide bilateral cortical and subcortical network: precentral gyrus and somatosensory cortices, the SMA and pre-SMA, audi -

quences.

PHS 398 (Rev. 09/04) Page> 23

Fig. 6. A comparison of simulated CQ dynamics with cellular data from area 46 of prefrontal cortex. Top: Each black or gray data trace (solid, dashed, dotted) represents the relative activation level in monkey area 46 of a small neural ensemble that represents one element of a 3-, 4-, or 5-element sequence used to draw a geometric form. [Adapted from Averbeck et al., 2002.] Bottom: A simulation of cellular dynamics in the plan layer of a normalized CQ model (Boardman & Bullock, 1991) during production of a 5-item sequence. Each simulation trace depicts the activation history of one of the sequence element representations during the interval from just before initiation of sequence performance to just after production of the last element.

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.tory cortical regions, the intra-sylvian cortex and nearby frontal regions, as well subcortical regions including thalamus, basal ganglia, and superior cerebellum (not pictured).

Active regions relevant to the sequence model proposed in Section D include the left hemisphere SMA, pre-SMA, inferior frontal sulcus (IFS), and posterior Broca’s areas (BA 44). These areas are labeled in Fig. 7. The remaining activations in the figure, along the sensorimotor cortex surrounding the central sulcus as well as superior temporal auditory cortical areas, are accounted for by the DIVA model (see Section C.1 and Guenther et al., in press in Appendix materials).

The preparation (NOGO) condition (not shown) activated much of the same cortical network to a lesser degree. The auditory cortical areas as well as motor and somatosensory face areas were much less active in the NOGO conditions, as expected. The superior cerebellum, basal ganglia/anterior thalamus, left anterior in-sula/frontal operculum, and SMA also showed significantly increased activity for overt production compared to preparation. These findings generally agree with studies comparing overt to covert speech production (Mur-phy et al., 1997; Wise et al., 1999; Sakurai et al., 2001; Riecker et al., 2000a), although there is considerable variability in experimental designs and outcomes.

Production of complex sequences of three dis-tinct syllables (e.g. ba-da-ti) was expected to engage cortical sequencing mechanisms to a greater degree than production of syllable repetitions (e.g. ba-ba-ba). Several cortical regions responded more strongly for complex sequences in our experiment (Fig. 8, top), in-cluding bilateral pre-SMA, frontal operculum/anterior in-sula, left IFS, and superior parietal cortex. Furthermore, subcortical activation in right inferior cerebellum and bi-lateral basal ganglia was observed for complex – sim-ple sequences of simple syllables (Fig. 8, bottom).

Increased syllable complexity (e.g. stra-stra-stra vs. ba-ba-ba) should engage brain mechanisms neces-sary for programming articulator movements at a sub-syllabic level. Additional activations for overt production of complex vs. simple syllable types were observed in the pre-SMA bilaterally, within the left pre-central gyrus, and in the superior paravermal cerebellum.

The results of this experiment give some important initial data points to aid in understanding how the brain represents and executes sequences of syllables. The region of activity around the left inferior frontal sulcus (IFS) represents the “highest-level” brain region that showed reliable differential activations in this experiment and is likely to serve as a working memory representation for planned utterances (see D.1 for further discussion). The pre-SMA showed great sensitivity to both supra- and sub-syllabic complexity and has suitable connectivity to serve as an interface between the prefrontal cortex and the motor executive system (Jurgens, 1984; Luppino et al, 1993). The SMA proper, in contrast, showed larger activations for overt production and is likely to provide for initiation of motor outputs. A region at the junc-tion of the frontal operculum and anterior insula also showed speech complexity differences; this region may play a role in online monitoring during speech production (see also Ackermann & Riecker, 2004). The cerebellum showed distinct activation patterns along its superior and inferior aspects. The superior portions were more active for overt pro-duction than for preparation and more active for complex syllables than for simple ones. This region may be crucial for execution of well-learned syllables and/or for coarticulation effects. The right inferior cerebellum was significantly active for complex sequences but not sim-ple ones. In line with cerebellar projections to prefrontal cortex (Middle-ton and Strick, 2000; Dum and Strick, 2003), this area may play a role in support of the working memory representation in IFS (discussed in D.2).D. RESEARCH DESIGN AND METHODS

PHS 398 (Rev. 09/04) Page> 24

Fig. 8. Top: Cortical activations for complex – simple sequences, GO condition. Bottom: Right inferior cerebellum (left) and basal ganglia (right) activity for complex – simple sequences of simple syllables, GO condition.

Fig. 7. Cortical activation (white patches) during production of complex sequences of complex syllables.

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.The proposed research consists of two closely inter-related subprojects that combine fMRI with computational neural modeling to investigate sequencing and initiation in speech production. For the sake of clarity, the ba -sic fMRI methods and modeling framework are described first, followed by the subproject descriptions. fMRI experimental protocol. The fMRI experiments proposed herein will each involve 17 subjects6. All fMRI sessions will be carried out on a 3 Tesla Siemens scanner at the Massachusetts General Hospital NMR Cen -ter. Prior to functional runs, a high-resolution structural image of the subject's brain is collected. This struc-tural image serves as the basis for localizing task-related blood oxygenation level dependent (BOLD) activity. The fMRI experiment parameters will be based on the sequences available at the time of scanning7. The fac-ulty and research staffs at MGH, together with engineers from Siemens, develop and test pulse sequences continuously that optimize T1 and BOLD contrast while providing maximum spatial and temporal resolution for the installed Siemens scanners (Allegra, Sonata and Trio). Because scanner noise related to echo-planar imaging (EPI) may alter normal auditory cortical responses and/or cause subjects to adopt abnormal strate -gies during speech, and because articulator movements can induce artifacts in MR images, it is important to avoid image acquisition during stimulus presentation and articulation (e.g., Munhall, 2001). To this end, we will use an event-triggered paradigm in which the scanner is triggered to collect 2 full-brain image volumes following production of each stimulus. Because BOLD changes induced by the task persist for many seconds, this technique allows us to measure activation changes while avoiding scanner noise confounds and motion artifacts. The inter-stimulus interval will be determined for each experiment to be long enough to allow collec-tion of two volumes starting approximately 3 seconds8 after the speech production period is complete (total ISI of approximately 12-15 seconds). Data analysis will correct for summation of blood oxygen level across trials using a general linear model (including correction for the effects of the scanner noise during the previous trial).

Each session will consist of approximately 4-8 functional runs of approximately 6-12 minutes each. During a run, stimuli will typically be presented in a pseudo-random order. For each experiment, the task(s) and stim-ulus type(s) are carefully chosen to address the aspect of speech sequencing and/or initiation being studied; these tasks and stimuli are described in the subproject descriptions in Sections D.1-D.2. We have developed software to allow us to send event triggers to the scanner and to analyze the resulting data, and we have successfully used this technique to measure brain activation during speech production as part of another grant (R01 DC02852; see Sections C.2 and C.4 and Guenther et al., in press in Appendix). fMRI power analysis. Following the methodology described in Zarahn and Slifstein (2001), we utilized the data from our syllable sequence production experiment described in Section C.4 to obtain reference parame-ters from which to derive power estimations for the fMRI studies proposed in this application. Activations dur-ing overt speech productions compared to baseline provided measures of within- and between-subject vari-ability as well as a reference effect size of the SPM-derived general linear model parameters. The expected within-subject variability for our proposed studies was then computed from the reference value by using the number of conditions and stimulus presentations in the proposed studies compared to the same values for the reference study. Power estimates for the two proposed fMRI experiments in Section D.1, which involve 5 stimulus conditions, show that 17 subjects would be needed to detect (with probability>.8) in a random-effect analysis (at a p<.01 type I error level) an effect size that is 35% as large as the effect size of the reference contrast (overt speech - baseline). Most of the contrasts evaluated in the reference study resulted in effect sizes that fell well above the 35% threshold. This power calculation is based on the assumption that 30% of the trials in any condition contain subject errors or have other problems that cause them to be removed from the analysis. (For comparison, the error rate for the most difficult condition in the preliminary sequencing ex-periment described in Section C.4 -- the complex syllable/complex sequence condition -- was 14.2%)

The fMRI experiments in Section D.2 involve only 3 conditions. However the production tasks may be more prone to errors as they involve more difficult phoneme/syllable sequences. If we assume 50% of the tri -als must be removed from the analysis due to production errors, we arrive at power estimates nearly identical to those described above for the experiments in D.1.

These power estimations are conservative, particularly considering that they assume large numbers of

6 Subject pool sizes and selection criteria. All subjects will be between the ages of 18 and 55 with normal hearing and no known neurological disorders. We anticipate the need for 17 subjects per fMRI study to produce optimal results (see fMRI power analysis above). We further anticipate that 1-2 subjects will be needed to fine-tune each fMRI experiment, and data from 1-2 fMRI scanning sessions will be unusable due to subject motion or technical difficulties, yielding a bud-geting estimate of 20 fMRI sessions per experiment. 7 Current scanning parameters are as follows. T1-weighted high resolution anatomical scans: voxel size 1.33mm sagittal x 1mm coronal x 1mm axial. T2-weighted Echo Planar Imaging (EPI) functional scans: 16 slices per second, matrix size 64x64, field of view (FOV) 200x200mm, in-plane resolution 3.125 x 3.125mm. Our experience has shown that a scan vol -ume of 200mm x 200mm x 150mm (e.g., 37 slices of 4.05mm thickness) is sufficient to cover the entire brain. Slices are acquired in an interleaved manner and the volume acquisition time is approximately 2.25 seconds. 8 The 3s offset allows us to scan at the peak of the hemodynamic response to speech production (cf. Birn et al., 1999).

PHS 398 (Rev. 09/04) Page> 25

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.unusable trials and are based on voxel-level statistics. The use in the proposed studies of cluster-level and/or ROI analyses, methods that use information from neighboring voxels, should increase statistical power of the proposed studies beyond the values predicted here (Friston et al. 1996, Nieto-Castanon et al. 2003).Effective connectivity analysis. Whereas commonly used voxel-based analyses of fMRI data rely on the notion of functional specialization, the brain, as well as the neural model proposed herein, is a connected structure in a graph-theoretic sense, and connections between specialized regions bring about functional in-tegration (see e.g. Horwitz, 2000; Friston, 2002). Functional integration, or the task-specific interactions be-tween brain regions, can be assessed through various network analyses that measure effective connectivity. In the current proposal, we will use structural equation modeling (SEM) to examine these interactions. SEM is the most widely used method for making effective connectivity inferences from fMRI (Penny et al., 2004), and benefits from a large literature regarding its application to neuroimaging (e.g. McIntosh et al., 1994a, 1994b; Büchel and Friston, 1997; Bullmore et al., 2000; Mechelli et al., 2002) as well as its general theory (see e.g. Bollen, 1989). This method requires the specification of a causal, directed anatomical (structural) model, and estimates path coefficients (strength of influence) for each connection in the model that minimize the differ -ence between the measured inter-regional covariance matrix and that implied by the model.We will utilize a single characteristic time-course of the BOLD response from each region-of-interest (ROI) corresponding to a component of our model in the SEM calculations. There exists a natural correspondence between structural equation models and neural network models, as both are specified by connectivity graphs and connection strengths. We can specify the connectivity structure in both models identically (based on known anatomy from primate studies, diffusion tensor imaging studies, etc.), and directly compare the resulting inferred path coefficients with the connectivity in the model. In both cases, inter-regional interaction may be dynamic in the sense that the activity in one region may be driven by different regions (or in different proportions) in varying tasks and contexts; likewise, learning may result in the strengthening or weakening of effective connections (e.g. Büchel et al., 1999). To assess the overall goodness of fit of the SEM we will use the χ2 statistic corre-sponding to a likelihood ratio test. If we are unable to obtain proper fits using our theoretical structural models (i.e. P(χ2) > 0.05), we will consider this evidence that the connectivity structure is insufficient and we will de -velop and test alternative models. To make inferences about changes in effective connectivity due to task manipulations or learning (see Sections D.1 and D.2 for details), we will utilize a “stacked model” approach; this consists of comparing a ‘null model’ in which path coefficients are constrained to be the same across conditions with an ‘alternative model’ in which the coefficients are unconstrained. A χ2 difference test will be used to determine if the alternative model provides a significant improvement in the overall goodness-of-fit. If so, the null model can be rejected, indicating that effective connectivity differed across the conditions of inter-est.Computational modeling framework. The model will be implemented using the Matlab programming envi-ronment on Windows and Linux workstations equipped with large amounts of RAM (4 Gigabytes) to allow ma -nipulation of large matrices of synaptic weights, as well as other memory-intensive computations, in the neu-ral network simulations. Matlab allows graphical user interface generation, sophisticated matrix-based compu-tations (ideal for simulating neural networks as proposed herein), generation of graphical output (in the form of moving speech articulators, simulated brain activation patterns, and plots of variables of interest), and gen-eration of acoustic output (in the form of speech sounds produced by the model). Portions of the model that require intensive computations will be implemented in C and imported into Matlab as MEX executable files in order to speed up the simulations. Our department has all the relevant Matlab licenses, and our laboratory has used this environment for development of the DIVA model of speech production as part of another project. D.1 Subproject 1: Combining the CQ and DIVA models to investigate the neural basis of speech sound sequence production. This subproject will consist of one modeling project and two neuroimaging experi-ments. The goal of the modeling project is to create a competitive queuing (CQ) based model whose compo -nents specifically relate to cells in brain areas underlying sequence generation in speech. This model, which we will refer to as the sequence model, will then be integrated with the DIVA model of speech production. The combined model will be referred to as the CQ+DIVA model in this application. Simulations of the CQ+DIVA model producing syllable strings in particular speaking tasks will be run and compared to the re -sults of the proposed fMRI studies which involve those same speaking tasks. These experiments are de-signed to guide model development, test particular aspects of the model, and test between the model and al -ternative theories. This work offers three advancements over previous modeling work in this area: 1) it builds upon the CQ work of Bullock and colleagues (Boardman & Bullock, 1991; Rhodes & Bullock, 2002; Rhodes et al., 2004) which has thus far focused on sequences of externally cued manual movements, 2) the CQ net -work will work in concert with a well-developed neural model of speech production (the DIVA model) to allow quantitative measurement of interactions between the planning and motor execution stages of speech, and 3) by developing a model that includes both stages, a framework will exist for examining speech disorders that involve sequencing, initiation, and/or motor execution of speech sounds, such as stuttering and apraxia of

PHS 398 (Rev. 09/04) Page> 26

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.speech.

In the following paragraphs we describe the theoretical framework that will be implemented (in equations and computer simulations) in the proposed modeling project and tested in the accompanying fMRI experi-ments. The framework synthesizes a number of theoretical and experi-mental contributions into a cohesive, unified account of a broad range of neurological and behavioral data. We first describe a basic computational unit, the basal ganglia (BG) loop, that will be used in the complete se-quence model, before describing the rest of the model.The cortico-basal ganglia-thalamo-cortical loop as a functional unit. The proposed sequence model is built upon the basic functional unit illus-trated in Fig. 9. This unit consists of a cortico-BG-thalamo-cortical loop that begins and ends in the same portion of the cerebral cortex. Such neural loops have been widely reported in the monkey neuroanatomical literature (e.g., Alexander et al., 1986; Alexander & Crutcher, 1990; Cum-mings, 1993; Middleton & Strick, 2000). In our model we will include two “cells”9 to represent a cortical column: a superficial layer cell and a deep layer cell. This simplified breakdown of the layers in a cortical column is analogous to the breakdown utilized in the detailed model of BG function of Brown et al. (2004). The two-layer simplification allows the model to in-corporate two major empirical generalizations regarding cortical-BG and cortico-cortical projections. First, the dominant cortico-striatal projection is from layers 5a or above (“superficial”) whereas the cortico-thalamic and cortico-sub-thalamic projections are from deeper layers (5b, 6). Second, the cortico-cortical projections are either from deep layers to superficial layers or from superficial layers to deep layers10; cortico-cortical projections between layers of equivalent depth appear to be excluded (e.g., Barbas & Rempel-Clower, 1997). Each superficial layer cortical cell projects to a corresponding cell in the striatum (the major input portion of the BG, which includes the caudate and putamen). The striatum then projects to cells in the internal segment of the globus pallidus (GPi) or the substantia nigra pars reticulata (SNr), the output structures of the BG, via two pathways: a direct pathway in which each cell in the striatum projects through an inhibitory connection to a corresponding output cell (Albin et al., 1989), and an indirect pathway that projects through inhibitory pathways to the external segment of the globus pallidus (GPe) which in turn provides diffuse inhibition of the GPi/SNr11 (Parent & Hazrati, 1995; Mink, 1996). The BG output nuclei, typically tonically active, project through inhibitory connections to the thal-amus (Penney and Young, 1981; Deniau and Chevalier, 1985), which in turn sends excitatory projections to the deep layer of the cortical column from which the loop originated.

We will typically assume that each cortical column represents a different planned motor action (e.g., a particular phoneme). Thus the cortex schematized in Fig. 9 represents four motor actions. The superficial layer in this example contains a parallel representation of the four actions, as in the planning layer of the CQ model described in Section C.3 (see Fig. 5). We follow prior interpretations (Mink & Thach, 1993; Kropotov & Etlinger, 1999; Brown et al. 2004) in hypothesizing that pathways through BG have the effect of selectively enabling output from the winner of the competition between motor actions. In the schematic of Fig. 9, a “win-ner take all” or “choice” dynamic enables the cortical column responsible for the largest input to the BG to re -ceive enough thalamic activation to generate an output from its deep layer, which drives a subsequent stage of processing. In contrast, the columns representing the other items do not receive such output-enabling acti -vation from thalamus and thus have no deep layer activity. In other instances, the BG loop may simply “gate off” or scale the activity in the deep layer of the cortical column instead of performing a “choice”.

To understand the functionality of the BG loop, it is useful to consider the net effect of the excitatory and inhibitory projections between the striatum and cortex via the direct and indirect pathways. In the direct pathway, activation of a striatal cell has the effect of inhibiting the corresponding GPi/SNr cell. This dis-in -hibits the corresponding thalamic cell, which in turn excites the corresponding superficial cortical layer cell. In other words, the direct pathway is excitatory and has the specific effect of exciting only the same column(s) 9 Although we use the term “cells” to describe the smallest computational units in the model, we expect that each cell cor-responds to a small population of neurons that behave in a highly coordinated fashion rather than a single neuron. Ac-cordingly, when modeling the fMRI activity associated with a model cell’s activity, we generate a Gaussian spread of acti-vation centered at the cell’s defined location in stereotactic space. This spread also helps account for the spatial averag -ing and smoothing inherent in fMRI data analysis.10 Due to spatial resolution limits of fMRI, it is not possible to reliably distinguish deep from superficial cortical layers. The deep and superficial layer cells of a cortical column will be assumed to be at the same spatial location in our model.11 Due to space constraints, we do not explicitly address the role of the subthalamic nucleus (STN) in the indirect pathway in this application, though it is implicit in our indirect pathway and we will include it in the proposed modeling study.

PHS 398 (Rev. 09/04) Page> 27

Fig. 9. The cortico-BG-thalamo-cortical functional unit (BG loop).

C o rtex

Sup erfic ia l

Dee p

Ba sa l G a ng liaStria tum

G Pi/SNr

Tha l

To o the rc o rte x

G Pe--

-

-

Fro mo the rc o rte x

Principal Investigator/Program Director (Last, first, middle): > Guenther, Frank H.as the superficial layer cell(s) that provide the initial excitatory input to the striatum. Because it involves one extra inhibitory connection, the indirect pathway is inhibitory on thalamus and cortex. Furthermore, due to the diffuse indirect path projection, this inhibition spreads across all competing columns (i.e., across all motor action representations). Thus the larger an action’s advantage in superficial layer activation, the more it will excite itself and inhibit other actions in the BG-loop-mediated competition. This inherent connectivity provides the types of interactions that would be expected for a CQ-like choice network. The balance between direct and indirect pathways can change; e.g., the release of dopamine in the striatum has an excitatory effect on the direct pathway and an inhibitory effect on the indirect pathway (Gerfen & Wilson, 1996; Brown et al., 2004; Frank, 2005), thus biasing the system toward the direct pathway. Such a bias can affect competitions between actions. For example, a stronger indirect pathway will more strongly suppress all action; such a process may be responsible for the overall lack of movement seen in Parkinson’s disease, which is character-ized by a lack of dopamine in the striatum and corresponding bias toward the indirect pathway (e.g. Wich-mann and DeLong, 1996) in addition to pathological neuronal bursting that leads to tremor (Bevan et al., 2002).