Deep Learning Broad Overview

Learning Complex Functions

• We would like to learn complex mappings.

• Text translation

• Speech to text

• Situation to action

• The ML way: use a lot of training data to train a model.

• What should the model look like?

• We know there exists such a model…

Neural Networks

• Since we want to model things humans do, why not try to use “neural network” models?

Credit: CLARITY process. K. Deisseroth, Stanford

Disclaimer: “Deep Learning” models are very different from biological neural networks.

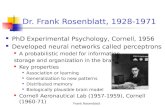

Brief History

• Initial ideas by McCulloch, Pitts and Rosenblatt (1940-1960)

• Limitations pointed out by Minsky and Papert (1969)

• In the 1980s neural network models were proposed and attracted much interest, with some empirical success.

• In the 1990s interest turned to SVMs, which used convex optimization and were empirically successful.

Neural Nets Revisited - Deep Learning

• Since 2012 neural nets have re-emerged, and showed striking success on tasks such as:

• Image understanding

• Speech recognition

• Translation

• Game playing

[Figure: example inputs and outputs for these tasks: “Wash the dishes” → “שטוף את הכלים” (Hebrew for “Wash the dishes”); “Wash the dishes”; “Plates”]

Neural Networks

• Some properties of neural networks:

• Neurons sum their inputs (via dendrites). If the sum is large enough, the neuron fires a spike or a sequence of spikes, which is transmitted along the axon.

• Visual input undergoes a sequence of processing steps (first in the retina, then in V1, V2 etc). Caution: This is highly simplified.

Artificial Neural Networks

• Construct models that have:

• Summation of inputs followed by non-linearity.

• A sequence of layers

• Last layer is often linear.

[Figure: layered network $x \to z_1 \to z_2 \to \dots \to z_L$]

$$z_0 = x$$

$$z_k = h(W_k z_{k-1} + b_k)$$
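The layer recursion can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the helper names (`affine`, `forward`), the choice of ReLU for $h$, and the toy weights are all assumptions made for the example. The last layer is kept linear, as the slide suggests.

```python
def relu(v):
    # Elementwise non-linearity h (ReLU is an assumed choice here).
    return [max(0.0, a) for a in v]

def affine(W, z, b):
    # Computes W z + b, with W given as a list of rows.
    return [sum(w_ij * z_j for w_ij, z_j in zip(row, z)) + b_i
            for row, b_i in zip(W, b)]

def forward(x, layers, h=relu):
    # layers is a list of (W_k, b_k); applies z_k = h(W_k z_{k-1} + b_k),
    # leaving the last layer linear.
    z = x
    for k, (W, b) in enumerate(layers):
        v = affine(W, z, b)
        z = v if k == len(layers) - 1 else h(v)
    return z

# Hypothetical toy network: 2 inputs -> 2 hidden units -> 1 linear output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ([[2.0, 1.0]], [0.5]),
]
out = forward([3.0, 1.0], layers)
```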

Classification with Deep Learning

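• Use network to construct binary classifier:

[Figure: network $x \to z_1 \to z_2 \to v$ with a single output $v$]

$$y = \mathrm{sign}\left[v(x)\right]$$

• Or multi-class:

[Figure: network $x \to z_1 \to z_2 \to (v_1, v_2, v_3)$ with one output per class]

$$y = \arg\max_{y'} v_{y'}(x)$$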

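Loss for Multi Class

• Given training data $(x_i, y_i)$

• Want $v_y$ to be maximized at $y_i$

• Several options for loss (as in structured prediction)

Log loss (aka cross entropy):

$$-\sum_i \log p(y_i \mid x_i; w), \qquad p(y \mid x; w) = \frac{e^{v_y(x; w)}}{\sum_{y'} e^{v_{y'}(x; w)}}$$

Hinge loss:

$$\sum_i \max_y \left[ v_y(x_i; w) - v_{y_i}(x_i; w) + \Delta_{y, y_i} \right]$$

[Figure: network $x \to z_1 \to z_2 \to (v_1, v_2, v_3)$]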
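As a rough illustration, the two losses can be computed for a single example from its score vector. The function names are hypothetical, and the margin term is assumed to be 1 for any wrong label and 0 for the correct one, which is one common choice and is not stated on the slide.

```python
import math

def log_loss(v, y):
    # -log p(y | x) where p is the softmax of the scores v.
    m = max(v)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(a - m) for a in v))
    return log_z - v[y]

def hinge_loss(v, y, margin=1.0):
    # max over labels k of [v_k - v_y + Delta(k, y)],
    # assuming Delta = margin if k != y and 0 otherwise.
    return max(v[k] - v[y] + (margin if k != y else 0.0)
               for k in range(len(v)))

scores = [2.0, 1.0, -1.0]          # hypothetical scores v_1, v_2, v_3
loss_if_first = hinge_loss(scores, 0)   # correct class wins by the margin
loss_if_second = hinge_loss(scores, 1)  # correct class is beaten by class 0
```

Both losses shrink as the score of the correct label grows relative to the others; the hinge loss reaches exactly zero once the margin is met, while the log loss only approaches zero.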

Optimization

• Want to minimize:

$$\sum_{i=1}^{n} \ell(x_i, y_i; w)$$

• Most common approach: stochastic gradient descent

$$w_{t+1} = w_t - \eta \frac{\partial}{\partial w} \ell(x_i, y_i; w_t)$$

• Popular variation: use the gradient over a batch of size $B$ (e.g., $B = 128$)

$$w_{t+1} = w_t - \frac{\eta}{B} \frac{\partial}{\partial w} \sum_{i=1}^{B} \ell(x_i, y_i; w_t)$$

• Lower variance estimate. For small $B$, it can be computed in parallel in about the same time as $B = 1$.
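The mini-batch update can be sketched on a toy problem. Everything specific here is an assumption for illustration: a scalar weight, the squared loss $\ell = \frac{1}{2}(wx - y)^2$, noise-free data generated with $w = 3$, and the particular step size and batch size.

```python
import random

def sgd(data, grad, w0, eta=0.1, batch_size=4, steps=200, seed=0):
    # Mini-batch SGD: w <- w - (eta / B) * sum of per-example gradients.
    rng = random.Random(seed)
    w = w0
    for _ in range(steps):
        batch = rng.sample(data, batch_size)
        g = sum(grad(x, y, w) for x, y in batch) / batch_size
        w = w - eta * g
    return w

def grad_sq(x, y, w):
    # Gradient of 0.5 * (w * x - y)^2 with respect to w.
    return (w * x - y) * x

# Hypothetical noise-free data from y = 3 x.
data = [(k / 10.0, 3.0 * k / 10.0) for k in range(1, 21)]
w_hat = sgd(data, grad_sq, w0=0.0)
```

Setting `batch_size=1` recovers the plain single-example update; larger batches average more gradients per step, which lowers the variance of each update.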

Optimization

• SGD often works well in practice, although this is not guaranteed, due to non-convexity and vanishing gradients (see later).

• Quite sensitive to step size and initialization.

• Many variants. One of the most successful is AdaGrad (Duchi, Hazan and Singer, 2011).

• Different step size for different coordinates.
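A minimal sketch of the per-coordinate step size idea, in the common diagonal form of AdaGrad: each coordinate's step is divided by the square root of its accumulated squared gradients. The test function, step size and `eps` constant are assumptions chosen for the example.

```python
import math

def adagrad(grad, w0, eta=0.5, steps=500, eps=1e-8):
    # Diagonal AdaGrad: coordinate j uses step eta / sqrt(sum of past g_j^2).
    w = list(w0)
    g2 = [0.0] * len(w)
    for _ in range(steps):
        g = grad(w)
        for j in range(len(w)):
            g2[j] += g[j] ** 2
            w[j] -= eta * g[j] / (math.sqrt(g2[j]) + eps)
    return w

def grad_f(w):
    # Gradient of the badly scaled quadratic 0.5 * (100 * w0^2 + w1^2).
    return [100.0 * w[0], 1.0 * w[1]]

w_final = adagrad(grad_f, [1.0, 1.0])
```

On this quadratic the two coordinates differ in curvature by a factor of 100, yet the normalized updates shrink both at essentially the same rate, which is the point of using a different step size per coordinate.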

Back propagation

• To run SGD we just need to calculate the gradient of the loss with respect to W.

• There are software tools that will do this for you (e.g., Theano, TensorFlow, Caffe, MXNet).

• The gradient calculation for feed forward nets is called back propagation (Rumelhart, Hinton and Williams, 1986)

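Back propagation

• Back propagation has three phases:

• Forward pass. For each layer calculate the linear output and the non-linear activation:

$$v_t = W_t z_{t-1}, \qquad z_t = h(v_t)$$

• Calculate the “error” vector $\delta_t$ for each layer, from last to first:

$$\delta_L = \ell'(y, z_L(x)), \qquad \delta_t = \left( h'(v_{t+1}) \circ \delta_{t+1}^T \right) W_{t+1}$$

• Set gradient:

$$\frac{\partial \ell}{\partial W_t} = \left( \delta_t \circ h'(v_t) \right) z_{t-1}^T$$

[Figure: forward pass $x \to z_1 \to z_2 \to \dots \to z_L$ and backward pass starting from $\delta_L$]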
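The three phases can be sketched for a small bias-free network. The specifics are assumptions for illustration: tanh as the activation $h$, the squared loss $\ell = \frac{1}{2}\|z_L - y\|^2$ (so that $\delta_L = z_L - y$), and the toy weights. Vectors are treated as columns, so the backward step is written as $W_t^T$ applied to $\delta_t \circ h'(v_t)$.

```python
import math

def matvec(W, z):
    return [sum(a * b for a, b in zip(row, z)) for row in W]

def h(v):
    return [math.tanh(a) for a in v]

def h_prime(v):
    return [1.0 - math.tanh(a) ** 2 for a in v]

def loss(Ws, x, y):
    z = x
    for W in Ws:
        z = h(matvec(W, z))
    return 0.5 * sum((a - b) ** 2 for a, b in zip(z, y))

def backprop(Ws, x, y):
    # Phase 1: forward pass, storing v_t and z_t for every layer.
    zs, vs = [x], []
    for W in Ws:
        vs.append(matvec(W, zs[-1]))
        zs.append(h(vs[-1]))
    # Phase 2 starts from delta_L = dl/dz_L (squared loss assumed).
    delta = [a - b for a, b in zip(zs[-1], y)]
    grads = [None] * len(Ws)
    for t in reversed(range(len(Ws))):
        e = [d * g for d, g in zip(delta, h_prime(vs[t]))]  # delta_t o h'(v_t)
        # Phase 3: gradient for this layer is e times the layer input, transposed.
        grads[t] = [[e_i * z_j for z_j in zs[t]] for e_i in e]
        # Propagate the error to the previous layer: W_t^T e.
        delta = [sum(Ws[t][i][j] * e[i] for i in range(len(e)))
                 for j in range(len(zs[t]))]
    return grads

# Hypothetical toy network and example.
Ws = [[[0.5, -0.3], [0.1, 0.8]], [[0.7, -0.2]]]
x, y = [1.0, 2.0], [0.5]
grads = backprop(Ws, x, y)
```

A useful sanity check for any hand-written gradient code is to compare one entry of `grads` against a finite-difference estimate of `loss`.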

Vanishing Gradient

• The delta functions are “products” of weights

• As the number of layers grows, they can potentially decrease exponentially. This is known as the vanishing gradient problem.

• Intuitively, a single weight in the lower layers has a small effect on the top layer.

$$\delta_L = \ell'(y, z_L(x)), \qquad \delta_t = \left( h'(v_{t+1}) \circ \delta_{t+1}^T \right) W_{t+1}$$

$$\frac{\partial \ell}{\partial W_t} = \left( \delta_t \circ h'(v_t) \right) z_{t-1}^T$$
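The exponential decay is easy to see numerically in the simplest possible case: a chain of scalar layers $z_t = h(w z_{t-1})$ with a sigmoid activation. The chain, the weight $w = 1$ and the input are assumptions for the example; since the sigmoid derivative is at most $1/4$, the gradient with respect to the input is bounded by $(|w|/4)^L$.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def input_gradient(depth, w=1.0, x=0.5):
    # |d z_L / d x| for a chain of scalar layers z_t = sigmoid(w * z_{t-1}).
    z, grad = x, 1.0
    for _ in range(depth):
        z = sigmoid(w * z)
        grad *= w * z * (1.0 - z)  # chain rule factor h'(v_t) * w
    return abs(grad)

grads_by_depth = {L: input_gradient(L) for L in (1, 5, 10, 20)}
```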

Vanishing Gradient

• This is a real problem and makes it harder to train deeper layers.

• See some analysis here.

• Models in 2012 were still relatively shallow (eight layers), but current models (e.g., ResNets) can go up to hundreds of layers using various tricks. Discussed below:

• Batch normalization

• ResNet

Batch Normalization

• One issue is the changing scale of the network outputs.

• One popular approach to this is Batch Normalization.

• It adds a mean removal and variance normalization “gadget” after each layer.
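A minimal sketch of the training-time normalization step: each coordinate of the layer output is shifted to zero mean and scaled to unit variance over the current batch. The scale `gamma`, shift `beta` and the small constant `eps` are parameters of the standard formulation rather than something stated on the slide, and they are set to fixed default values here instead of being learned.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    # batch: list of activation vectors, one per example.
    # Normalizes each coordinate over the batch, then rescales and shifts.
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((a - mean) ** 2 for a in col) / n
        for i in range(n):
            out[i][j] = gamma * (col[i] - mean) / math.sqrt(var + eps) + beta
    return out

# Hypothetical activations whose two coordinates live on very different scales.
acts = [[1.0, 100.0], [2.0, 300.0], [3.0, 200.0], [4.0, 400.0]]
normed = batch_norm(acts)
```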

ResNets

• A highly successful architecture for deep neural nets.

• Appeared in: Kaiming He, Xiangyu Zhang, Shaoqing Ren, & Jian Sun. “Deep Residual Learning for Image Recognition”. CVPR 2016.

• Model with 152 layers and high accuracy.

• Slides credit: ICML tutorial by Kaiming He.

Depth and Performance

[Figure: “Revolution of Depth”. ImageNet classification top-5 error (%) by ILSVRC entry:]

• ILSVRC'10: 28.2 (shallow)

• ILSVRC'11: 25.8 (shallow)

• ILSVRC'12 AlexNet: 16.4 (8 layers)

• ILSVRC'13: 11.7 (8 layers)

• ILSVRC'14 VGG: 7.3 (19 layers)

• ILSVRC'14 GoogleNet: 6.7 (22 layers)

• ILSVRC'15 ResNet: 3.57 (152 layers)

Kaiming He, Xiangyu Zhang, Shaoqing Ren, & Jian Sun. “Deep Residual Learning for Image Recognition”. CVPR 2016.

Failure of Standard Deep Nets

• Fails to get low training error, despite being a more expressive model

[Figure: “Simply stacking layers?” Train error (%) and test error (%) versus iter. (1e4) on CIFAR-10, for 20-layer and 56-layer plain nets]

• Plain nets: stacking 3x3 conv layers…

• 56-layer net has higher training error and test error than 20-layer net

Kaiming He, Xiangyu Zhang, Shaoqing Ren, & Jian Sun. “Deep Residual Learning for Image Recognition”. CVPR 2016.

The Identity Failure

• Why are more layers at least as expressive as fewer layers?

• You can always set the extra layer to be the identity transformation.

• But apparently this is hard to learn!

[Figure: a shallower network with “New Layers” added]

• How can we “help” the architecture to learn the shallower solution?

ResNets

• Allow layer “skipping”.

[Figure: the input to the “New Layers” is also added (“+”) to their output]

• Now if we set the red weights to zero, we get the original solution.

• The new layers need only learn the “residual”

• Also makes gradients less likely to vanish.
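The skip connection can be sketched as a block that outputs $x + F(x)$, where $F$ stands for the new layers. The two-layer form of $F$ and the helper names are assumptions for the example. With the new weights set to zero, the block reduces to the identity, which is exactly the “original solution” mentioned above.

```python
def matvec(W, z):
    return [sum(a * b for a, b in zip(row, z)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def residual_block(x, W1, W2):
    # Output is x + F(x), with F(x) = W2 relu(W1 x) as the "new layers".
    f = matvec(W2, relu(matvec(W1, x)))
    return [a + b for a, b in zip(x, f)]

x = [1.0, -2.0, 3.0]
zero = [[0.0] * 3 for _ in range(3)]
identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]

same_as_input = residual_block(x, zero, zero)        # new weights at zero
with_residual = residual_block(x, identity, identity)  # x + relu(x)
```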

Building a Network for your Task

• In building a network we need to decide on:

• Which pairs of neurons are connected? (e.g., can have K layers with all neurons in layer t connected to all neurons in layer t+1).

• What are the activation functions?

• Do different neurons use the same weights? (parameter tying).

• Application dependent. Next we discuss some aspects that work well for machine-vision.

Which non-linearity?

• The non-linearity is also called the activation function.

$$z_0 = x, \qquad z_k = h(W_k z_{k-1} + b_k)$$

• Threshold

• Sigmoid: $h(z) = \frac{1}{1 + e^{-z}}$

• Rectified linear unit (ReLU): $h(z) = \max[0, z]$

ReLU often works better than the others.
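For concreteness, the three activations can be written out together with the derivatives of the two differentiable ones. The threshold is assumed here to be a step at zero, since the slide gives no formula for it. The derivatives hint at one commonly cited reason ReLU trains more easily: the sigmoid derivative becomes tiny for large $|z|$, while the ReLU derivative stays at 1 for every positive input.

```python
import math

def threshold(z):
    # Assumed form: a step at zero.
    return 1.0 if z > 0 else 0.0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def relu_grad(z):
    # Derivative of ReLU (taken to be 0 at z = 0).
    return 1.0 if z > 0 else 0.0
```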

What’s in an image?

The ImageNet Dataset

Neural Nets for Images

• One of the striking successes of deep learning

• ImageNet dataset: 1000 classes. About a million labeled images.

• In 2012, Krizhevsky, Sutskever and Hinton published the paper “ImageNet Classification with Deep Convolutional Neural Networks”.

• Previous top-5 error: 25%. Theirs: 17%. Today: 3%!

• How did they do it?

Architectures for Images

• Combination of local features (edges, texture, color) and global (shape, relation between objects).

• Local features expected to be similar across image.

Same weights

• A convolution of the image with a filter!

Architectures for Images

• Use many such filters

• Reduce resolution by pooling (e.g., max pooling)
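Both operations can be sketched on a tiny image. The function names, the 5×5 toy image and the two-tap edge filter are assumptions for the example. The filter is applied as a sliding window without flipping (cross-correlation), which is what deep learning libraries usually call convolution, and only positions where the filter fits entirely inside the image are computed.

```python
def conv2d(image, kernel):
    # Slide the same filter weights over every image location ("valid" positions only).
    kh, kw = len(kernel), len(kernel[0])
    H, W = len(image), len(image[0])
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(W - kw + 1)]
            for i in range(H - kh + 1)]

def max_pool(fmap, size=2):
    # Non-overlapping size x size max pooling; leftover rows/columns are dropped.
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# Hypothetical image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge = [[-1, 1]]  # horizontal difference filter: responds to vertical edges
fmap = conv2d(image, edge)
pooled = max_pool(fmap)
```

The feature map lights up only at the edge, and pooling keeps that response while halving the resolution.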

“Alex Net”

Figure 2: An illustration of the architecture of our CNN, explicitly showing the delineation of responsibilities between the two GPUs. One GPU runs the layer-parts at the top of the figure while the other runs the layer-parts at the bottom. The GPUs communicate only at certain layers. The network’s input is 150,528-dimensional, and the number of neurons in the network’s remaining layers is given by 253,440–186,624–64,896–64,896–43,264–4096–4096–1000.

… neurons in a kernel map). The second convolutional layer takes as input the (response-normalized and pooled) output of the first convolutional layer and filters it with 256 kernels of size 5 × 5 × 48. The third, fourth, and fifth convolutional layers are connected to one another without any intervening pooling or normalization layers. The third convolutional layer has 384 kernels of size 3 × 3 × 256 connected to the (normalized, pooled) outputs of the second convolutional layer. The fourth convolutional layer has 384 kernels of size 3 × 3 × 192, and the fifth convolutional layer has 256 kernels of size 3 × 3 × 192. The fully-connected layers have 4096 neurons each.

4 Reducing Overfitting

Our neural network architecture has 60 million parameters. Although the 1000 classes of ILSVRC make each training example impose 10 bits of constraint on the mapping from image to label, this turns out to be insufficient to learn so many parameters without considerable overfitting. Below, we describe the two primary ways in which we combat overfitting.

4.1 Data Augmentation

The easiest and most common method to reduce overfitting on image data is to artificially enlarge the dataset using label-preserving transformations (e.g., [25, 4, 5]). We employ two distinct forms of data augmentation, both of which allow transformed images to be produced from the original images with very little computation, so the transformed images do not need to be stored on disk. In our implementation, the transformed images are generated in Python code on the CPU while the GPU is training on the previous batch of images. So these data augmentation schemes are, in effect, computationally free.

The first form of data augmentation consists of generating image translations and horizontal reflections. We do this by extracting random 224 × 224 patches (and their horizontal reflections) from the 256 × 256 images and training our network on these extracted patches (see footnote 4). This increases the size of our training set by a factor of 2048, though the resulting training examples are, of course, highly interdependent. Without this scheme, our network suffers from substantial overfitting, which would have forced us to use much smaller networks. At test time, the network makes a prediction by extracting five 224 × 224 patches (the four corner patches and the center patch) as well as their horizontal reflections (hence ten patches in all), and averaging the predictions made by the network’s softmax layer on the ten patches.

The second form of data augmentation consists of altering the intensities of the RGB channels in training images. Specifically, we perform PCA on the set of RGB pixel values throughout the ImageNet training set. To each training image, we add multiples of the found principal components, …

Footnote 4: This is the reason why the input images in Figure 2 are 224 × 224 × 3-dimensional.

From: “ImageNet Classification with Deep Convolutional Neural Networks”

60 Million parameters! Would you expect this to work?

Slide credit: Fei-Fei Li & Andrej Karpathy and Yann LeCun

What do the neurons calculate?


Avoiding Overfitting

• Many models have many more parameters than training points

• AlexNet: 1 Million points and 60 Million parameters?

• How can this not overfit??

• If you return an arbitrary network that minimizes training error, you will overfit.

• So you must use some sort of regularization.

Avoiding Overfitting

• Some approaches to avoid overfitting:

• SGD: When started from zero, will not return an arbitrary loss minimizer (e.g., in linear case will return something in span of inputs).

• Add artificial examples. e.g., add noise to image, resize, flip, etc. Uses known invariances.

• Dropout

Dropout

• The brain can overcome death of many neurons.

• It is somehow robust to losing these.

• Srivastava et al. proposed Dropout as a method to achieve this in neural models.

• Simple idea: while training, randomly reset (to zero) a subset of the neurons.

• Then individual neurons cannot be “relied” on.

• Equivalent to regularization. e.g., see Wager et al.
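The idea can be sketched on a vector of activations. Here `p` is the probability of retaining a unit, following the convention of Srivastava et al.; the function names and the test vector are assumptions for the example. At test time all units are kept and activations are scaled by `p`, which has the same effect as scaling each unit's outgoing weights by `p`, so that the expected input to the next layer matches training.

```python
import random

def dropout_train(z, p, rng):
    # Keep each unit independently with probability p; reset the rest to zero.
    return [a if rng.random() < p else 0.0 for a in z]

def dropout_test(z, p):
    # No units are dropped at test time; scale by p to match training in expectation.
    return [p * a for a in z]

rng = random.Random(0)
z = [1.0] * 10000
dropped = dropout_train(z, 0.5, rng)
kept_fraction = sum(1 for a in dropped if a != 0.0) / len(z)
```

Many libraries instead divide the kept activations by `p` during training and leave test time untouched; the two conventions give the same expected activations.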

Dropout

Srivastava, Hinton, Krizhevsky, Sutskever and Salakhutdinov

(a) Standard Neural Net (b) After applying dropout.

Figure 1: Dropout Neural Net Model. Left: A standard neural net with 2 hidden layers. Right: An example of a thinned net produced by applying dropout to the network on the left. Crossed units have been dropped.

… its posterior probability given the training data. This can sometimes be approximated quite well for simple or small models (Xiong et al., 2011; Salakhutdinov and Mnih, 2008), but we would like to approach the performance of the Bayesian gold standard using considerably less computation. We propose to do this by approximating an equally weighted geometric mean of the predictions of an exponential number of learned models that share parameters.

Model combination nearly always improves the performance of machine learning methods. With large neural networks, however, the obvious idea of averaging the outputs of many separately trained nets is prohibitively expensive. Combining several models is most helpful when the individual models are different from each other and in order to make neural net models different, they should either have different architectures or be trained on different data. Training many different architectures is hard because finding optimal hyperparameters for each architecture is a daunting task and training each large network requires a lot of computation. Moreover, large networks normally require large amounts of training data and there may not be enough data available to train different networks on different subsets of the data. Even if one was able to train many different large networks, using them all at test time is infeasible in applications where it is important to respond quickly.

Dropout is a technique that addresses both these issues. It prevents overfitting and provides a way of approximately combining exponentially many different neural network architectures efficiently. The term “dropout” refers to dropping out units (hidden and visible) in a neural network. By dropping a unit out, we mean temporarily removing it from the network, along with all its incoming and outgoing connections, as shown in Figure 1. The choice of which units to drop is random. In the simplest case, each unit is retained with a fixed probability p independent of other units, where p can be chosen using a validation set or can simply be set at 0.5, which seems to be close to optimal for a wide range of networks and tasks. For the input units, however, the optimal probability of retention is usually closer to 1 than to 0.5.

From Srivastava et al.

Dropout

… setting that do not use dropout or unsupervised pretraining achieve an error of about 1.60% (Simard et al., 2003). With dropout the error reduces to 1.35%. Replacing logistic units with rectified linear units (ReLUs) (Jarrett et al., 2009) further reduces the error to 1.25%. Adding max-norm regularization again reduces it to 1.06%. Increasing the size of the network leads to better results. A neural net with 2 layers and 8192 units per layer gets down to 0.95% error. Note that this network has more than 65 million parameters and is being trained on a data set of size 60,000. Training a network of this size to give good generalization error is very hard with standard regularization methods and early stopping. Dropout, on the other hand, prevents overfitting, even in this case. It does not even need early stopping. Goodfellow et al. (2013) showed that results can be further improved to 0.94% by replacing ReLU units with maxout units. All dropout nets use p = 0.5 for hidden units and p = 0.8 for input units. More experimental details can be found in Appendix B.1.

Dropout nets pretrained with stacks of RBMs and Deep Boltzmann Machines also give improvements as shown in Table 2. DBM-pretrained dropout nets achieve a test error of 0.79% which is the best performance ever reported for the permutation invariant setting. We note that it is possible to obtain better results by using 2-D spatial information and augmenting the training set with distorted versions of images from the standard training set. We demonstrate the effectiveness of dropout in that setting on more interesting data sets.

Figure 4: Test error for different architectures with and without dropout. The networks have 2 to 4 hidden layers each with 1024 to 2048 units. (Curve clusters labeled “With dropout” and “Without dropout”.)

In order to test the robustness of dropout, classification experiments were done with networks of many different architectures keeping all hyperparameters, including p, fixed. Figure 4 shows the test error rates obtained for these different architectures as training progresses. The same architectures trained with and without dropout have drastically different test errors as seen by the two separate clusters of trajectories. Dropout gives a huge improvement across all architectures, without using hyperparameters that were tuned specifically for each architecture.

6.1.2 Street View House Numbers

The Street View House Numbers (SVHN) Data Set (Netzer et al., 2011) consists of color images of house numbers collected by Google Street View. Figure 5a shows some examples of images from this data set. The part of the data set that we use in our experiments consists of 32 × 32 color images roughly centered on a digit in a house number. The task is to identify that digit.

For this data set, we applied dropout to convolutional neural networks (LeCun et al., 1989). The best architecture that we found has three convolutional layers followed by 2 fully connected hidden layers. All hidden units were ReLUs. Each convolutional layer was …

Beyond Classification

• Many cognitive tasks are not “simple” classification.

• Speech recognition

• Translation

• Driving

• Here the input is a sequence over time and the output is a sequence.

• Structured prediction!

Language Model

• “He stepped on my foot. It was really _____”

• Goal: predict next word $w_n$ from previous $w_1, \dots, w_{n-1}$

• Intuitively: no need to remember all the history. Just the relevant context.

Using Context States

• Say at time $n-1$, we have a vector $s_{n-1}$ capturing the relevant context. Then it makes sense to assume the context determines the next word:

$$p(w_n \mid w_1, \dots, w_{n-1}) = f(w_n, s_{n-1}(w_1, \dots, w_{n-1}))$$

• And context is updated as:

$$s_n = g(s_{n-1}, w_n)$$

• Recurrent Neural Nets (RNNs) implement this idea.

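RNNs

• Assume $w_n$ are vectors, and so are $s_n$

• State update is:

$$s_n = \sigma(A s_{n-1} + B w_n)$$

• Where $\sigma$ is an “activation function” (e.g., tanh).

• Output distribution:

$$p(w_n \mid w_1, \dots, w_{n-1}) \propto e^{v(w_n) \cdot s_{n-1}}$$

[Figure: unrolled RNN with inputs $w_1, w_2, w_3$ (“He”, “stepped”, …) feeding states $s_1, s_2, s_3$]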
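The state update and output distribution can be sketched with a toy vocabulary. All concrete values are assumptions for illustration: three words encoded as one-hot vectors, a two-dimensional state, tanh as the activation, and hand-picked matrices `A`, `B` and output vectors `V` (one vector $v(w)$ per word).

```python
import math

def matvec(W, z):
    return [sum(a * b for a, b in zip(row, z)) for row in W]

def rnn_step(s_prev, w, A, B):
    # State update s_n = tanh(A s_{n-1} + B w_n).
    return [math.tanh(a + b)
            for a, b in zip(matvec(A, s_prev), matvec(B, w))]

def next_word_probs(s_prev, V):
    # p(w_n = k | history) proportional to exp(V[k] . s_{n-1}).
    scores = [sum(a * b for a, b in zip(v, s_prev)) for v in V]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in scores]
    total = sum(exps)
    return [e / total for e in exps]

A = [[0.5, 0.0], [0.0, 0.5]]
B = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
V = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

s = [0.0, 0.0]
for word_id in [0, 1]:  # feed a two-word history
    one_hot = [1.0 if k == word_id else 0.0 for k in range(3)]
    s = rnn_step(s, one_hot, A, B)
probs = next_word_probs(s, V)
```

The same `A` and `B` are reused at every time step, so the history of any length is summarized in a state vector of fixed size.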

Sequence to Sequence Mapping

• Similar approach can be used for translation.

• Proposed in “Sequence to Sequence Learning with Neural Networks” by Sutskever et al. 2014

• State of the art speech recognition and translation.

… sequence of words representing the answer. It is therefore clear that a domain-independent method that learns to map sequences to sequences would be useful.

Sequences pose a challenge for DNNs because they require that the dimensionality of the inputs and outputs is known and fixed. In this paper, we show that a straightforward application of the Long Short-Term Memory (LSTM) architecture [16] can solve general sequence to sequence problems. The idea is to use one LSTM to read the input sequence, one timestep at a time, to obtain large fixed-dimensional vector representation, and then to use another LSTM to extract the output sequence from that vector (fig. 1). The second LSTM is essentially a recurrent neural network language model [28, 23, 30] except that it is conditioned on the input sequence. The LSTM’s ability to successfully learn on data with long range temporal dependencies makes it a natural choice for this application due to the considerable time lag between the inputs and their corresponding outputs (fig. 1).

There have been a number of related attempts to address the general sequence to sequence learning problem with neural networks. Our approach is closely related to Kalchbrenner and Blunsom [18] who were the first to map the entire input sentence to vector, and is very similar to Cho et al. [5]. Graves [10] introduced a novel differentiable attention mechanism that allows neural networks to focus on different parts of their input, and an elegant variant of this idea was successfully applied to machine translation by Bahdanau et al. [2]. The Connectionist Sequence Classification is another popular technique for mapping sequences to sequences with neural networks, although it assumes a monotonic alignment between the inputs and the outputs [11].

Figure 1: Our model reads an input sentence “ABC” and produces “WXYZ” as the output sentence. The model stops making predictions after outputting the end-of-sentence token. Note that the LSTM reads the input sentence in reverse, because doing so introduces many short term dependencies in the data that make the optimization problem much easier.

The main result of this work is the following. On the WMT’14 English to French translation task, we obtained a BLEU score of 34.81 by directly extracting translations from an ensemble of 5 deep LSTMs (with 380M parameters each) using a simple left-to-right beam-search decoder. This is by far the best result achieved by direct translation with large neural networks. For comparison, the BLEU score of a SMT baseline on this dataset is 33.30 [29]. The 34.81 BLEU score was achieved by an LSTM with a vocabulary of 80k words, so the score was penalized whenever the reference translation contained a word not covered by these 80k. This result shows that a relatively unoptimized neural network architecture which has much room for improvement outperforms a mature phrase-based SMT system.

Finally, we used the LSTM to rescore the publicly available 1000-best lists of the SMT baseline on the same task [29]. By doing so, we obtained a BLEU score of 36.5, which improves the baseline by 3.2 BLEU points and is close to the previous state-of-the-art (which is 37.0 [9]).

Surprisingly, the LSTM did not suffer on very long sentences, despite the recent experience of other researchers with related architectures [26]. We were able to do well on long sentences because we reversed the order of words in the source sentence but not the target sentences in the training and test set. By doing so, we introduced many short term dependencies that made the optimization problem much simpler (see sec. 2 and 3.3). As a result, SGD could learn LSTMs that had no trouble with long sentences. The simple trick of reversing the words in the source sentence is one of the key technical contributions of this work.

A useful property of the LSTM is that it learns to map an input sentence of variable length into a fixed-dimensional vector representation. Given that translations tend to be paraphrases of the source sentences, the translation objective encourages the LSTM to find sentence representations that capture their meaning, as sentences with similar meanings are close to each other while different …

Vanishing Gradient in RNN

• For long sequences RNNs are a deep network.

• Usual problems of vanishing gradients.

• Related problem: hard to maintain state values over long periods.

• The Long Short Term Memory (LSTM) architecture (Hochreiter and Schmidhuber, 97 and Graves et al, 2009) addresses this by allowing state to not be updated in certain coordinates.

LSTMs

• Key ideas in LSTM:

• The state memory $c_t$ is not the same as its observed value $h_t$

• Some coordinates of the states may be reset, some may be updated by the input

• These “binary” decisions are functions of the input, and are actually differentiable functions of the input (sigmoids).
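These ideas can be sketched as a single step of the widely used LSTM formulation with forget, input and output gates. The parameter layout (a dict of `(W, b)` pairs acting on the concatenation of $h_{t-1}$ and the input) and the toy values are assumptions for the example. The biases are chosen so that the forget gate is almost 1 and the input gate almost 0, which shows how a memory coordinate can be carried unchanged across time steps.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def affine(W, b, z):
    return [sum(w * a for w, a in zip(row, z)) + b_i
            for row, b_i in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    # params maps "f", "i", "o", "g" to (W, b) acting on [h_prev, x].
    z = h_prev + x  # list concatenation
    f = [sigmoid(a) for a in affine(*params["f"], z)]    # forget gate: keep or reset memory
    i = [sigmoid(a) for a in affine(*params["i"], z)]    # input gate: write new content or not
    o = [sigmoid(a) for a in affine(*params["o"], z)]    # output gate: how much memory is observed
    g = [math.tanh(a) for a in affine(*params["g"], z)]  # candidate content from the input
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

# Hypothetical 1-D cell: forget gate saturated near 1, input gate near 0.
params = {
    "f": ([[0.0, 0.0]], [20.0]),
    "i": ([[0.0, 0.0]], [-20.0]),
    "o": ([[0.0, 0.0]], [0.0]),
    "g": ([[0.0, 1.0]], [0.0]),
}
h, c = [0.0], [0.7]
for x in ([5.0], [-3.0], [1.0]):
    h, c = lstm_step(x, h, c, params)
```

Because the gates are sigmoids rather than hard 0/1 switches, the whole step stays differentiable and can be trained with back propagation.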

LSTMs

• See nice explanation in http://colah.github.io/posts/2015-08-Understanding-LSTMs/

[Figure: cell state passing from $C_{t-1}$ to $C_t$ through a gate $\sigma$ with values between 0 and 1]

LSTMs

• Closely related variant: Gated recurrent unit (GRU) by Cho et al. 2014

• LSTMs are pretty standard for sequence to sequence models (also for language models)

• Often outperform plain RNNs

Attention

• “Remembering” a whole sentence is pretty wasteful if we can have access to specific parts that are relevant.

• Basic idea for attention models (introduced for translation by Bahdanau et al. 2014 and then to vision by Xu et al. 2015)

• Key idea: predict next element by combining:

• Current state

• Certain segment of the past (look at soft vs hard)
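A minimal sketch of the “soft” variant: the current state is scored against every past position, the scores are turned into weights with a softmax, and the weighted average of the past is combined with the state downstream. Dot-product scoring is used here purely for brevity; Bahdanau et al. score with a small learned network instead. The names `query`, `keys` and `values` and the toy vectors are assumptions for the example.

```python
import math

def soft_attention(query, keys, values):
    # Weights alpha = softmax(query . keys[j]); context = sum_j alpha_j * values[j].
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    d = len(values[0])
    context = [sum(a * v[j] for a, v in zip(alphas, values)) for j in range(d)]
    return context, alphas

# Hypothetical current state (query) and three past positions.
query = [10.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
context, alphas = soft_attention(query, keys, values)
```

“Hard” attention would instead sample a single position according to `alphas`, which is no longer differentiable and needs a different training method.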

Attention for Caption Generation

Neural Image Caption Generation with Visual Attention

Figure 2. Attention over time. As the model generates each word, its attention changes to reflect the relevant parts of the image. “soft” (top row) vs “hard” (bottom row) attention. (Note that both models generated the same captions in this example.)

Figure 3. Examples of attending to the correct object (white indicates the attended regions, underlines indicated the corresponding word)

… two variants: a “hard” attention mechanism and a “soft” attention mechanism. We also show how one advantage of including attention is the ability to visualize what the model “sees”. Encouraged by recent advances in caption generation and inspired by recent success in employing attention in machine translation (Bahdanau et al., 2014) and object recognition (Ba et al., 2014; Mnih et al., 2014), we investigate models that can attend to salient part of an image while generating its caption.

The contributions of this paper are the following:

• We introduce two attention-based image caption generators under a common framework (Sec. 3.1): 1) a “soft” deterministic attention mechanism trainable by standard back-propagation methods and 2) a “hard” stochastic attention mechanism trainable by maximizing an approximate variational lower bound or equivalently by REINFORCE (Williams, 1992).

• We show how we can gain insight and interpret the results of this framework by visualizing “where” and “what” the attention focused on. (see Sec. 5.4)

• Finally, we quantitatively validate the usefulness of attention in caption generation with state of the art performance (Sec. 5.3) on three benchmark datasets: Flickr8k (Hodosh et al., 2013), Flickr30k (Young et al., 2014) and the MS COCO dataset (Lin et al., 2014).

2. Related Work

In this section we provide relevant background on previous work on image caption generation and attention. Recently, several methods have been proposed for generating image descriptions. Many of these methods are based on recurrent neural networks and inspired by the successful use of sequence to sequence training with neural networks for machine translation (Cho et al., 2014; Bahdanau et al., 2014; Sutskever et al., 2014). One major reason image caption generation is well suited to the encoder-decoder framework (Cho et al., 2014) of machine translation is because it is analogous to “translating” an image to a sentence.

The first approach to use neural networks for caption generation was Kiros et al. (2014a), who proposed a multimodal log-bilinear model that was biased by features from the image. This work was later followed by Kiros et al. (2014b) whose method was designed to explicitly allow a natural way of doing both ranking and generation. Mao et al. (2014) took a similar approach to generation but replaced a feed-forward neural language model with a recurrent one. Both Vinyals et al. (2014) and Donahue et al. (2014) use LSTM RNNs for their models. Unlike Kiros et al. (2014a) and Mao et al. (2014) whose models see the image at each time step of the output word sequence, Vinyals et al. (2014) only show the image to the RNN at the beginning. Along …


Image

Attention map


Output words

From: Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. Xu et al.

Neural Nets for Algorithms

• In computer science a program can make use of memory, as well as control flow like loops.

• Can be implemented by RNN, but not natural.

• Instead, memory and control can be added directly.

• Some examples: Neural Turing Machines, Differentiable Neural Computers.

Summary

• The art and challenge of deep learning consist of:

• Designing an architecture that fits your goal

• Controlling for overfitting

• Tricks to avoid bad local optima.

• Implementation. Easier with existing frameworks (Google TensorFlow, Caffe, Torch, Amazon MXNet, MSR CNTK etc).