D6.2 Final Evaluation Report - comsode.eu

DELIVERABLE D6.2

Final Evaluation Report

Project Components Supporting the Open Data Exploitation

Acronym COMSODE

Contract Number FP7-ICT-611358

Start date of the project 1st October 2013

Duration 24 months, until 30th September 2015

Date of preparation 30. 6. 2015

Author(s) Martin Nečaský, Jakub Klímek, Jan Kučera, Ján Gondol’, Oskár Štoffan, Tomáš Knap

Responsible of the deliverable Martin Nečaský

Email [email protected]

Reviewed by Ivan Hanzlík

Status of the Document Final

Version 1.0

Dissemination level

Public

History

| Version | Date | Description | Revised by |
|---|---|---|---|
| 0.1 | 2015-06-01 | Deliverable structure established | Jan Kučera |
| 0.2 | 2015-06-14 | Draft content added | Jan Kučera |
| 0.3 | 2015-06-24 | Assessment of the methodologies summarized | Jan Kučera |
| 0.4 | 2015-06-25 | Testing results added | Jan Kučera |
| 0.5 | 2015-06-29 | Finalization of the deliverable | Jan Kučera, Martin Nečaský, Tomáš Knap, Jakub Klímek |
| 1.0 | 2015-06-30 | Final version of the deliverable | Jan Kučera |

Table of contents

1 EXECUTIVE SUMMARY

2 DELIVERABLE CONTEXT

2.1 PURPOSE OF DELIVERABLE

2.2 RELATED DOCUMENTS

3 METHODOLOGY USED

3.1 METHODOLOGY

3.2 PARTNER CONTRIBUTIONS

4 ODN TESTING METHODOLOGY

4.1 TESTING ARTEFACTS

4.1.1 Test Project, Test Plan and the Test Suites

4.1.2 Test Case

4.2 ROLES OF THE TESTING TEAM MEMBERS

4.3 TESTING WORKFLOW

5 EVALUATION OF THE REQUIREMENTS

5.1 TESTED OPEN DATA NODE RELEASE

5.2 TEST PLATFORM

5.3 OVERVIEW OF THE TEST CASES

5.4 EVALUATION OF THE ODN PLATFORM ON THE BASE OF TEST RESULTS

6 REVIEW OF THE COMSODE METHODOLOGIES

6.1 COMSODE METHODOLOGIES REVIEW STRATEGY

6.2 REVIEW RESULTS

6.2.1 D5.1 Methodology for publishing datasets as open data

6.2.2 D5.4 Methodologies for the integration of datasets, examples of publication with the ODN, integration techniques and tools

6.2.3 D5.5 Contribution to international standards and best practices

7 CONCLUSIONS

8 REFERENCES

ANNEX 1 – TEST CASES

ANNEX 2 – ODN USER STORIES STATUS

ANNEX 3 – TEST RESULTS

1 Executive summary

In this deliverable we present the final results of the evaluation of the Open Data Node platform (ODN) and of the methodologies developed in the COMSODE project. The goals of the deliverable are:

To provide an overview of the test scenarios and test cases used during the testing of the COMSODE deliverables.

To report the results of evaluating how well the ODN platform satisfies the proposed requirements.

To report the results of the review of the methodologies developed in the COMSODE project.

This deliverable updates and extends the deliverable D6.1 Preliminary evaluation report. The ODN Testing Methodology described in D6.1 was followed to develop the test cases and to perform the testing. Test cases were developed to verify that the requirements placed on the Open Data Node platform and on the DPUs that enable its data extraction, transformation and loading (ETL) capabilities are satisfied in the final product. These requirements are described in the project deliverables D2.1 and D3.2. Because the requirements are sometimes very detailed, user stories were developed from them that bundle related requirements into scenarios describing typical use cases of the ODN platform from the user perspective. Therefore some of the test cases are aimed at the user stories rather than the underlying requirements.

Results of the testing of the Open Data Node release v1.0.2 are reported in the deliverable. Despite the fact that the testing revealed some issues in the solution, Open Data Node release v1.0.2 brings many improvements when compared to the version v0.10.1 discussed in D6.1, for example implementation of REST API, fine tuning and testing synchronization between internal and public catalogue, improved dialogs and tooltips, integration with dataset visualizations or advanced DPU features such as "per-graph" execution or new DPU helpers. Further improvements are planned for the upcoming ODN release.

Results of the review of the following methodologies developed in the project are newly reported in this deliverable:

D5.1 Methodology for publishing datasets as open data

D5.4 Methodologies for the integration of datasets, examples of publication with the ODN, integration techniques and tools

D5.5 Contribution to international standards and best practices

Reviews of D5.1 were positive, and the reviewers also provided valuable comments and suggestions for improving the text of the methodology. The deliverables D5.4 and D5.5 were reviewed as drafts, so the reviewers' comments and suggestions might help the project members to improve the quality of these deliverables.

2 Deliverable context

2.1 Purpose of deliverable

This deliverable builds upon the deliverable D6.1 Preliminary evaluation report. The aim of the deliverable D6.2 is to update the testing results described in D6.1 with the results of testing of the current ODN release and to provide the results of the evaluation of the methodologies developed in the project. The goals of the deliverable are:

To provide an overview of the test scenarios and test cases used during the testing of the COMSODE deliverables.

To report the results of evaluating how well the ODN platform satisfies the proposed requirements.

To report the results of the review of the methodologies developed in the COMSODE project.

2.2 Related documents

Test cases described in this deliverable were developed to verify that the requirements placed on the Open Data Node platform and on the DPUs that enable its data extraction, transformation and loading (ETL) capabilities are satisfied in the final product. These requirements are described in the project deliverables D2.1 and D3.2. Because the requirements are sometimes very detailed, user stories were developed from them that bundle related requirements into scenarios describing typical use cases of the ODN platform from the user perspective. Therefore, some of the test cases are aimed at the user stories rather than the underlying requirements. These user stories are documented at <https://utopia.sk/wiki/display/ODN/User+stories>.

Related project deliverables:

D2.1 - User requirements for publication platform from target organizations, including the map of typical environment

D3.2 - Summary report on user requirements and techniques for data transformation, quality assessment, cleansing, data integration and intended data consumption of the selected datasets

D5.1 - Methodology for publishing datasets as open data

D5.4 - Methodologies for deployment and usage of COMSODE publication platform (ODN), tools and data

D5.5 - Contribution to international standards and best practices

D6.1 - Preliminary Evaluation Report

Related external documents:

User stories documented at: <https://utopia.sk/wiki/display/ODN/User+stories>

3 Methodology used

3.1 Methodology

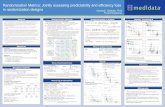

The methodology applied to prepare this deliverable is summarized in Figure 1.

Figure 1: Overview of the deliverable D6.2 development

The ODN Testing Methodology, which describes guidelines for testing of the ODN platform, was proposed in D6.1 and was followed during the development of D6.2. The ODN Testing Methodology (see section 4) defines the testing workflow as well as the roles of the testing team members. Following this methodology, a set of test cases was developed that addresses the requirements specified in the user stories (developed on top of the requirements described in D2.1; see footnote 1) and in the deliverable D3.2 – Summary report on user requirements and techniques for data transformation, quality assessment, cleansing, data integration and intended data consumption of the selected datasets. Test cases that cover the requirements targeted for the ODN release 1.0.2 were executed in order to put the most recent version of the ODN platform under test.

Deliverable D6.2 extends the deliverable D6.1 with the results of the review of methodologies developed during the project (these methodologies are described in the deliverables D5.1, D5.4 and D5.5). Review strategy was set that was then used to prepare the evaluation form for collecting the review results.

Both the findings of the testing of the ODN platform and the results of the review of the COMSODE methodologies were summarized in this deliverable.

Deliverable D6.2 Final Evaluation Report is the second of three planned deliverables that deal with the testing and evaluation of the outcomes of the COMSODE project. It will be followed by the deliverable D6.3 Testing report, which will provide the results of the acceptance testing as well as the final evaluation of the documentation.

3.2 Partner contributions

The responsible partner for the deliverable is DSE CUNI that also summarized the available testing and review results for the purposes of this deliverable. EEA developed the ODN testing methodology and provided the instance of the TestLink server which is used for management and tracing of the testing process. EEA and DSE CUNI developed the user scenarios and test cases. Testing of the ODN platform was performed by DSE CUNI and EEA. UNIMIB reviewed the COMSODE testing methodology. DSE CUNI and MoI prepared the COMSODE Methodologies Review Strategy and the related evaluation form.

1 https://utopia.sk/wiki/display/ODN/User+stories

4 ODN Testing Methodology

The ODN Testing Methodology was defined in order to manage the testing of the ODN during the COMSODE project. The testing process is supported by the TestLink software (see footnote 2), which provides features for:

identification and tracking of the requirements,

management of the testing and definition of the test plan,

definition of the test cases,

tracking results of the testing and the test reporting.

TestLink (v. 1.9.13) is test management software, not test automation software. Therefore all the test cases are either manual or automated by means other than TestLink.

Due to the use of the TestLink software the ODN Testing Methodology follows the recommendations proposed by the TestLink User Manual (TestLink Community, 2012).

4.1 Testing artefacts

This section provides definitions of the basic artefacts related to the testing process. The TestLink software provides the necessary structure of these artefacts. The basic testing artefacts are: Test Case, Test Suite, Test Plan and Test Project.

According to (TestLink Community, 2012):

Test Project in TestLink organizes related Test Specifications, Test Cases, Requirements and Keywords into a logical unit. Every User needs to have a defined role in the Test Project.

Test Case “describes a testing task via steps (actions, scenario) and expected results.” Test Case is the basic element of the TestLink software.

Test Suite is a set of related Test Cases that divides a Test Specification into logical parts.

Test Plan helps to manage execution of the Test Cases. Test Plan contains the Test Cases from the current Test Project. A Test Plan consists of Builds, Milestones, user assignment (e.g. assignments of testers to the respective Test Cases) and the Test Results.

4.1.1 Test Project, Test Plan and the Test Suites

One Test Project for the testing of the ODN platform was created in the TestLink environment. Then a test plan was created for testing of the ODN v1.0.2 release.

Test suites might be nested into a hierarchical structure. Therefore we structure test suites according to the ODN modules that provide the functionality targeted by the test suite. Because a user story might represent a complex set of tasks performed using the ODN platform, more than one test case might be needed to evaluate it. Therefore the test suites might be divided into sub-test suites according to the user stories if needed.

2 http://testlink.org/

For every release of the Open Data Node a test plan should be created. Every release implements a set of software requirements (user stories implemented in the ODN releases are summarized in Annex 2). A test case can target one or more of the software requirements. At the same time a software requirement can be evaluated by one or more test cases. One or more builds of the software are tested during testing of an ODN release. Usually a build is compiled and then tested. The identified bugs are then fixed and a new build is compiled. Several such iterations might be executed during the testing of an ODN release.
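The many-to-many relation between test cases and software requirements described above can be sketched as a small traceability mapping. The Python below is only an illustration under stated assumptions: the test case identifiers (`TC-1` and so on) are hypothetical, the user story identifiers are borrowed from this report purely as examples, and nothing here reflects TestLink's own data model or API.

```python
from collections import defaultdict

# Hypothetical mapping: test case id -> requirements (user stories) it targets.
# The test case ids are invented for illustration.
test_case_targets = {
    "TC-1": {"sto_1"},
    "TC-2": {"sto_1", "sto_51"},
    "TC-3": {"sto_31"},
}

# Requirements assumed to be implemented by the release under test (illustrative subset).
release_requirements = {"sto_1", "sto_31", "sto_34", "sto_51"}


def traceability(targets):
    """Invert the mapping: requirement -> set of test cases that evaluate it."""
    matrix = defaultdict(set)
    for test_case, requirements in targets.items():
        for requirement in requirements:
            matrix[requirement].add(test_case)
    return dict(matrix)


def uncovered(requirements, targets):
    """Requirements of the release that are not targeted by any test case."""
    return requirements - set(traceability(targets))


matrix = traceability(test_case_targets)
print(sorted(matrix["sto_1"]))  # test cases that evaluate sto_1
print(sorted(uncovered(release_requirements, test_case_targets)))
```

Inverting the mapping once makes both questions cheap to answer: which test cases evaluate a given requirement, and which requirements of a release are not yet covered.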

4.1.2 Test Case

A test case is structured into the following sections:

Identifier – Automatically assigned by TestLink

Title – Title of the test case

Version – Version of the Test Case, different versions of the Test Case can be assigned to different Test Plans

Summary – Summary of the test case. It should describe what the purpose of the test case is and it should provide context for the performed steps.

Preconditions – Preconditions that need to be satisfied before the test case is executed, i.e. the state of the system that must exist before a test case can be performed, the user role within the system that allows the user to perform the required step actions, or the test environment in which the test is performed. Preconditions ensure that the test cases are executed in a consistent manner and that any inputs required in order to achieve the expected results are available.

Step actions – Every test case provides a step-by-step description of how the test case is executed.

Expected results of the step actions – For each of the step actions expected results are described. Evaluation of the test case is based on comparison of the actual results with the expected results of the step actions. All the expected results must be met in order to classify the test case as “Passed”.

Execution type – Type of the test case. It can be either automatic or manual.

Estimated execution duration – Estimated duration of the test case execution in minutes.

Priority – Priority of the test case. It should correspond to the priority of the requirements. The highest priority should be set to the test cases aimed at the “MUST” requirements.

Status – Status of the test case (draft or final).

Keywords – A test case can be classified with a set of keywords.
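The structure of a test case and the rule that all expected results must be met for a "Passed" classification can be sketched as follows. This is a minimal illustration, not TestLink's data model: the class and field names, the default values and the example test case are assumptions made for this sketch, and only the "Passed" and "Failed" outcomes are modelled.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    PASSED = "Passed"
    FAILED = "Failed"


@dataclass
class Step:
    action: str    # step action performed by the tester
    expected: str  # expected result of that step action


@dataclass
class OdnTestCase:
    # Field names follow the sections listed above; the types are assumptions.
    identifier: str
    title: str
    version: int
    summary: str
    preconditions: str
    steps: list
    execution_type: str = "manual"   # "manual" or "automatic"
    estimated_minutes: float = 0.0
    priority: str = "medium"         # hypothetical scale
    status: str = "draft"            # "draft" or "final"
    keywords: set = field(default_factory=set)


def evaluate(case, step_outcomes):
    """Return PASSED only if every step's actual result met its expected result."""
    if len(step_outcomes) != len(case.steps):
        raise ValueError("one outcome is needed per step action")
    return Verdict.PASSED if all(step_outcomes) else Verdict.FAILED


# Hypothetical example test case with two step actions.
login_case = OdnTestCase(
    identifier="TC-1",
    title="Login into ODN",
    version=1,
    summary="Checks that a registered user can log in.",
    preconditions="A user account exists.",
    steps=[
        Step("Open the login page", "The login form is displayed"),
        Step("Submit valid credentials", "The user is logged in"),
    ],
)

print(evaluate(login_case, [True, True]).value)
print(evaluate(login_case, [True, False]).value)
```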

4.2 Roles of the testing team members

Because the TestLink software was selected as the test management tool, the roles of the testing team members correspond to the roles that exist in TestLink. Each of the roles can access a different set of the features provided by TestLink. One or more of the following roles can be assigned to a testing team member (adapted from (TestLink Community, 2012)):

Guest – guest is able to browse the test results and view the metrics.

Tester – tester (test executor) executes the assigned test cases and describes the test results.

Test Designer – test designer/analyst documents the product requirements and specifies the related test cases. Test analyst is also allowed to execute the test cases and report the results.

Test Manager – test manager (test leader) defines the test plan, i.e. s/he selects the appropriate test suites, sets priorities, selects builds to be tested and defines ownership of the requirements/test cases. Test leader can also document the product requirements.

Administrator – administrator manages the users and performs backups of the contents.

4.3 Testing workflow

Testing workflow of the ODN Testing Methodology consists of the following steps:

1) Creation of the Test Project
2) Assigning roles to the testing team members within the Test Project
3) Definition of the Software Requirements for the system (at least for the Release to be tested) (*)
4) Creation of the Requirements Specifications
   a. Import/creation of the Software Requirements for each Requirement Specification (Requirements Specifications can be extended at any time)
5) Development of a Release (*)
6) Creation of the Test Cases according to the Software Requirements covered by the Release
7) Mapping the Test Cases to the Software Requirements (enables tracking of which Software Requirements are covered by which Test Cases - Requirement Traceability Matrix)
8) Creation of the Test Environment and the Test Data (*)
9) Creation of the Test Plan (related to some already developed Release)
10) Creation and assigning of Test Platform(s) to Test Plan
11) Creation of Build (first Build starts with 0, with increments of 1) for a Test Plan, creation of Bugfix Release in accordance with Build number
   a. Selection of the Test Cases for the Test Plan
   b. Assigning Test Platform(s) to Test Cases
   c. Assigning testers (User(s) with tester Role) to the Test Cases
   d. Defining the testing Milestones for the Test Plan
12) Test Execution for each Test Platform assigned
   a. For any failed or blocked test case one or more issues are created in the ODN development repository issue tracker (see footnote 3)
13) Evaluation of test results
   a. If the test results are not satisfactory, the bugs need to be fixed (*). After fixing the bugs, repeat step no. 11 by creating a new Build for the Test Plan, including a new set (often only a subset) of Test Cases to be tested
   b. If the test results are satisfactory, repeat step no. 5 (development of a new Release); alternatively go to step no. 3 and add new Software Requirements if needed

Steps marked with (*) are not performed within the TestLink environment. Software Requirements were specified in the deliverables D2.1 and D3.2. Based on these requirements a set of user stories is being developed (see footnote 4). We decided to use the user stories as a base for development of the Test Cases because the requirements described in the deliverable D2.1 are further developed in the user stories and they represent useful features from the user perspective.

Development of the Open Data Node (Release) happens outside the TestLink environment as well as the bug fixing. Creation of the Test Environment and the Test Data also happens outside the TestLink environment.

Test Data represent samples of data used for execution of the Test Cases. Test Environment represents a configuration of the HW and SW components that undergo the testing. Once an instance of the Test Environment is created it becomes the Test Platform.

Waffle.io is used for visualization of the testing progress.
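The iteration over builds in steps 11 to 13 of the workflow can be sketched as a simple loop. This is a simplified illustration under stated assumptions: the function names, the `execute` callback and the result strings are hypothetical, bug fixing happens outside the code, and each new build retests only the test cases that did not pass, whereas the methodology allows any new set of test cases to be selected.

```python
def run_test_plan(test_cases, execute, max_builds=10):
    """Test a build, then retest on the next build whatever did not pass."""
    build = 0                    # the first Build starts with 0
    pending = list(test_cases)
    history = []
    while pending and build < max_builds:
        results = {tc: execute(tc, build) for tc in pending}
        history.append((build, results))
        # Failed or blocked test cases lead to issues; after the fixes
        # they are retested on the next Build.
        pending = [tc for tc, result in results.items() if result != "passed"]
        build += 1               # Builds are incremented by 1
    return history


def fake_execute(test_case, build):
    """Hypothetical stand-in for manual execution: TC-2 fails on build 0 only."""
    return "failed" if (test_case == "TC-2" and build == 0) else "passed"


history = run_test_plan(["TC-1", "TC-2", "TC-3"], fake_execute)
for build, results in history:
    print(build, results)
```

The `max_builds` limit is only a safeguard for the sketch; in practice the decision to stop iterating is taken when the test results are evaluated.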

3 https://github.com/OpenDataNode/open-data-node/issues

4 The user stories are documented at: <https://utopia.sk/wiki/display/ODN/User+stories>

The testing workflow is depicted in Figure 2.

Figure 2: Testing workflow in the ODN Testing Methodology

5 Evaluation of the requirements

5.1 Tested Open Data Node release

Open Data Node v1.0.2 is the ODN release that the testing results refer to. It is a bug-fixing release of v1.0.1, which implements new features compared to the previous releases.

According to (Štoffan and Krchnavý, 2015) there are the following categories of the user stories:

User stories for the Data Consumer – Data consumer can be any subject or person that consumes the data published using the ODN platform. Data Consumer can access the publicly available datasets and their metadata. This role could be represented by the general public as well as by a data publisher tuning the pipelines.

User stories for the Data Publisher – Data publisher is responsible for managing datasets available and for maintenance of the respective catalogue records.

User stories for the ODN Administrator – Administrator is a person responsible for administration and management of some ODN instance. S/he installs, updates and configures the ODN platform.

User stories for the Application developer – Application developer is a special type of data consumer who accesses the data via the REST/SPARQL API or downloads dumps, uses feeds to obtain new CKAN resources, and uses the data in an application.

User stories and the status of their implementation are summarized in Annex 2.

5.2 Test Platform

The test platform consists of a server with a running ODN instance and a client (web browser). A testing server with the following configuration was used for the testing:

RAM: 16GB

CPU: 8 core

Storage: 100GB for system, 500GB for data

OS: Debian Wheezy (stable)

Mozilla Firefox v.34.0.5 (or newer) is the required client browser.

5.3 Overview of the test cases

Test cases are organized into Test Suites. A Test Suite groups the Test Cases related to the features of some of the ODN modules. The Test Suites are further divided into Sub-Test Suites according to the corresponding user stories. The following Test Suites were defined:

Data Retrieval – this test suite contains test cases aimed at the retrieval of the data published using the ODN platform.

Dataset Management – this test suite contains test cases that focus on dataset management features of the ODN platform.

Installation and GUI Management – this test suite contains test cases for the GUI management features and the installation features of the ODN.

Metadata Management – this test suite contains test cases aimed at the features related to provision and consumption of the machine readable metadata.

ODN/UV DPUs – this test suite contains test cases aimed at the DPU requirements specified in the deliverable D3.2.

Catalog – this test suite contains test cases related to the data cataloguing features.

Pipeline – this test suite contains test cases aimed at the data transformation pipelines features.

User Management – this test suite contains test cases related to the management of the ODN user accounts.

Non-functional – this test suite contains test cases for testing the non-functional requirements.

Table 1 summarizes the number of test cases per test suite and it also shows that only a subset of the currently developed test cases was selected for the test plan aimed at the ODN v1.0.2 release.

Table 1: Number of test cases per test suite

| Test suite | Current number of test cases | Number of test cases in the 1.0.2 test plan |
|---|---|---|
| Catalog | 34 | 25 |
| Pipeline | 48 | 16 |
| User Management | 14 | 12 |
| Data Retrieval | 21 | 8 |
| Dataset Management | 18 | 10 |
| Metadata Management | 15 | 2 |
| Installation and GUI Management | 10 | 7 |
| ODN/UV DPUs | 103 | 97 |
| Non-functional | 57 | 27 |
| Total | 331 | 204 |

Note, however, that the test cases are being developed continuously. Development of the ODN platform follows an iterative approach. New user stories are being developed for the planned releases, and the development of the test cases follows the development of the user stories. The number of test cases might therefore increase in the future.

Some test cases that contribute to the total number presented in table 1 are related to the features that are planned to be implemented in the future ODN releases. Therefore only a subset of test cases was selected for the test plan of the release v1.0.2.
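The selection of test cases per suite can be checked with a few lines of arithmetic. The sketch below copies the per-suite rows of Table 1 and computes the column sums and the share of each suite selected for the v1.0.2 test plan; the variable names are arbitrary. The test plan column sums to the reported total of 204. The per-suite figures in the first column, as transcribed here, sum to 320, whereas the Total row lists 331; the reason for this difference is not evident from the table itself.

```python
# Rows copied from Table 1:
# (test suite, current number of test cases, number of test cases in the 1.0.2 test plan)
TABLE_1 = [
    ("Catalog", 34, 25),
    ("Pipeline", 48, 16),
    ("User Management", 14, 12),
    ("Data Retrieval", 21, 8),
    ("Dataset Management", 18, 10),
    ("Metadata Management", 15, 2),
    ("Installation and GUI Management", 10, 7),
    ("ODN/UV DPUs", 103, 97),
    ("Non-functional", 57, 27),
]

total_current = sum(current for _, current, _ in TABLE_1)
total_in_plan = sum(in_plan for _, _, in_plan in TABLE_1)

# Share of each suite's test cases that was selected for the v1.0.2 test plan.
selected_share = {suite: in_plan / current for suite, current, in_plan in TABLE_1}

print("sum of the per-suite current counts:", total_current)
print("test cases in the 1.0.2 test plan:", total_in_plan)
for suite, share in sorted(selected_share.items(), key=lambda item: item[1]):
    print(f"{suite}: {share:.0%}")
```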

Table 2 lists user stories covered with at least one test case in the test plan for the ODN v1.0.2. Complete list of user stories is provided in Annex 2.

Table 2: Test coverage of the user stories

| User story | Category | No. of test cases |
|---|---|---|
| sto_20: Publicly available catalog record browser | Data Consumer | 1 |
| sto_21: Publicly available catalog records search using keywords | Data Consumer | 1 |
| sto_23: Publicly available catalog records filtering | Data Consumer | 1 |
| sto_27: List of available resources for a public dataset | Data Consumer | 2 |
| sto_28: Download latest version of public dataset file dump | Data Consumer | 3 |
| sto_25: Social media sharing | Data Consumer | 6 |
| sto_59: Retrieving public data from dataset via REST API | Data Consumer | 1 |
| sto_17: List of available resources for a dataset | Data Publisher | 2 |
| sto_18: Download latest version of file dump | Data Publisher | 3 |
| sto_2: New catalog record in catalog | Data Publisher | 2 |
| sto_8: Modification of dataset metadata | Data Publisher | 2 |
| sto_11: Make dataset publicly available | Data Publisher | 1 |
| sto_12: Make dataset publicly invisible | Data Publisher | 1 |
| sto_47: Associate an existing non-associated pipeline | Data Publisher | 3 |
| sto_6: Modification of an associated pipeline manually | Data Publisher | 1 |
| sto_3: New associated pipeline created manually | Data Publisher | 3 |
| sto_53: Last publication status of a pipeline | Data Publisher | 1 |
| sto_10: Dataset resource(s) created/updated on demand | Data Publisher | 2 |
| sto_24: Pipeline scheduling information | Data Publisher | 2 |
| sto_5: New associated pipeline as modified copy of an existing pipeline | Data Publisher | 3 |
| sto_45: Delete external catalog for update | Data Publisher | 1 |
| sto_50: Dataset resource(s) created/updated automatically when data processing done | Data Publisher | 4 |
| sto_72: Instant manual synchronization with public catalog | Data Publisher | 1 |
| sto_13: Catalog records browser | Data Publisher | 1 |
| sto_14: Catalog records search using keywords | Data Publisher | 1 |
| sto_16: Catalog records filtering | Data Publisher | 1 |
| sto_43: Add external catalog for update | Data Publisher | 3 |
| sto_44: Modify external catalog for update | Data Publisher | 3 |
| sto_78: Disassociate a pipeline | Data Publisher | 1 |
| sto_1: Login into ODN | Data Publisher, ODN Administrator | 4 |
| sto_33: Modify user's own user profile | Data Publisher | 2 |
| sto_42: Pipeline debugging | Data Publisher | 2 |
| sto_60: Retrieving data via REST API | Data Publisher | 1 |
| sto_51: Logout from ODN | Data Publisher, ODN Administrator | 1 |
| sto_71: Instant manual synchronization with external catalog | Data Publisher | 1 |
| sto_86: Default set of DPU templates | Data Publisher | 1 |
| sto_34: Delete user | ODN Administrator | 1 |
| sto_52: ODN installation | ODN Administrator | 1 |
| sto_58: ODN catalog theme installation | ODN Administrator | 1 |
| sto_69: Management of default public catalog for publicly available datasets | ODN Administrator | 1 |
| sto_31: New user and its role | ODN Administrator | 2 |
| sto_32: Modify user's profile and its role | ODN Administrator | 2 |
| sto_70: GUI language management | ODN Administrator | 4 |

Table 3 summarizes the test coverage of the DPU requirements specified in the deliverable D3.2. Requirements for the DPUs that are described in the deliverable D3.2 but have not been implemented yet are currently not covered by any test case.

E-SK_DATANEST_ORGS DPU (see the deliverable D3.2) is no longer required because the data it was supposed to be extracting are already available in CSV. T-PDF and T-PDF2CSV DPUs will be replaced with a set of more specific DPUs. These DPUs are marked as obsolete and the related test cases are no longer in the test plan.

Test cases for DPUs Q-ACC_1, Q-ACC_6, Q-ACC_4, Q-C_2, Q-C_5 and Q-CU_1 have already been implemented but they were not included in the test plan for the ODN release v1.0.2. They will be included in test plans for the forthcoming releases of the ODN platform.

Table 3: Test coverage of the DPU requirements

DPU requirement No. of test cases

T-SPARQL-U : SPARQL transformer (Update) 5

T-SPARQL-C : SPARQL transformer (Construct) 5

T-TABULAR : Tabular transformer 5

T-SPARQL-SELECT : SPARQL Select transformer 5

E-SPARQL : Universal SPARQL extractor 5

E-DWNLD : Generic downloader 4

T-XSLT : XSLT transformer 3

T-RDF2FILE : RDF to File transformer 3

T-LINKER : Uses Silk to link RDF data to other RDF datasets 3

T-MDT : Metadata transformer 2

L-RFS : Remote file system loader 2

T-FILE2RDF : File to RDF transformer 2

T-UNZIP : Unzipper 2

T-ZIP : Zipper 2

E-RUIAN : RUIAN extractor 2

E-MFCR_1:001 : ARES extractor 2

T-7UNZIP : Unzipper of 7zip files 2

E-MFCR_3:001 : ARES extractor RZP 2

L-SPARQL : SPARQL loader 1

L-FS : File system loader 1

T-DCV : Data cube transformer 1

T-ENTITY-ANN : Entity annotation 1

T-CZECHBEIC : Czech BE IC transformer 1

E-MHCR-PRICES : Czech Medicines Prices Extractor 1

T-PICKFILE : File Picker 1

T-HTMLCSS : Extracts data from HTML pages using CSS selectors 1

T-ADDRESSCZ : Linker of Czech Schema.org addresses to RUIAN 1

T-SPSS : SPSS Transformer 1

E-MICR_3:001 : Extractor of functions performed by public bodies 1

T-CZLAW : Linker to CZLAW dataset 1

E-CZ_MZP_02:001 : Extractor from Integrated registry of pollution 1

E-CZ_MZP_04:001 : Extractor of levels of pollution from HTML 1

E-CZ_MZP_01:001 : Extractor from Integrated registry of pollution 1

T-PROVOZCZ : Linker of Czech places of business to ARES 1

T-CHEMICALNAMECZ : Linker of chemical compounds 1

E-SK_UIPS_SCHOOLS : SK_UIPS_SCHOOLS Extractor 1

E-VAVAI_1:001 : VAVAI-CEP Extractor 1

E-SUKL-MPP : MPP data extractor from SUKL 1

E-SUKL-CL : Codelists data extractor from SUKL 1

E-MICR_1:001 : Extractor of XML files from Gov.cz 1

E-VAVAI_2:001 : VAVAI-CEP Extractor 1

E-VAVAI_3:001 : VAVAI-CEP Extractor 1

E-VAVAI_4:001 : VAVAI-CEP Extractor 1

E-VAVAI_5:001 : VAVAI-CEP Extractor 1

E-VAVAI_6:001 : VAVAI-CEP Extractor 1

E-VAVAI_7:001 : VAVAI-CEP Extractor 1

E-FS : File system extractor 1

T-BENAMECZ : Linker of Czech Business Entities to ARES 1

T-TEXT-SPC : SPC Extractor from EMA Product Information 1

T-TEXT-PIL : PIL Extractor from EMA Product Information 1

E-SK_53 : SK_53 Extractor 1

E-SK_VLADA_CRP : SK_VLADA_CRP Extractor 1

E-SK_VLADA_CRZ : SK_VLADA_CRZ Extractor 1

E-SK_MARTIN_CONTRACTS : SK_MARTIN_CONTRACTS Extractor 1

E-SK_MARTIN_TAXDEPT : SK_MARTIN_TAXDEPT Extractor 1

E-SK_ORSR_ORGS : SK_ORSR_ORGS Extractor 1

E-SK_MARTIN_BUDGET : SK_MARTIN_BUDGET Extractor 1

E-SK_SOI_RESULTS : SK_SOI_RESULTS Extractor 1

E-SK_DOMAIN_ORGS : Extracts information about domain owners 1

E-MSSI-ES-PRICES : Spanish Medicines Prices Extractor 0

E-SUKL-ATC : Extractor of codelists for prices published by Czech SIDR 0

Q-MC : Metadata Completeness 0

Q-IN : Interpretability 0

Q-MACC : Metadata Accuracy 0

Q-AV : Availability 0

Q-LC : Licensing 0

Q-C_1 : Property Completeness for Null Values 0

Q-C_2 : Dataset Completeness for Null Values 0

Q-C_3 : Schema completeness 0

Q-C_4 : Property completeness 0

Q-CU_1 : Document Currency 0

Q-CU_2 : Triple Currency 0

Q-T : Timeliness 0

Q-ACC_1 : Accuracy of literals 0

Q-ACC_2 : Accuracy of the RDF model 0

Q-ACC_3 : Accuracy of literals based on reference data 0

Q-ACC_4 : Accuracy of range values 0

Q-ACC_5 : Accuracy with Functional Dependencies 0

Q-ACC_6 : Syntactic Accuracy based on Pattern Values 0

Q-ACC_7 : Accuracy of date formats 0

Q-CN_1 : Consistency of values through business rules 0

Q-CN_2 : Consistency of values through SPARQL 0

Q-C_5 : Completeness of Understandability data of a resource 0

Q-C_6 : Completeness of temporal metadata of a resource 0

T-Pattern : Pattern Cleaning 0

T-EntityCoreference : Entity Coreference Cleaning 0

T-PDF2CSV : PDF Transformer Obsolete

T-PDF : PDF Transformer Obsolete

E-SK_DATANEST_ORGS : SK_DATANEST_ORGS Extractor Obsolete

Total (without obsolete test cases) 97

Results of the testing are discussed in the following section.

5.4 Evaluation of the ODN platform on the basis of test results

In this section we summarize the results of the testing and evaluate the ODN platform on the basis of these results. Table 4 provides an overview of the passed and failed test cases. Complete test results are provided in Annex 3.

Table 4: Test Results per test suite

Test suite | Number of test cases | Passed | Passed [%] | Failed | Failed [%]
Catalog | 25 | 18 | 72,0% | 7 | 28,0%
Pipeline | 16 | 15 | 93,8% | 1 | 6,3%
User Management | 12 | 5 | 41,7% | 7 | 58,3%
Data Retrieval | 8 | 7 | 87,5% | 1 | 12,5%
Dataset Management | 10 | 9 | 90,0% | 1 | 10,0%
Metadata Management | 2 | 2 | 100,0% | 0 | 0,0%
Installation and GUI Management | 7 | 6 | 85,7% | 1 | 14,3%
ODN/UV DPUs | 97 | 57 | 58,76% | 40 | 41,24%
Non-functional | 27 | 26 | 96,3% | 1 | 3,7%
Total | 204 | 145 | 71,08% | 59 | 28,92%

In total, 145 out of 204 test cases (71,08%) passed. User Management is currently the most problematic test suite: 7 of its 12 test cases (58,3%) failed. The failed test cases in this test suite indicate that issues related to user log-out and modification of the user profile need to be resolved.
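The totals and percentages in Table 4 can be recomputed from the per-suite counts. The following is a minimal sketch in Python; the passed and failed counts are copied from Table 4, while the names `results`, `pass_rate` and `worst_suite` are illustrative and not part of ODN or TestLink.

```python
# Passed / failed counts per test suite, copied from Table 4.
results = {
    "Catalog": (18, 7),
    "Pipeline": (15, 1),
    "User Management": (5, 7),
    "Data Retrieval": (7, 1),
    "Dataset Management": (9, 1),
    "Metadata Management": (2, 0),
    "Installation and GUI Management": (6, 1),
    "ODN/UV DPUs": (57, 40),
    "Non-functional": (26, 1),
}


def pass_rate(passed, failed):
    """Return the share of passed test cases in percent."""
    return 100.0 * passed / (passed + failed)


total_passed = sum(passed for passed, _ in results.values())
total_failed = sum(failed for _, failed in results.values())

for suite, (passed, failed) in results.items():
    print(f"{suite}: {pass_rate(passed, failed):.1f}% passed")

print(f"Total: {total_passed} of {total_passed + total_failed} passed "
      f"({pass_rate(total_passed, total_failed):.2f}%)")

# Suite with the lowest share of passed test cases.
worst_suite = min(results, key=lambda suite: pass_rate(*results[suite]))
print("Most problematic suite:", worst_suite)
```

Such a check only confirms the arithmetic of the table; as noted below, the pass rate should not be read as a measure of completion of the platform.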

It is necessary to note that the percentage of passed or failed test cases cannot be interpreted as the percentage of completion of the ODN platform. Even features that are mostly complete might be affected by a bug or by some known issues. Features and user stories planned to be implemented are not always independent. This means that one issue might affect more than one user story, and similarly, resolving such an issue might unblock more than one user story.

TestLink is used not only for the purposes of the WP6 deliverables but also as a tool for long-term management of the testing of an enterprise-ready product. The test cases are therefore designed to enable early identification of bugs, so that they can be resolved before the product is rolled out to pilots and customers (e.g. we are currently finishing the implementation of ODN for the Slovak nationwide open data project “eDemokracia”).

Of the test cases aimed at the ODN DPUs, more than half passed (57 out of 97). Even though there has been progress in the implementation of DPUs, the most common reason for a test case failure is that the corresponding DPU has not been implemented yet.

Resolution of the identified issues can be tracked in the Open Data Node issue tracker [5].

Despite the known issues ODN v1.0.2 provides the following improvements and changes compared to the release v0.10.1 reported in D6.1:

• Implementation of REST API.
   o From version 1.0 a new Datastore extension was added. More information about the extension can be found here: http://docs.ckan.org/en/ckan-2.3/maintaining/datastore.html
• Fine tuning and testing of the synchronization between the internal and the public catalogue.
   o improved security - synchronization runs over the HTTPS protocol
   o enhanced synchronization of datastore
   o improved stability, bug fixing
   o support of organization entities
• Quality DPUs.
   o Quality DPUs have been analysed and designed in D3.2. Currently (end of June 2015), 11 data quality techniques are implemented, and 6 are going to be implemented by the end of July (5 for quality assessment and 1 for quality improvement). 5 techniques will not be implemented since there is a lack of metadata in the datasets, insufficient support by tools and a lack of algorithms for RDF data. In addition, due to the high effort needed for their implementation and their low usage in the selected datasets (lower than 3 datasets for each DPU), we decided not to include them. The 11 data quality techniques are packed in 8 data quality DPUs: Q-ACC_1 (including Q-ACC_1, Q-ACC_2, Q-ACC_7), Q-ACC_6, Q-ACC_4, Q-C_2 (including Q-C_1, Q-C_2), Q-C_5, Q-CU_1, Q-MC and Q-IN (for more details on the techniques see D3.2). They are fully working and will be included as stable DPUs in the next release of ODN.
• Integration with dataset visualizations.
   o It is now possible to create visualizations of a dataset using LDVMi [6] and store them as a CKAN resource. A live dataset visualization preview is then available directly in CKAN using the webpage_view CKAN view plugin [7].
• Advanced dataset management.
   o Currently we do not support termination of dataset maintenance and termination of dataset publication as was intended. Based on the feedback from pilot applications we focused on different features instead (localization, permissions and roles in UnifiedViews, etc.).
• Advanced DPU features.
   o DPUs that process RDF now support "per-graph" execution, which enables processing of large RDF data that was created e.g. from multiple files without merging it into a single graph where appropriate.
   o DPUs support new helpers providing further features to DPU developers, such as:
      ▪ SimpleRDF and SimpleFiles: SimpleRDF and SimpleFiles are wrappers on top of the RDF data unit and the files data unit, respectively. They provide plugin developers with easy-to-use methods covering basic functionality when working with RDF data or files; for example, there are methods that allow DPU developers to query RDF data within an RDF data unit or to add new RDF data to an RDF data unit using a single line of code.
      ▪ Dynamic configuration of plugins using RDF configuration: Dynamic configuration allows a plugin to be configured dynamically over one of its inputs (the configuration input data unit). If the plugin supports dynamic configuration, it may receive its configuration over its configuration input data unit; such configuration is then automatically deserialized from the RDF data format and used instead of the configuration defined via the configuration dialog of the plugin.
      ▪ Fault tolerance: Operations on top of RDF data can be time intensive (e.g., fetching tens of millions of RDF triples over the SPARQL protocol or executing a set of SPARQL Update queries on tens of millions of RDF triples). Such operations typically consist of a set of calls against the target RDF store. Any such call can throw an exception at any time and, as our experiments revealed, such an exception is often caused by the target RDF store temporarily not responding to a certain operation. It therefore makes sense to retry the particular call rather than the whole operation or pipeline execution, which could mean that hours of work are lost. As a result, developers may decide to use the Fault tolerant extension to ensure that certain calls, which may fail, are retried in case of certain types of problems.
   o DPUs are localizable.
   o There is a plugin template which DPU developers may use when they start developing DPUs.
• Advanced ODN features.
   o Single Sign On (SSO) – ODN supports Single Sign On: a user has to log in only once and is then logged into all ODN components (UnifiedViews, catalogs, etc.).
   o User Management – permissions and roles were refactored in UnifiedViews.
   o UnifiedViews is localizable.

5 https://github.com/OpenDataNode/open-data-node/issues
6 http://ldvm.net/
7 http://docs.ckan.org/en/latest/maintaining/data-viewer.html
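The fault tolerance idea described in the list above, retrying a single failing call instead of the whole operation or pipeline, can be illustrated with a small sketch. It is written in Python for brevity and is not the UnifiedViews Fault tolerant extension itself; `TransientStoreError`, `call_with_retry` and `flaky_fetch` are hypothetical names introduced only for this illustration.

```python
import time


class TransientStoreError(Exception):
    """Hypothetical error: the RDF store temporarily did not respond."""


def call_with_retry(call, max_attempts=3, delay_seconds=0.0,
                    retry_on=(TransientStoreError,)):
    """Run one call and retry it only when a retryable error occurs."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts:
                raise  # give up after the last attempt
            time.sleep(delay_seconds)


# Simulated store call that fails twice before it succeeds.
attempts = {"count": 0}


def flaky_fetch():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientStoreError("store temporarily unavailable")
    return ["triple-1", "triple-2"]


triples = call_with_retry(flaky_fetch, max_attempts=5)
print(triples, "after", attempts["count"], "attempts")
```

Only errors listed in `retry_on` are retried; any other exception propagates immediately, which corresponds to retrying only "in case of certain types of problems".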

Some features still have to be implemented. The detailed list can be seen in Annex 2, which lists the user stories that have not been implemented yet (column B – implemented). Annex 3 provides the note “not implemented yet” for each failed test case whose functionality has not been implemented yet (column D – notes).

DCAT-AP is not supported in this release. The reason is that DCAT is still evolving and that the mapping of DCAT to CKAN (distributions) is not straightforward. A custom extension of ODN that supports DCAT-AP was developed in order to allow harvesting and loading metadata into the Czech national Open Data catalogue.

Another initially planned feature, the dataset publication wizards, has not been implemented. After discussion with the pilot partners and after re-examining the task, we decided not to prepare the wizards but rather to simplify the creation of pipelines and to improve the DPU configuration dialogs, tooltips and other contextual help for pipeline developers. There were also other features that were more important for the pilot participants, such as user management and permissions (eDem pilot).

6 Review of the COMSODE methodologies

In the following sections the COMSODE Methodologies Review Strategy is described together with the results of the review.

6.1 COMSODE Methodologies Review Strategy

Evaluation of the methodologies developed during the COMSODE project is based on the review performed by both the COMSODE team members (internal review) and the COMSODE User Board members (external review). In order to ensure that the reviewer is independent even in case of the internal reviewers only a team member that was not involved in development of a particular deliverable could become its reviewer.

Each of the reviewers was asked to perform the following steps:

1) select at least one methodology to review,
2) read the methodology and comment on the text,
3) fill in the evaluation form.

An online evaluation form was developed in order to ease collecting assessments from the individual reviews. The reviewers were asked the following questions when assessing the methodologies:

1) Please rate the overall comprehensibility of the deliverable
2) Please rate the following attributes of the deliverable
   a. Structure
   b. Length
   c. Level of detail
   d. Terminology
   e. Language
   f. Overall presentation
3) Please rate the level to which the following issues are addressed by the deliverable
   a. Policy and management issues
   b. Legal issues
   c. Technical issues
   d. Economic issues
   e. Open Data utilisation
4) Are there any topics or issues that are missing in the deliverable or that need to be addressed in more detail?
5) Would you like to add any comments to the deliverable?

The evaluation form described above served for collecting the overall assessment of the methodologies. Deliverables were also made accessible online and the reviewers were asked to add comments directly to the documents. This approach allowed the reviewers to add detailed comments if needed.

The following methodologies were put under review:

D5.1 Methodology for publishing datasets as open data

D5.4 Methodologies for the integration of datasets, examples of publication with the ODN, integration techniques and tools

D5.5 Contribution to international standards and best practices

All the deliverables that represent the final versions of the COMSODE methodologies were reviewed. Because D5.4 and D5.5 were still in development, drafts of these deliverables were reviewed. This allowed the relevant comments to be reflected in the deliverables and thus to improve their quality.

Table 5 summarizes the number of internal and external reviews per methodology. Two reviewers provided anonymous answers.

Table 5: Number of reviews per methodology

Methodology | No. of internal reviews | No. of external reviews | Anonymous reviews | Total
D5.1 Methodology for publishing datasets as open data | 2 | 2 | 1 | 5
D5.4 Methodologies for the integration of datasets, examples of publication with the ODN, integration techniques and tools | 2 | 2 | 0 | 4
D5.5 Contribution to international standards and best practices | 2 | 2 | 1 | 5

Every methodology was reviewed by two internal reviewers and by two external reviewers. In case of the anonymous reviewers it was not possible to decide whether they were internal or external.

Table 6 summarizes the number of reviewers and reviews per country (including anonymous reviewers). It is obvious that some of the reviewers reviewed more than one methodology. Half of the reviews were provided by Slovak reviewers.

Table 6: Number of reviews and reviewers per country

Country | No. of reviews | No. of reviewers
Czech Republic | 4 | 3
Netherlands | 2 | 2
Slovakia | 7 | 2
Spain | 1 | 1
Total | 14 | 10

6.2 Review results

Results of the review of the methodologies developed in the COMSODE project are presented in the following sections. Reviews were collected between 28th May 2015 and 21st June 2015.

6.2.1 D5.1 Methodology for publishing datasets as open data

Needs indicated by the reviewers of the deliverable D5.1 are summarized in table 7.

Table 7: Needs for D5.1 per reviewer group

Needs | External | Internal | Anonymous
Policy and management issues (e.g. getting the top management support, processes and roles involved in the Open Data publication) | 2 | 1 | 1
Legal issues (e.g. licencing, compliance with the legislation, personal data protection) | 2 | 1 | 1
Technical issues (e.g. data formats, data quality, interoperability, infrastructure and tools) | 2 | 2 | 1
Economic issues (e.g. charging for data) | 0 | 0 | 0
Open Data utilisation (e.g. business models, supporting re-use, value creation) | 1 | 0 | 1

Reviewers mostly expected the deliverable to provide guidance or practices related to technical, legal, and policy and management issues. As table 8 shows, most of the reviewers found the technical issues to be well addressed. Legal issues and policy and management issues were in most cases rated as addressed enough to provide basics. Therefore future development of the methodology should be aimed at improving guidance in these domains. Even though Open Data utilisation did not rank among the issues most required to be addressed in the methodology, the reviewers mostly found this topic to be excellently addressed in the deliverable D5.1.

Table 8: Assessment of issues addressed in D5.1

Issues | Not relevant | Insufficiently addressed | Addressed to a limited extent | Addressed enough to provide basics | Well addressed | Excellently addressed
Policy and management issues | 0 | 0 | 0 | 3 | 1 | 1
Legal issues | 0 | 0 | 0 | 4 | 0 | 1
Technical issues | 0 | 0 | 0 | 1 | 3 | 1
Economic issues | 0 | 0 | 1 | 3 | 0 | 1
Open Data utilisation | 0 | 0 | 0 | 1 | 1 | 3

Assessment of the attributes of the deliverable D5.1 is provided in table 9.

Table 9: Assessment of the attributes of the deliverable D5.1

Attribute | Very poor | Poor | Average | Good | Excellent
Structure | 0 | 0 | 0 | 2 | 3
Length | 0 | 0 | 1 | 2 | 2
Level of detail | 0 | 0 | 0 | 3 | 2
Terminology | 0 | 0 | 0 | 3 | 2
Language | 0 | 0 | 0 | 4 | 1
Overall presentation | 0 | 0 | 0 | 4 | 1

Table 9 shows that the reviews of D5.1 were positive. Only one review ranked the length of the deliverable as average. All the evaluated attributes were ranked mostly as good or even as excellent.
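The summary of Table 9 can be checked with a short tally. The sketch below is in Python; the counts are copied from Table 9, and the names `table9`, `positive_share` and `below_good` are illustrative only.

```python
# Rating counts per attribute, copied from Table 9.
# Column order: Very poor, Poor, Average, Good, Excellent.
table9 = {
    "Structure": [0, 0, 0, 2, 3],
    "Length": [0, 0, 1, 2, 2],
    "Level of detail": [0, 0, 0, 3, 2],
    "Terminology": [0, 0, 0, 3, 2],
    "Language": [0, 0, 0, 4, 1],
    "Overall presentation": [0, 0, 0, 4, 1],
}


def positive_share(counts):
    """Share of reviews that rated the attribute Good or Excellent."""
    return (counts[3] + counts[4]) / sum(counts)


# Attributes that received at least one rating below Good.
below_good = {
    attribute: sum(counts[:3])
    for attribute, counts in table9.items()
    if sum(counts[:3]) > 0
}

for attribute, counts in table9.items():
    print(f"{attribute}: {positive_share(counts):.0%} Good or Excellent")
print("Ratings below Good:", below_good)
```

With these counts, Length is the only attribute with a rating below Good, which matches the single "average" review mentioned above.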

6.2.2 D5.4 Methodologies for the integration of datasets, examples of publication with the ODN, integration techniques and tools

Needs indicated by the reviewers of the deliverable D5.4 are summarized in table 10.

Table 10: Needs for D5.4 per reviewer group

Needs | External | Internal | Anonymous
Policy and management issues (e.g. getting the top management support, processes and roles involved in the Open Data publication) | 0 | 2 | 0
Legal issues (e.g. licencing, compliance with the legislation, personal data protection) | 1 | 2 | 0
Technical issues (e.g. data formats, data quality, interoperability, infrastructure and tools) | 2 | 2 | 0
Economic issues (e.g. charging for data) | 1 | 2 | 0
Open Data utilisation (e.g. business models, supporting re-use, value creation) | 2 | 2 | 0

Internal reviewers required all the analysed categories of issues to be covered in the methodology equally. External reviewers mostly required guidance and practices aimed at the technical issues and the Open Data utilisation. Addressing of the policy and management issues was not expected by the external reviewers and, as the results in table 11 show, some of the reviewers do not consider such issues to be a relevant topic of the deliverable D5.4. This is also the case for the economic issues. Technical issues were ranked as addressed enough to provide basics in half of the reviews, whereas the other half ranked them as well addressed. Open Data utilisation was ranked as well addressed in two of the reviews; however, in one of the reviews it was marked as addressed only to a limited extent.

Table 11: Assessment of issues addressed in D5.4

Issues | Not relevant | Insufficiently addressed | Addressed to a limited extent | Addressed enough to provide basics | Well addressed | Excellently addressed
Policy and management issues | 2 | 0 | 0 | 2 | 0 | 0
Legal issues | 1 | 0 | 1 | 1 | 1 | 0
Technical issues | 0 | 0 | 0 | 2 | 2 | 0
Economic issues | 3 | 0 | 0 | 1 | 0 | 0
Open Data utilisation | 1 | 0 | 1 | 0 | 2 | 0

Assessment of the attributes of the deliverable D5.4 is provided in table 12.

Table 12: Assessment of the attributes of the deliverable D5.4

Attribute | Very poor | Poor | Average | Good | Excellent
Structure | 0 | 0 | 2 | 2 | 0
Length | 0 | 1 | 0 | 3 | 0
Level of detail | 0 | 2 | 2 | 0 | 0
Terminology | 0 | 2 | 0 | 2 | 0
Language | 0 | 0 | 1 | 3 | 0
Overall presentation | 0 | 0 | 1 | 3 | 0

A draft of the deliverable D5.4 was reviewed which might be a reason why the level of detail was considered insufficient in some of the reviews. On the other hand the reviews show that a suitable structure of the deliverable was proposed and that the deliverable is of appropriate length. Language and the overall presentation were mostly ranked as good. Some of the reviewers pointed out that the terminology should be unified across the deliverable.

6.2.3 D5.5 Contribution to international standards and best practices

Needs indicated by the reviewers of the deliverable D5.5 are summarized in table 13.

Table 13: Needs for D5.5 per reviewer group

Needs | External | Internal | Anonymous
Policy and management issues (e.g. getting the top management support, processes and roles involved in the Open Data publication) | 1 | 2 | 0
Legal issues (e.g. licencing, compliance with the legislation, personal data protection) | 1 | 2 | 0
Technical issues (e.g. data formats, data quality, interoperability, infrastructure and tools) | 0 | 2 | 0
Economic issues (e.g. charging for data) | 1 | 2 | 1
Open Data utilisation (e.g. business models, supporting re-use, value creation) | 1 | 2 | 1

External reviewers required the deliverable D5.5 to provide guidance and practice aimed at policy and management issues, legal issues, economic issues as well as the Open Data utilisation. Preferences of the internal reviewers were distributed equally across all the categories of issues. Anonymous reviewers preferred the economic issues and the Open Data utilisation.

Assessment of the level to which various issues are addressed in the deliverable D5.5 is provided in table 14. In most of the reviews the issues were ranked as addressed enough to provide basics. In case of the policy and management issues one review ranked this topic to be well addressed, however two of the reviews assessed this topic to be only addressed to a limited extent. Similar results apply to the economic issues. Improvements to the relevant sections of the deliverable D5.5 should be considered.

Table 14: Assessment of issues addressed in D5.5

Issues | Not relevant | Insufficiently addressed | Addressed to a limited extent | Addressed enough to provide basics | Well addressed | Excellently addressed
Policy and management issues | 1 | 0 | 2 | 1 | 1 | 0
Legal issues | 0 | 0 | 1 | 2 | 1 | 1
Technical issues | 1 | 0 | 1 | 2 | 1 | 0
Economic issues | 1 | 0 | 2 | 2 | 0 | 0
Open Data utilisation | 1 | 0 | 0 | 3 | 0 | 1

Assessment of the attributes of the deliverable D5.5 is provided in table 15.

Table 15: Assessment of the attributes of the deliverable D5.5

Attribute | Very poor | Poor | Average | Good | Excellent
Structure | 0 | 0 | 0 | 4 | 1
Length | 0 | 0 | 1 | 3 | 1
Level of detail | 0 | 1 | 1 | 1 | 2
Terminology | 0 | 0 | 1 | 1 | 2
Language | 0 | 1 | 0 | 2 | 2
Overall presentation | 0 | 1 | 0 | 2 | 2

A draft of the deliverable D5.5 was reviewed. Despite this, the assessments of the various attributes of the deliverable were mostly positive. Authors of the deliverable should pay attention to the reviews that ranked some of the attributes as poor in order to improve the quality of the final deliverable.

7 Conclusions

Results of the testing of the Open Data Node release v1.0.2 are reported in this deliverable. The testing followed the ODN Testing Methodology described in the deliverable D6.1, which defines the testing workflow as well as the roles of the testing team members. The testing process is supported by the TestLink software.

In total 145 out of 204 test cases passed. Despite the fact that the testing revealed some issues in the solution, Open Data Node release v1.0.2 brings many improvements when compared to the version v0.10.1 discussed in D6.1, for example implementation of REST API, fine tuning and testing synchronization between internal and public catalogue, improved dialogs and tooltips, integration with dataset visualizations or advanced DPU features such as "per-graph" execution or new DPU helpers. Further improvements are planned for the upcoming ODN release.

Results of the review of the methodologies developed during the COMSODE project are also reported in this deliverable. The following methodologies were put under review:

D5.1 Methodology for publishing datasets as open data

D5.4 Methodologies for the integration of datasets, examples of publication with the ODN, integration techniques and tools

D5.5 Contribution to international standards and best practices

Reviews of D5.1 were positive and the reviewers also provided valuable comments and suggestions for improving the text of the methodology. Drafts of the deliverables D5.4 and D5.5 were reviewed, so the comments and suggestions of the reviewers might help the project members to improve the quality of these deliverables.

8 References

Štoffan, Oskár, Krchnavý, Sveťo, 2015. User stories. In: Open Data Node [online]. Last modified Jun 05, 2015 [cit. 2015-06-29]. Available from: https://utopia.sk/wiki/display/ODN/User+stories

TestLink Community, 2012. User Manual. TestLink version 1.9. In: TestLink [online]. © 2004 - 2012 [cit. 2015-02-10]. Available from: https://gitorious.org/testlink-ga/testlink-documentation/source/2b79c4f132e51048c92c44d9be8f47a5832858e4:end-users/1.9/testlink_user_manual.odt

Annex 1 – Test Cases

Description of the test cases that were used for the testing of the ODN v1.0.2 release is provided in Annex 1. Please see the attached file: Annex_1-D6.2-Test_Cases.doc.

Annex 2 – ODN User Stories Status

Implemented and not-implemented ODN User Stories are summarized in Annex 2. Please see the separate file: Annex-2_D6.2-ODN_User_Stories_Status.xlsx.

Annex 3 – Test Results

Test results per test case are summarized in the separate file: Annex-3_D6.2-Test_Results.xlsx.