cs229 poster...


Semantic Segmentation of 3D Particle Interaction Data Using Fully Convolutional DenseNet

Abstract

Liquid Argon Time Projection Chamber (LArTPC) is a novel detector technology that is gaining traction among particle physics experiments because of its high neutrino detection efficiency and low background. Analyzing LArTPC-derived images has been a challenge, however, because it has required a combination of many algorithm-based frameworks. Deep neural networks (DNNs), on the other hand, can offer an end-to-end solution. We train a type of DNN called Fully Convolutional DenseNet (FC-DenseNet) to perform pixel-wise classification (semantic segmentation) of showers and tracks on a 3D simulation dataset. Our work will inform the analysis of future LArTPC experiments in removing cosmic ray-induced particles and reconstructing neutrino interactions.

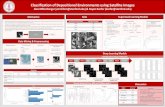

Model & Training Configurations

Objective: pixel-wise classification into 3 classes: background (zero pixels), track, and shower.

Use the Adam optimizer with batch size 1 (due to GPU memory limitations), and decrease the learning rate mid-training when the loss flattens.

Minimize the log-sum of the weighted softmax over all voxels, where the weights are defined as the inverse of the number of voxels in each instance of the class.
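The loss described above can be sketched in NumPy. This is a minimal illustration, not the poster's training code: it assumes the "log-sum of the weighted softmax" means a weighted sum of per-voxel negative log-softmax terms, and the function name, the flattened `(N, C)` layout, and the normalization by the total weight are all assumptions made for the example.

```python
import numpy as np

def weighted_softmax_loss(logits, labels, weights):
    """Weighted softmax cross-entropy over N voxels (illustrative sketch).

    logits:  (N, C) float array of raw class scores per voxel
    labels:  (N,) int array of true class indices (0=bg, 1=track, 2=shower)
    weights: (N,) float array of per-voxel weights
    """
    # Subtract the per-voxel maximum so the exponentials cannot overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Negative log-probability assigned to the true class of each voxel.
    nll = -log_probs[np.arange(labels.size), labels]
    # Assumption: normalize by the total weight so the scale does not
    # depend on the number of voxels.
    return float((weights * nll).sum() / weights.sum())
```

With uniform weights and identical scores for all three classes, the function returns ln 3, the loss of a network that guesses uniformly.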

Data

LArTPC consists of liquid argon in an electric field and a set of anode wire planes to collect drifting ionization electrons. We use 3D voxelized images generated from LArSoft, a simulation software for LArTPC-derived data.

• 128 x 128 x 128 voxels → 1 cm³ per voxel
• Each input data has truth energy deposition.
• Each input label has truth segmentation:
  - background,
  - electromagnetic shower, or
  - straight track

Results

Loss & training non-zero accuracy over 21299 iterations (~2 epochs over the training dataset of 10,000 images):

Performance Metrics

We evaluate our training against that of a baseline, another segmentation network called U-ResNet [4].

Sample Output

References

[1] Abratenko, P., et al. "Determination of muon momentum in the MicroBooNE LArTPC using an improved model of multiple Coulomb scattering." arXiv preprint arXiv:1703.06187 (2017).
[2] Jégou, Simon, et al. "The one hundred layers tiramisu: Fully convolutional DenseNets for semantic segmentation." arXiv preprint arXiv:1611.09326 (2016).
[3] Deep Learning Physics, larcv (2017). GitHub repository.
[4] Deep Learning Physics, u-resnet (2017). GitHub repository.

Ji Won Park, Zhilin Jiang, Aldo Gael Carranza
{jwp, zjiang23, aldogael}@stanford.edu
Stanford University

Acknowledgements

We thank Kazuhiro Terao, Associate Scientist at the Elementary Particle Physics Group at SLAC National Accelerator Laboratory, for making the GPU resources, the U-ResNet code repository, and the 3D particle dataset available for this project.

Network Architecture

Discussion & Ongoing Studies

Plot labels: Learning rate = 1e-3; Learning rate = 1e-4

* Solid lines represent moving averages

DB = dense block

5 DBs each in down- & up-sampling paths

* Trained with NVIDIA Titan X (Pascal) GPU, 12GB memory

A dense block [2]

Schematic of LArTPC detector [1]

Sample input data [3]. Each voxel has charge info.

Sample ground-truth [3]. Each voxel has value 0 (bg), 1 (track), or 2 (shower).

Proton 300 MeV

Electron 240 MeV

Proton 360 MeV

Pion 220 MeV

Yellow: track; Cyan: shower

Semantic segmentation = downsampling (which extracts features) + upsampling (which recovers the original image resolution for pixel-wise labeling).

FC-DenseNet = semantic segmentation architecture in which the convolutions are embedded within DenseNet modules. The growth parameter controls how the number of features evolves throughout the network.
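To make the role of the growth parameter concrete: in a dense block as described in [2], each layer receives the concatenation of the block input and all earlier layer outputs, and contributes a fixed number of new feature maps. The sketch below only does that bookkeeping. The function name and the example numbers (48 input features, growth 16, 4 layers) are illustrative assumptions, not the configuration used on this poster.

```python
def dense_block_channels(in_channels, growth_rate, n_layers):
    """Feature-count bookkeeping for one dense block (illustrative sketch).

    Returns a tuple of:
      - the number of input features seen by each layer in the block
      - the number of features after concatenating input and all outputs
    """
    # Layer i sees the block input plus the i earlier outputs,
    # each of which adds `growth_rate` feature maps.
    layer_inputs = [in_channels + i * growth_rate for i in range(n_layers)]
    out_channels = in_channels + n_layers * growth_rate
    return layer_inputs, out_channels

# Hypothetical example values, chosen only to show the linear growth.
layer_inputs, out_channels = dense_block_channels(48, 16, 4)
print(layer_inputs, out_channels)  # [48, 64, 80, 96] 112
```

The feature count grows linearly with depth inside a block, so lowering the growth parameter is the most direct way to reduce it.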

Sample truth label [3]; Sample output prediction [3] (Reasonable error)

FC-DenseNet vs. U-ResNet

BATCH SIZE MATTERS. In FC-DenseNet, the number of features grows quickly, which is difficult to accommodate for 3D images given the available GPU memory. Because batch size ~ size of ensemble, exposing the algorithm to only 1 image at a time made it vulnerable to statistical fluctuations. U-ResNet, with batch size = 8, learned faster and reached higher accuracy.
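A rough back-of-the-envelope sketch shows why feature growth and batch size compete for memory on 128 x 128 x 128 inputs. It counts only the storage for a single float32 activation tensor at full resolution; the function name and the feature count of 112 are hypothetical, and a real network holds many such tensors plus gradients, so actual usage is considerably higher.

```python
def feature_map_bytes(channels, side=128, batch_size=1, bytes_per_value=4):
    """Bytes for one activation tensor of shape
    (batch_size, channels, side, side, side), float32 by default."""
    return batch_size * channels * side ** 3 * bytes_per_value

GIB = 1024 ** 3

# Hypothetical feature count of 112 at full resolution.
for batch_size in (1, 8):
    size_gib = feature_map_bytes(112, batch_size=batch_size) / GIB
    print(f"batch size {batch_size}: {size_gib:.3f} GiB for one tensor")
```

Under these assumptions a single tensor takes 0.875 GiB at batch size 1 and 7 GiB at batch size 8, which is already a large share of a 12 GB GPU.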

Attempts to solve batch problem:
- Implemented mini-batching, now available on our GitHub repository.
- Reduced the growth parameter and increased batch size, with similar results.

Choosing the right evaluation metric

In a typical image, 99.99% of voxels are background. This means the network can reach very high accuracy simply by predicting background for every voxel! To account for this, we calculate accuracy only over the non-background voxels.
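The non-zero accuracy described above can be sketched as follows. The function name and the NaN return for an image with no non-background voxels are assumptions made for the example.

```python
import numpy as np

def nonzero_accuracy(pred, truth):
    """Fraction of non-background voxels labeled correctly.

    pred, truth: int arrays of the same shape with values
    0 (background), 1 (track), or 2 (shower).
    """
    mask = truth != 0  # keep only voxels whose true label is not background
    if not mask.any():
        return float("nan")  # assumption: undefined when nothing is non-zero
    return float((pred[mask] == truth[mask]).mean())

# Toy example: predicting background everywhere looks fine on plain
# accuracy but scores zero on the non-zero metric.
truth = np.array([0, 0, 0, 0, 0, 0, 1, 2])
all_background = np.zeros_like(truth)
print((all_background == truth).mean())         # 0.75
print(nonzero_accuracy(all_background, truth))  # 0.0
```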

Weighting the loss function

The final instance-based weights we used were chosen based on the following lessons from iterating on our network:
- Weigh nonzero pixels → network does poorly on classes with higher voxel counts
- Weigh rarer classes → network does poorly on lower-voxel instances of the same class

Weighting the loss by the instance instead of by the three classes or nonzero vs. zero helped the algorithm perform well on images with a very short track and a long track. [4]
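One way to compute instance-based weights is sketched below. It assumes an integer instance-ID array is available alongside the class labels, with all background voxels sharing a single ID. The poster does not describe this input format, so both the array and the function name are hypothetical.

```python
import numpy as np

def instance_weights(instance_ids):
    """Per-voxel weight = 1 / (number of voxels in that voxel's instance).

    instance_ids: int array of any shape; voxels that share an ID belong
    to the same instance (assumption: background is one shared ID).
    """
    flat = instance_ids.ravel()
    # `inverse` maps every voxel to the index of its ID in the unique list.
    _, inverse, counts = np.unique(flat, return_inverse=True, return_counts=True)
    return (1.0 / counts)[inverse].reshape(instance_ids.shape)

# Toy example: a 5-voxel background, a 1-voxel track, a 2-voxel track.
ids = np.array([0, 0, 0, 0, 0, 1, 2, 2])
print(instance_weights(ids))
```

Each instance's weights sum to 1, so a very short track contributes as much to the loss as a long one.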