Course on Data Mining (581550-4) - cs.helsinki.fi · 3 21.11.2001 Data mining: KDD Process 5...


21.11.2001 Data mining: KDD Process 1

Course on Data Mining (581550-4)

[Figure: course schedule. Topics: Intro/Association Rules, Episodes, Text Mining, Clustering, KDD Process, Applications/Summary, Home Exam. Dates: 24./26.10., 30.10., 7.11., 14.11., 21.11., 28.11.]

Today 22.11.2001

• Today's subject:
o KDD Process

• Next week's program:
o Lecture: Data mining applications, future, summary
o Exercise: KDD Process
o Seminar: KDD Process

KDD process

• Overview
• Preprocessing
• Post-processing
• Summary

Overview


What is KDD? A process!

• Aim: the selection and processing of data for

o the identification of novel, accurate, and useful patterns, and

o the modeling of real-world phenomena

• Data mining is a major component of the KDD process

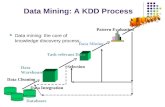

Typical KDD process

[Figure: typical KDD process. Stages: input data, preprocessing, data mining, postprocessing, results. Labels: operational database; selection; raw data; target dataset; cleaned, verified, focused; evaluation of interestingness; selected usable patterns; utilization.]

Phases of the KDD process (1)

• Learning the domain
• Creating a target data set
• Data cleaning, integration and transformation
• Data reduction and projection
• Choosing the DM task

(Diagram label: Pre-processing)

Phases of the KDD process (2)

• Choosing the DM algorithm(s)
• Data mining: search
• Pattern evaluation and interpretation
• Knowledge presentation
• Use of discovered knowledge

(Diagram label: Post-processing)

Preprocessing - overview

• Why data preprocessing?
• Data cleaning
• Data integration and transformation
• Data reduction

Preprocessing

Why data preprocessing?

• Aim: to select the data that is relevant to the mining task at hand

• Data in the real world is dirty
o incomplete: lacking attribute values, lacking certain attributes of interest, or containing only aggregate data

o noisy: containing errors or outliers

o inconsistent: containing discrepancies in codes or names

• No quality data, no quality mining results!

Measures of data quality

o accuracy
o completeness
o consistency
o timeliness
o believability
o value added
o interpretability
o accessibility

Preprocessing tasks (1)

• Data cleaning
o fill in missing values, smooth noisy data, identify or remove outliers, and resolve inconsistencies

• Data integration
o integration of multiple databases, files, etc.

• Data transformation
o normalization and aggregation

Preprocessing tasks (2)

• Data reduction (including discretization)

o obtains a representation that is reduced in volume but produces the same or similar analytical results

o data discretization is part of data reduction, and is particularly important for numerical data

Preprocessing tasks (3)

[Figure: the four preprocessing tasks: data cleaning, data integration, data transformation, data reduction.]

Data cleaning tasks

• Fill in missing values
• Identify outliers and smooth out noisy data
• Correct inconsistent data

Missing Data

• Data is not always available
• Missing data may be due to

o equipment malfunction

o data that was inconsistent with other recorded data and was therefore deleted

o data not entered due to misunderstanding

o data that was not considered important at the time of entry

o history or changes of the data not being recorded

• Missing data may need to be inferred

How to Handle Missing Data? (1)

• Ignore the tuple
o usually done when the class label is missing

o not effective when the percentage of missing values per attribute varies considerably

• Fill in the missing value manually
o tedious + infeasible?

• Use a global constant to fill in the missing value

o e.g., “unknown”, a new class?!

How to Handle Missing Data? (2)

• Use the attribute mean to fill in the missing value
• Use the attribute mean for all samples belonging to the same class to fill in the missing value
o smarter solution than using the “general” attribute mean

• Use the most probable value to fill in the missing value
o inference-based tools such as decision tree induction or a Bayesian formalism

o regression
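The class-conditional mean strategy listed above can be sketched in a few lines of Python. This is only an illustration: the function name `fill_with_class_mean`, the `income`/`risk` attributes, and the toy rows are assumptions, not part of the lecture material. Missing values are represented as `None`.

```python
from collections import defaultdict


def fill_with_class_mean(rows, attr, label):
    """Return copies of rows where a missing (None) value of `attr` is
    replaced by the mean of `attr` over rows with the same class label."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for row in rows:
        if row[attr] is not None:
            sums[row[label]] += row[attr]
            counts[row[label]] += 1

    filled = []
    for row in rows:
        new_row = dict(row)  # leave the input rows untouched
        cls = new_row[label]
        if new_row[attr] is None and counts[cls] > 0:
            new_row[attr] = sums[cls] / counts[cls]
        filled.append(new_row)
    return filled


# Hypothetical toy data: income is missing for two customers.
customers = [
    {"income": 30.0, "risk": "low"},
    {"income": 50.0, "risk": "low"},
    {"income": None, "risk": "low"},
    {"income": 90.0, "risk": "high"},
    {"income": None, "risk": "high"},
]
filled = fill_with_class_mean(customers, "income", "risk")
```

Using the mean per class rather than the overall mean keeps the filled values from blurring the differences between classes, which is why the slide calls it the smarter solution.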

Noisy Data

• Noise: random error or variance in a measured variable

• Incorrect attribute values may be due to
o faulty data collection instruments

o data entry problems

o data transmission problems

o technology limitation

o inconsistency in naming convention

How to Handle Noisy Data?

• Binning
o smooth a sorted data value by looking at the values around it

• Clustering
o detect and remove outliers

• Combined computer and human inspection
o detect suspicious values and have a human check them

• Regression
o smooth by fitting the data to regression functions

Binning methods (1)

• Equal-depth (frequency) partitioning
o sort the data and partition it into N bins (intervals), each containing approximately the same number of samples

o smooth by bin means, bin medians, bin boundaries, etc.

o good data scaling

o managing categorical attributes can be tricky

Binning methods (2)

• Equal-width (distance) partitioning
o divide the range into N intervals of equal size: uniform grid

o if A and B are the lowest and highest values of the attribute, the width of intervals will be: W = (B-A)/N.

o the most straightforward

o outliers may dominate presentation

o skewed data is not handled well
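A minimal Python sketch of equal-width partitioning with W = (B-A)/N. The function name `equal_width_bins` is an assumption for illustration, and the sketch assumes that the attribute has at least two distinct values (otherwise W would be 0). The sample values are the price data used in the equal-depth example of this lecture.

```python
def equal_width_bins(values, n_bins):
    """Partition values into n_bins intervals of equal width W = (B - A) / N."""
    low, high = min(values), max(values)  # A and B
    width = (high - low) / n_bins         # W
    bins = [[] for _ in range(n_bins)]
    for v in values:
        # The highest value B falls on the upper edge; keep it in the last bin.
        index = min(int((v - low) / width), n_bins - 1)
        bins[index].append(v)
    return width, bins


prices = [4, 8, 9, 15, 21, 21, 24, 25, 26, 28, 29, 34]
width, bins = equal_width_bins(prices, 3)
# width is 10.0; the bins hold 3, 3 and 6 values respectively
```

The uneven bin sizes on this small data set hint at the weakness noted above: with skewed data or outliers, most values can end up in a few bins.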

Equal-depth binning - Example

• Sorted data for price (in dollars):
o 4, 8, 9, 15, 21, 21, 24, 25, 26, 28, 29, 34

• Partition into (equal-depth) bins:
o Bin 1: 4, 8, 9, 15
o Bin 2: 21, 21, 24, 25
o Bin 3: 26, 28, 29, 34

• Smoothing by bin means:
o Bin 1: 9, 9, 9, 9
o Bin 2: 23, 23, 23, 23
o Bin 3: 29, 29, 29, 29

• …by bin boundaries:
o Bin 1: 4, 4, 4, 15
o Bin 2: 21, 21, 25, 25
o Bin 3: 26, 26, 26, 34
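The example above can be reproduced with a short Python sketch. The function names are illustrative assumptions. The sketch assumes that the number of values is divisible by the number of bins, rounds bin means to whole dollars as the slide does, and resolves ties in boundary smoothing towards the lower boundary.

```python
def equal_depth_bins(sorted_values, n_bins):
    """Split already sorted values into n_bins bins of equal size."""
    size = len(sorted_values) // n_bins  # assumes an even split
    return [sorted_values[i * size:(i + 1) * size] for i in range(n_bins)]


def smooth_by_means(bins):
    """Replace every value by the (rounded) mean of its bin."""
    return [[round(sum(b) / len(b))] * len(b) for b in bins]


def smooth_by_boundaries(bins):
    """Replace every value by the closer of its bin's two boundary values."""
    smoothed = []
    for b in bins:
        low, high = b[0], b[-1]
        smoothed.append([low if v - low <= high - v else high for v in b])
    return smoothed


prices = [4, 8, 9, 15, 21, 21, 24, 25, 26, 28, 29, 34]
bins = equal_depth_bins(prices, 3)
by_means = smooth_by_means(bins)
by_boundaries = smooth_by_boundaries(bins)
```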

Data Integration (1)

• Data integration
o combines data from multiple sources into a coherent store

• Schema integration
o integrate metadata from different sources

o entity identification problem: identify real world entities from multiple data sources, e.g., A.cust-id ≡ B.cust-#

Data Integration (2)

• Detecting and resolving data value conflicts
o for the same real world entity, attribute values from different sources are different

o possible reasons: different representations, different scales, e.g., metric vs. British units

Handling Redundant Data

• Redundant data occur often when multiple databases are integrated
o the same attribute may have different names in different databases

o one attribute may be a “derived” attribute in another table, e.g., annual revenue

• Redundant data may be detected by correlation analysis

• Careful integration of data from multiple sources may
o help to reduce/avoid redundancies and inconsistencies

o improve mining speed and quality
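As a rough illustration of detecting redundancy by correlation analysis, the following Python sketch computes the Pearson correlation coefficient between two numeric attributes. The attribute names and values are made up for the example; a coefficient close to +1 or -1 suggests that one attribute can be derived from the other.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical case: annual revenue is derived from average monthly revenue.
monthly_revenue = [10.0, 12.0, 9.0, 15.0]
annual_revenue = [12 * m for m in monthly_revenue]
r = pearson(monthly_revenue, annual_revenue)  # close to 1.0: redundant pair
```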


Data Transformation

• Smoothing: remove noise from data

• Aggregation: summarization, data cube construction

• Generalization: concept hierarchy climbing

• Normalization: scaled to fall within a small, specified range, e.g.,

o min-max normalization

o normalization by decimal scaling

• Attribute/feature construction
o new attributes constructed from the given ones
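The slide only names the two normalization methods. As a sketch under the usual textbook definitions (an assumption, since the lecture does not spell out the formulas): min-max normalization maps v to (v - min) / (max - min) * (new_max - new_min) + new_min, and decimal scaling divides by the smallest power of ten that brings every absolute value below 1. The function names are illustrative.

```python
def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values from [min, max] to [new_min, new_max].
    Assumes the values are not all equal."""
    old_min, old_max = min(values), max(values)
    span = old_max - old_min
    return [(v - old_min) / span * (new_max - new_min) + new_min
            for v in values]


def decimal_scaling_normalize(values):
    """Divide by 10**j, with j the smallest integer such that all |v'| < 1."""
    max_abs = max(abs(v) for v in values)
    j = 0
    while max_abs / 10 ** j >= 1:
        j += 1
    return [v / 10 ** j for v in values]


scaled = min_max_normalize([4, 19, 34])        # [0.0, 0.5, 1.0]
shifted = decimal_scaling_normalize([-986, 917])  # divides by 10**3
```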

Data Reduction

• Data reduction
o obtains a reduced representation of the data set that is much smaller in volume

o produces the same (or almost the same) analytical results as the original data

• Data reduction strategies
o dimensionality reduction

o numerosity reduction

o discretization and concept hierarchy generation

Dimensionality Reduction

• Feature selection (i.e., attribute subset selection):
o select a minimum set of features such that the probability distribution of different classes given the values for those features is as close as possible to the original distribution given the values of all features

o reduce the number of attributes appearing in the discovered patterns, making them easier to understand

• Heuristic methods (due to exponential # of choices):
o step-wise forward selection
o step-wise backward elimination
o combining forward selection and backward elimination
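Step-wise forward selection can be sketched as a greedy loop: start from the empty set and repeatedly add the single feature that improves a quality score the most, stopping when no feature helps. The scoring function is left open in the lecture, so `score` below is a caller-supplied, hypothetical function, and the toy `usefulness` values are invented purely to make the sketch runnable.

```python
def forward_selection(features, score, min_gain=1e-9):
    """Greedy step-wise forward selection.

    `score(subset)` must return a number; higher means a better subset.
    """
    selected = []
    best_score = score(selected)
    remaining = list(features)
    while remaining:
        candidate, candidate_score = None, best_score
        for f in remaining:
            s = score(selected + [f])
            if s > candidate_score + min_gain:
                candidate, candidate_score = f, s
        if candidate is None:  # no remaining feature improves the score
            break
        selected.append(candidate)
        remaining.remove(candidate)
        best_score = candidate_score
    return selected


# Hypothetical score: only A1, A4 and A6 carry information about the class.
usefulness = {"A1": 0.2, "A4": 0.5, "A6": 0.3}


def toy_score(subset):
    return sum(usefulness.get(f, 0.0) for f in subset)


chosen = forward_selection(["A1", "A2", "A3", "A4", "A5", "A6"], toy_score)
```

Backward elimination works the same way in reverse: start from all features and repeatedly drop the one whose removal hurts the score least.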

Dimensionality Reduction - Example

Initial attribute set: {A1, A2, A3, A4, A5, A6}

[Figure: decision tree with the tests A4?, A1? and A6? and the leaves Class 1, Class 2, Class 1, Class 2.]

> Reduced attribute set: {A1, A4, A6}

Numerosity Reduction

• Parametric methods
o assume the data fits some model, estimate model parameters, store only the parameters, and discard the data (except possible outliers)

o e.g., regression analysis, log-linear models

• Non-parametric methods
o do not assume models

o e.g., histograms, clustering, sampling
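To make the parametric idea concrete, here is a minimal sketch that fits a straight line by ordinary least squares and keeps only the two parameters instead of the data points. The function name `fit_line` and the sample points are assumptions for illustration; real data would rarely fit a line exactly.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)  # two numbers now stand in for five points


def reconstruct(x):
    """Approximate a discarded value from the stored parameters."""
    return slope * x + intercept
```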

Discretization

• Reduce the number of values for a given continuous attribute by dividing the range of the attribute into intervals

• Interval labels can then be used to replace actual data values

• Some classification algorithms only accept categorical attributes

Concept Hierarchies

• Reduce the data by collecting and replacing low level concepts by higher level concepts

• For example, replace numeric values for the attribute age by more general values young, middle-aged, or senior
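A tiny Python sketch of the age example. The cut-off ages (30 and 60) are assumptions chosen for illustration; the lecture does not specify where the boundaries lie.

```python
def generalize_age(age, young_below=30, senior_from=60):
    """Map a numeric age to a higher-level concept (assumed cut-offs)."""
    if age < young_below:
        return "young"
    if age < senior_from:
        return "middle-aged"
    return "senior"


ages = [23, 35, 47, 61, 29, 70]
labels = [generalize_age(a) for a in ages]
```

After the replacement the attribute has three distinct values instead of one per age, which is the data reduction the slide refers to.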

Discretization and concept hierarchy generation for numeric data

• Binning
• Histogram analysis
• Clustering analysis
• Entropy-based discretization
• Segmentation by natural partitioning

Concept hierarchy generation for categorical data

• Specification of a partial ordering of attributes explicitly at the schema level by users or experts

• Specification of a portion of a hierarchy by explicit data grouping

• Specification of a set of attributes, but not of their partial ordering

• Specification of only a partial set of attributes

Specification of a set of attributes

• Concept hierarchy can be automatically generated based on the number of distinct values per attribute in the given attribute set. The attribute with the most distinct values is placed at the lowest level of the hierarchy.

o country: 15 distinct values
o province_or_state: 65 distinct values
o city: 3567 distinct values
o street: 674 339 distinct values
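The heuristic above amounts to sorting the attributes by their number of distinct values. A minimal sketch, using the counts from the slide (the function name is an illustrative assumption):

```python
def hierarchy_from_distinct_counts(distinct_counts):
    """Order attributes from the top of the hierarchy (fewest distinct
    values) to the bottom (most distinct values)."""
    return sorted(distinct_counts, key=distinct_counts.get)


distinct_counts = {
    "street": 674339,
    "country": 15,
    "city": 3567,
    "province_or_state": 65,
}
levels = hierarchy_from_distinct_counts(distinct_counts)
# top to bottom: country, province_or_state, city, street
```

The heuristic is not foolproof: an attribute with few distinct values is not necessarily a more general concept, so the generated ordering may need manual correction.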

Post-processing - overview

• Why data post-processing?
• Interestingness
• Visualization
• Utilization

Post-processing

Why data post-processing? (1)

• Aim: to show the results, or more precisely the most interesting findings, of the data mining phase to a user/users in an understandable way

• A possible post-processing methodology:
o find all potentially interesting patterns according to some rather loose criteria

o provide flexible methods for iteratively and interactively creating different views of the discovered patterns

• Other more restrictive or focused methodologies are possible as well

Why data post-processing? (2)

• A post-processing methodology is useful if
o the desired focus is not known in advance (the search process cannot be optimized to look only for the interesting patterns)

o there is an algorithm that can produce all patterns from a class of potentially interesting patterns (the result is complete)

o the time requirement for discovering all potentially interesting patterns is not considerably longer than if the discovery were focused on a small subset of potentially interesting patterns

Are all the discovered patterns interesting?

• A data mining system/query may generate thousands of patterns, but are they all interesting?

Usually NOT!

• How could we then choose the interesting patterns?

=> Interestingness

Interestingness criteria (1)

• Some possible criteria for interestingness:

o evidence: statistical significance of finding?

o redundancy: similarity between findings?

o usefulness: meeting the user's needs/goals?

o novelty: already prior knowledge?

o simplicity: syntactical complexity?

o generality: how many examples covered?

Interestingness criteria (2)

• One division of interestingness criteria:
o objective measures that are based on statistics and structures of patterns, e.g.,
▪ J-measure: statistical significance
▪ certainty factor: support or frequency
▪ strength: confidence

o subjective measures that are based on user’s beliefs in the data, e.g.,
▪ unexpectedness: “is the found pattern surprising?”
▪ actionability: “can I do something with it?”

Criticism: Support & Confidence

• Example: (Aggarwal & Yu, PODS98)
o among 5000 students
▪ 3000 play basketball, 3750 eat cereal
▪ 2000 both play basketball and eat cereal

o the rule play basketball ⇒ eat cereal [40%, 66.7%] is misleading, because the overall percentage of students eating cereal is 75%, which is higher than 66.7%

o the rule play basketball ⇒ not eat cereal [20%, 33.3%] is far more accurate, although with lower support and confidence
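The bracketed figures in the example are [support, confidence]. A short Python sketch recomputes them from the counts given above and compares each confidence with the overall frequency of the rule's right-hand side; the function and variable names are illustrative.

```python
def support_and_confidence(n_total, n_antecedent, n_both):
    """Support = P(A and B), confidence = P(B | A), from raw counts."""
    return n_both / n_total, n_both / n_antecedent


n_students, n_basketball, n_cereal, n_both = 5000, 3000, 3750, 2000

# play basketball => eat cereal
sup_c, conf_c = support_and_confidence(n_students, n_basketball, n_both)
base_c = n_cereal / n_students  # 0.75: share of all students eating cereal

# play basketball => not eat cereal
n_bb_no_cereal = n_basketball - n_both  # 1000 students
sup_n, conf_n = support_and_confidence(n_students, n_basketball, n_bb_no_cereal)
base_n = 1 - base_c  # 0.25: share of all students not eating cereal

# conf_c is below base_c, conf_n is above base_n: playing basketball
# lowers, not raises, the chance of eating cereal.
```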

Interest

• Yet another objective measure for interestingness is interest, which is defined as

$$\text{interest}(A, B) = \frac{P(A \wedge B)}{P(A)\,P(B)}$$

• Properties of this measure:
o takes both P(A) and P(B) into consideration
o P(A∧B) = P(A)·P(B) if A and B are independent events, so the value is then 1
o A and B are negatively correlated if the value is less than 1, and positively correlated if it is greater than 1
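Applied to the basketball and cereal example from the previous slide, the interest measure can be computed as follows. This is a sketch with illustrative names; the probabilities are taken from the counts in that example (3000 of 5000 students play basketball, 3750 eat cereal, 2000 do both).

```python
def interest(p_a_and_b, p_a, p_b):
    """Interest of A and B: P(A and B) / (P(A) * P(B))."""
    return p_a_and_b / (p_a * p_b)


p_basketball = 3000 / 5000
p_cereal = 3750 / 5000

# play basketball => eat cereal
i_cereal = interest(2000 / 5000, p_basketball, p_cereal)

# play basketball => not eat cereal
i_no_cereal = interest(1000 / 5000, p_basketball, 1 - p_cereal)

# i_cereal is about 0.89 (below 1, negative correlation);
# i_no_cereal is about 1.33 (above 1, positive correlation).
```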

J-measure

• The J-measure is also an objective measure for interestingness:

$$\text{J-measure} = \mathit{conf}(A)\left(\mathit{conf}(A \Rightarrow B)\,\log\frac{\mathit{conf}(A \Rightarrow B)}{\mathit{conf}(B)} + \bigl(1-\mathit{conf}(A \Rightarrow B)\bigr)\,\log\frac{1-\mathit{conf}(A \Rightarrow B)}{1-\mathit{conf}(B)}\right)$$

• Properties of J-measure:
o again, takes both P(A) and P(B) in consideration
o value is always between 0 and 1
o can be computed using pre-calculated values
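A minimal Python sketch of the J-measure, reading conf(A) and conf(B) as the frequencies P(A) and P(B) and conf(A ⇒ B) as the rule confidence. The base of the logarithm is not stated on the slide; base 2 is assumed here, and a term with probability 0 is taken to contribute 0. The sketch also assumes 0 < P(B) < 1.

```python
import math


def j_measure(p_a, p_b, conf_ab):
    """J-measure of the rule A => B (log base 2 is an assumption)."""
    def term(p, q):
        # p * log2(p / q), with the usual convention 0 * log(0) = 0
        return 0.0 if p == 0 else p * math.log2(p / q)

    return p_a * (term(conf_ab, p_b) + term(1 - conf_ab, 1 - p_b))


# Basketball/cereal example: P(A) = 0.6, P(B) = 0.75, conf(A => B) = 2/3.
j_rule = j_measure(0.6, 0.75, 2 / 3)

# If conf(A => B) equals P(B), A tells nothing about B and J is 0.
j_independent = j_measure(0.6, 0.75, 0.75)
```

The three inputs are exactly the values an association rule miner has already counted, which is what the slide means by pre-calculated values.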

Support/Frequency/J-measure

[Figure: number of rules (y-axis, 0 to 3000) as a function of the threshold (x-axis, 0 to 1) for Datasets 1-6.]

Confidence

[Figure: number of rules (y-axis, 0 to 3000) as a function of the confidence threshold (x-axis, 0 to 1) for Datasets 1-6.]

Example – Selection of Interesting Association Rules

• For reducing the number of association rules that have to be considered, we could, for example, use one of the following selection criteria:
o frequency and confidence
o J-measure or interest
o maximum rule size (whole rule, left-hand side, right-hand side)
o rule attributes (e.g., templates)

Example – Problems with selection of rules

• A rule can correspond to prior knowledge or expectations
o how to encode the background knowledge into the system?

• A rule can refer to uninteresting attributes or attribute combinations
o could this be avoided by enhancing the preprocessing phase?

• Rules can be redundant
o redundancy elimination by rule covers etc.

Interpretation and evaluation of the results of data mining

• Evaluation
o statistical validation and significance testing

o qualitative review by experts in the field

o pilot surveys to evaluate model accuracy

• Interpretation
o tree and rule models can be read directly

o clustering results can be graphed and tabled

o code can be automatically generated by some systems


Visualization of Discovered Patterns (1)

• In some cases, visualization of the results of data mining (rules, clusters, networks…) can be very helpful

• Visualization is actually already important in the preprocessing phase in selecting the appropriate data or in looking at the data

• Visualization requires training and practice

Visualization of Discovered Patterns (2)

• Different backgrounds/usages may require different forms of representation
o e.g., rules, tables, cross-tabulations, or pie/bar charts

• Concept hierarchy is also important
o discovered knowledge might be more understandable when represented at a high level of abstraction
o interactive drill up/down, pivoting, slicing and dicing provide different perspectives on the data

• Different kinds of knowledge require different kinds of representation
o association, classification, clustering, etc.


Visualization


Utilization of the results

[Figure: layered pyramid with increasing potential to support business decisions towards the top. Roles shown alongside: DBA, Data Analyst, Business Analyst, End User. Layers from top to bottom:]

• Making Decisions
• Data Presentation: Visualization Techniques
• Data Mining: Information Discovery
• Data Exploration: Statistical Analysis, Querying and Reporting
• OLAP, MDA
• Data Warehouses / Data Marts
• Data Sources: Paper, Files, Information Providers, Database Systems, OLTP

Summary

• Data mining: semi-automatic discovery of interesting patterns from large data sets

• Knowledge discovery is a process:
o preprocessing

o data mining

o post-processing

o using and utilizing the knowledge

Summary

• Preprocessing is important in order to get useful results!

• If a loosely defined mining methodology is used, post-processing is needed in order to find the interesting results!

• Visualization is useful in pre- and post-processing!

• One has to be able to utilize the found knowledge!


Reminder: Course Organization

Course Evaluation

• Passing the course: min 30 points
o home exam: min 13 points (max 30 points)
o exercises/experiments: min 8 points (max 20 points)
▪ at least 3 returned and reported experiments
o group presentation: min 4 points (max 10 points)

• Remember also the other requirements:
o attending the lectures (5/7)
o attending the seminars (4/5)
o attending the exercises (4/5)

Seminar Presentations/Groups 9-10

Visualization and data mining

D. Keim, H.P. Kriegel, T. Seidl: “Supporting Data Mining of Large Databases by Visual Feedback Queries”, ICDE’94.

Seminar Presentations/Groups 9-10

Interestingness

G. Piatetsky-Shapiro, C.J. Matheus: “The Interestingness of Deviations”, KDD’94.
