
Conservative to Confident: Treating Uncertainty Robustly Within Learning-Based Control

Chris J. Ostafew, Angela P. Schoellig, and Timothy D. Barfoot

Abstract: Robust control maintains stability and performance for a fixed amount of model uncertainty but can be conservative since the model is not updated online. Learning-based control, on the other hand, uses data to improve the model over time but is not typically guaranteed to be robust throughout the process. This paper proposes a novel combination of both ideas: a robust Min-Max Learning-Based Nonlinear Model Predictive Control (MM-LB-NMPC) algorithm. Based on an existing LB-NMPC algorithm, we present an efficient and robust extension, altering the NMPC performance objective to optimize for the worst-case scenario. The algorithm uses a simple a priori vehicle model and a learned disturbance model. Disturbances are modelled as a Gaussian Process (GP) based on experience collected during previous trials as a function of system state, input, and other relevant variables. Nominal state sequences are predicted using an Unscented Transform and worst-case scenarios are defined as sequences bounding the 3σ confidence region. Localization for the controller is provided by an on-board, vision-based mapping and navigation system enabling operation in large-scale, GPS-denied environments. The paper presents experimental results from testing on a 50 kg skid-steered robot executing a path-tracking task. The results show reductions in maximum lateral and heading path-tracking errors by up to 30% and a clear transition from robust control when the model uncertainty is high to optimal control when model uncertainty is reduced.

I. INTRODUCTION

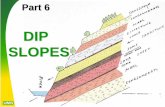

High-performance, path-tracking controllers for outdoor mobile robots require techniques to mitigate the effects of unknown surface materials, terrain topography, and complex robot dynamics. However, finding rich, accurate models a priori is difficult because (i) the terrain is often not known ahead of time, (ii) robot-terrain interaction models often do not exist, and (iii) even if such models did exist, finding corresponding model parameters is cumbersome.

Learning controllers alleviate the need for significant engineering work identifying and modelling all disturbances prior to operation by enabling the robot to acquire and apply experience in situ [1, 2]. In previous work, we presented a non-parametric, Learning-Based Nonlinear Model Predictive Control (LB-NMPC) algorithm [3] to reduce path-tracking errors within the context of an on-board, real-time, Visual Teach and Repeat (VT&R) mapping and navigation system [4]. In this work, we extend the algorithm by investigating a robust Min-Max LB-NMPC (MM-LB-NMPC) algorithm. The combined robust, learning controller merges the best of both worlds: robust, conservative control during initial trials when model uncertainty is high, converging to optimal control during later trials when model uncertainty is reduced.

The authors are with the University of Toronto Institute for Aerospace Studies, Toronto, Ontario, Canada. Email: [email protected], [email protected], and [email protected].

Fig. 1. A Clearpath Husky robot autonomously negotiating challenging terrain with side slopes, inclines, and variable wheel traction. In practice, simple vehicle models rarely represent reality and limit the performance and stability of model-based, path-tracking controllers. In this work, we present a robust learning-based controller that automatically transitions from robust to optimal control throughout the process of learning to track a path.

The learning algorithm is based on a process model composed of two components: (i) a unicycle model representing the kinematics of the robot, and (ii) a learned disturbance model representing both unmodelled robot dynamics and systematic environmental disturbances. We model disturbances as a Gaussian Process (GP) [5] based on observations gathered during previous path traversals as a function of system state, input, and other relevant system variables. By modelling the disturbances as a GP, the algorithm is able to predict both the mean and uncertainty of disturbances affecting the a priori process model. We use an Unscented Transform [6] to efficiently compute the mean and variance of the nominal state sequence given the two-component, learned, stochastic model. The MM-LB-NMPC cost function is optimized for the worst-case sequence bounding the nominal 3σ confidence region. We demonstrate the robust, learning control algorithm on a 50 kg Clearpath Husky robot and show reductions of worst-case path-tracking errors by up to 30% and a clear transition from robust towards optimal control with only a 5% increase in computation time.

The key characteristics of this work are: (i) a path-tracking, robust MM-LB-NMPC algorithm based on a fixed, a priori known kinematic process model and a learned GP disturbance model, (ii) efficient prediction of nominal and 3σ bounding sequences using an Unscented Transform, (iii) navigation based on vision only, and (iv) experimental results on a 50 kg robot. To our knowledge, this paper is the first to demonstrate a robust MM-LB-NMPC algorithm that automatically transitions from robust to optimal control as model uncertainty varies with experience.

2015 IEEE International Conference on Robotics and Automation (ICRA), Washington State Convention Center, Seattle, Washington, May 26-30, 2015

978-1-4799-6923-4/15/$31.00 ©2015 IEEE

II. RELATED WORK

MPC is a framework in which the current control action is obtained by solving, at each sampling instant, a finite-horizon optimal control problem using the current state of the plant as the initial state [7, 8]. Among a growing list of examples, MPC has been demonstrated in several real-world applications on ground robots [9–14]. However, in each of these examples, the system model is fixed and assumed to represent the system accurately. As a result, these controllers achieve stability and good performance in operating regimes limited in part by the a priori model and tuning parameters. In contrast, our algorithm simultaneously includes the ability to learn from experience and robustness to model uncertainty, thus enabling reliable operation on robots of vastly different masses and in a variety of terrains.

Learning-based control aims to improve performance over time by correcting the system model using experience (i.e., past measurements) [15–17]. Kocijan et al. [15] present an LB-MPC algorithm for a simulated pH neutralization process. In addition to tracking errors and control input, the cost function penalized model uncertainty, resulting in a controller that avoided uncertain states. In contrast, our Min-Max approach uses the model uncertainty for robust control, maintaining performance and stability despite model uncertainty. Lehnert and Wyeth [16], and Park et al. [17] present LB-MPC algorithms for an elastic joint manipulator and an omni-directional mobile robot, respectively. In each of these cases, the controllers considered only the mean predicted disturbance. Our approach considers both the learned mean and variance, enabling automatic shifts between robust and optimal control as model uncertainty varies. Finally, Tanaskovic et al. [18] present robust adaptive MPC for constrained systems. While adaptive control is only capable of reacting to modelling errors, our approach is based on a learned disturbance model and can therefore act in anticipation of repeatable disturbances.

Min-Max MPC maintains controller stability and performance despite model uncertainty by optimizing the performance objective for a worst-case scenario [19–24]. Scokaert and Mayne [21] present a Min-Max algorithm for robust performance of systems with bounded disturbances. In contrast, we assume normally distributed disturbances and use an Unscented Transform [6] to predict a nominal sequence, 3σ confidence region, and the associated boundary scenarios for the Min-Max algorithm. Bemporad et al. [22] and Kerrigan and Maciejowski [23] present algorithms that reduce the computation time of Min-Max MPC. In our work, we derive worst-case scenarios from the 3σ confidence region surrounding the predicted nominal sequence, representing a small increase in computation relative to our original learning algorithm [3]. Raimondo et al. [24] present a nonlinear Min-Max algorithm that separates state-dependent and state-independent disturbances to reduce conservativeness. In contrast, we reduce conservativeness over time by learning an improved nominal process model. Effectively, our controller naturally transitions to an optimal controller as model uncertainty decreases. To our knowledge, our work is the first to propose an MM-LB-NMPC algorithm.

Scenario MPC is a technique similar to Min-Max MPC [25–27]. However, instead of identifying a relatively small number of worst-case disturbance sequences, Scenario MPC relies on a (typically) large number of randomly sampled state sequences over the prediction horizon given the model uncertainty. Unlike Scenario MPC, our algorithm relies on a small number of worst-case scenarios bounding the nominal 3σ confidence region. This enables online operation and integration into our existing LB-NMPC algorithm.

Separately, Berkenkamp and Schoellig [28] combine robust control with machine learning techniques to adapt the model uncertainty over time. While they present a learning, robust controller to stabilize an operating point, we derive a controller for path-tracking. Aswani et al. [29] present a robust, linear LB-MPC algorithm that guarantees performance and stability by placing tube-shaped constraints on predicted sequences. In this work, we use Min-Max MPC, a less conservative approach to robust MPC, optimizing for worst-case scenarios.

III. VISUAL TEACH & REPEAT

Localization for the controller is provided by an on-board Visual Teach & Repeat (VT&R) mapping and navigation algorithm developed by Furgale and Barfoot [4] where the sole sensor is an on-board stereo camera. In the first operational phase, the teach phase, the robot is piloted along the desired path. Localization in this initial phase is obtained relative to the robot's starting position by visual odometry (VO). In addition to the VO pipeline, path vertices are defined at short and regular intervals along the path while simultaneously storing key frames composed of local feature descriptors and their 3D positions. During the repeat phase, the VT&R algorithm estimates the pose of the robot relative to the nearest path vertex by re-localizing against the stored key frames. Re-localization is achieved by matching feature descriptors to generate feature tracks between the current robot view and the teach-pass robot view. As long as sufficient correct feature matches are made, the system generates consistent localization over trials and is able to support a learning control algorithm.

IV. MATHEMATICAL FORMULATION

A. MM-LB-NMPC Overview

NMPC finds a sequence of control inputs that optimizes the plant behavior over a prediction horizon based on the current state. The first control input in the optimal sequence is then applied to the system, resulting in a new system state. The entire process is then repeated at the next sample time for the new system state.
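The receding-horizon pattern described above can be sketched in a few lines of Python. This is only an illustration: `run_receding_horizon`, `toy_plant`, and `toy_plan` are hypothetical names, the plant is a 1-D integrator, and the "planner" is a simple stand-in rather than the optimizer used in the paper.

```python
import numpy as np

def run_receding_horizon(x0, plant_step, plan, n_steps):
    """Apply only the first input of each freshly planned sequence."""
    x = np.asarray(x0, dtype=float)
    history = [x.copy()]
    for _ in range(n_steps):
        u_seq = plan(x)               # plan over the whole horizon from the current state
        x = plant_step(x, u_seq[0])   # apply the first input only, then re-plan
        history.append(x.copy())
    return np.array(history)

# Toy stand-ins (assumptions for illustration only).
def toy_plant(x, u):
    return x + u                      # 1-D integrator

def toy_plan(x, horizon=10):
    u_seq, x_pred = [], x.copy()
    for _ in range(horizon):
        u = -0.5 * x_pred             # halve the predicted error at every step
        u_seq.append(u)
        x_pred = x_pred + u
    return u_seq

trajectory = run_receding_horizon([1.0], toy_plant, toy_plan, n_steps=5)
```

Only `u_seq[0]` ever reaches the plant; the remaining planned inputs are discarded and recomputed from the newly measured state at the next step.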


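[Figure 2: block diagram connecting the GP-based Disturbance Model, the MM-NMPC Algorithm, and the Mobile Robot; the signals shown are $\mathbf{x}_{d,k}$, $\mathbf{x}_k$, $\mathbf{a}$, $\mathbf{g}(\mathbf{a})$, and $\mathbf{u}_k$.]

Fig. 2. The controller is composed of two components: 1) the robust, path-tracking, Min-Max NMPC algorithm, and 2) the GP-based Disturbance Model, providing experience-based disturbance estimates.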

In traditional NMPC implementations, the process model is specified a priori and remains unchanged during operation. In our previous work on LB-NMPC, we augmented a simple process model with the mean of an experience-based disturbance model. Effectively, the controller used experience to reduce path-tracking errors, compensating for effects not captured by the simple process model. In this work, we incorporate both the disturbance mean and uncertainty into the NMPC algorithm, resulting in an efficient robust extension that reduces the worst-case errors (Fig. 2). We consider a stochastic, learned process model,

$$\mathbf{x}_{k+1} = \overbrace{\mathbf{f}(\mathbf{x}_k, \mathbf{u}_k)}^{\text{a priori model}} + \overbrace{\mathbf{g}(\mathbf{a}_k)}^{\text{learned model}}, \tag{1}$$

with Gaussian system state, $\mathbf{x}_k \sim \mathcal{N}(\bar{\mathbf{x}}_k, \boldsymbol{\Sigma}_k) \in \mathbb{R}^n$, disturbance dependency, $\mathbf{a}_k \in \mathbb{R}^p$, and control input, $\mathbf{u}_k \in \mathbb{R}^m$, all at time $k$. The models $\mathbf{f}(\cdot)$ and $\mathbf{g}(\cdot)$ are nonlinear models: $\mathbf{f}(\cdot)$ is a simple, a priori vehicle model and $\mathbf{g}(\cdot)$ is an (initially unknown) disturbance model representing discrepancies between the nominal model and the actual system behavior. Disturbances are modelled as a Gaussian Process (Sec. IV-C), thus $\mathbf{g}(\cdot)$ is normally distributed, $\mathbf{g}(\cdot) \sim \mathcal{N}(\boldsymbol{\mu}(\cdot), \boldsymbol{\Sigma}_{gp}(\cdot))$. In our previous work, we showed that $\mathbf{g}(\cdot)$ could be used to learn higher-order dynamics by including historic states in the disturbance dependency [3]. However, for simplicity, we assume for now that $\mathbf{a}_k = (\mathbf{x}_k, \mathbf{u}_k)$.

As previously mentioned, the goal of NMPC is to find a set of controls that optimizes the plant behavior over a given prediction horizon. To this end, we define the cost function to be minimized over the next $K$ time-steps as

$$J(\mathbf{x}, \mathbf{u}) := (\mathbf{x}_d - \mathbf{x})^T \mathbf{Q}\, (\mathbf{x}_d - \mathbf{x}) + \mathbf{u}^T \mathbf{R}\, \mathbf{u}, \tag{2}$$

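where $\mathbf{Q}$ is positive semi-definite, $\mathbf{R}$ is positive definite, $\mathbf{u}$ is a sequence of inputs, $\mathbf{u} = (\mathbf{u}_k, \ldots, \mathbf{u}_{k+K})$, $\mathbf{x}_d$ is a sequence of desired states, $\mathbf{x}_d = (\mathbf{x}_{d,k+1}, \ldots, \mathbf{x}_{d,k+K+1})$, and $\mathbf{x}$ is a sequence of predicted states, $\mathbf{x} = (\mathbf{x}_{k+1}, \ldots, \mathbf{x}_{k+K+1})$. Previously, the objective was optimized for the mean of the nominal sequence, $\mathbf{x} = \bar{\mathbf{x}}$, where $\mathbf{x}_{\text{nom}} = \{\{\bar{\mathbf{x}}_{i+1}, \boldsymbol{\Sigma}_{i+1}\} \mid i = k, \ldots, k+K\}$. In this work, the objective is optimized for the worst-case sequence given the uncertainty in the learned model. Specifically, the nominal sequence is predicted using an Unscented Transform and $2^n$ worst-case scenarios, $\mathbf{x}^{(l)}$, $l \in \{1, \ldots, 2^n\}$, are defined in Sec. IV-B as sequences bounding the nominal 3σ confidence region. Finally, the optimal control sequence is given by

$$\mathbf{u}_{\text{opt}} = \arg\min_{\mathbf{u}} \max_{l} J(\mathbf{x}^{(l)}, \mathbf{u}). \tag{3}$$

Algorithm 1: MM-LB-NMPC
  Data: $\mathbf{x}_d$, $\{\bar{\mathbf{x}}_k, \boldsymbol{\Sigma}_k\}$, and $\mathbf{u}_{\text{init}}$
  Result: $\mathbf{u}_{\text{opt}}$
  1  initialization: $\bar{\mathbf{u}} = \mathbf{u}_{\text{init}}$;
  2  while $\|\delta\mathbf{u}\| > \alpha$ do
  3      Compute $\mathbf{x}_{\text{nom}}$ given $\bar{\mathbf{u}}$ and (1);
  4      Compute boundary sequences (NEW, cf. [3]);
  5      Find worst-case boundary sequence (NEW, cf. [3]);
  6      Linearize (2) around worst-case sequence and solve for $\delta\mathbf{u}$;
  7      Update control, $\bar{\mathbf{u}} \leftarrow \bar{\mathbf{u}} + \delta\mathbf{u}$;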
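The inner maximization in (3) only requires evaluating the cost (2) on each candidate boundary sequence and keeping the largest. A minimal NumPy sketch follows, assuming the stacked weights in (2) are block-diagonal with the same per-step `Q` and `R` (an assumption, since the source does not spell out their structure). The function names and toy data are hypothetical.

```python
import numpy as np

def sequence_cost(x_seq, u_seq, xd_seq, Q, R):
    """Cost (2) for one predicted sequence; arrays are (steps, dim)."""
    err = xd_seq - x_seq
    tracking = np.einsum("ki,ij,kj->", err, Q, err)     # sum_k err_k^T Q err_k
    effort = np.einsum("ki,ij,kj->", u_seq, R, u_seq)   # sum_k u_k^T R u_k
    return float(tracking + effort)

def worst_case(boundary_seqs, u_seq, xd_seq, Q, R):
    """Index and cost of the boundary sequence with the largest cost."""
    costs = [sequence_cost(x, u_seq, xd_seq, Q, R) for x in boundary_seqs]
    l_star = int(np.argmax(costs))
    return l_star, costs[l_star]

# Toy data: two candidate sequences for a 1-D state over two steps.
xd = np.zeros((2, 1))
u = np.zeros((2, 1))
candidates = [np.array([[0.1], [0.1]]), np.array([[-0.3], [0.2]])]
l_star, j_max = worst_case(candidates, u, xd, Q=np.eye(1), R=np.eye(1))
```

Because the same input sequence is shared by all candidates, the control-effort term does not change which sequence is worst; only the tracking term does.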

Since both our process model and disturbance model are nonlinear, the optimal control sequence, $\mathbf{u}_{\text{opt}}$, is found iteratively (Alg. 1) using a nonlinear optimization technique. In this paper, we use unconstrained Gauss-Newton minimization [30]. However, there are other nonlinear optimization algorithms, such as the constrained Gauss-Newton algorithm [31], that could be used to incorporate constraints on states and control inputs.

At each time-step, we begin with the current system state, $\{\bar{\mathbf{x}}_k, \boldsymbol{\Sigma}_k\} = \{\hat{\mathbf{x}}_k, \hat{\boldsymbol{\Sigma}}_k\}$, provided by the vision-based localization system, and an initial guess for the optimal control input sequence, $\bar{\mathbf{u}}$, such as the sequence of optimal inputs computed in the previous time-step (Alg. 1, Step 1). We compute the worst-case boundary sequence (Sec. IV-B, Alg. 1, Steps 3-5) based on $\bar{\mathbf{u}}$, (1), and

$$l^* = \arg\max_{l \in L} J(\mathbf{x}^{(l)}, \bar{\mathbf{u}}). \tag{4}$$

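We linearize (2) around the worst-case sequence with $\mathbf{u} = \bar{\mathbf{u}} + \delta\mathbf{u}$, and $\mathbf{x}^{(l^*)} = \bar{\mathbf{x}}^{(l^*)} + \delta\mathbf{x}^{(l^*)}$ (Alg. 1, Step 6). We write a linearized equation for the state,

$$\delta\mathbf{x}^{(l^*)} = \mathbf{H}\, \delta\mathbf{u}, \tag{5}$$

where $\mathbf{H}$ is the block-Jacobian of (1) with respect to $\mathbf{u}$. Substituting (5), $\mathbf{x}^{(l^*)} = \bar{\mathbf{x}}^{(l^*)} + \delta\mathbf{x}^{(l^*)}$, and $\mathbf{u} = \bar{\mathbf{u}} + \delta\mathbf{u}$ into (2) results in $J(\cdot)$ being quadratic in $\delta\mathbf{u}$. We can easily find the value of $\delta\mathbf{u}$ that minimizes $J(\mathbf{x}^{(l^*)}, \mathbf{u})$, update our control input (Alg. 1, Step 7),

$$\bar{\mathbf{u}} \leftarrow \bar{\mathbf{u}} + \delta\mathbf{u}, \tag{6}$$

and iterate to convergence, $\|\delta\mathbf{u}\| < \alpha$, with tuned value, $\alpha$. In accordance with NMPC, we apply the resulting control input for one time-step and start all over at the next time-step.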
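To make the quadratic step concrete: substituting (5) into (2) and setting the gradient with respect to δu to zero gives the linear system (HᵀQH + R) δu = HᵀQ e − R ū, where e = x_d − x̄^(l*) is the tracking error of the current worst-case sequence. The sketch below solves that system with NumPy. The function name and the toy linear "prediction" x = H u are assumptions for illustration; in the paper H comes from linearizing (1).

```python
import numpy as np

def gauss_newton_step(H, e, u_bar, Q, R):
    """Minimize (e - H du)^T Q (e - H du) + (u_bar + du)^T R (u_bar + du) over du."""
    A = H.T @ Q @ H + R           # positive definite because R is positive definite
    b = H.T @ Q @ e - R @ u_bar
    return np.linalg.solve(A, b)

# Toy problem: the predicted state is linear in the input, x = H u.
H, Q, R = np.eye(2), np.eye(2), np.eye(2)
x_d = np.array([1.0, 0.0])
u_bar = np.zeros(2)
alpha = 1e-9                      # convergence threshold on ||du||

for _ in range(20):
    e = x_d - H @ u_bar           # tracking error at the current operating point
    du = gauss_newton_step(H, e, u_bar, Q, R)
    u_bar = u_bar + du            # update (6)
    if np.linalg.norm(du) < alpha:
        break
```

With equal weights on error and input, the converged input sits halfway between zero effort and perfect tracking, which is the error-versus-input balance the cost (2) encodes.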

The addition of the 'max' in (3) is the extension from our previous work [3]: the algorithm now takes into account the worst-case boundary sequence, limiting the worst-case errors and guaranteeing stability for large model uncertainties. Moreover, there is an automatic transition as uncertainty decreases, from robust control, with an uncertain model, to optimal control, with a rich and accurate model.


[Figure 3: plot titled "Unscented Transform vs Monte Carlo Simulations"; axes x (m) and y (m); legend: Mean Unscented, ± 3σ Unscented, Monte Carlo.]

Fig. 3. Here we show the lateral 3σ boundaries of the mean sequence. Since our model uncertainty is normally distributed, we use an Unscented Transform to compute the mean and uncertainty of a predicted state sequence. However, unlike Scenario MPC, where many scenarios are sampled in order to find boundary sequences (red), the Unscented Transform efficiently estimates the 3σ confidence region (dashed blue).

B. Computing Worst-Case Sequences

In our previous work, the cost function is optimized based on the mean of the nominal state sequence. In this work, the cost function is optimized for the worst-case sequence bounding the nominal 3σ confidence region. Worst-case sequences are computed in two steps. First, the nominal state sequence is computed (Alg. 1, Step 3). Second, the worst-case sequences are extracted from the nominal sequence (Alg. 1, Step 4).

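Since $\mathbf{x}_k$ is normally distributed and (1) is nonlinear, we use an Unscented Transform [6] to iteratively predict the nominal state sequence, $\mathbf{x}_{\text{nom}}$, given $\mathbf{u}$, $\{\bar{\mathbf{x}}_k, \boldsymbol{\Sigma}_k\}$, and (1). We define an initial state, $\mathbf{z}_k := (\bar{\mathbf{x}}_k, \boldsymbol{\mu}(\mathbf{a}_k)) \in \mathbb{R}^{2n}$, with uncertainty, $\mathbf{P}_k := \operatorname{diag}(\boldsymbol{\Sigma}_k, \boldsymbol{\Sigma}_{gp}(\mathbf{a}_k))$. We compute $4n+1$ sigma points, $\mathcal{Z}_{k,i} := (\mathcal{X}_{k,i}, \mathcal{M}_{k,i})$, where $\mathcal{X}_{k,i}$ and $\mathcal{M}_{k,i}$ are the sigma points of $\mathbf{x}_k$ and $\boldsymbol{\mu}(\mathbf{a}_k)$,

$$\mathcal{Z}_{k,0} := \mathbf{z}_k \tag{7}$$

$$\mathcal{Z}_{k,i} := \mathbf{z}_k + \sqrt{2n+\gamma}\; \operatorname{col}_i \mathbf{S}_k, \quad i = 1 \ldots 2n \tag{8}$$

$$\mathcal{Z}_{k,i+2n} := \mathbf{z}_k - \sqrt{2n+\gamma}\; \operatorname{col}_i \mathbf{S}_k, \quad i = 1 \ldots 2n \tag{9}$$

where $\mathbf{S}_k \mathbf{S}_k^T = \mathbf{P}_k$ with $\mathbf{S}_k$ derived from the Cholesky decomposition of $\mathbf{P}_k$, $\operatorname{col}_i \mathbf{S}_k$ is the $i$th column of $\mathbf{S}_k$, and $\gamma$ is a tuning parameter. The sigma points are then passed through the nonlinear model,

$$\mathcal{X}_{k+1,i} := \mathbf{f}(\mathcal{X}_{k,i}, \mathbf{u}_k) + \mathcal{M}_{k,i}, \quad i \in \{0, \ldots, 4n\}, \tag{10}$$

where $\mathbf{f}(\cdot)$ is our a priori vehicle model. We combine the sigma points into the predicted mean and uncertainty,

$$\bar{\mathbf{x}}_{k+1} := \frac{1}{2n+\gamma} \left( \gamma\, \mathcal{X}_{k+1,0} + \frac{1}{2} \sum_{i=1}^{4n} \mathcal{X}_{k+1,i} \right) \tag{11}$$

$$\boldsymbol{\Sigma}_{k+1} := \frac{1}{2n+\gamma} \left( \gamma\, (\mathcal{X}_{k+1,0} - \bar{\mathbf{x}}_{k+1})(\mathcal{X}_{k+1,0} - \bar{\mathbf{x}}_{k+1})^T + \frac{1}{2} \sum_{i=1}^{4n} (\mathcal{X}_{k+1,i} - \bar{\mathbf{x}}_{k+1})(\mathcal{X}_{k+1,i} - \bar{\mathbf{x}}_{k+1})^T \right). \tag{12}$$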
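One prediction step of (7)-(12) can be sketched in NumPy as follows. This is an illustrative reading of the equations, not the authors' code: `unscented_step` and its arguments are hypothetical names, and the sketch assumes the stacked covariance is strictly positive definite so that the Cholesky factor exists.

```python
import numpy as np

def unscented_step(x_mean, Sigma, mu_gp, Sigma_gp, f, u, gamma=2.0):
    """Propagate mean and covariance through x_next = f(x, u) + g, following (7)-(12)."""
    n = x_mean.size
    z = np.concatenate([x_mean, mu_gp])                    # z_k in R^{2n}
    P = np.block([[Sigma, np.zeros((n, n))],
                  [np.zeros((n, n)), Sigma_gp]])           # P_k = diag(Sigma_k, Sigma_gp)
    S = np.linalg.cholesky(P)                              # S S^T = P (needs P > 0)
    scale = np.sqrt(2 * n + gamma)
    Z = ([z]
         + [z + scale * S[:, i] for i in range(2 * n)]     # (8)
         + [z - scale * S[:, i] for i in range(2 * n)])    # (9)
    X_next = np.array([f(Zi[:n], u) + Zi[n:] for Zi in Z]) # (10), shape (4n+1, n)
    w0 = gamma / (2 * n + gamma)
    wi = 0.5 / (2 * n + gamma)
    x_next = w0 * X_next[0] + wi * X_next[1:].sum(axis=0)  # (11)
    d = X_next - x_next
    Sigma_next = w0 * np.outer(d[0], d[0]) + wi * d[1:].T @ d[1:]   # (12)
    return x_next, Sigma_next

# Sanity check with a linear model, for which the transform is exact.
def linear_f(x, u):
    return x + u

x_next, Sigma_next = unscented_step(
    x_mean=np.array([1.0, 2.0]), Sigma=np.diag([0.04, 0.09]),
    mu_gp=np.array([0.1, -0.2]), Sigma_gp=np.diag([0.01, 0.02]),
    f=linear_f, u=np.array([0.5, 0.0]))
```

For the linear toy model the predicted mean is the sum of the state mean, input, and disturbance mean, and the predicted covariance is the sum of the state and disturbance covariances, which gives a quick check that the weights in (11) and (12) sum correctly.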

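This process is repeated $K$ times, until the complete nominal sequence, $\mathbf{x}_{\text{nom}}$, is generated. Finally, we compute $2^n$ boundary sequences (Alg. 1, Step 4) based on $\mathbf{x}_{\text{nom}}$ while assuming 3σ noise (Fig. 3). Assuming for now $n = 3$, and defining $\boldsymbol{\sigma}_k := (\sqrt{\boldsymbol{\Sigma}_k(1,1)}, \ldots, \sqrt{\boldsymbol{\Sigma}_k(3,3)})$, and

$$\begin{aligned}
\boldsymbol{\Gamma}^{(1)}_{\text{sign}} &= \operatorname{diag}(1, 1, 1), & \boldsymbol{\Gamma}^{(5)}_{\text{sign}} &= \operatorname{diag}(-1, 1, 1), \\
\boldsymbol{\Gamma}^{(2)}_{\text{sign}} &= \operatorname{diag}(1, 1, -1), & \boldsymbol{\Gamma}^{(6)}_{\text{sign}} &= \operatorname{diag}(-1, 1, -1), \\
\boldsymbol{\Gamma}^{(3)}_{\text{sign}} &= \operatorname{diag}(1, -1, 1), & \boldsymbol{\Gamma}^{(7)}_{\text{sign}} &= \operatorname{diag}(-1, -1, 1), \\
\boldsymbol{\Gamma}^{(4)}_{\text{sign}} &= \operatorname{diag}(1, -1, -1), & \boldsymbol{\Gamma}^{(8)}_{\text{sign}} &= \operatorname{diag}(-1, -1, -1),
\end{aligned} \tag{13}$$

then $\mathbf{x}^{(l)}_{i+1} = \bar{\mathbf{x}}_{i+1} + \boldsymbol{\Gamma}^{(l)}_{\text{sign}}\, 3\boldsymbol{\sigma}_{i+1}$, $i \in \{k, \ldots, k+K\}$.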
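The sign matrices in (13) are simply all combinations of ±1 on the diagonal, so the boundary sequences can be generated for any n with `itertools.product`. The sketch below is a hypothetical helper (the name `boundary_sequences` and the array layout are assumptions); its enumeration order matches the numbering of Γ⁽¹⁾ to Γ⁽⁸⁾ for n = 3.

```python
import itertools
import numpy as np

def boundary_sequences(x_means, Sigmas):
    """Offset the nominal means by +/- 3 sigma per state dimension.

    x_means: (steps, n) nominal means; Sigmas: (steps, n, n) covariances.
    Returns an array of shape (2**n, steps, n).
    """
    n = x_means.shape[1]
    sigma = np.sqrt(np.diagonal(Sigmas, axis1=1, axis2=2))            # (steps, n) std devs
    signs = np.array(list(itertools.product([1.0, -1.0], repeat=n)))  # rows = diagonals of Gamma^(l)
    return x_means[None, :, :] + 3.0 * signs[:, None, :] * sigma[None, :, :]

# Toy nominal sequence: two steps, zero mean, fixed diagonal covariance.
means = np.zeros((2, 3))
covs = np.tile(np.diag([0.01, 0.04, 0.09]), (2, 1, 1))
bounds = boundary_sequences(means, covs)
```

Note that the number of boundary sequences grows as 2ⁿ, which stays small for the three pose variables used here.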

C. Gaussian Process Disturbance Model

We model the disturbance, $\mathbf{g}(\cdot)$, as a GP based on past observations. Since we provided a detailed explanation of the model in previous work [3], here we provide only a high-level sketch. The learned model depends on observations of disturbances collected during previous trials. At time $k$, we use the estimated poses, $\hat{\mathbf{x}}_k$ and $\hat{\mathbf{x}}_{k-1}$, from the VT&R system (Sec. III), the disturbance dependency, $\mathbf{a}_{k-1}$, and the control input, $\mathbf{u}_{k-1}$, to solve (1) for $\hat{\mathbf{g}}(\mathbf{a}_{k-1})$,

$$\hat{\mathbf{g}}(\mathbf{a}_{k-1}) = \hat{\mathbf{x}}_k - \mathbf{f}(\hat{\mathbf{x}}_{k-1}, \mathbf{u}_{k-1}). \tag{14}$$

The resulting data pair, $\{\mathbf{a}_{k-1}, \hat{\mathbf{g}}(\mathbf{a}_{k-1})\}$, represents an individual experience. We collect all experiences into one large dataset, $\mathcal{D}$, with generally $N$ observations, and drop the time-step index on each data pair in $\mathcal{D}$, so that when referring to $\mathbf{a}_{\mathcal{D},i}$ or $\hat{\mathbf{g}}_{\mathcal{D},i}$, we mean the $i$th pair of data in the superset $\mathcal{D}$.

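In our work, we train a separate GP for each dimension in $\mathbf{g}(\cdot) \in \mathbb{R}^n$ to model disturbances as the robot travels along a path. For simplicity of discussion, we will assume for now that $n = 1$ and denote $\hat{\mathbf{g}}_{\mathcal{D},i}$ by $\hat{g}_{\mathcal{D},i}$. The GP model assumes a measured disturbance originates from a process model,

$$g(\mathbf{a}_{\mathcal{D},i}) \sim \mathcal{GP}\left(0, k(\mathbf{a}_{\mathcal{D},i}, \mathbf{a}_{\mathcal{D},i})\right), \tag{15}$$

with zero mean and kernel function, $k(\mathbf{a}_{\mathcal{D},i}, \mathbf{a}_{\mathcal{D},i})$, to be defined. We assume that each disturbance measurement is corrupted by zero-mean additive noise with variance, $\sigma_n^2$, so that $\hat{g}_{\mathcal{D},i} = g_{\mathcal{D},i} + \epsilon$, $\epsilon \sim \mathcal{N}(0, \sigma_n^2)$. Then a modelled disturbance, $g(\mathbf{a}_k)$, and the $N$ observed disturbances, $\hat{\mathbf{g}} = (\hat{g}_{\mathcal{D},1}, \ldots, \hat{g}_{\mathcal{D},N})$, are jointly Gaussian,

$$\begin{bmatrix} \hat{\mathbf{g}} \\ g(\mathbf{a}_k) \end{bmatrix} \sim \mathcal{N}\left( \mathbf{0}, \begin{bmatrix} \mathbf{K} & \mathbf{k}(\mathbf{a}_k)^T \\ \mathbf{k}(\mathbf{a}_k) & k(\mathbf{a}_k, \mathbf{a}_k) \end{bmatrix} \right), \tag{16}$$

where

$$(\mathbf{K})_{i,j} = k(\mathbf{a}_{\mathcal{D},i}, \mathbf{a}_{\mathcal{D},j}), \quad \mathbf{K} \in \mathbb{R}^{N \times N}, \tag{17}$$

such that $(\mathbf{K})_{i,j}$ is the $(i,j)$th element of $\mathbf{K}$, and

$$\mathbf{k}(\mathbf{a}_k) = \begin{bmatrix} k(\mathbf{a}_k, \mathbf{a}_{\mathcal{D},1}) & \ldots & k(\mathbf{a}_k, \mathbf{a}_{\mathcal{D},N}) \end{bmatrix}.$$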

In our case, we use the squared-exponential kernel function [5],

$$k(\mathbf{a}_i, \mathbf{a}_j) = \sigma_f^2 \exp\left( -\tfrac{1}{2} (\mathbf{a}_i - \mathbf{a}_j)^T \mathbf{M}^{-2} (\mathbf{a}_i - \mathbf{a}_j) \right) + \sigma_n^2\, \delta_{ij},$$

where $\delta_{ij}$ is the Kronecker delta, that is 1 iff $i = j$ and 0 otherwise, and the constants $\mathbf{M}$, $\sigma_f$, and $\sigma_n$ are hyperparameters. In our implementation with $\mathbf{a}_k \in \mathbb{R}^p$, the constant $\mathbf{M}$ is a diagonal matrix, $\mathbf{M} = \operatorname{diag}(\mathbf{m})$, $\mathbf{m} \in \mathbb{R}^p$, representing the relevance of each component in $\mathbf{a}_k$, while the constants $\sigma_f^2$ and $\sigma_n^2$ represent the process variation and measurement noise, respectively. Finally, we have that the prediction, $g(\mathbf{a}_k)$, of the disturbance at an arbitrary state, $\mathbf{a}_k$, is also normally distributed,

$$g(\mathbf{a}_k) \mid \hat{\mathbf{g}} \sim \mathcal{N}\left( \mathbf{k}(\mathbf{a}_k) \mathbf{K}^{-1} \hat{\mathbf{g}},\; k(\mathbf{a}_k, \mathbf{a}_k) - \mathbf{k}(\mathbf{a}_k) \mathbf{K}^{-1} \mathbf{k}(\mathbf{a}_k)^T \right).$$

[Figure 4: diagram of the robot relative to path vertices $\mathbf{x}_{d,i-1}$, $\mathbf{x}_{d,i}$, and $\mathbf{x}_{d,i+1}$, labelled with $v_k$, $\omega_k$, $x_k$, $y_k$, and $\theta_k$.]

Fig. 4. Definition of the robot velocities, $v_k$ and $\omega_k$, and the three pose variables, $x_k$, $y_k$, and $\theta_k$, calculated relative to the nearest path vertex by Euclidean distance.

Unlike our previous work, which used only the predicted mean of a disturbance in the model predictive controller, here we make use of both the predicted mean and variance. As detailed in Sec. IV-B, the variance of the disturbance is used to produce worst-case sequences that the controller should actively mitigate. During initial trials, when the model uncertainty is high, the predicted variance is large and the resulting control conservative. However, as the algorithm collects more experience, the predicted variance decreases, and the algorithm naturally transitions to an optimal controller.
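The predictive mean and variance used by the controller can be sketched with NumPy for the scalar case (n = 1). The names `se_kernel` and `gp_predict` are hypothetical. As an assumption of this sketch, measurement noise is added only on the diagonal of K and the prior variance at the query point is taken as σ_f², so the returned variance describes the noise-free disturbance.

```python
import numpy as np

def se_kernel(A, B, m, sigma_f):
    """Squared-exponential kernel with M = diag(m); noise term handled separately."""
    D = (A[:, None, :] - B[None, :, :]) / m          # scaled differences, shape (NA, NB, p)
    return sigma_f**2 * np.exp(-0.5 * np.sum(D**2, axis=-1))

def gp_predict(a_query, A_data, g_data, m, sigma_f, sigma_n):
    """Posterior mean and variance of the disturbance at one query point."""
    K = se_kernel(A_data, A_data, m, sigma_f) + sigma_n**2 * np.eye(len(A_data))
    k_vec = se_kernel(a_query[None, :], A_data, m, sigma_f)[0]
    mean = k_vec @ np.linalg.solve(K, g_data)                 # k K^{-1} g
    var = sigma_f**2 - k_vec @ np.linalg.solve(K, k_vec)      # k(a,a) - k K^{-1} k^T
    return float(mean), float(var)

# Toy data: two observed disturbances over a 1-D dependency.
A_data = np.array([[0.0], [1.0]])
g_data = np.array([1.0, -1.0])
hyper = dict(m=np.array([0.2]), sigma_f=1.0, sigma_n=0.01)

mean_seen, var_seen = gp_predict(np.array([0.0]), A_data, g_data, **hyper)
mean_far, var_far = gp_predict(np.array([10.0]), A_data, g_data, **hyper)
```

Near previously visited conditions the variance collapses towards the noise level, while far from any experience it returns to the prior σ_f². This is the mechanism behind the shift from conservative to optimal behaviour described above.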

V. IMPLEMENTATION

In our work, robots are modelled as unicycle-type vehicles with position, $\mathbf{x}_k = (x_k, y_k, \theta_k)$, calculated relative to the nearest path vertex by Euclidean distance, and velocity, $\mathbf{v}_k = (v_{\text{act},k}, \omega_{\text{act},k})$ (Fig. 4). The robots have two control inputs, their linear and angular velocities, $\mathbf{u}_k = (v_{\text{cmd},k}, \omega_{\text{cmd},k})$. The commanded linear velocity is set to a desired, scheduled speed at the nearest path vertex, leaving only the angular velocity, $\omega_{\text{cmd},k}$, for the NMPC algorithm to choose (i.e., we do not optimize the commanded linear velocity, leaving it to be scheduled in advance [32]).

When the time between control signal updates is defined as $\Delta t$, the resulting a priori model used by the algorithm is

$$\mathbf{f}(\mathbf{x}_k, \mathbf{u}_k) = \mathbf{x}_k + \begin{bmatrix} \Delta t \cos\theta_k & 0 \\ \Delta t \sin\theta_k & 0 \\ 0 & \Delta t \end{bmatrix} \mathbf{u}_k, \tag{18}$$

which represents a simple kinematic model for our robot; it does not account for dynamics or environmental disturbances. As described in our previous work [3], we use an extended disturbance dependency in practice, $\mathbf{a}_k = (\mathbf{x}_k, \mathbf{v}_{k-1}, \mathbf{u}_k, \mathbf{u}_{k-1})$, enabling the algorithm to learn higher-order disturbances in addition to kinematics. Since our robot is not equipped with velocity sensors, we approximate $\mathbf{v}_k$ according to

$$v_{\text{act},k-1} = \frac{\sqrt{(x_k - x_{k-1})^2 + (y_k - y_{k-1})^2}}{\Delta t},$$

and

$$\omega_{\text{act},k-1} = \frac{\theta_k - \theta_{k-1}}{\Delta t}.$$

This is preferable to using wheel encoders because we want the true speeds with respect to the ground and wheel encoders are unable to measure slip. Because $\mathbf{x}_k$ comes from our vision-based localization system, we are able to measure wheel slip in this way.
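The a priori model (18), the finite-difference velocity estimates, and the disturbance observation (14) fit together as in the following NumPy sketch. Function names are hypothetical, and, like the formulas above, the sketch does not wrap heading differences across ±π.

```python
import numpy as np

def unicycle(x, u, dt):
    """A priori model (18): x = (x, y, theta), u = (v_cmd, omega_cmd)."""
    theta = x[2]
    B = np.array([[dt * np.cos(theta), 0.0],
                  [dt * np.sin(theta), 0.0],
                  [0.0, dt]])
    return x + B @ u

def actual_velocities(x_prev, x_curr, dt):
    """Finite-difference estimates of ground speed and turn rate from two poses."""
    v = np.hypot(x_curr[0] - x_prev[0], x_curr[1] - x_prev[1]) / dt
    omega = (x_curr[2] - x_prev[2]) / dt
    return v, omega

def disturbance_sample(x_prev, u_prev, x_curr, dt):
    """Observed disturbance (14): measured pose minus the a priori prediction."""
    return x_curr - unicycle(x_prev, u_prev, dt)

# Toy poses (made-up numbers): the robot travels less far and turns more than commanded.
dt = 0.1
x_prev = np.array([0.0, 0.0, 0.0])
u_prev = np.array([0.5, 0.2])
x_curr = np.array([0.04, 0.01, 0.03])

g_hat = disturbance_sample(x_prev, u_prev, x_curr, dt)
v_act, omega_act = actual_velocities(x_prev, x_curr, dt)
```

Each `g_hat`, paired with the corresponding disturbance dependency, is one experience of the kind collected into the dataset in Sec. IV-C.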

VI. EXPERIMENTAL RESULTS

A. Overview

We tested the MM-LB-NMPC algorithm on a 50 kg Clearpath Husky robot traveling at 0.5 m/s. The controller described in Sec. IV was implemented and run in addition to the VT&R software on a Lenovo W530 laptop with a 2.6 GHz Intel Core i7 processor and 16 GB of RAM. The camera used for localization was a Point Grey Bumblebee XB3 stereo camera. The resulting real-time localization and path-tracking control signals were generated at approximately 10 Hz. Since GPS was not available, the improvement due to the MM-LB-NMPC algorithm was quantified by the localization of the VT&R algorithm.

B. Tuning Parameters

The performance of the system was primarily adjusted using the NMPC weighting matrices, $\mathbf{Q}$ and $\mathbf{R}$. We selected a 3:3:1 ratio balancing heading errors, position errors, and control inputs. Otherwise, the prediction horizon, $K = 10$, and the convergence criterion, $\alpha = 0.001K$, were selected to enable online operation. The Sigma-Point scaling parameter, $\gamma = 2$, was selected to enable accurate prediction of mean and uncertainty. Hyperparameters were set automatically by maximizing the log-likelihood of the measured disturbances [3]. Finally, path vertices were defined after each 0.2 m of travel or 3.5° of rotation during the VT&R teach phase to reduce discontinuities in estimated and desired state.
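For reference, the objective behind "maximizing the log-likelihood" is typically the standard GP log marginal likelihood [5]. The sketch below evaluates it for the squared-exponential kernel of Sec. IV-C in the scalar case. The function name is hypothetical and the optimizer is deliberately omitted, since the source does not state which one was used.

```python
import numpy as np

def log_marginal_likelihood(A_data, g_data, m, sigma_f, sigma_n):
    """Log p(g | A) for a zero-mean GP with squared-exponential kernel and noise."""
    D = (A_data[:, None, :] - A_data[None, :, :]) / m
    K = (sigma_f**2 * np.exp(-0.5 * np.sum(D**2, axis=-1))
         + sigma_n**2 * np.eye(len(A_data)))
    L = np.linalg.cholesky(K)                                 # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, g_data))  # K^{-1} g
    return float(-0.5 * g_data @ alpha
                 - np.sum(np.log(np.diag(L)))                 # equals -0.5 * log det K
                 - 0.5 * len(g_data) * np.log(2.0 * np.pi))

# Single-observation check where the value is easy to verify by hand.
ll = log_marginal_likelihood(np.array([[0.0]]), np.array([2.0]),
                             m=np.array([1.0]), sigma_f=1.0, sigma_n=1.0)
```

In practice this value would be passed to a numerical optimizer over `m`, `sigma_f`, and `sigma_n`, usually in log-space so the hyperparameters stay positive.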

C. Results

Over five trials, the algorithm successfully reduced the maximum lateral and heading errors by up to 30%. Fig. 5 highlights the progression from robust control, when model uncertainty was high, to optimal control, when the system had acquired experience and model uncertainty was reduced. In practice, the learned model uncertainty never goes to zero due to measurement noise. As a result, the MM-LB-NMPC algorithm shifted towards optimal control but ultimately struck a balance between robust and optimal control over time (Fig. 5).

In general, the Min-Max algorithm incurred an increase in computation time of only 5-10%. This confirms our selection of an Unscented Transform (Sec. IV-B) as an efficient method of predicting the 3σ confidence region without resorting to generating a large number of scenarios.

[Figure 5: three panels plotted against Trial Number (1 to 5) for LB-NMPC and MM-LB-NMPC: "Max Heading Rate Disturbance Uncertainty" (1σ uncertainty, rad/s), "Predicted Cost", and "Max Lateral Error" (m). Annotations: non-zero uncertainty due to measurement noise; MM-LB-NMPC shifts towards optimal control as uncertainty decreases; initially, with high model uncertainty, MM-LB-NMPC reduces worst-case error (robust control); later, MM-LB-NMPC balances errors and control inputs (optimal control).]

Fig. 5. Here we show the progression of model uncertainty (e.g., the maximum heading rate disturbance uncertainty), predicted costs, and maximum lateral error over several trials. The plots show the automatic transition from robust control, when uncertainty is high and maximum errors are reduced significantly, to optimal control, when model uncertainty is low and the controller finds a balance between errors and control inputs. Further, the algorithm reduces errors due to non-repetitive noise, such as measurement noise, that the learning algorithm is incapable of predicting.

As shown in Fig. 6, we tested on a short path highlighting the effect of the Min-Max algorithm. On this path, the measured disturbance affecting the heading rate of the robot peaked at approximately 0.5 rad/s, representing nearly 50% of the commanded input 7 m along the path (Fig. 7). The general trend observed is that path-tracking errors are reduced over the entire path (Fig. 8). However, the errors are not cancelled completely due to the optimality of the NMPC algorithm, which finds a balance between path-tracking errors and control inputs.

VII. CONCLUSION

In summary, this paper presents a novel, robust Min-Max Learning-Based Nonlinear Model Predictive Control (MM-LB-NMPC) algorithm. We derive an efficient and robust extension to an existing LB-NMPC algorithm, altering the performance objective to optimize for the worst-case scenario. The algorithm uses a simple a priori vehicle model and a learned disturbance model. Disturbances are modelled as a Gaussian Process (GP) based on experience collected during previous traversals as a function of system state, input, and other relevant variables. Nominal state sequences are predicted using an Unscented Transform and worst-case scenarios are defined as sequences bounding the 3σ confidence region.

[Figure 6: plot titled "Desired Path Based on Visual Odometry"; axes x (m) and y (m); the desired path is marked at 2 m intervals from 0 m to 12 m.]

Fig. 6. The test path for the MM-LB-NMPC algorithm. A short, demonstrative path was selected to highlight the improvements due to the Min-Max algorithm.

[Figure 7: plot titled "Heading Rate Disturbance"; Disturbance (rad/s) vs. Distance Along Path (m); legend: Measured, Predicted Mean, 3σ Confidence.]

Fig. 7. Trial 5 heading rate disturbance vs distance. The 3σ bounds represent the model uncertainty used to compute the 3σ confidence region and associated boundary sequences (Sec. IV-B). The goal of the Min-Max algorithm is to reduce worst-case errors given the model uncertainty.

[Figure 8: two panels vs. Distance Along Path (m): "Lateral Error" (m) and "Heading Error" (deg); legend: T1: LB-MPC, T1: MM-LB-MPC, T5: LB-MPC, T5: MM-LB-MPC. Annotation: MM-LB-NMPC reduces errors when model is uncertain.]

Fig. 8. Path-tracking errors vs distance for trials 1 (dashed) and 5 (solid). Reducing errors when model uncertainty is high (i.e., trial 1) is important for controller stability and perspective-dependent, vision-based localization algorithms. As model uncertainty decreases, the MM-LB-NMPC algorithm naturally transitions towards optimal control, balancing tracking errors and control inputs.

Experimental results are provided from tests with a 50 kg Clearpath Husky robot on a demonstrative path. The results show reductions in maximum lateral and heading path-tracking errors by up to 30% and a clear transition from robust control reducing worst-case errors, when the model uncertainty is high, to optimal control balancing tracking errors and control inputs, when model uncertainty is reduced. Furthermore, the algorithm requires only a 5-10% increase in computation time relative to the learning algorithm.

In retrospect, the MM-LB-NMPC algorithm offers an effective and efficient method of simultaneously exploiting and decreasing model uncertainty to improve controller performance and guarantee stability. Leveraging this work, our future work focuses on using the 3σ confidence region to provide safety guarantees and real-time speed scheduling.

VIII. ACKNOWLEDGEMENTS

The authors would like to thank the Ontario Ministry of Research and Innovation's Early Research Award Program for funding our research and Clearpath Robotics for funding the Husky A200 robot. This work was also supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) through the NSERC Canadian Field Robotics Network (NCFRN).

REFERENCES[1] S. Schaal and C. G. Atkeson. Learning Control in Robotics. IEEE

Robotics & Automation Magazine, 17(2):20–29, 2010.[2] D. Nguyen-Tuong and J. Peters. Model Learning for Robot Control:

A Survey. Cognitive Processing, 12(4):319–340, 2011.[3] C. Ostafew, A. P. Schoellig, and T. D. Barfoot. Learning-Based

Nonlinear Model Predictive Control to Improve Vision-Based MobileRobot Path-Tracking in Challenging Outdoor Environments. In Pro-ceedings of the International Conference on Robotics and Automation,pages 4029–4036, 2014.

[4] P. Furgale and T. D. Barfoot. Visual Teach and Repeat for Long-RangeRover Autonomy. Journal of Field Robotics, 27(5):534–560, 2010.

[5] C. E. Rasmussen. Gaussian Processes for Machine Learning. TheMIT Press, 2006.

[6] S. J. Julier and J. K. Uhlmann. Unscented Filtering and NonlinearEstimation. Proceedings of the IEEE, 92(3):401–422, 2004.

[7] M. Morari and J. H. Lee. Model Predictive Control: Past, Present andFuture. Computers and Chemical Engineering, 23(4):667–682, 1999.

[8] D. Q. Mayne, J. B. Rawlings, C. V. Rao, and P. O. M. Scokaert.Constrained Model Predictive Control: Stability and Optimality. Au-tomatica, 36(6):789–814, 2000.

[9] F. Kuhne, W. F. Lages, and J. M. G. Silva. Mobile Robot TrajectoryTracking using Model Predictive Control. In Proceedings of the Latin-American Robotics Symposium, pages 1–7, 2005.

[10] G. Klancar and I. Skrjanc. Tracking-Error Model-Based PredictiveControl for Mobile Robots in Real Time. Robotics and AutonomousSystems, 55(6):460–469, 2007.

[11] F. Xie and R. Fierro. First-State Contractive Model Predictive Controlof Nonholonomic Mobile Robots. In Proceedings of the AmericanControl Conference, pages 3494–3499, 2008.

[12] S. Peters and K. Iagnemma. Mobile Robot Path Tracking of Aggres-sive Maneuvers on Sloped Terrain. In Proceedings of the InternationalConference on Intelligent Robots and Systems, pages 242–247, 2008.

[13] M. Burke. Path-Following Control of a Velocity Constrained TrackedVehicle Incorporating Adaptive Slip Estimation. In Proceedings of theInternational Conference on Intelligent Robots and Systems, pages 97–102, 2012.

[14] T. M. Howard, C. J. Green, and A. Kelly. Receding Horizon Model-Predictive Control for Mobile Robot Navigation of Intricate Paths.In Proceedings of the 7th Annual Conference on Field and ServiceRobotics, pages 69–78, 2009.

[15] J. Kocijan, R. Murray-Smith, C. E. Rasmussen, and A. Girard.Gaussian Process Model Based Predictive Control. In Proceedingsof the American Control Conference, volume 3, pages 2214–2219,2004.

[16] C. Lehnert and G. Wyeth. Locally Weighted Learning Model Pre-dictive Control for Nonlinear and Time Varying Dynamics. In Pro-ceedings of the International Conference on Robotics and Automation,pages 2604–2610, 2013.

[17] S. Park, S. Mustafa, and K. Shimada. Learning-Based Robot Controlwith Localized Sparse Online Gaussian Process. In Proceedings ofthe International Conference on Intelligent Robots and Systems, pages1202–1207, 2013.

[18] M. Tanaskovic, L. Fagiano, R. Smith, and M. Morari. Adaptive Re-ceding Horizon Control for Constrained MIMO Systems. Automatica,50(12):3019–3029, 2014.

[19] H. S. Witsenhausen. A Minimax Control Problem for Sampled LinearSystems. IEEE Transactions on Automatic Control, 13(1):5–21, 1968.

[20] P. J. Campo and M. Morari. Robust Model Predictive Control. InProceedings of the American Control Conference, pages 1021–1026,1987.

[21] P. O. M. Scokaert and D. Q. Mayne. Min-Max Feedback ModelPredictive Control for Constrained Linear Systems. IEEE Transactionson Automatic Control, 43(8):1136–1142, 1998.

[22] A. Bemporad, F. Borrelli, and M. Morari. Min-Max Control of Con-strained Uncertain Discrete-Time Linear Systems. IEEE Transactionson Automatic Control, 48(9):1600–1606, 2003.

[23] E. C. Kerrigan and J. M. Maciejowski. Feedback Min-Max ModelPredictive Control using a Single Linear Program: Robust Stability andthe Explicit Solution. International Journal of Robust and NonlinearControl, 14(4):395–413, 2004.

[24] D. M. Raimondo, D. Limon, M. Lazar, L. Magni, and E. F. Camacho.Min-Max Model Predictive Control of Nonlinear Systems: A UnifyingOverview on Stability. European Journal of Control, 15(1):5 – 21,2009.

[25] L. Blackmore. A Probabilistic Particle Control Approach to Optimal,Robust Predictive Control. In Proceedings of the AIAA Guidance,Navigation and Control Conference, 2006.

[26] J. Matsuko and F. Borrelli. Scenario-Based Approach to StochasticLinear Predictive Control. In Proceedings of the Conference onDecision and Control, pages 5194–5199, 2012.

[27] G. C. Calafiore and L. Fagiano. Robust Model Predictive Control viaScenario Optimization. IEEE Transactions on Automatic Control, 58(1):219–224, 2013.

[28] F. Berkenkamp and A. P. Schoellig. Learning-Based Robust Control:Guaranteeing Stability while Improving Performance. In Proceedingsof the Workshop on Machine Learning in Planning and Control ofRobot Motion, International Conference on Intelligent Robots andSystems, 2014.

[29] A. Aswani, H. Gonzalez, S. Shankar Sastry, and C. Tomlin. ProvablySafe and Robust Learning-Based Model Predictive Control. Automat-ica, 49:1216–1226, 2013.

[30] J. Nocedal and S. Wright. Numerical Optimization, volume 2. SpringerNew York, 1999.

[31] M. Diehl, H. J. Ferreau, and N. Haverbeke. Efficient NumericalMethods for Nonlinear MPC and Moving Horizon Estimation. InLecture Notes in Control and Information Sciences, Volume 384,Nonlinear Model Predictive Control, pages 391–417. Springer, 2009.

[32] C. Ostafew, J. Collier, A. P. Schoellig, and T. D. Barfoot. SpeedDaemon: Experience-Based Mobile Robot Speed Scheduler. In Pro-ceedings of the International Conference on Computer and RobotVision, pages 56–62, 2014.
