Concurrent software engineering: prospects and pitfalls

IEEE TRANSACTIONS ON ENGINEERING MANAGEMENT, VOL. 43, NO. 2, MAY 1996 179

Concurrent Software Engineering: Prospects and Pitfalls

Joseph D. Blackburn, Geert Hoedemaker, and Luk N. Van Wassenhove

Abstract: Software development remains largely a sequential, time-consuming process. Concurrent engineering (CE) principles have been more widely adopted and with greater success in hardware development. In this paper a methodology for marrying CE principles to software engineering, or concurrent software engineering (CSE), is proposed. CSE is defined as a management technique to reduce the time-to-market in product development through simultaneous performance of activities and processing of information. A hierarchy of concurrent software development activity is defined, ranging from the simplest (within stage) to the most complex (across products and platforms). The information support activities that underpin this activity hierarchy are also defined, along with two key linking concepts: synchronicity and architectural modularity. Principles of CSE are developed for each level in the activity hierarchy. Research findings that establish limitations to implementing CE are also discussed.

I. INTRODUCTION

As an industry, software has become one of the largest and fastest growing, with global expenses on software development exceeding $375 billion [1]. However, the process of software development still closely resembles craft production. As a result, software routinely is over budget, exceeds its time target, and is riddled with defects [2].

A persuasive case for concurrent engineering (CE) in product development is well documented in the literature. We define CE as a management technique to reduce the time-to-market in product development through simultaneous performance of activities and processing of information. CE principles have been widely adopted to replace sequential, “over-the-wall” processes and to shrink lead-times in hardware design [3], [4]. Yet despite these hardware successes, software development remains largely sequential; simultaneous activity occurs only in isolated parts of the project, such as coding and testing.

Increasingly, software development has become closely coupled with hardware design. Tight linkages are especially important in firmware, or software designed to be embedded in hardware, where changes in either one usually require changes in the other. As hardware development times have shrunk and software complexity has increased, software often defines the critical path for new product introduction, and dilatory software development becomes a market liability. Managing large software projects in isolation is a formidable problem, but the difficulties are magnified in firmware by the need for coordinated communication among the hardware/software design teams.

Manuscript received October 8, 1994. Review of this manuscript was arranged by Guest Editor D. Gerwin. This work was supported in part by the Operations Roundtable and by the Dean’s Summer Research Fund, Owen Graduate School of Management, Vanderbilt University.

J. D. Blackburn is with the Owen Graduate School of Management, Vanderbilt University, Nashville, TN 37203 USA.

G. Hoedemaker is with Delta Lloyd Insurance Group, 2518 BM The Hague, The Netherlands.

L. N. Van Wassenhove is with the Technology Management Group, INSEAD, 77305 Fontainebleau Cedex, France.

Publisher Item Identifier S 0018-9391(96)04456-X.

The theme of this paper is that the software development process can be greatly improved in terms of speed, quality, and cost, and that there is much to be learned from the experiences in hardware development with CE. Since this requires a marriage of software engineering principles with those of CE, we call the resulting methodology concurrent software engineering (CSE). This paper makes the case for CSE and describes how it can be applied. After reviewing the state of software development and some basic CE concepts, we present a framework for CSE implementation based on a hierarchy of concurrent activity ranging from its simplest form, within project stage, to more complex projects across multiple products and platforms.

II. THE STATE OF SOFTWARE DEVELOPMENT

Software has been developed for commercial purposes since the late 1950’s. Initially, writing software programs for computers was viewed as the creative act of highly skilled artisans working in isolation. Some in the industry still consider software design an art and dismiss software engineering as an oxymoron.

As the industry grew, however, the projects became too large and complex to be carried out by an individual; budgets and time constraints were exceeded with alarming regularity. Efforts to manage software more effectively led to a discipline of software engineering for more rigorous project management in which division of labor and structured problem-solving techniques support creativity. Software engineering sought to eliminate bad practices and to impose formal methods for rigor in specification and design of software. In addition, it sought to enhance productivity and quality by providing computer aided software engineering (CASE) tools to developers for all stages of the process.

Several models have been proposed to structure the process of software development. One of the most widely used (and oldest) is the Waterfall model [5]. Although the Waterfall model adds structure to what had typically been an undisciplined process, it is a sequential approach in which stage reviews and approvals are scheduled before proceeding to the next stage. In the Waterfall model concurrency is usually limited to overlapping activities within a stage, such as coding, and the need for cross-functional communication is minimized. Other models such as the spiral model [6] and rapid prototyping have evolved, yet these are also sequential approaches. In the past decade, W. Humphrey and his colleagues have stimulated interest in a structured, defined software process with the software process maturity model [7] and its successor the capability maturity model [8]. Although the software process has become more structured, new tools have been developed, and reports of impressive productivity improvements have been published [9], [10], the adoption of CE principles in software seems to be much less widespread and more ad hoc than among hardware design groups. For us, this raises the research question of whether management of software as a concurrent process could yield even greater productivity gains.

0018-9391/96$05.00 © 1996 IEEE

Fig. 1. Percent reduction in development time factors. (Bar chart; vertical axis from 0% to 20%; one group of bars per factor: prototyping, customer specs, CASE tools, concurrent development, less rework, project team, test strategy, reuse of code, module size, improved communication, programmers/engineers.)

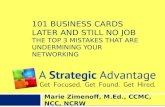

During 1993-1994 we assessed the state of software development by surveying managers of over 150 projects in the United States, Europe, and Japan, and by following up with field interviews [11]. We asked them to review a recently completed software project and reflect upon the tools and techniques that were most useful in reducing project completion time. Most of these projects are large, ranging in size from 2000 to 1 million lines of code. By their own assessment, software managers perceive their organizations as becoming faster and more productive. When asked to estimate the duration of the project had it been undertaken five years earlier, the managers indicated that, on average, the project would have taken 30% longer.

To learn the degree to which CE principles were important, we asked software managers to rank the following factors with respect to their effectiveness in reducing overall software development time: the use of prototyping, better initial customer specifications, use of CASE tools, concurrent development of stages or modules, less rework or recoding, improved project team management, better testing strategies, reuse of code or modules, changes in module size and/or linkages, improved team communication, and better programmers or software engineers. Data for our U.S. and Japanese samples are presented in Fig. 1 to show the percentage reduction in development time by factor.

There is clearly some evidence that concurrency practices are having an effect on reducing development time. In the American and Japanese firms in our survey, code reuse (a form of concurrency across projects rather than within) is rated the most important factor. Concurrent development is the second most important factor in Japan, and better customer specifications, an information support activity for concurrency, were highly valued in the United States. A possible explanation for the lower value for better customer specifications in Japan is that these firms have already adopted many of the tools used in hardware design for determining customer requirements. Most surprising, however, were the low values in both samples for the use of CASE tools and techniques and for module size and linkages. When we questioned a software manager for a large telecommunications firm, he responded [12]:

CASE tools have improved, and we have adopted them. But the improvements in productivity with CASE tools cannot keep pace with the increasing complexity of our software projects.

Fig. 2. Development stage as percent of total development time. (Bar chart; vertical axis from 0% to 35%; stages on the horizontal axis: Determining Customer Requirements, Planning and Specifications, Detail Design, Coding/Implementation, Testing/Integration; the series legend includes Japan.)

In his research on CE, Yeh [13] noted that “heavy investment in CASE technology has delivered disappointing results, primarily due to the fact that CASE tools tend to support the old way of developing software, i.e., sequential engineering.” These observations suggest that concurrent management principles may be more important than CASE tools and should precede the introduction of tools.

Analysis of reuse data provides additional insight into the status of concurrency practice. Follow-up interviews in Japan indicate that reuse is reactive: it tends to be more important in projects that are revisions of earlier software programs. When the Japanese survey responses of Fig. 1 are partitioned into two groups (new software projects and revisions of earlier projects), the percentage reduction factor for reuse is 21.5% for revisions and only 9.5% for new projects. This suggests that the firms lack a proactive, design-for-reuse strategy; they are not successfully designing new programs around reusable objects. Object-oriented (O-O) programming promises to change all this, and many firms are studying it (particularly in Japan and the United States). In our recent sample, half of the American firms were using languages that support O-O development, but the percentage in Europe was under 15%. Designing for reuse is an important element of the framework for CSE that we introduce in Section IV.

In interviews managers admit that software development in their organizations remains largely sequential [12]: they follow a version of the Waterfall model. These observations confirm what researchers such as Humphrey [14, p. 249] and others [15], [16] have noted about this process model: it is still “the best known and widely used overview framework for the software development process.” In addition, we found in our research that how time is allocated across the project stages has an important effect on software development. Most software firms devote little time and few resources to the early stages of the project; time and effort build as the project moves from concept to coding and testing. As shown in Fig. 2, on average, coding and testing still constitute more than 50% of the effort. Our most recent survey data indicate a high correlation between software development speed and time spent in the specification stage of the process [11].

Although software firms have adopted many of the practices that we associate with CE, the impact of these practices is diminished by the piecemeal, reactive way in which they have been invoked, as is the case with reuse. By attempting concurrent activity only in the detailed design stage of a project, firms are robbed of the full benefits that CE can bring.

In our assessment the missing piece in the puzzle is management. To achieve its potential in software development, CE should be practiced as a coordinated management effort across all stages of the project (and across projects) instead of as a selective application of tools. To do this, management needs a framework to coordinate the application of CE throughout the software development process. The purpose of the succeeding sections is to describe such a framework.

III. CE DEFINED

CE, like TQM or JIT, means different things to different people. There is no common definition, and most descriptions of CE in the literature present it as an unstructured set of concepts and tools. Some researchers describe CE as a parallel design activity and others stress the integrated team approach to design in which the concerns of the different functions in the organization are addressed. For managers, the lack of a clearly defined template for CE can be an obstacle to implementation. Some of the confusion about CE arises because, in the process of design and development, concurrency actually takes two different forms: concurrency in activities and concurrency in information.

Fig. 3. Levels of activity concurrency. (Diagram; legible labels include Requirements, Modularization, 1984 Model Development, and 1985 Model Development.)

In our definition of CE, we stress the need to manage both activity and information concurrency. Activity concurrency refers to the tasks and design activities that are performed simultaneously by different people or groups. Information concurrency refers to the integrated, or team, approach to development in which all the concerns of the different functions (the customer, R&D, design, engineering, manufacturing, sales, and service) are addressed through a flow of shared information. We focus on development time instead of the quality improvement and cost reduction potential of CE. Time-to-market is critical from a competitive standpoint and time-driven process improvements also tend to improve quality and reduce cost.

The definition of CE in terms of activities and information provides the framework around which we build the concept of CSE.

A. The Hierarchy of Activity Concurrency

There is a hierarchy of activity concurrency in development, ranging from within stage to across projects and platforms (as shown in Fig. 3).

Within-stage overlap of tasks is the simplest form of activity concurrency. As it is commonly practiced within a stage such as detailed design, the problem of designing, say, a new model TV is subdivided into modules (e.g., power supply, tuner, projection system) that are designed in parallel. Although less common, parallel activity can also be practiced within other stages, such as the requirements stage, where different functions (marketing, R&D, and software engineers) work in parallel to specify customer requirements.

Across stage overlap involves concurrent activity across different stages of the development process, such as high-level design, detailed design and testing. For example, some firms overlap detailed design and testing activity. Instead of waiting until all the design modules are completed before testing, they overlap and time-compress this activity by simulating the presence of other components and incorporating the testing into the detailed design phase. Few firms attempt this activity because, although potential integration problems are exposed earlier, the risk and cost of failure are high.
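As a rough illustration of what "simulating the presence of other components" can look like in software, the sketch below tests one module against a stand-in for a component that another team has not finished. It is a minimal sketch under stated assumptions: the names `Tuner`, `StubTuner`, and `ChannelDisplay` are hypothetical and chosen only to echo the TV example; they do not come from the firms surveyed.

```python
from typing import Protocol


class Tuner(Protocol):
    """Hypothetical interface fixed during high-level design."""

    def current_channel(self) -> int: ...


class StubTuner:
    """Simulates the tuner module, which is assumed to be still in design."""

    def __init__(self, channel: int) -> None:
        self._channel = channel

    def current_channel(self) -> int:
        return self._channel


class ChannelDisplay:
    """Module under design; it depends only on the Tuner interface."""

    def __init__(self, tuner: Tuner) -> None:
        self._tuner = tuner

    def banner(self) -> str:
        # Format the channel number as a two-digit on-screen banner.
        return f"CH {self._tuner.current_channel():02d}"


def test_banner_with_simulated_tuner() -> None:
    # Testing starts before the real tuner exists, using the stub instead.
    display = ChannelDisplay(StubTuner(channel=7))
    assert display.banner() == "CH 07"


if __name__ == "__main__":
    test_banner_with_simulated_tuner()
    print("display module passes against the simulated tuner")
```

The stub only removes the wait for the other module. If the agreed interface later changes, both the stub and the tests must be reworked, which is one way the risk and cost of failure noted above can show up.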

Hardware/software overlap occurs when software must be embedded into a larger system, such as a TV set, which integrates hardware and software. Once the high-level design and product specifications have been determined, design of hardware components (the exterior box, the projection tube, and circuit boards) can be performed in parallel with development of the software required to drive the system and its features.

Across project overlap presents a different type of concurrency challenge for management: components designed for one product can be reused in current and future product releases. That is, the design activity for two or more products takes place simultaneously through the design of reusable, or multiuse, components. For example, in the design of software to support a new feature for TV, such as multichannel screen displays (or picture-in-picture), a robust software module that can deliver the feature in other models without reprogramming is a productivity enhancing form of concurrency.

Activity concurrency, in all its forms, is supported in varying degrees by information concurrency, as described in the next section.

B. Information Concurrency

Our model of information concurrency builds upon and extends Clark and Fujimoto’s information processing framework for supporting overlapping problem solving activities in design [17]. Information flows to support concurrency take three forms, which we call 1) front loading, 2) flying start, and 3) two-way high bandwidth flow.

1) Front loading is the early involvement in upstream design activities of downstream functions or issues: process engineering, manufacturing, customer service concerns, and even future market developments. Front loading provides an early warning about issues that, if not considered, could lead to costly redesign and rework later. In hardware, for example, front loading of manufacturability problems can keep them from becoming major show stoppers when the design is transferred to production. Front loading of information about the direction of developing technology can aid the creation of more robust, reusable designs.

2) Flying start is preliminary information transfer flowing from upstream design activities to team members primarily concerned with downstream activities. For example, partial design information about new materials to be used in a product can provide a jump start for process engineering and help compress the time in process design activities.

3) Two-way high bandwidth information exchange is intensive and rich communication among teams while performing concurrent activities. The information flow includes communication about potential design solutions and about design changes to avoid infeasibilities and interface problems. Two-way high bandwidth flows differ from front loading and flying start, which tend to be unidirectional flows of information from one function to another. For example, the hardware/software overlap diagram in Fig. 3 displays a situation in which two-way high bandwidth flows are critical: teams involved in concurrent hardware and software design need to have a steady flow of information among the groups to prevent potential integration problems.

Fig. 4. Framework for CSE. (Diagram; legible labels include Information Concurrency, Activity Concurrency, Front Loading, Two-way High Bandwidth, Overlap (Reuse), and Architectural Modularity.)

C. Linkages Between Information and Activity Concurrency

Managing the complex relationships between information flows and concurrent activity is the essential task of the project manager. To explain the linkage between information and activity concurrency, we extend the model proposed by Clark and Fujimoto to introduce two critical enablers: architectural modularity and synchronicity. Architectural modularity is the division of design problems into modules with well-defined functionality and interfaces (to “plug and play”). How the design problem is subdivided determines the type and level of information flows required to support concurrent activity. Synchronicity is the coordination of parallel activity. Design work done in parallel needs to remain synchronized because the “plane does not fly until all the parts are there.” It is primarily the project leader’s responsibility to ensure that groups working in parallel communicate with each other to synchronize completion times and integrate effectively.

Small batch information processing facilitates all forms of information concurrency. Transferring information frequently and in small quantities, rather than sequentially and in large batches, supports concurrent activity by reducing the time delays in communication. This is the information equivalent of a salient characteristic of “lean manufacturing”: the ability to process materials and transfer them in small batches is a key skill in implementing JIT [18]. Analogously, small batch information processing provides “just-in-time” information flow that makes concurrent activity feasible.
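The effect of batch size on delay can be sketched with a toy two-stage model. This is an illustrative assumption, not a model taken from the survey or from [18]: an upstream group produces units of design information at a fixed rate, releases them in batches, and a downstream group can work on a unit only after its batch has arrived. The function name `finish_time` and its parameters are hypothetical.

```python
def finish_time(units: int, batch: int, up: float = 1.0, down: float = 1.0) -> float:
    """Time at which the downstream group finishes all units.

    units: number of information units produced upstream
    batch: units released to downstream per transfer
    up:    upstream time per unit
    down:  downstream time per unit
    """
    if units <= 0 or batch <= 0:
        raise ValueError("units and batch must be positive")
    downstream_clock = 0.0
    for start in range(0, units, batch):
        end = min(start + batch, units)
        arrival = end * up  # a batch is released when its last unit is done
        # Downstream waits for the batch if it is idle, then processes it.
        downstream_clock = max(downstream_clock, arrival) + (end - start) * down
    return downstream_clock


if __name__ == "__main__":
    for size in (12, 4, 1):
        print(f"batch size {size:2d}: finished at t = {finish_time(12, size)}")
```

With 12 units and unit processing times on both sides, one large batch finishes at t = 24, batches of four at t = 16, and single-unit transfers at t = 13. The sketch ignores any fixed cost per transfer; adding one would put a lower bound on the useful batch size.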

Fig. 4 presents our view of how the process of CE can be structured, with a design practice (architectural modularity) and a management practice (synchronicity) serving as key linkages between information and activity concurrency. In the section that follows, we apply this process to software development and describe a framework for CSE.

IV. APPLYING CE TO SOFTWARE: CSE

CSE is the application of CE principles to the software development process. In the model we propose for software, each level of activity concurrency poses a different set of management problems and tradeoffs whose solution requires appropriate design (architectural modularity) and project management (synchronicity) principles. As shown in Fig. 4, these practices are supported by concurrent information flows: front loading, flying start, and two-way high bandwidth flows. In the remainder of this section we explain why the principle of architectural modularity is the key enabler for both within-stage and across project concurrency, whereas synchronicity is the critical management practice that supports concurrency across stages and across the hardware/software interface. A summary of the relationships is provided in Table I.

TABLE I
FRAMEWORK FOR CSE: SUPPORT NEEDED ACROSS HIERARCHY OF ACTIVITIES

| | Within-Stage | Across Projects | Across Stages | Hardware/Software |
|---|---|---|---|---|
| Information Flows | | | | |
| Front Loading | Critical Support Activity | Critical Support Activity | Critical Support Activity | Critical Support Activity |
| Flying Start | Less Critical | Less Critical | Critical | Critical |
| Two-way High-Bandwidth Flow | Need Increases in Inverse Proportion to Success with Modularity | Less Critical | Critical | Critical |
| Enabling Practices | | | | |
| Architectural Modularity | Critical | Critical for Reuse | Less Critical | Less Critical |
| Synchronicity | Need Increases in Inverse Proportion to Success with Modularity | Less Critical | Critical | Critical |

A. Within-Stage Overlap

Parallel design activity is a widespread practice in the detailed design stage of both hardware and software: modularizing the problem into elementary building blocks to be solved by an individual or small team. For this decomposition, architectural modularity plays a vital role because the objective is to subdivide the design problem into modules that have clearly defined inputs, outputs, and functionality, have minimal interfaces with other modules, and are robust (or stable) with respect to external changes. The similarity between the characteristics of a well-defined module and an object, the basic building block of O-O design and programming, is not coincidental: much of the potential payoff from O-O as a productivity tool is based on its support for concurrent activity through architectural modularity.

O-O design is the latest, most promising technique to support architectural modularity in software [1]. Earlier attempts such as structuring problems in terms of subroutines and top-down functional decomposition ultimately failed because they yielded designs that were insufficiently resistant to change. Our surveys of current software practice described in Section II suggest that much of the promise of O-O programming remains largely unfulfilled; the gains in efficiency are coming more from other factors of team management than from O-O tools. Although many U.S. software developers we interviewed are now using programming languages and tools to support O-O development, the response of one manager is typical [12]:

I continue to be frustrated by our inability to define stable objects. Changes and rework are killing us.

Any within-stage efficiencies achieved through architectural modularity can be wiped out by changes in design specifications and interfaces that require rework. Consequently, front loading is the key information flow for architectural modularity, and it begins at the high-level design stage, where advance information about possible design changes, customer requirements, and reuse concerns can improve the layout of design modules.

Research and experience in hardware development have produced some guidelines on how to use front-loaded information to achieve architectural modularity. In hardware, Eppinger and his colleagues have used Steward’s design structure matrix (DSM) to examine the effects of different product decompositions into design components [19]; the DSM quantifies the degree of interactions between components and provides a tool for analyzing alternative decompositions to minimize the likelihood of design iteration. Ulrich and Tung [20] posit some basic principles of product modularity for hardware, such as minimization of potential interactions.
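To make the idea of comparing decompositions concrete, the sketch below stores a small dependency matrix as a dictionary and counts the interactions that cross module boundaries for two candidate groupings. It is only an illustration of the principle of minimizing interactions: the element names, the example data, and the `cross_module_links` helper are hypothetical, and the simple count stands in for, rather than reproduces, the DSM analysis of [19].

```python
# Hypothetical DSM: DSM[a] is the set of elements that a needs information from.
DSM = {
    "tuner_driver": {"signal_decoder"},
    "signal_decoder": {"tuner_driver"},
    "menu_ui": {"remote_input"},
    "remote_input": set(),
    "pip_display": {"signal_decoder", "menu_ui"},
}


def cross_module_links(dsm: dict, modules: dict) -> int:
    """Count dependencies whose two ends sit in different modules."""
    owner = {}
    for name, members in modules.items():
        for element in members:
            owner[element] = name
    # Every element mentioned in the matrix must belong to some module.
    elements = set(dsm).union(*dsm.values())
    missing = elements - set(owner)
    if missing:
        raise ValueError(f"elements not assigned to a module: {sorted(missing)}")
    return sum(
        1
        for src, deps in dsm.items()
        for dst in deps
        if owner[src] != owner[dst]
    )


# Two candidate decompositions of the same five design elements.
BY_FUNCTION = {
    "signal": {"tuner_driver", "signal_decoder"},
    "ui": {"menu_ui", "remote_input"},
    "pip": {"pip_display"},
}
ARBITRARY = {
    "m1": {"tuner_driver", "menu_ui"},
    "m2": {"signal_decoder", "remote_input"},
    "m3": {"pip_display"},
}

if __name__ == "__main__":
    print("by function:", cross_module_links(DSM, BY_FUNCTION))
    print("arbitrary:  ", cross_module_links(DSM, ARBITRARY))
```

In this toy data the functional grouping leaves 2 cross-module dependencies and the arbitrary grouping leaves 5, so teams working on the second decomposition would need more coordination.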

Unfortunately, decomposing software requires more than a straightforward application of the techniques developed for hardware because, at this level, software and hardware are different. With hardware, there are natural physical decompositions that can be matched with component functions [20]; in software, the modules are defined in terms of the functions they perform or the data processed, and the physical (and visual) connection is missing. Software developers also maintain that the interfaces between components are more complex than in hardware and it is therefore difficult to capture all the potential interactions (in a DSM, for example). As a result, the module decomposition problem in software is a more complex, daunting problem.

In addition to module definition, another important tradeoff in modularization involves the size and number of modules. Breaking the problem into smaller modules increases overlapping, but also increases the complexity of the interfaces. In software, Brooks [21] observed that increasing the number of modules has adverse side effects: communication flows between teams working in parallel increase as a function of the square of the number of teams. We confirmed this in our field interviews with software developers who stated that as parallel activity in coding increases, the probability of interface errors and integration problems also increases, expanding the overall project completion time [12]. This empirical observation suggests that there are practical limits to the effectiveness of within-stage concurrency. We verified this by carrying out a model-based study to prove the existence of and quantify limits to within-stage concurrency [22]. We constructed a simple model of stage decomposition into n modules and showed that, when effects of communication complexity and rework probability due to interface errors are factored in, there is an optimal module decomposition, n*, beyond which additional subdivision increases expected completion time.
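The existence of an optimum can be illustrated with a toy cost function. The functional form below is an assumption made for this sketch and is not the model of [22]: design work W is split evenly over n parallel modules, each of the n(n-1)/2 pairwise communication paths adds a coordination cost c, and each path carries an interface error with probability q that costs r in rework, giving T(n) = W/n + (c + q·r)·n(n-1)/2. All parameter names and values are hypothetical.

```python
def expected_time(n: int, work: float, coord_cost: float,
                  error_prob: float, rework_cost: float) -> float:
    """Assumed expected completion time for a stage split into n modules."""
    if n < 1:
        raise ValueError("n must be at least 1")
    paths = n * (n - 1) / 2  # pairwise communication paths between teams
    overhead_per_path = coord_cost + error_prob * rework_cost
    return work / n + overhead_per_path * paths


def best_decomposition(max_modules: int, **params: float) -> tuple:
    """Scan n = 1..max_modules and return (n_star, expected time at n_star)."""
    n_star = min(range(1, max_modules + 1),
                 key=lambda n: expected_time(n, **params))
    return n_star, expected_time(n_star, **params)


# Illustrative parameter values only.
PARAMS = dict(work=120.0, coord_cost=0.5, error_prob=0.125, rework_cost=4.0)

if __name__ == "__main__":
    for n in range(1, 9):
        print(n, round(expected_time(n, **PARAMS), 2))
    print("n* =", best_decomposition(12, **PARAMS))
```

For these values the expected time falls from 120 at n = 1 to 34 at n = 5 and then rises again (35 at n = 6), so n* = 5. Lower per-path overhead, which is what better architectural modularity aims for, moves n* upward.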

Increasing the level of within-stage concurrent design activity clearly increases the risk of integration and test failures, creating additional information management challenges. The goal of architectural modularity is to minimize the need for communication among modules, but to the extent that this is not achieved and communication needs rise, management’s priorities shift to practices such as synchronicity. The need for synchronicity to control communication flows increases in direct proportion to the lack of success in module definition, or architectural modularity. For synchronicity, the key information support activity then becomes two-way high bandwidth information flows.

B. Across Project Overlap

Software rarely exists in isolation. Like hardware, software is updated and redesigned, and elements are reused in new products. For example, consumer electronics firms such as Philips and Sony introduce new color TVs annually with some hardware and software used in multiple models and essentially unchanged over several years. At the same time, these firms are carrying out parallel development projects for features that will be introduced in different years. Projects have genealogical links to predecessors.

How can you achieve concurrent design activity across projects whose development cycles do not overlap? Design reuse is the answer. When a component is reused in a subsequent product, the original design work for a component is a form of virtual concurrency because in the initial effort, one is simultaneously doing the design work for all future products in which that component is used.

Development managers recognize the importance of reuse and the payoff that it can bring in terms of time compression, productivity, and quality. The trick is in getting it. Reuse is difficult to achieve because it requires stability, whereas product development is an environment of constant change, and this tends to scuttle most reuse programs. Although external market pressures or technological breakthroughs are important drivers of change, another cause of change is the designers’ view of their job as an agent of change. One European manager of telecommunications software described the problem:

Our engineers are trained from the time they are at the university to design new things. Reusing old designs goes against their natural inclination to change, to improve.

Nevertheless, he went on to explain that reuse is important. When asked how he encouraged reuse in such a culture, he said:

I do not give them enough time to develop new solutions and new code. They have to reuse whenever possible.

Most software reuse strategies that have been described to us are reactive: reuse occurs more by accident and imposed time constraints than as a planned process. A German electronics manufacturer outlined their strategy for concurrent projects as follows [ 121:

We plan on product releases every nine months. Our metaphor is a train. Every nine months the train leaves the station. Development of features proceeds concurrently and if the software is ready, it goes on the train. Otherwise it waits for the next one to leave the station.
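The train metaphor amounts to a simple scheduling rule, sketched below. The nine-month interval comes from the manager's description; the function name, the feature names, and the readiness dates are hypothetical, and the assumption that the first train leaves at month 9 is ours.

```python
import math


def departure_month(ready_month: float, interval: int = 9) -> int:
    """Month of the first release train leaving at or after ready_month."""
    if ready_month < 0 or interval <= 0:
        raise ValueError("ready_month must be >= 0 and interval must be > 0")
    # Trains are assumed to leave at months interval, 2 * interval, and so on.
    trains_elapsed = max(1, math.ceil(ready_month / interval))
    return trains_elapsed * interval


if __name__ == "__main__":
    # Hypothetical features and the month in which each becomes ready.
    features = {"picture_in_picture": 7, "on_screen_menu": 9, "teletext": 10}
    for name, ready in features.items():
        print(f"{name}: ready month {ready}, ships month {departure_month(ready)}")
```

A feature that is ready in month 7 or 9 ships in month 9, while one ready in month 10 waits until month 18, which shows how a fixed cadence can impose a delay of almost a full interval on a feature that misses a train.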

Planned, proactive reuse is a management responsibility: providing a clear vision of requirements for future products that sustains stable objects and platforms. To foster reuse, the key design practice is the same as for within-stage concurrency (architectural modularity), but for a somewhat different reason. Across projects, architectural modularity is needed to provide stable, well-defined objects that are robust enough to transfer across platforms and to withstand changes in other objects. (Within stages, architectural modularity is needed for independence of objects so work can go on simultaneously with little intervention.)

As shown in Fig. 4, the key information flow necessary for achieving architectural modularity is front loading. Across projects, front-loaded information benefits designers by providing good forecasts of the form and requirements for future modules. This information flow helps them develop more robust and reusable designs. Research by Sanderson and Uzmeri [23] on the Sony Walkman suggests that Sony’s ability to spin off so many products from one platform was due to a product architecture designed around reusable modules.

To achieve planned reuse, many practitioners expect object-oriented (O-O) programming to be the “silver bullet.” Hardware reuse is based on stable, well-defined components, and the analog in software is an object with precisely defined interfaces and functionality. However, better tools may provide only a partial solution. Many managers report frustration with their failure to control change across products and an inability to design stable modules: either the interfaces are unstable due to product changes or different functionality is required. The problem, they admit, lies not in the tools, but in the management of those tools and a failure to provide the incentives and vision that support architectural modularity. To encourage concurrency across projects, management must also establish incentives so that designers are rewarded for creating reusable modules.

C. Across-Stage Overlap

In this section we return to the issue of concurrency within an individual project and explore the overlap of activities across development stages.

Few software firms attempt this type of across-stage concurrency. Most of the firms we surveyed follow some version of the sequential Waterfall model [12], confirming an observation that has been made by numerous researchers on software development [14]-[16]. U.S. Department of Defense contractors have long used the Waterfall model because their customer required it [16], and others use it because a sequential process is easier to manage.

Many software managers avoid concurrent activity across stages because of risk aversion. In interviews, managers stated that it is counterproductive to overlap the early stages of software development. Beginning high-level design activities before requirements definition has stabilized, they argue, increases the risk that changing specifications will require redesign, and the cost of reworking a stage can be exorbitant. The manager at a large German electronics firm stated emphatically that stage overlap was incompatible with software development due to the instability of early stages. Eppinger [19] notes:

. . . stage overlap succeeds in reducing overall task time only when earlier iteration (overlapping) eliminates later iteration (feedback) which would have taken longer.

Examples from hardware development reported by Clark and Fujimoto [17] show a strong correlation between the degree of stage overlap and shorter development cycles. Some software developers take the risk, reasoning that letting modules progress to the next stage is too advantageous in terms of time gained to forgo. One defense contractor, obligated to follow a sequential model, admitted to us that they circumvent the constraint by justifying early coding as mere “prototyping,” which is allowed.
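The condition stated in the quotation from Eppinger can be made concrete with a toy calculation. The sketch below is not a model from the paper: it assumes that overlap adds some early iteration and in exchange removes part of the late feedback rework, and all durations (in weeks) and function names are hypothetical.

```python
def sequential_time(upstream, downstream, late_feedback):
    """Total time when stages run in sequence and rework arrives late."""
    return upstream + downstream + late_feedback


def overlapped_time(upstream, downstream, early_iteration, remaining_feedback):
    """Total time when overlap adds early iteration and leaves some late rework."""
    return upstream + downstream + early_iteration + remaining_feedback


def overlap_pays_off(early_iteration, late_feedback, remaining_feedback):
    """True only if the late rework eliminated exceeds the early iteration added."""
    eliminated = late_feedback - remaining_feedback
    return eliminated > early_iteration


# Hypothetical project: 10 weeks of design, 20 weeks of coding, 8 weeks of late rework.
baseline = sequential_time(10, 20, 8)

# Stable requirements: 3 weeks of early iteration remove all but 1 week of rework.
stable = overlapped_time(10, 20, 3, 1)

# Volatile requirements: 6 weeks of early iteration still leave 5 weeks of rework.
volatile = overlapped_time(10, 20, 6, 5)

print(baseline, stable, volatile)
print(overlap_pays_off(3, 8, 1), overlap_pays_off(6, 8, 5))
```

Under these assumed numbers, overlap shortens the project only in the stable case, which is the situation the risk-averse managers quoted above do not expect to find in the early stages.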

What is the key activity that supports concurrency across stages? Synchronicity, rather than architectural modularity, is critical because, when coordinating activity across stages, interdependencies cannot be eliminated; they must be managed. To facilitate synchronicity, all three types of information concurrency are important (see Fig. 4). Two-way high bandwidth flows are needed to keep the process from getting “out-of-sync” and to compress the time between occurrence and detection of problems. A forward flow of information downstream, or flying start, is needed to begin work on downstream stages. Front loading is critical in the requirements definition and high-level design stages to firm up product requirements; user requirements tend to be volatile, and changes in specifications are one of the chief causes of project delay and rework.

Microsoft is a case in point of how software firms can employ across-stage overlap. Cusumano [24] reports that, in an effort to reduce the time-to-market for large software applications, Microsoft actively promotes management practices to overlap across stages. Having abandoned the sequential Waterfall model, they have adopted as management practice a procedure to “synchronize and stabilize.” Specifications, development, and testing are all carried out in parallel, but they synchronize with daily builds.

Some software organizations use tools to overlap activities in the early stages of the process. One popular technique for front loading of requirements is rapid prototyping, which presents a potential user with a realistic interface whose functionality is simulated. In a recent survey of European software developers, we found that the faster, more productive firms exhibited a heavier reliance on prototyping. Quality function deployment (QFD) is another front loading technique designed to translate the voice of the customer into precise design requirements [25]. In our interviews with developers, we found QFD much more prevalent in hardware than software. Awareness of the technique is growing among software developers, but few firms in the United States and Europe have implemented it; the common response is that they are “studying it.” Software’s approach to QFD mirrors its approach to CE in general: it tends to lag hardware in its adoption of techniques to support overlapping activity.

D. Hardware/Software Overlap

Increasingly, software is embedded into a larger system which integrates hardware and software. Examples are numerous and range from a small integrated circuit that controls an amplifier to an automated air defense system. In an integrated system of software and hardware, the complex interdependencies create coordination problems that can cause the lead time to exceed that required for the independent design of subsystems.

Delaying the integration of hardware and software until the first testable hardware prototype reduces system quality and extends development time. As Morris and Fornell [26] note:

Bringing hardware and software together at this late state is troublesome for several reasons. Engineers have little time to correct design problems, and fixes are more costly than they are earlier in the design process. Options for revisions are much more limited; because of the rigidity of the hardware design changes are usually made in the software, at the expense of system performance.

With embedded software, interaction is often limited to the passing of requirements from the hardware designers to software engineers. Those requirements tend to change frequently, frustrating the software engineers who grow weary of “those hardware guys that always come up with more changes.” Although one-way information flows are common in a traditional, sequential development process, they squelch concurrent activity.

To achieve CSE, active project management is needed to coordinate hardware and software design efforts, that is, synchronicity. As with across-stage concurrency, all three forms of information concurrency are required to support an interactive parallel process. Front loading is critical for up-front involvement of software concerns in hardware design; two-way high bandwidth communication can provide insight into the origins of changing requirements so that developers can learn to predict changes (flying start).

Our interviews and surveys of software managers [11], [12] suggest that the major obstacle to concurrent development of firmware is changing requirements. Because of the complex software/hardware interfaces, changes in either one usually translate into changes in the other. Long, repeated rework cycles are the chief cause of cost and time overruns. Although these problems certainly occur in software (or hardware) designed alone, the interdependencies in firmware increase both the frequency and severity of problems. As is the case with stage overlap, two-way high bandwidth information transfer can provide early detection of interface problems. This may not, in itself, decrease the frequency of quality problems, but it can reduce their severity by locating them closer to the source and preventing the “spread of infection” to other parts of the design.

Since instability of requirements specifications is a major problem, CSE can address it through simultaneous development of hardware and software requirements. QFD is used to reveal the interdependencies between customer needs and specifications of the hardware and software systems; it is a form of front loading and two-way information transfer because it connects the “voice of the customer” with the team members responsible for determining hardware and software requirements.

V. CONCLUSION

In hardware development, CE has become a natural activity. Accumulating evidence suggests that CE practices can reduce development cycle times, lower costs and improve design quality [27], [28]. Our survey research indicates that some of the principles are being cautiously adopted in software, but that the full potential of CE is not being realized. Although concurrency is common within stages, particularly coding and testing, its use diminishes as one moves across stages, across the hardware-software interface or across platforms. Concurrency-supporting practices such as reuse and CASE tools are still managed on an ad hoc and reactive basis.

Concurrency occurs neither automatically nor by management decree. It is an all-encompassing project management practice requiring vision and leadership; synergy is gained from a systematic application of tools and practices by everyone involved. Our interviews suggest that the responsibilities of management to actively manage the process are not obvious to software developers. The software development process is still predominantly sequential, rather than concurrent.

The objective of this paper is to provide a coherent framework for CSE to move from ad hoc, reactive practices to proactive project management. Our framework, as shown in Fig. 4 and Table I, presents a hierarchy of concurrent activity and shows how information concurrency supports each level of activity concurrency through practices of architectural modularity and synchronicity.

Architectural modularity is the critical enabler for managing concurrency within stage and across projects and platforms, and thus the key information flow is front loading. This implies that management must stimulate early involvement by downstream functions to promote concurrency at these two activity levels. Careful attention to the definition of modules (or objects) yields short-term gains through within-stage overlapping and long-term productivity through reuse. Managers must also remain aware of the risk that excessively fine-grained modularization can yield diminishing returns by increasing the development time.
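The warning about excessively fine-grained modularization can be illustrated with a toy model. The functional form below is an assumption made for this sketch and is not taken from the paper: parallel work shrinks as the work is split over more modules, while a hypothetical coordination overhead grows linearly with the number of modules. The figures (120 weeks of work, 2 weeks of overhead per module) are likewise invented.

```python
def development_time(total_work, modules, overhead_per_module):
    """Assumed model: parallel work per module plus linear coordination overhead."""
    return total_work / modules + overhead_per_module * modules


# Hypothetical project: 120 weeks of work, 2 weeks of coordination per module.
times = {n: development_time(120.0, n, 2.0) for n in (1, 2, 4, 8, 16, 32)}

# Module count with the shortest development time among those tried.
best = min(times, key=times.get)

for n, weeks in times.items():
    print(f"{n:2d} modules: {weeks:6.2f} weeks")
print("shortest with", best, "modules")
```

Under these assumptions, splitting the work helps up to a point and then hurts: beyond the best module count the coordination term dominates and development time rises again.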

Synchronicity is the key management practice that links information and activity concurrency across stages and the hardware/software interface. Our empirical research indicates that concurrency across stages in software is much less prevalent than within stage because many managers are unwilling to assume the risk. When carrying out concurrent activity across stages and across the hardware/software interface, all the information flows (front loading, flying start, and two-way high bandwidth) must be coordinated among the development team; this requires active, hands-on management and a higher level of involvement than is needed to promote architectural modularity. However, research on hardware indicates that firms can dramatically reduce their development times by learning to overlap activity across stages. The payoff from concurrency comes with a price: the project manager’s job becomes more difficult. Project managers have a heavier responsibility to coordinate the information flows that support the different levels of concurrent activity.

The most formidable barrier to implementing concurrency in software appears to be inertia based on the belief that “software is different.” Our purpose in outlining a CSE methodology is to show that, even though the task may be more difficult, software can benefit greatly from applying the tools and techniques for fast product development that are being established in hardware. Hardware development provides a good learning model, and the firms most likely to achieve sustainable advantages in software are the ones that stress the similarities and apply CE principles to their software development.

REFERENCES

[1] S. R. Schach, Practical Software Engineering. Homewood, IL: Irwin, 1992.

[2] M. Van Genuchten, “Why is software late? An empirical study of reasons for delay in software development,” IEEE Trans. Software Eng., vol. 17, pp. 582-590, 1991.

[3] H. Takeuchi and I. Nonaka, “The new new product development game,” Harvard Bus. Rev., vol. 64, no. 1, pp. 137-146, 1986.

[4] A. Rosenblatt and G. F. Watson, Eds., “Concurrent engineering,” IEEE Spectrum, pp. 22-37, July 1991.

[5] W. W. Royce, “Managing the development of large software systems: Concepts and techniques,” in Proc. WESCON, 1970.

[6] B. W. Boehm, “A spiral model of software development and enhancement,” IEEE Computer, 1988.

[7] W. S. Humphrey et al., “A method for assessing the software engineering capability of contractors,” Software Engineering Institute, Pittsburgh, PA, Feb. 1989.

[8] M. C. Paulk, B. Curtis, M. B. Chrissis, and C. V. Weber, Capability Maturity Model for Software, version 1.1, Software Engineering Institute, Pittsburgh, PA, 1993.

[9] M. Cusumano, Japan’s Software Factories: A Challenge to U.S. Management. Oxford, U.K.: Oxford Univ. Press, 1991.

[10] K. Swanson, D. McComb, J. Smith, and D. McCubbrey, “The application software factory: Applying total quality techniques to systems development,” MIS Quart., Dec. 1991.

[11] J. Blackburn, G. Scudder, L. N. van Wassenhove, and C. Hill, “Time-based software development,” in Proc. 1st Europe. OMA Manufact. Strategy Conf., Cambridge, U.K., 1994.

[12] J. D. Blackburn, G. Hoedemaker, and L. N. van Wassenhove, “Interviews with product development managers,” Vanderbilt Univ., Owen Graduate School of Manage. Working Paper, 1992.

[13] R. T. Yeh, “Notes on concurrent engineering,” IEEE Trans. Knowledge and Data Eng., vol. 4, pp. 407-414, Oct. 1992.

[14] W. S. Humphrey, Managing the Software Process. Reading, MA: Addison-Wesley, 1990.

[15] E. Carmel, “Cycle time in packaged software firms,” J. Prod. Innov. Manage., vol. 12, pp. 110-123, 1995.

[16] R. B. Rowen, “Software project management under incomplete and ambiguous specifications,” IEEE Trans. Eng. Manage., vol. 37, pp. 10-21, Feb. 1990.

[17] K. B. Clark and T. Fujimoto, “Overlapping problem solving in product development,” Managing International Manufacturing, K. Ferdows, Ed. New York: North-Holland, 1989.

[18] J. D. Blackburn, Ed., Time-Based Competition: The Next Battleground in American Manufacturing. Homewood: Business One Irwin, 1991.

[19] R. P. Smith and S. D. Eppinger, “Identifying controlling features of engineering design iteration,” MIT Int. Center for Res. on the Manage. of Technol., working paper no. 72-92, Sept. 1992.

[20] K. T. Ulrich and K. Tung, Fundamentals of Product Modularity, MIT Sloan School, WP No. 3335-91-MSA, Sept. 1991.

[21] F. P. Brooks Jr., The Mythical Man-Month: Essays on Software Engineering. Reading, MA: Addison-Wesley, 1975.

[22] G. M. Hoedemaker, L. N. Van Wassenhove, and J. D. Blackburn, “Mathematics of concurrency: An investigation of the limits to simultaneous operations,” INSEAD Working Paper, May 1993.

[23] S. W. Sanderson and V. Uzmeri, “Strategies for new product development and renewal: Design based incrementalism,” Center for Science and Technology Policy Working Paper, Rensselaer Polytechnic Inst., 1991.

[24] M. Cusumano, “Microsoft Research seminar on a forthcoming book,” MIT Operations Summer Camp Conf., July 1995.

[25] R. Thackeray and G. van Treeck, “Applying quality function deployment for software product development,” J. Eng. Design, vol. 1, no. 4, 1990.

[26] R. Morris and P. Fornell, “Linking software and hardware design,” High Perform. Syst., June 1990.

[27] J. R. Hartley, Concurrent Engineering: Shortening Lead Times, Raising Quality and Lowering Costs. Cambridge, MA: Productivity Press, 1992.

[28] A. Rosenblatt and G. F. Watson, “Special report: Concurrent engineering,” IEEE Spectrum, vol. 28, no. 7, pp. 22-44, July 1991.

Geert Hoedemaker received the degree in operations research from Erasmus University, Rotterdam, The Netherlands.

He was a Research Assistant at INSEAD, France. He is presently with the Delta Lloyd Insurance Group of the Netherlands.

Joseph D. Blackburn received the B.S. degree from Vanderbilt University, Nashville, TN, the M.S. degree from the University of Wisconsin, Madison, and the Ph.D. degree in operations research from Stanford University, Stanford, CA.

He is the James A. Speyer Professor of Production Management in the Owen Graduate School of Management, Vanderbilt University. He has been a Faculty Member of the University of Chicago and Boston University and has been a Visiting Professor at Stanford University, the Katholieke Universiteit Leuven, Belgium, and the Australian Graduate School of Management, Sydney. His book, Time-Based Competition: The Next Battleground in American Manufacturing, was published in 1991. He is a Senior Editor of Manufacturing and Service Operations Management and serves on the editorial boards of The Journal of Operations Management, Technology and Operations Review, and The Production and Operations Management Journal. His current research interests are time-based competition and new product development.

Luk N. Van Wassenhove received the M.S. degrees in mechanical engineering and industrial management, and the Ph.D. degree in operations management and operations research from the Katholieke Universiteit, Leuven, Belgium.

He is presently Full Professor and Area Coordinator of the Technology Management Department at INSEAD, Fontainebleau, France. His prior work experience includes appointments at the Katholieke Universiteit and Erasmus Universiteit, Rotterdam, The Netherlands. His research interests focus on integrated supply chain management, time compression in new product development and quality, continual improvement, and learning on the factory level.