Cmsc411(Pascuaword Report)


Knowledge-Based Systems

CHAPTER 16

In Partial Fulfillment for the Requirements in

CMSC 411

Management Information System

Submitted to:

Ms. Susie Dainty B. Rivera

Submitted by:

Mannilou M. Pascua

Republic of the Philippines

LAGUNA STATE POLYTECHNIC UNIVERSITY

Siniloan, (Host) Campus

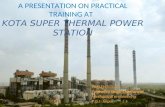

Figure 16.10 Prototyping is Incorporated in the Development of an Expert System

[Figure 16.10 diagram: the system analyst, the expert, and the user share eight development steps, which include studying the problem domain, defining the problem, specifying the rule set, constructing the interface, testing the prototype system, conducting user tests, using the system, and maintaining the system. Two feedback paths labeled "Need to redesign" lead back to earlier steps.]

Expert System Maintenance

An expert system must be maintained just like any other CBIS subsystem, and this is accomplished in Step 8. Changes are made that enable the expert system to reflect the changing nature of the problem domain and to achieve greater efficiency.

Sample Expert System

Expert system activity in business began in the early 1980s. Since that time, such systems have been developed for a wide variety of application areas. As shown in Figure 16.11, the financial area of the firm has seen the highest level of activity. An example of a financial expert system is the credit approval system developed by professors Venkat Srinivasan of Northeastern University, Boston, and Young H. Kim of the University of Cincinnati, who worked with a Fortune 500 company that we will identify as SRR.

SRR’s credit policy consists of two activities: (1) setting credit limits for new customers and reviewing them once a year, and (2) handling exceptions on a daily basis. Srinivasan and Kim interviewed credit managers and observed credit analysts making credit decisions. A senior credit manager served as the expert.

The Knowledge Base

The knowledge base of the expert system consists of two components: (1) rules that reflect the credit approval logic, and (2) a mathematical model that determines the credit limit.

The User Interface

As the inference engine proceeds through the rule set in a forward-chaining manner, the credit analyst is asked to make pairwise comparisons. For example, the inference engine might display the prompt:

What is the relative importance of Customer Background over Payment Record if the objective is to improve overall credit performance?

The credit analyst enters a code that reflects the comparison, and the forward chaining proceeds. When the chaining is completed, the output appears as a series of screens.
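The pairwise comparisons above are the input to the Analytic Hierarchy Process (AHP), whose "intensity values" appear in the output screens. A minimal sketch of how pairwise judgments can be turned into category weights follows. It assumes Saaty's reciprocal judgment matrix and uses the row geometric-mean approximation rather than the principal-eigenvector method; the function name `ahp_priorities` and the judgments themselves are hypothetical, not SRR's data.

```python
import math


def ahp_priorities(matrix):
    """Approximate AHP priority weights with the row geometric-mean method.

    matrix[i][j] is the judged importance of criterion i over criterion j,
    and matrix[j][i] is assumed to be 1 / matrix[i][j].
    """
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]  # normalize so the weights sum to 1


# Hypothetical judgments for three categories (illustration only).
criteria = ["Financial Strength", "Payment Record", "Customer Background"]
judgments = [
    [1.0, 2.0, 4.0],   # Financial Strength is 2x Payment Record, 4x Background
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]

for name, weight in zip(criteria, ahp_priorities(judgments)):
    print(f"{name}: {weight:.3f}")
```

Because these judgments are perfectly consistent, the weights come out in the ratio 4 : 2 : 1. Real analyst judgments are rarely that consistent, which is why full AHP implementations also compute a consistency ratio.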

Table 16.1 Weightings of the Information Categories

Category                  $5,000-$20,000    $20,000-$50,000
Financial Strength        0.65              0.70
Payment Record            0.18              0.20
Customer Background       0.10              0.05
Geographical Locations    0.05              0.03
Business Potential        0.02              0.02
Total                     1.00              1.00

Source: Venkat Srinivasan and Young H. Kim, “Designing Expert Financial System: A Case Study of Corporate Credit Management,” Financial Management 17 (Autumn 1988): 41. Used with permission.

The expert system then explains how it arrived at its conclusion. The second screen in Figure 16.13 shows how the Good rating on pay experience was derived. The AHP Intensity Value is a score computed by the mathematical model.

Profitability
If Sales trend is Improving
And Customer’s net profit margin is Greater than 5%
And Customer’s net profit margin trend is Improving
And Customer’s gross margin is Greater than 12%
And Customer’s gross profit margin trend is Improving
Then Customer’s profitability is Excellent

Liquidity
If Sales trend is Improving
And Customer’s current ratio is Greater than 1.50
And Customer’s current ratio trend is Increasing
And Customer’s quick ratio is Greater than 0.80
And Customer’s quick ratio trend is Increasing
Then Customer’s liquidity is Excellent

Debt Management
If Sales trend is Improving
And Customer’s debt to net worth ratio is Less than 0.30
And Customer’s debt to net worth trend is Decreasing
And Customer’s short-term debt to total debt is Less than 0.40
And Customer’s short-term debt to total debt trend is Decreasing
And Customer’s interest coverage is Greater than 4.0
Then Customer’s debt exposure is Excellent

Overall Financial Health
If Customer’s profitability is Excellent
And Customer’s liquidity is Excellent
And Customer’s debt exposure is Excellent
Then Customer’s financial health is Excellent

Figure 16.12 Sample Rules
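The sample rules can be exercised with a small forward-chaining loop: rules whose conditions are satisfied by the known facts fire and add their conclusions as new facts, and the cycle repeats until nothing new is concluded. This is a minimal sketch, not SRR's system or any particular expert system shell. The attribute names, the `forward_chain` function, and the customer figures are hypothetical, and percentages are written as fractions (5% as 0.05).

```python
import operator

# Figure 16.12 rules as (name, conditions, conclusion).
# Each condition is (attribute, comparison, value).
RULES = [
    ("Profitability",
     [("sales_trend", operator.eq, "Improving"),
      ("net_profit_margin", operator.gt, 0.05),
      ("net_profit_margin_trend", operator.eq, "Improving"),
      ("gross_margin", operator.gt, 0.12),
      ("gross_margin_trend", operator.eq, "Improving")],
     ("profitability", "Excellent")),
    ("Liquidity",
     [("sales_trend", operator.eq, "Improving"),
      ("current_ratio", operator.gt, 1.50),
      ("current_ratio_trend", operator.eq, "Increasing"),
      ("quick_ratio", operator.gt, 0.80),
      ("quick_ratio_trend", operator.eq, "Increasing")],
     ("liquidity", "Excellent")),
    ("Debt Management",
     [("sales_trend", operator.eq, "Improving"),
      ("debt_to_net_worth", operator.lt, 0.30),
      ("debt_to_net_worth_trend", operator.eq, "Decreasing"),
      ("short_term_to_total_debt", operator.lt, 0.40),
      ("short_term_to_total_debt_trend", operator.eq, "Decreasing"),
      ("interest_coverage", operator.gt, 4.0)],
     ("debt_exposure", "Excellent")),
    ("Overall Financial Health",
     [("profitability", operator.eq, "Excellent"),
      ("liquidity", operator.eq, "Excellent"),
      ("debt_exposure", operator.eq, "Excellent")],
     ("financial_health", "Excellent")),
]


def forward_chain(facts, rules):
    """Fire every rule whose conditions hold, repeating until nothing new is concluded."""
    facts = dict(facts)  # work on a copy of the customer data
    fired = []
    changed = True
    while changed:
        changed = False
        for name, conditions, (attribute, value) in rules:
            if attribute in facts:
                continue  # this conclusion is already known
            if all(attr in facts and compare(facts[attr], limit)
                   for attr, compare, limit in conditions):
                facts[attribute] = value
                fired.append(name)
                changed = True
    return facts, fired


# Hypothetical customer figures, invented for illustration.
customer = {
    "sales_trend": "Improving",
    "net_profit_margin": 0.07, "net_profit_margin_trend": "Improving",
    "gross_margin": 0.15, "gross_margin_trend": "Improving",
    "current_ratio": 1.8, "current_ratio_trend": "Increasing",
    "quick_ratio": 0.9, "quick_ratio_trend": "Increasing",
    "debt_to_net_worth": 0.25, "debt_to_net_worth_trend": "Decreasing",
    "short_term_to_total_debt": 0.35, "short_term_to_total_debt_trend": "Decreasing",
    "interest_coverage": 5.0,
}

facts, fired = forward_chain(customer, RULES)
print(fired)
print(facts.get("financial_health"))
```

Because the loop repeats until no rule fires, the Overall Financial Health rule would still fire even if it were listed first. That data-driven behavior is what distinguishes forward chaining. The figure shows only rules that conclude Excellent, so when any test fails this sketch simply draws no conclusion for that category.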

The credit analyst is able to display such screens as these, which explain the logic followed by the expert system in making the credit decision.

CREDIT ANALYSIS FOR: Ace Toys, Inc., 3001 Silver Hill Road, Natick, MA 01760

Credit Need: $38,000

Existing Line: $0

Suggested Line: $0

OVERALL CONCLUSIONS:
Pay Experience: Good
Customer Background: Good
Bank: Good
Financial Strength: Poor

NARRATIVE: PAY EXPERIENCE

Customer’s pay habits are good. Pay to SRR has been mostly within terms, and pay to trade is excellent. Focus on collection efforts to bring pay to SRR up to par with trade pay.

Rule: If Pay to SRR is Good
And Pay to trade is Excellent
Then Customer’s pay experience is Good
(AHP intensity value = 7)

Figure 16.13 Output Screens

Advantages and Disadvantages of Expert Systems

As with all computer applications, expert systems offer some real advantages, but there are also disadvantages. The advantages can accrue to both managers and the firm.

The Advantages of Expert Systems to Managers

Managers use expert systems with the intention of improving their decision-making. The improvement comes from being able to:

Consider More Alternatives. An expert system can enable a manager to consider more alternatives in the process of solving a problem. For example, a financial manager who has been able to track the performance of only thirty stocks, because of the volume of data that must be considered, can track 3,000 with the help of an expert system. By considering a greater number of possible investment opportunities, the manager increases the likelihood of selecting the best ones.

Apply a Higher Level of Logic. A manager using an expert system can apply the same logic as that of a leading expert in the field.

Devote More Time to Evaluating Decision Results. The manager can obtain advice from the expert system quickly, leaving more time to weigh the possible results before action has to be taken.

Make More Consistent Decisions. Once the reasoning is programmed into the computer, the manager knows that the same solution process will be followed for each problem.

The Advantages of Expert Systems to the Firm

A firm that implements an expert system can expect the following benefits:

Better Performance for the Firm. As the firm’s managers extend their problem-solving abilities through the use of expert systems, the firm’s control mechanism is improved. The firm is better able to meet its objectives.

Control over the Firm’s Knowledge. Expert systems afford the opportunity to make experienced employees’ knowledge more available to newer, less experienced employees and to keep that knowledge in the firm longer, even after the employees have left.

The Disadvantages of Expert Systems

Two characteristics of expert systems limit their potential as a business problem-solving tool. First, they cannot handle inconsistent knowledge. This is a real disadvantage because, owing to the variability in human performance, few things in business hold true all the time. Second, expert systems cannot apply the judgment and intuition that are important ingredients when solving semistructured or unstructured problems.

How the Early Expert Systems Fared

Such was the case of the first expert systems that were built during the early and mid-1980s.

The XCON Success Story

The most thoroughly documented expert system success story is that of Digital Equipment Corporation (Digital) and its expert system called XCON. XCON was one of several expert systems developed by Digital, and it was used to validate the technical correctness of customer orders. The task was not an easy one, because there were more than 30,000 hardware and software parts that could be incorporated into a particular configuration. Evidence of the difficulty is the fact that the XCON knowledge base consisted of over 10,000 rules.

Of all the expert system success stories, XCON provided the best example. The savings to Digital in the form of reduced manufacturing costs were estimated to be $15 million.

Other successful efforts, although not so well publicized, were Exper-Tax by Coopers and Lybrand, and Authorizer’s Assistant by American Express.

The Rest of the Story

In an effort to learn the eventual outcome of the early expert systems efforts, T. Grandon Gill, an MIS professor at Florida Atlantic University, conducted a survey of ninety-seven expert systems, including XCON, which were built prior to 1988. The survey respondents included managers, developers, experts, users, and support personnel.

The responses revealed that fewer than one-third of the systems ever achieved widespread or universal use and that almost one-half had been abandoned. On the positive side, almost three-fourths achieved some usage during their life span, and, for more than a third of them, the firms are still making investments in improving or maintaining the systems.

Reasons for Expert System Failures

The survey respondents identified the following causes of failure:

1. The original task that the expert system was designed to perform had changed.

2. The cost of maintaining the expert system was too great.

3. The system became incompatible with other computer-based applications in the firm.

4. The firm changed its focus or direction.

5. The developers underestimated the size of the disk.

6. The system was developed to solve a problem that was not considered to be critical to the firm’s mission.

7. The system exposed the firm to legal liability.

8. Users resisted a system developed by outsiders.

9. Users refused to assume responsibility for maintaining the system.

10. Key development personnel were lost due to attrition.

None of these reasons was due to inadequate technology. Rather, responsibility can be assigned to the firms’ executives, information specialists, and users.

Keys to Successful Expert System Development

Using feedback from the survey respondents, Professor Gill identified five areas where the development projects could be improved.

1. Coordinate expert system development with the strategic business plan and the strategic plan for information resources.

2. Clearly define the problem to be solved, and thoroughly understand the problem domain.

3. Pay particular attention to the legal (and ethical) feasibility of the proposed system.

4. Fully understand both users’ concerns about the development project and their expectations of the operational system.

5. Employ management techniques designed to keep the attrition rate for developers within acceptable limits.

These are ingredients that should be incorporated in any development project.

Cause for Hope

Viewing this oversight in a positive light, developers of future projects should be encouraged to know that they can substantially enhance their chances of success simply by doing things right.

Another reason to expect that future expert system efforts will be more successful than the early ones is that the technology has changed in some respects. Not all new expert systems are being constructed from the same components as the early ones. A big breakthrough has been the neural network, or simply neural net, which makes it possible for a knowledge-based system to actually improve its performance over time. This valuable ability can provide the system with a certain measure of the judgment and intuition that make for good business decisions.

Neural Networks

A neural network, commonly called a neural net, is a mathematical model of the human brain that simulates the way that neurons interact to process data and learn from experience.

Neural net design is a bottom-up approach, since it looks at the physical brain for inspiration in the creation of intelligent behavior. In contrast are the top-down approaches that have been developed by proponents of the more traditional AI areas mentioned earlier.

Biological Comparisons

The design of neural networks has been inspired by the physical design of the human brain. The component of the brain that provides an information-processing capability is the neuron, which consists of dendrites, a soma, and an axon. Dendrites specialize in the input of electrochemical signals, the soma processes the signals, and the axon provides output paths for the processed signals. Figure 16.14 illustrates two neurons.

Dendrites form a dendritic tree, a very fine, branch-like region of thin fibers around the cell body. Dendrites are the input components of the cell. They receive the electrochemical impulses that are carried from the axons of neighboring neurons.

Axons are long fibers that carry signals from the soma. The end of the axon splits into a tree-like structure, and each branch terminates in a small end bulb that almost touches the dendrites of other neurons. The end bulb is called the synapse. Each neuron may be connected to a thousand or more neighbors via this network of dendrites and axons.

The soma is the processor component of the neuron. It is essentially a summation device that can respond to the total of its inputs within a short time period. The aggregation of signals is compared to an output threshold, which is the level of stimulation that is necessary for the neuron to fire or send an impulse along its axon to other connected neurons. The strength of the synaptic connection between the axon of the firing cell and the dendrite of the receiving cell determines the effect of the impulse.

Applying the Systems Approach

Input, processing, and output: that sequence can describe much, or perhaps most, of computing activity, and that includes human computing as well. The soma in the human brain is the processor. It receives inputs by means of dendrites and produces outputs by means of axons. In terms of the computer schematic, the soma is the “central processing unit,” the dendrites are the “input devices,” and the axons are the “output devices.”

Not only is the electronic computer a reflection of the systems approach; the human brain is as well.

Through this very simple mechanism, input signals from neighboring neurons can be assigned priorities or weights in the soma’s accumulation process. These weights most likely serve as storage or memory for the network.

Even though the response time for a single neuron is approximately a thousand times slower than the digital switches in a computer, the brain is capable of solving complex problems such as vision and language. This is accomplished by linking together a tremendous number of inherently slow neurons (processors) into an immensely complex network. The number of neurons in the human brain has been estimated to be around 10¹¹, and each neuron forms approximately 10⁴ synapses with other neurons. This is an example of parallel distributed processing (PDP), which allows each task to be broken down into a multitude of subtasks that are performed concurrently.

The Evolution of Artificial Neural Systems

Interest in modeling the human learning system can be traced back to the Chinese artisans as early as 200 B.C. However, most researchers consider the development of a simple neuron function by Warren McCulloch and Walter Pitts in the early 1940s as the real starting point.

The output from a McCulloch-Pitts neuron has a mathematical value equal to a weighted sum of inputs.
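Written with the labels that survive from Figure 16.15 (inputs y1 through yn-1, connection weights W1 through Wn-1, output Y), the weighted sum takes the form below; the index range is inferred from those figure labels.

$$
Y = \sum_{i=1}^{n-1} W_i \, y_i = W_1 y_1 + W_2 y_2 + \cdots + W_{n-1} y_{n-1}
$$

For example, with two hypothetical inputs y1 = 1 and y2 = 0 and weights W1 = 0.6 and W2 = 0.4, the output is Y = 0.6 × 1 + 0.4 × 0 = 0.6.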

Hebb’s Learning Law

One of the most famous learning rules was proposed in 1949 by Donald Hebb. Hebb’s learning law states that the more frequently one neuron contributes to the firing of a second, the more efficient will be the effect of the first on the second. Thus, memory is stored in the synaptic connections of the brain, and learning occurs with changes in the strength of these connections.
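Hebb stated his law in biological terms. A common mathematical reading, assumed here, strengthens each connection weight in proportion to the product of the sending neuron's activity and the receiving neuron's activity (Δw = rate × input × output). The sketch below applies that reading; the function name, the learning rate of 0.1, and the input pattern are hypothetical.

```python
def hebbian_update(weights, inputs, output, learning_rate=0.1):
    """Strengthen each connection in proportion to input activity times output activity."""
    return [w + learning_rate * x * output for w, x in zip(weights, inputs)]


weights = [0.0, 0.0, 0.0]
pattern = [1, 0, 1]  # inputs 0 and 2 are active, input 1 is silent

for _ in range(5):
    # Assume the receiving neuron fires (output 1) each time this pattern appears.
    weights = hebbian_update(weights, pattern, output=1)

print([round(w, 2) for w in weights])  # prints [0.5, 0.0, 0.5]
```

Only the connections that repeatedly took part in firing grow stronger; the silent input's weight stays at zero. Pure Hebbian growth is unbounded, so practical variants usually add decay or normalization.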

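[Figure 16.15 diagram: inputs y1, y2, y3, ..., yn-1 arrive over connections with weights W1, W2, W3, ..., Wn-1 and are combined into a single output Y.]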

Figure 16.15 A Single Artificial Neuron

The First Neurocomputers

In the early 1950s, Marvin Minsky developed a device called the Snark, which is considered by many to be the first neurocomputer, or computer-based analog of the human brain. Although the Snark was technically successful, it failed to perform any significant information processing function.

In the mid-1950s, Frank Rosenblatt, a neurophysicist at Cornell University, developed the Perceptron, a hardware device used for pattern recognition. Combined with a simple learning rule, the Perceptron was able to generalize and respond to unfamiliar input stimuli.

The success of Rosenblatt’s work fueled speculation that artificial brains were just around the corner. However, Marvin Minsky, an AI pioneer, and his colleague Seymour Papert demonstrated that Rosenblatt’s perceptrons could not solve some simple logic problems, such as the exclusive-or (XOR) function. Their demonstration put a temporary damper on neural net research.
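Both halves of the Perceptron story can be reproduced in a few lines. The sketch below uses the standard perceptron learning rule (each weight moves by rate × (target − output) × input) on a single threshold unit, a software stand-in for Rosenblatt's hardware. The function names, epoch count, and learning rate are hypothetical choices. The same unit learns logical AND but cannot learn exclusive-or (XOR), the classic example of a problem a single-layer perceptron cannot solve.

```python
def step(net):
    """Threshold transfer function: fire (1) only when the net input is positive."""
    return 1 if net > 0 else 0


def predict(weights, bias, inputs):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, epochs=50, learning_rate=1.0):
    """Perceptron learning rule: nudge each weight by rate * (target - output) * input."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, samples in [("AND", AND), ("XOR", XOR)]:
    weights, bias = train_perceptron(samples)
    correct = sum(predict(weights, bias, x) == t for x, t in samples)
    print(f"{name}: {correct} of {len(samples)} cases correct")
```

AND reaches 4 of 4 after a few epochs. XOR can never reach 4 of 4, however long it trains, because no single straight line separates its true cases from its false ones.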

The Artificial Neural System

The artificial neural system (ANS) is not an exact duplicate of the biological system of the human brain, but it does exhibit such abilities as generalization, learning, abstraction, and even intuition. An ANS is made up of a series of very simple artificial neuron structures, or neurodes. These structures are often referred to as perceptrons because of the influence of Rosenblatt. However, they are a direct extension of the mathematical model developed by McCulloch and Pitts.

These artificial neurons are the processing elements of the ANS architecture. The neuron sums the weighted inputs from its neighbors, compares this sum to its threshold value, and passes the result through a transfer function. The transfer function is a relationship between the weighted sum and the threshold value of the cell. When the weighted sum exceeds the threshold value, the neuron “fires.”
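The processing just described (weighted sum, comparison with a threshold, transfer function) fits in one small function. In this sketch the function names and numbers are hypothetical. The default step transfer function makes the neuron "fire" exactly when the weighted sum exceeds the threshold; the sigmoid is included as a common smooth alternative, an assumption beyond the text.

```python
import math


def step(net):
    """All-or-nothing transfer: fire (1.0) when the net input is positive."""
    return 1.0 if net > 0 else 0.0


def sigmoid(net):
    """Smooth transfer function often used instead of a hard step."""
    return 1.0 / (1.0 + math.exp(-net))


def neuron_output(inputs, weights, threshold, transfer=step):
    """Sum the weighted inputs, compare the sum with the threshold, apply the transfer function."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return transfer(weighted_sum - threshold)  # net > 0 means the sum exceeds the threshold


weights = [0.5, 0.9, 0.3]
print(neuron_output([1, 0, 1], weights, threshold=0.7))  # sum 0.8 exceeds 0.7, so it fires
print(neuron_output([0, 0, 1], weights, threshold=0.7))  # sum 0.3 does not, so it stays quiet
```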

The Multi-layer Perceptron

These simple neurons are combined to form a multi-layer ANS, referred to as a multi-layer perceptron. In such a network, the input nodes are linked to the output nodes through one or more hidden layers, as illustrated in Figure 16.16.

The multi-layer perceptron is a feedforward network, meaning that data flows in only a single direction, from the input layer to the output layer. However, the hidden layers permit an interaction between individual input nodes. This interaction allows a flexible mapping between inputs and outputs that facilitates their training.
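A feedforward pass through a tiny multi-layer perceptron shows how a hidden layer lets inputs interact. In the hedged sketch below, data moves only forward: inputs to two hidden step neurons, then to one output neuron. The weights are set by hand (hypothetical values, not learned). One hidden node acts as OR and the other as AND, and the output fires for "OR but not AND", which is XOR, the function a single-layer perceptron cannot compute.

```python
def step(net):
    return 1 if net > 0 else 0


def layer(inputs, weights, biases):
    """One feedforward layer: each node applies a step to its weighted sum plus bias."""
    return [step(sum(w * x for w, x in zip(node_w, inputs)) + b)
            for node_w, b in zip(weights, biases)]


# Hand-chosen weights: hidden node 1 behaves like OR, hidden node 2 like AND.
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_B = [-0.5, -1.5]
# Output node fires when OR is on and AND is off.
OUTPUT_W = [[1, -1]]
OUTPUT_B = [-0.5]


def forward(x):
    hidden = layer(x, HIDDEN_W, HIDDEN_B)      # input layer -> hidden layer
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]  # hidden layer -> output layer


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, "->", forward(x))
```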

Network Training

A neural net is not programmed in the traditional sense. Rather, it is trained by example. The training consists of many repetitions of inputs that express a variety of relationships. By progressively refining the weights of the system nodes (the simulated neurons), the ANS “discovers” the relationships among the inputs. This discovery process constitutes learning.
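The chapter does not say which training algorithm an ANS uses. As an illustration only, the sketch below trains a single sigmoid neuron by batch gradient descent on the log loss, a common technique; backpropagation extends the same idea to networks with hidden layers. The neuron repeatedly sees examples of logical OR and refines its weights until its outputs match. The OR data, epoch count, and learning rate are arbitrary choices, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, epochs=2000, learning_rate=1.0):
    """Refine weights by repeatedly presenting the examples (batch gradient descent)."""
    n = len(samples[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n
        grad_b = 0.0
        for inputs, target in samples:
            output = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
            error = output - target  # log-loss gradient with respect to the net input
            for i, x in enumerate(inputs):
                grad_w[i] += error * x
            grad_b += error
        # Move each weight a small step against its average gradient.
        weights = [w - learning_rate * g / len(samples) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(samples)
    return weights, bias


OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train(OR)
predictions = [1 if sigmoid(sum(w * x for w, x in zip(weights, inp)) + bias) > 0.5 else 0
               for inp, _ in OR]
print(predictions)
```

Nothing in the code states the OR rule; the relationship is "discovered" only through repeated exposure to the examples, which is the sense in which the network learns.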

Putting the Artificial Neural System in Perspective

The ability to learn based on adaptation is the major factor that distinguishes ANS from expert system applications. Expert systems are programmed to make inferences based on data that describes the problem environment. The ANS, on the other hand, is able to adjust the nodal weights in response to the inputs and, possibly, to the desired outputs.

Because of its learning ability, the ANS is insulated from the shortcomings that plague expert systems in terms of adapting to changing conditions. During the coming years, more and more expert systems will be developed that incorporate neural nets, giving the systems the combined ability to provide expert consultation and to improve their own expertise over time based on learning.

Putting Knowledge-Based Systems in Perspective

In 1956, when John McCarthy and his group coined the term artificial intelligence, almost everyone else in the newborn computer industry was struggling to solve well-structured problems like payroll and inventory. Since that time, computer and information scientists have continually pushed back the frontiers of knowledge in the AIS, MIS, DSS, and virtual office. Most of those challenges have been met, and the applications are doing a good job of keeping up with the technology. For example, when inroads are made in such technology areas as multimedia and compact disks, they are incorporated into system designs.
