Classification 021411

Uploaded by mihirsangani

8/7/2019 Classification 021411

Data Mining

Classification: Basic Concepts, Decision Trees, and Model Evaluation

Lecture Notes for Chapter 4

Introduction to Data Mining

by

Tan, Steinbach, Kumar

Tan,Steinbach, Kumar Introduction to Data Mining 4/18/2004 1

-

Tan,Steinbach, Kumar Introduction to Data Mining 8/05/2005 #

Classification: Definition

Given a collection of records (training set), each record is characterized by a tuple (x, y), where x is the attribute set and y is the class label

x: attribute, predictor, independent variable, input

y: class, response, dependent variable, output

Task: Learn a model that maps each attribute set x into one of the predefined class labels y

-

Examples of Classification Task

| Task | Attribute set, x | Class label, y |
|---|---|---|
| Categorizing email messages | Features extracted from email message header and content | spam or non-spam |
| Identifying tumor cells | Features extracted from MRI scans | malignant or benign cells |
| Cataloging galaxies | Features extracted from telescope images | Elliptical, spiral, or irregular-shaped galaxies |

-

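General Approach for Building Classification Model

(Figure: Learn Model from the training records; Apply Model to the test records, whose Class is unknown.)

| Tid | Attrib1 | Attrib2 | Attrib3 | Class |
|---|---|---|---|---|
| 1 | Yes | Large | 125K | No |
| 2 | No | Medium | 100K | No |
| 3 | No | Small | 70K | No |
| 4 | Yes | Medium | 120K | No |
| 5 | No | Large | 95K | Yes |
| 6 | No | Medium | 60K | No |
| 7 | Yes | Large | 220K | No |
| 8 | No | Small | 85K | Yes |
| 9 | No | Medium | 75K | No |
| 10 | No | Small | 90K | Yes |

| Tid | Attrib1 | Attrib2 | Attrib3 | Class |
|---|---|---|---|---|
| 11 | No | Small | 55K | ? |
| 12 | Yes | Medium | 80K | ? |
| 13 | Yes | Large | 110K | ? |
| 14 | No | Small | 95K | ? |
| 15 | No | Large | 67K | ? |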

-

Classification Techniques

Base Classifiers
- Decision Tree based Methods
- Rule-based Methods
- Nearest-neighbor
- Neural Networks
- Naïve Bayes and Bayesian Belief Networks
- Support Vector Machines

Ensemble Classifiers
- Boosting, Bagging, Random Forests

-

Example of a Decision Tree

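Training Data:

| ID | Home Owner | Marital Status | Annual Income | Defaulted Borrower |
|---|---|---|---|---|
| 1 | Yes | Single | 125K | No |
| 2 | No | Married | 100K | No |
| 3 | No | Single | 70K | No |
| 4 | Yes | Married | 120K | No |
| 5 | No | Divorced | 95K | Yes |
| 6 | No | Married | 60K | No |
| 7 | Yes | Divorced | 220K | No |
| 8 | No | Single | 85K | Yes |
| 9 | No | Married | 75K | No |
| 10 | No | Single | 90K | Yes |

Model: Decision Tree (splitting attributes: Home Owner, MarSt, Income)

- Home Owner = Yes: NO
- Home Owner = No: MarSt
  - MarSt = Married: NO
  - MarSt = Single, Divorced: Income
    - Income < 80K: NO
    - Income > 80K: YES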
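The tree above can be written as nested conditions and checked against the ten training records. This is a minimal sketch: the function name `classify_tree_a` and the tuple layout of the records are assumptions for illustration, incomes are in thousands, the leaf labels NO/YES are written as the Defaulted Borrower values "No"/"Yes", and sending an income of exactly 80K down the lower branch is an assumption because the figure only shows `< 80K` and `> 80K`.

```python
# Training data from the table above:
# (ID, Home Owner, Marital Status, Annual Income in K, Defaulted Borrower)
TRAINING = [
    (1, "Yes", "Single", 125, "No"),
    (2, "No", "Married", 100, "No"),
    (3, "No", "Single", 70, "No"),
    (4, "Yes", "Married", 120, "No"),
    (5, "No", "Divorced", 95, "Yes"),
    (6, "No", "Married", 60, "No"),
    (7, "Yes", "Divorced", 220, "No"),
    (8, "No", "Single", 85, "Yes"),
    (9, "No", "Married", 75, "No"),
    (10, "No", "Single", 90, "Yes"),
]


def classify_tree_a(home_owner: str, marital_status: str, income_k: float) -> str:
    """Walk the tree from the root (Home Owner) down to a leaf label."""
    if home_owner == "Yes":
        return "No"
    if marital_status == "Married":
        return "No"
    # Single or Divorced: test Annual Income (80K itself goes to the lower branch here)
    return "Yes" if income_k > 80 else "No"


# Apply the model to every training record and compare with the true label
for rid, home, status, income, label in TRAINING:
    predicted = classify_tree_a(home, status, income)
    print(rid, predicted, "ok" if predicted == label else "MISMATCH")
```

Every training record is classified correctly, which is what "the tree fits the training data" means here; it says nothing yet about accuracy on unseen records.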

-

Another Example of Decision Tree

- MarSt = Married: NO
- MarSt = Single, Divorced: Home Owner
  - Home Owner = Yes: NO
  - Home Owner = No: Income
    - Income < 80K: NO
    - Income > 80K: YES

There could be more than one tree that fits the same data!

(Training data: the same ten records as in the previous example.)
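A minimal sketch of this second tree, which tests Marital Status at the root instead of Home Owner, checked against the same ten training records. As before, the function name `classify_tree_b` and the record layout are assumptions, and an income of exactly 80K is sent down the lower branch.

```python
# (ID, Home Owner, Marital Status, Annual Income in K, Defaulted Borrower)
TRAINING = [
    (1, "Yes", "Single", 125, "No"), (2, "No", "Married", 100, "No"),
    (3, "No", "Single", 70, "No"), (4, "Yes", "Married", 120, "No"),
    (5, "No", "Divorced", 95, "Yes"), (6, "No", "Married", 60, "No"),
    (7, "Yes", "Divorced", 220, "No"), (8, "No", "Single", 85, "Yes"),
    (9, "No", "Married", 75, "No"), (10, "No", "Single", 90, "Yes"),
]


def classify_tree_b(home_owner: str, marital_status: str, income_k: float) -> str:
    """Second tree: the root tests Marital Status, then Home Owner, then Income."""
    if marital_status == "Married":
        return "No"
    if home_owner == "Yes":
        return "No"
    return "Yes" if income_k > 80 else "No"


# IDs of training records this tree gets wrong
errors = [rid for rid, h, m, i, y in TRAINING if classify_tree_b(h, m, i) != y]
print("training errors:", errors)
```

The error list is empty. These two particular trees differ only in the order of their tests: both predict Yes exactly when Home Owner = No, Marital Status is not Married, and income is above 80K, so they agree on every record. Other trees that fit the same data need not agree on unseen records.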

-

Decision Tree Induction

Many Algorithms:
- Hunt's Algorithm (one of the earliest)
- CART
- ID3, C4.5
- SLIQ, SPRINT

-

General Structure of Hunt's Algorithm

Let D_t be the set of training records that reach a node t

General Procedure:
- If D_t contains records that belong to the same class y_t, then t is a leaf node labeled as y_t
- If D_t contains records that belong to more than one class, use an attribute test to split the data into smaller subsets. Recursively apply the procedure to each subset.

(Figure: node t with its record set D_t; the attribute test at t is still undetermined, marked "?".)

| ID | Home Owner | Marital Status | Annual Income |
|---|---|---|---|
| 1 | Yes | Single | 125K |
| 2 | No | Married | 100K |
| 3 | No | Single | 70K |
| 4 | Yes | Married | 120K |
| 5 | No | Divorced | 95K |
| 6 | No | Married | 60K |
| 7 | Yes | Divorced | 220K |
| 8 | No | Single | 85K |
| 9 | No | Married | 75K |
| 10 | No | Single | 90K |

-

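Hunt's Algorithm

| ID | Home Owner | Marital Status | Annual Income | Defaulted Borrower |
|---|---|---|---|---|
| 1 | Yes | Single | 125K | No |
| 2 | No | Married | 100K | No |
| 3 | No | Single | 70K | No |
| 4 | Yes | Married | 120K | No |
| 5 | No | Divorced | 95K | Yes |
| 6 | No | Married | 60K | No |
| 7 | Yes | Divorced | 220K | No |
| 8 | No | Single | 85K | Yes |
| 9 | No | Married | 75K | No |
| 10 | No | Single | 90K | Yes |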
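A minimal recursive sketch of Hunt's procedure on the table above. The slides leave the choice of attribute test open; this sketch assumes a multi-way split on the attribute with the lowest weighted Gini index (the impurity measure introduced later in these notes). It only handles categorical attributes, so Annual Income is replaced by a hypothetical yes/no attribute `IncomeAbove80K`, using the 80K cut point from the example tree. Stopping with a majority-class leaf when no attributes remain, and falling back to a node's majority class for unseen values, are also assumptions.

```python
from collections import Counter

# (Home Owner, Marital Status, Annual Income in K, Defaulted Borrower)
ROWS = [
    ("Yes", "Single", 125, "No"), ("No", "Married", 100, "No"),
    ("No", "Single", 70, "No"), ("Yes", "Married", 120, "No"),
    ("No", "Divorced", 95, "Yes"), ("No", "Married", 60, "No"),
    ("Yes", "Divorced", 220, "No"), ("No", "Single", 85, "Yes"),
    ("No", "Married", 75, "No"), ("No", "Single", 90, "Yes"),
]
# Income is pre-discretized at 80K (hypothetical attribute, see lead-in)
RECORDS = [
    {"HomeOwner": h, "MaritalStatus": m, "IncomeAbove80K": inc > 80, "Defaulted": y}
    for h, m, inc, y in ROWS
]
TARGET = "Defaulted"


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def split_gini(records, attr):
    """Weighted Gini index of a multi-way split on `attr`."""
    groups = {}
    for r in records:
        groups.setdefault(r[attr], []).append(r[TARGET])
    return sum(len(g) / len(records) * gini(g) for g in groups.values())


def hunt(records, attrs):
    labels = [r[TARGET] for r in records]
    majority = Counter(labels).most_common(1)[0][0]
    # Case 1: D_t is pure (or no attribute is left to test) -> leaf node
    if len(set(labels)) == 1 or not attrs:
        return majority
    # Case 2: choose an attribute test, split D_t, recurse on each subset
    best = min(attrs, key=lambda a: split_gini(records, a))
    rest = [a for a in attrs if a != best]
    children = {
        value: hunt([r for r in records if r[best] == value], rest)
        for value in {r[best] for r in records}
    }
    return (best, children, majority)


def classify(tree, record):
    # Internal nodes are (attribute, children, majority) tuples; leaves are labels
    while isinstance(tree, tuple):
        attr, children, majority = tree
        tree = children.get(record[attr], majority)
    return tree


tree = hunt(RECORDS, ["HomeOwner", "MaritalStatus", "IncomeAbove80K"])
print(tree[0])  # attribute chosen for the root test
print(classify(tree, {"HomeOwner": "No", "MaritalStatus": "Single", "IncomeAbove80K": True}))
```

On this data the root test chosen is Marital Status (weighted Gini 0.3, against about 0.343 for the other two attributes), and the grown tree reproduces every training label.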

-

How to determine the Best Split

Before Splitting: 10 records of class 0, 10 records of class 1

Which test condition is the best?

-

How to determine the Best Split

Greedy approach: Nodes with purer class distribution are preferred

Need a measure of node impurity:

(Figure: a node with a high degree of impurity vs. a node with a low degree of impurity)

-

Measures of Node Impurity

Gini Index

$$GINI(t) = 1 - \sum_j [p(j \mid t)]^2$$

Entropy

$$Entropy(t) = -\sum_j p(j \mid t) \log p(j \mid t)$$

Misclassification error

$$Error(t) = 1 - \max_i P(i \mid t)$$
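A minimal Python sketch of the three measures, computed from the class counts at a node. The function names are hypothetical, and taking the logarithm in base 2 (entropy in bits) is an assumption, since the formula above does not state a base. The count pairs are the C1/C2 examples used on the Gini slide.

```python
import math


def probabilities(counts):
    """Relative class frequencies p(j|t) from the class counts at node t."""
    total = sum(counts)
    return [c / total for c in counts]


def gini(counts):
    return 1.0 - sum(p * p for p in probabilities(counts))


def entropy(counts):
    # Classes with p = 0 are skipped, i.e. 0 * log 0 is taken as 0
    return sum(-p * math.log2(p) for p in probabilities(counts) if p > 0)


def classification_error(counts):
    return 1.0 - max(probabilities(counts))


for counts in [(0, 6), (1, 5), (2, 4), (3, 3)]:
    print(counts,
          round(gini(counts), 3),
          round(entropy(counts), 3),
          round(classification_error(counts), 3))
```

All three measures are 0 for the pure node (0, 6) and largest for the evenly split node (3, 3); the Gini column matches 0.000, 0.278, 0.444 and 0.500.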

-

Comparison among Impurity Measures

For a 2-class problem:
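The comparison can be tabulated directly. For a two-class node in which a fraction p of the records belongs to one class, all three measures are 0 at p = 0 and p = 1 and peak at p = 0.5 (Gini 0.5, entropy 1 bit, misclassification error 0.5). A minimal sketch, again assuming base-2 logarithms; the function name `impurities` is hypothetical.

```python
import math


def impurities(p):
    """Gini, entropy (base 2) and misclassification error for a two-class
    node in which a fraction p of the records belongs to the first class."""
    probs = [p, 1.0 - p]
    gini = 1.0 - sum(q * q for q in probs)
    entropy = sum(-q * math.log2(q) for q in probs if q > 0)
    error = 1.0 - max(probs)
    return gini, entropy, error


# Sweep p from 0 to 1 in steps of 0.1
for i in range(11):
    p = i / 10
    g, e, m = impurities(p)
    print(f"p={p:.1f}  gini={g:.3f}  entropy={e:.3f}  error={m:.3f}")
```

Entropy is always the largest of the three for 0 < p < 1, and the misclassification error is piecewise linear in p, while Gini and entropy are curved.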

-

Measure of Impurity: GINI

Gini Index for a given node t:

$$GINI(t) = 1 - \sum_j [p(j \mid t)]^2$$

(NOTE: p(j | t) is the relative frequency of class j at node t.)

- Maximum (1 - 1/n_c, where n_c is the number of classes) when records are equally distributed among all classes, implying least interesting information
- Minimum (0.0) when all records belong to one class, implying most interesting information

| C1 | C2 | Gini |
|---|---|---|
| 0 | 6 | 0.000 |
| 1 | 5 | 0.278 |
| 2 | 4 | 0.444 |
| 3 | 3 | 0.500 |

-

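Binary Attributes: Computing Gini Index

- Splits into two partitions
- Effect of weighting partitions: larger and purer partitions are sought

Test B? with Yes going to Node N1 and No going to Node N2.

Parent: C1 = 6, C2 = 6, Gini = 0.500

| | N1 | N2 |
|---|---|---|
| C1 | 5 | 2 |
| C2 | 1 | 4 |

Gini(Children) = 0.361

$$Gini(N1) = 1 - (5/6)^2 - (1/6)^2 = 0.278$$

$$Gini(N2) = 1 - (2/6)^2 - (4/6)^2 = 0.444$$

$$Gini(Children) = \frac{6}{12} \times 0.278 + \frac{6}{12} \times 0.444 = 0.361$$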
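A minimal sketch of the weighted computation above: each child's Gini index is weighted by the fraction of the parent's records it receives. The function names are hypothetical. The extra "reduction" line (parent minus children) is printed here only to make the comparison with the parent explicit.

```python
def gini(counts):
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)


def weighted_gini(children):
    """Gini index of a split: each child weighted by its share of the records."""
    n = sum(sum(child) for child in children)
    return sum(sum(child) / n * gini(child) for child in children)


parent = (6, 6)            # (C1, C2) at the parent node
n1, n2 = (5, 1), (2, 4)    # (C1, C2) at Node N1 (B = Yes) and Node N2 (B = No)

print(f"Gini(parent)   = {gini(parent):.3f}")
print(f"Gini(N1)       = {gini(n1):.3f}")
print(f"Gini(N2)       = {gini(n2):.3f}")
print(f"Gini(children) = {weighted_gini([n1, n2]):.3f}")
print(f"reduction      = {gini(parent) - weighted_gini([n1, n2]):.3f}")
```

The printed values match the slide: 0.500 for the parent, 0.278 and 0.444 for the children, and 0.361 for the split as a whole.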

-

Categorical Attributes: Computing Gini Index

- For each distinct value, gather counts for each class in the dataset
- Use the count matrix to make decisions

Multi-way split:

| CarType | Family | Sports | Luxury |
|---|---|---|---|
| C1 | 1 | 8 | 1 |
| C2 | 3 | 0 | 7 |

Gini = 0.163

Two-way split (find best partition of values):

| CarType | {Sports, Luxury} | {Family} |
|---|---|---|
| C1 | 9 | 1 |
| C2 | 7 | 3 |

Gini = 0.468

| CarType | {Sports} | {Family, Luxury} |
|---|---|---|
| C1 | 8 | 2 |
| C2 | 0 | 10 |

Gini = 0.167
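A minimal sketch that evaluates the multi-way split and searches every two-way grouping of the CarType values, starting from the per-value class counts in the multi-way count matrix. The function names are hypothetical. It reproduces the multi-way Gini of 0.1625 (0.163 on the slide) and finds {Sports} | {Family, Luxury} as the best two-way split, with Gini 1/6 ≈ 0.167.

```python
from itertools import combinations

# Class counts per CarType value, from the multi-way count matrix: value -> (C1, C2)
COUNTS = {"Family": (1, 3), "Sports": (8, 0), "Luxury": (1, 7)}


def gini(counts):
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)


def split_gini(groups):
    """Weighted Gini of a split; each group is a list of (C1, C2) count pairs."""
    merged = [tuple(map(sum, zip(*group))) for group in groups]
    n = sum(sum(m) for m in merged)
    return sum(sum(m) / n * gini(m) for m in merged)


# Multi-way split: one branch per distinct value
multiway = split_gini([[c] for c in COUNTS.values()])

# Two-way split: try every way of putting the values into two non-empty groups.
# Each partition is visited twice (once per side); strict < keeps the first one.
values = sorted(COUNTS)
best = None
for size in range(1, len(values)):
    for left in combinations(values, size):
        right = [v for v in values if v not in left]
        score = split_gini([[COUNTS[v] for v in left], [COUNTS[v] for v in right]])
        if best is None or score < best[0]:
            best = (score, set(left), set(right))

print(f"multi-way Gini = {multiway:.4f}")
print(f"best two-way split: {best[1]} | {best[2]}, Gini = {best[0]:.3f}")
```

Exhaustive search is fine for three values, but the number of two-way partitions grows as 2^(k-1) - 1 for k distinct values.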

-

Decision Tree Based Classification

Advantages:
- Inexpensive to construct
- Extremely fast at classifying unknown records
- Easy to interpret for small-sized trees
- Accuracy is comparable to other classification techniques for many simple data sets

-

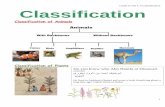

Rule-Based Classifier

Classify records by using a collection of "if...then..." rules

| Name | Blood Type | Give Birth | Can Fly | Live in Water | Class |
|---|---|---|---|---|---|
| human | warm | yes | no | no | mammals |
| python | cold | no | no | no | reptiles |
| salmon | cold | no | no | yes | fishes |
| whale | warm | yes | no | yes | mammals |
| frog | cold | no | no | sometimes | amphibians |
| komodo | cold | no | no | no | reptiles |
| bat | warm | yes | yes | no | mammals |
| pigeon | warm | no | yes | no | birds |
| cat | warm | yes | no | no | mammals |
| leopard shark | cold | yes | no | yes | fishes |
| turtle | cold | no | no | sometimes | reptiles |
| penguin | warm | no | no | sometimes | birds |
| porcupine | warm | yes | no | no | mammals |
| eel | cold | no | no | yes | fishes |
| salamander | cold | no | no | sometimes | amphibians |
| gila monster | cold | no | no | no | reptiles |
| platypus | warm | no | no | no | mammals |
| owl | warm | no | yes | no | birds |
| dolphin | warm | yes | no | yes | mammals |
| eagle | warm | no | yes | no | birds |

R1: (Give Birth = no) ∧ (Can Fly = yes) → Birds

R2: (Give Birth = no) ∧ (Live in Water = yes) → Fishes

R3: (Give Birth = yes) ∧ (Blood Type = warm) → Mammals

R4: (Give Birth = no) ∧ (Can Fly = no) → Reptiles

R5: (Live in Water = sometimes) → Amphibians
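A minimal sketch of rules R1 to R5 as predicate/label pairs, applied to three records from the table. The slide does not say what happens when a record fires several rules or none. This sketch assumes an ordered rule list (first matching rule wins) with a hypothetical default class "unknown"; the function names are also hypothetical.

```python
# Each rule: (name, condition on the record, predicted class)
RULES = [
    ("R1", lambda r: r["give_birth"] == "no" and r["can_fly"] == "yes", "Birds"),
    ("R2", lambda r: r["give_birth"] == "no" and r["live_in_water"] == "yes", "Fishes"),
    ("R3", lambda r: r["give_birth"] == "yes" and r["blood_type"] == "warm", "Mammals"),
    ("R4", lambda r: r["give_birth"] == "no" and r["can_fly"] == "no", "Reptiles"),
    ("R5", lambda r: r["live_in_water"] == "sometimes", "Amphibians"),
]


def record(blood_type, give_birth, can_fly, live_in_water):
    return {"blood_type": blood_type, "give_birth": give_birth,
            "can_fly": can_fly, "live_in_water": live_in_water}


def triggered(r):
    """Names of all rules whose antecedent is satisfied by record r."""
    return [name for name, cond, _ in RULES if cond(r)]


def classify_first_match(r, default="unknown"):
    """Ordered rule list (assumption): the first rule that fires decides."""
    for _, cond, label in RULES:
        if cond(r):
            return label
    return default


examples = {
    "pigeon": record("warm", "no", "yes", "no"),
    "salmon": record("cold", "no", "no", "yes"),
    "leopard shark": record("cold", "yes", "no", "yes"),
}
for name, r in examples.items():
    print(name, triggered(r), classify_first_match(r))
```

The pigeon fires only R1. The salmon fires both R2 and R4, so the rule order decides the answer (Fishes here). The leopard shark fires no rule at all, so it falls through to the default class.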

-

Nearest Neighbor Classifiers

Basic idea:
- If it walks like a duck, quacks like a duck, then it's probably a duck

Given the training records and a test record:
- Compute the distance from the test record to the training records
- Choose k of the "nearest" records
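A minimal k-nearest-neighbor sketch. To stay with data already in these notes, it uses only the Annual Income column of the loan table as a one-dimensional attribute, with absolute difference as the distance. Both choices, and the function name `knn_predict`, are assumptions for illustration. Ties in the vote go to the label seen first among the nearest records, because `Counter.most_common` keeps insertion order for equal counts.

```python
from collections import Counter

# (Annual Income in K, Defaulted Borrower) from the loan table earlier in these notes
TRAINING = [(125, "No"), (100, "No"), (70, "No"), (120, "No"), (95, "Yes"),
            (60, "No"), (220, "No"), (85, "Yes"), (75, "No"), (90, "Yes")]


def knn_predict(x, training, k):
    """Majority vote among the k training records closest to x."""
    # Compute the distance from the test record to every training record
    by_distance = sorted(training, key=lambda rec: abs(rec[0] - x))
    # Choose the k nearest records and take a majority vote of their labels
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


print(knn_predict(82, TRAINING, k=3))
print(knn_predict(72, TRAINING, k=1))
```

For a test income of 82K the three nearest records are 85K (Yes), 75K (No) and 90K (Yes), so the vote is Yes. For 72K with k = 1 the nearest record is 70K (No).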

-

Bayes Classifier

A probabilistic framework for solving classification problems

Key idea is that certain attribute values are more likely (probable) for some classes than for others

Example: Probability an individual is a male or female if the individual is wearing a dress

Conditional Probability:

$$P(Y \mid X) = \frac{P(X, Y)}{P(X)}$$

$$P(X \mid Y) = \frac{P(X, Y)}{P(Y)}$$

Bayes' theorem:

$$P(Y \mid X) = \frac{P(X \mid Y)\, P(Y)}{P(X)}$$
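A small numeric check that the conditional-probability definition and Bayes' theorem give the same answer. It uses the loan table from earlier in these notes with X = "Marital Status = Single" and Y = "Defaulted Borrower = Yes", a choice made here purely for illustration. Probabilities are estimated as relative frequencies with exact fractions, and the function name `prob` is hypothetical.

```python
from fractions import Fraction

# (Marital Status, Defaulted Borrower) for records 1-10 of the loan table
DATA = [("Single", "No"), ("Married", "No"), ("Single", "No"), ("Married", "No"),
        ("Divorced", "Yes"), ("Married", "No"), ("Divorced", "No"), ("Single", "Yes"),
        ("Married", "No"), ("Single", "Yes")]


def prob(event):
    """Relative frequency of the records satisfying `event`."""
    return Fraction(sum(1 for rec in DATA if event(rec)), len(DATA))


p_y = prob(lambda r: r[1] == "Yes")                          # P(Y)
p_x = prob(lambda r: r[0] == "Single")                       # P(X)
p_xy = prob(lambda r: r[0] == "Single" and r[1] == "Yes")    # P(X, Y)
p_x_given_y = p_xy / p_y                                     # P(X | Y)

direct = p_xy / p_x                   # P(Y | X) = P(X, Y) / P(X)
bayes = p_x_given_y * p_y / p_x       # P(Y | X) = P(X | Y) P(Y) / P(X)
print(direct, bayes)
```

Here P(Y) = 3/10, P(X) = 2/5 and P(X | Y) = 2/3, and both routes give P(Y | X) = 1/2.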

-

Evaluating Classifiers

Confusion Matrix:

| | PREDICTED Class=Yes | PREDICTED Class=No |
|---|---|---|
| ACTUAL Class=Yes | a | b |
| ACTUAL Class=No | c | d |

a: TP (true positive)

b: FN (false negative)

c: FP (false positive)

d: TN (true negative)

-

Most widely-used metric:

| | PREDICTED Class=Yes | PREDICTED Class=No |
|---|---|---|
| ACTUAL Class=Yes | a (TP) | b (FN) |
| ACTUAL Class=No | c (FP) | d (TN) |

$$\text{Accuracy} = \frac{a + d}{a + b + c + d} = \frac{TP + TN}{TP + TN + FP + FN}$$
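A minimal sketch that tallies the four confusion-matrix cells from actual and predicted labels and then applies the accuracy formula. As a stand-in model it uses the single rule "Annual Income > 80K → Yes" on the loan table from earlier in these notes. That rule and the function names are assumptions for illustration, not a model from the slides.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive="Yes"):
    """Tally a (TP), b (FN), c (FP), d (TN) for a two-class problem."""
    cells = Counter()
    for y, y_hat in zip(actual, predicted):
        if y == positive:
            cells["TP" if y_hat == positive else "FN"] += 1
        else:
            cells["FP" if y_hat == positive else "TN"] += 1
    return cells["TP"], cells["FN"], cells["FP"], cells["TN"]


def accuracy(tp, fn, fp, tn):
    return (tp + tn) / (tp + tn + fp + fn)


# Annual Income (K) and Defaulted Borrower for records 1-10
incomes = [125, 100, 70, 120, 95, 60, 220, 85, 75, 90]
actual = ["No", "No", "No", "No", "Yes", "No", "No", "Yes", "No", "Yes"]
predicted = ["Yes" if inc > 80 else "No" for inc in incomes]

tp, fn, fp, tn = confusion_counts(actual, predicted)
print(tp, fn, fp, tn, accuracy(tp, fn, fp, tn))
```

The rule catches all three defaulters (TP = 3, FN = 0) but also flags four non-defaulters (FP = 4), giving accuracy (3 + 3) / 10 = 0.6.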

-

Methods for Classifier Evaluation

Holdout
- Reserve k% for training and (100-k)% for testing

Random subsampling
- Repeated holdout

Cross validation
- Partition data into k disjoint subsets
- k-fold: train on k-1 partitions, test on the remaining one
- Leave-one-out: k = n

Bootstrap
- Sampling with replacement
- .632 bootstrap:

$$acc_{boot} = \frac{1}{b} \sum_{i=1}^{b} \left(0.632 \times acc_i + 0.368 \times acc_s\right)$$
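A minimal Python sketch of k-fold partitioning and of the .632 bootstrap estimate. The function names and the shuffling seed are hypothetical. Reading acc_i as the accuracy of the model built on bootstrap sample i and tested on the records left out of that sample, and acc_s as the accuracy on the full training set, is an assumption, because the slide does not define the symbols. The 0.632 weight reflects the fact that a bootstrap sample of size n contains on average about 1 - (1 - 1/n)^n ≈ 1 - 1/e ≈ 63.2% of the distinct records.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Partition record indices 0..n-1 into k disjoint folds and yield
    (train, test) pairs: train on k-1 folds, test on the remaining one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def bootstrap_sample(n, rng):
    """Draw n record indices with replacement; also return the records never drawn."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    out_of_bag = sorted(set(range(n)) - set(in_bag))
    return in_bag, out_of_bag


def acc_632(sample_accs, acc_s):
    """.632 bootstrap: mean over b samples of 0.632 * acc_i + 0.368 * acc_s."""
    b = len(sample_accs)
    return sum(0.632 * acc_i + 0.368 * acc_s for acc_i in sample_accs) / b


# 5-fold cross validation on 20 records; k = n would give leave-one-out
for train, test in k_fold_indices(20, 5):
    print(len(train), len(test))

# Fraction of distinct records in one bootstrap sample of size n = 10000
in_bag, out_of_bag = bootstrap_sample(10000, random.Random(1))
print(len(set(in_bag)) / 10000)  # expected to be close to 1 - 1/e, about 0.632
```

For example, two bootstrap samples with acc_i = 0.8 and 0.9 and acc_s = 1.0 give acc_632([0.8, 0.9], 1.0) = 0.9052.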

-

Problem with Accuracy

Consider a 2-class problem
- Number of Class 0 examples = 9990
- Number of Class 1 examples = 10

If a model predicts everything to be class 0, accuracy is 9990/10000 = 99.9%
- This is misleading because the model does not detect any class 1 example
- Detecting the rare class is usually more interesting (e.g., frauds, intrusions, defects, etc.)
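A minimal sketch of the accuracy problem, assuming 9990 class-0 and 10 class-1 records (10000 in total, as the accuracy ratio above implies) and a model that always predicts class 0. The variable names are hypothetical.

```python
# 10000 records: 9990 of class 0 and 10 of the rare class 1
actual = [0] * 9990 + [1] * 10
predicted = [0] * len(actual)  # a model that predicts class 0 for everything

accuracy = sum(a == p for a, p in zip(actual, predicted)) / len(actual)
detected = sum(a == 1 and p == 1 for a, p in zip(actual, predicted))
recall_class1 = detected / actual.count(1)

print(f"accuracy = {accuracy:.1%}, class-1 examples detected = {detected}, "
      f"recall(class 1) = {recall_class1}")
```

Accuracy comes out at 99.9% while not a single class-1 example is detected, which is why class-specific measures such as precision and recall are needed.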

-

Example of classification accuracy measures

PREDICTED CLASS

ACTUAL CLASS

Class=Yes Class=No

Class=Yes 5

(TP)

5

(FN)

Class=No 5

(FP)

5

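(TN)

$$\text{Precision } (p) = \frac{TP}{TP + FP}$$

$$\text{Recall } (r) = \frac{TP}{TP + FN}$$

$$\text{F-measure } (F) = \frac{2rp}{r + p} = \frac{2TP}{2TP + FN + FP}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$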

Accuracy = 0.8

For Yes class: precision = 0.875, recall = 0.875, F-measure = 0.875

For No class: precision = 0.5, recall = 0.5, F-measure = 0.5
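A minimal sketch computing accuracy and per-class precision, recall and F-measure from the four confusion-matrix counts. The counts TP = 35, FN = FP = TN = 5 are an assumption: they are one set of counts consistent with the accuracy of 0.8 and the No-class precision and recall of 0.5 quoted above. The No-class figures force FN = FP = TN, and accuracy 0.8 then forces TP = 7 × TN. The function names are hypothetical.

```python
def class_metrics(tp, fn, fp, tn):
    """Precision, recall and F-measure, treating the first count as the positives."""
    p = tp / (tp + fp)
    r = tp / (tp + fn)
    f = 2 * r * p / (r + p)
    return p, r, f


def metrics_both_classes(tp, fn, fp, tn):
    acc = (tp + tn) / (tp + tn + fp + fn)
    yes = class_metrics(tp, fn, fp, tn)
    # For the No class the roles swap: TN plays TP, FP plays FN, FN plays FP
    no = class_metrics(tn, fp, fn, tp)
    return acc, yes, no


acc, yes, no = metrics_both_classes(tp=35, fn=5, fp=5, tn=5)
print("accuracy:", acc)
print("Yes class (precision, recall, F):", yes)
print("No class  (precision, recall, F):", no)
```

The same matrix gives very different scores for the two classes, which is the point of reporting precision and recall per class.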

02/14/2011 CSCI 8 80: Spring 2011: Mining BiomedicalData 2

-

Example of classification accuracy measures

PREDICTED CLASS

ACTUAL CLASS

Class=Yes Class=No

Class=Yes

(TP)

1

(FN)

Class=No 10

(FP)

0

(TN)

Accuracy = 0. 450

Sensitivity = 0.

Specificity = 0. 0
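The slide quotes sensitivity and specificity without their formulas. Under the standard definitions, sensitivity = TP / (TP + FN) (the true positive rate, the same as recall) and specificity = TN / (TN + FP) (the true negative rate, equal to 1 - FPR). A minimal sketch with hypothetical function names, using the counts TP = 3, FP = 3, TN = 2, FN = 2 from the ROC construction table later in these notes (first threshold of 0.85):

```python
def sensitivity(tp, fn):
    """True positive rate: fraction of actual positives predicted positive."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate: fraction of actual negatives predicted negative."""
    return tn / (tn + fp)


tp, fp, tn, fn = 3, 3, 2, 2
print("sensitivity:", sensitivity(tp, fn))
print("specificity:", specificity(tn, fp))
print("FPR = 1 - specificity:", 1 - specificity(tn, fp))
```

These counts give sensitivity 0.6 and specificity 0.4, which matches the TPR = 0.6 and FPR = 0.6 shown for that threshold in the ROC table.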

-

ROC (Receiver Operating Characteristic)

- A graphical approach for displaying the trade-off between detection rate and false alarm rate
- Developed in the 1950s for signal detection theory to analyze noisy signals
- ROC curve plots True Positive Rate (TPR) against False Positive Rate (FPR)
- Performance of a model is represented as a point on an ROC curve
- Changing the threshold parameter of the classifier changes the location of the point

http://commonsenseatheism.com/wp-content/uploads/2011/01/Swets-Better-Decisions-Through-Science.pdf

-

ROC Curve

(TPR, FPR):
- (0,0): declare everything to be negative class
- (1,1): declare everything to be positive class
- (1,0): ideal

Diagonal line:
- Random guessing

Below diagonal line:
- prediction is opposite of the true class

-

Using ROC for Model Comparison

- No model consistently outperforms the other
  - M1 is better for small FPR
  - M2 is better for large FPR
- Area Under the ROC curve
  - Ideal: Area = 1
  - Random guess: Area = 0.5
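A minimal sketch of computing the area under an ROC curve with the trapezoidal rule, checked against the two reference values above (ideal = 1, random guessing = 0.5). Representing a curve as a list of (FPR, TPR) points, and the name `auc_trapezoid`, are assumptions. Sorting the points assumes the curve is monotone, which holds for curves built by sweeping a threshold.

```python
def auc_trapezoid(points):
    """Area under an ROC curve given as (FPR, TPR) points, by the trapezoidal rule."""
    pts = sorted(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


ideal = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]   # jumps straight to TPR = 1
random_guess = [(0.0, 0.0), (1.0, 1.0)]        # the diagonal line
print(auc_trapezoid(ideal), auc_trapezoid(random_guess))
```

A single AUC number summarizes the whole curve, so two models whose curves cross (like M1 and M2) can have similar areas while each is better in a different FPR range.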

-

ROC Curve Example

- 1-dimensional data set containing 2 classes (positive and negative)

- Any point located at x > t is classified as positive

At threshold t: TPR = 0.5, FNR = 0.5, FPR = 0.12, TNR = 0.88

-

How to Construct an ROC curve

| Instance | score(+\|A) | True Class |
|---|---|---|
| 1 | 0.95 | + |
| 2 | 0.93 | + |
| 3 | 0.87 | - |
| 4 | 0.85 | - |
| 5 | 0.85 | - |
| 6 | 0.85 | + |
| 7 | 0.76 | - |
| 8 | 0.53 | + |
| 9 | 0.43 | - |
| 10 | 0.25 | + |

- Use a classifier that produces a continuous-valued output score(+|A) for each test instance
- Sort the instances according to score(+|A) in decreasing order
- Apply a threshold at each unique value of score(+|A)
- Count the number of TP, FP, TN, FN at each threshold
  - TPR = TP/(TP+FN)
  - FPR = FP/(FP + TN)
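A minimal sketch of these steps on the ten instances above. The function name `roc_points` is hypothetical, and the rule "predict + whenever score >= threshold" is an assumption that matches the "Threshold >=" convention of the next table. It produces one row per unique score. The slide's table additionally shows a separate column for each tied 0.85 score and a final threshold of 1.00, which adds the (0, 0) point.

```python
# (score(+|A), true class) for instances 1-10 in the table above
DATA = [(0.95, "+"), (0.93, "+"), (0.87, "-"), (0.85, "-"), (0.85, "-"),
        (0.85, "+"), (0.76, "-"), (0.53, "+"), (0.43, "-"), (0.25, "+")]


def roc_points(data):
    """Return (threshold, TP, FP, TN, FN, TPR, FPR) for each unique score,
    predicting '+' whenever score >= threshold."""
    pos = sum(1 for _, c in data if c == "+")
    neg = len(data) - pos
    rows = []
    # Visit unique scores in decreasing order, as the steps above describe
    for t in sorted({s for s, _ in data}, reverse=True):
        tp = sum(1 for s, c in data if s >= t and c == "+")
        fp = sum(1 for s, c in data if s >= t and c == "-")
        tn, fn = neg - fp, pos - tp
        rows.append((t, tp, fp, tn, fn, tp / (tp + fn), fp / (fp + tn)))
    return rows


for row in roc_points(DATA):
    print("threshold >= {:.2f}: TP={} FP={} TN={} FN={} TPR={:.1f} FPR={:.1f}".format(*row))
```

Plotting the (FPR, TPR) pairs from these rows, plus (0, 0), traces the ROC curve for this classifier.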

-

How to construct an ROC curve

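| Threshold >= | 0.25 | 0.43 | 0.53 | 0.76 | 0.85 | 0.85 | 0.85 | 0.87 | 0.93 | 0.95 | 1.00 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Class | + | - | + | - | - | - | + | - | + | + | |
| TP | 5 | 4 | 4 | 3 | 3 | 3 | 3 | 2 | 2 | 1 | 0 |
| FP | 5 | 5 | 4 | 4 | 3 | 2 | 1 | 1 | 0 | 0 | 0 |
| TN | 0 | 0 | 1 | 1 | 2 | 3 | 4 | 4 | 5 | 5 | 5 |
| FN | 0 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 3 | 4 | 5 |
| TPR | 1 | 0.8 | 0.8 | 0.6 | 0.6 | 0.6 | 0.6 | 0.4 | 0.4 | 0.2 | 0 |
| FPR | 1 | 1 | 0.8 | 0.8 | 0.6 | 0.4 | 0.2 | 0.2 | 0 | 0 | 0 |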

ROC Curve:
