Chunk Parsing


• Also called chunking, light parsing, or partial parsing.

• Method: assign some additional structure to the input, beyond what tagging provides.

• Used when full parsing is not feasible or not desirable.

• Because full parsing is expensive, often treated as a stop-gap solution.

Chunk Parsing

• No rich hierarchy, unlike full parsing.

• Usually one layer above tagging.

• The process:

1. Tokenize

2. Tag

3. Chunk
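As a rough illustration of the three steps, here is a toy pipeline. The lexicon, the default tag NN for unknown words, and the rule that an NP is any maximal run of DT/JJ/NN tokens are all simplifying assumptions for this sketch, not a real tagger or chunk grammar.

```python
import re

# Hypothetical toy lexicon standing in for a real tagger; unknown words default to NN.
LEXICON = {"the": "DT", "cow": "NN", "barn": "NN", "brown": "JJ",
           "in": "IN", "ate": "VBD", ".": "."}
NOMINAL_TAGS = {"DT", "JJ", "NN"}


def tokenize(text):
    """Step 1: split text into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def tag(tokens):
    """Step 2: assign each token a part-of-speech tag by lexicon lookup."""
    return [(tok, LEXICON.get(tok.lower(), "NN")) for tok in tokens]


def chunk(tagged):
    """Step 3: group each maximal run of DT/JJ/NN tokens into an ("NP", words)
    chunk; every other (token, tag) pair is passed through unchunked."""
    result, run = [], []
    for tok, t in tagged:
        if t in NOMINAL_TAGS:
            run.append(tok)
            continue
        if run:
            result.append(("NP", run))
            run = []
        result.append((tok, t))
    if run:
        result.append(("NP", run))
    return result


print(chunk(tag(tokenize("The brown cow in the barn ate."))))
```

Each stage only consumes the output of the one before it, which is what lets a chunker be bolted onto an existing tokenizer and tagger.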

Chunk Parsing

• Like tokenizing and tagging in a few respects:

1. Can skip over material in the input

2. Often finite-state (or finite-state-like) methods are used (applied over tags)

3. Often application specific (i.e., the chunks tagged have uses for particular applications)
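Points 1 and 2 can be seen together in a small sketch: if each tag is mapped to a single symbol, a regular expression (a finite-state pattern) can be run over the tag string, and any token outside a match is simply skipped. The symbol mapping and the NP pattern `D?A*N+` are illustrative assumptions, not a standard grammar.

```python
import re

# Map each (assumed Penn Treebank) tag to one symbol, so that a character
# offset in the encoded string is also a token index. Other tags become 'x'.
SYMBOL = {"DT": "D", "JJ": "A", "NN": "N", "NNS": "N"}
NP_PATTERN = re.compile(r"D?A*N+")  # finite-state pattern over tags


def np_chunks(tagged):
    """Return (start, end) token spans matched by NP_PATTERN.
    Tokens outside every match are left unchunked."""
    encoded = "".join(SYMBOL.get(t, "x") for _, t in tagged)
    return [m.span() for m in NP_PATTERN.finditer(encoded)]


sent = [("the", "DT"), ("big", "JJ"), ("dog", "NN"), ("chased", "VBD"),
        ("two", "CD"), ("cats", "NNS")]
for start, end in np_chunks(sent):
    print([w for w, _ in sent[start:end]])
```

Here "chased" and "two" fall outside the pattern and are skipped, so the NP found is just "cats"; a richer pattern would be needed to include the numeral.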

Chunk Parsing

• Chief Motivations: to find data or to ignore data

• Example from Bird and Loper: find the argument structures for the verb give.

• Can “discover” significant grammatical structures before developing a grammar:

gave NP
gave up NP in NP
gave NP up
gave NP help
gave NP to NP
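Patterns like these can be collected mechanically once sentences are chunked: replace each NP chunk by the symbol NP and record what follows the verb. The sketch assumes hand-chunked toy sentences (hypothetical data) and, as a simplification, takes everything from the verb to the end of the sentence as its frame.

```python
from collections import Counter


def verb_frames(chunked_sents, verb="gave"):
    """Count the chunk patterns that follow `verb` up to the end of each sentence.
    NP chunks are ("NP", words) tuples; unchunked words are plain strings."""
    frames = Counter()
    for sent in chunked_sents:
        symbols = [item[0] if isinstance(item, tuple) else item for item in sent]
        for i, sym in enumerate(symbols):
            if sym == verb:
                frames[" ".join(symbols[i:])] += 1
    return frames


# Toy, hand-chunked sentences (hypothetical data).
sents = [
    [("NP", ["she"]), "gave", ("NP", ["the", "dog"]), "to", ("NP", ["Kim"])],
    [("NP", ["he"]), "gave", "up", ("NP", ["his", "seat"]), "in", ("NP", ["the", "bus"])],
    [("NP", ["they"]), "gave", ("NP", ["a", "book"])],
    [("NP", ["we"]), "gave", ("NP", ["the", "money"]), "to", ("NP", ["charity"])],
]
for frame, n in verb_frames(sents).most_common():
    print(n, frame)
```

Ranking frames by frequency is what lets recurring structures surface before anyone writes a grammar rule for them.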

Chunk Parsing

• Like parsing, except:
  – It is not exhaustive, and doesn’t pretend to be.
    • Structures and data can be skipped when not convenient or not desired
  – Structures of fixed depth are produced
    • Nested structures are typical in parsing:

[S [NP The cow [PP in [NP the barn]]] ate]

    • Not in chunking:

[NP The cow] in [NP the barn] ate

Chunk Parsing

• Finds contiguous, non-overlapping spans of related text, and groups them into chunks.

• Because chunks are contiguous, non-overlapping spans, finite-state methods can be adapted to chunking.
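Because chunks are flat, contiguous, and non-overlapping, they can be stored as simple (start, end, label) token spans and rendered in one left-to-right pass. The function name `render_chunks` and the span format are assumptions for this sketch; it reproduces the bracketing of the cow/barn example and rejects overlapping spans.

```python
def render_chunks(tokens, spans):
    """Render flat chunks as '[LABEL w1 w2] w3 ...'.

    `spans` are (start, end, label) triples with `end` exclusive. Chunks may
    not overlap or nest, so a single left-to-right pass is enough."""
    out, i = [], 0
    for start, end, label in sorted(spans):
        if start < i:
            raise ValueError(f"chunk {label} at {start} overlaps the previous chunk")
        out.extend(tokens[i:start])  # unchunked material between chunks
        out.append(f"[{label} {' '.join(tokens[start:end])}]")
        i = end
    out.extend(tokens[i:])  # unchunked material after the last chunk
    return " ".join(out)


tokens = "The cow in the barn ate".split()
print(render_chunks(tokens, [(0, 2, "NP"), (3, 5, "NP")]))
```

A nested parse would need a tree; here a sorted list of spans is the whole data structure.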

Longest Match

• Abney 1995 discusses the longest-match heuristic:
  – One automaton for each phrasal category
  – Start the automata at position i (where i = 0 initially)
  – The winner is the automaton with the longest match
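A minimal sketch of the longest-match loop, assuming hand-built deterministic automata over the simplified tags D, Adj, N, V that encode the NP and VP rules listed on the next slide. Each automaton reports the longest prefix it accepts from position i. The longest match wins, the scan resumes after it, and a token that no automaton matches is skipped. Ties go to the automaton listed first, which is an arbitrary choice.

```python
# Hypothetical hand-built DFAs, one per phrasal category, over the tags
# D, Adj, N, V: (start state, transitions {(state, tag): next}, accepting states).
AUTOMATA = {
    "NP": (0, {(0, "D"): 1, (1, "Adj"): 2, (1, "N"): 3, (2, "N"): 3}, {3}),  # D N | D Adj N
    "VP": (0, {(0, "V"): 1}, {1}),                                           # V
}


def longest_accept(automaton, tags, i):
    """Length of the longest prefix of tags[i:] that the automaton accepts (0 if none)."""
    state, delta, accepting = automaton
    best = 0
    for k in range(i, len(tags)):
        state = delta.get((state, tags[k]))
        if state is None:  # no transition: the automaton is stuck
            break
        if state in accepting:
            best = k - i + 1
    return best


def longest_match_chunk(tags):
    """Run every automaton from position i; the longest match wins and the
    scan resumes after it. A token that no automaton matches is skipped."""
    chunks, i = [], 0
    while i < len(tags):
        label, length = max(
            ((name, longest_accept(dfa, tags, i)) for name, dfa in AUTOMATA.items()),
            key=lambda pair: pair[1],
        )
        if length == 0:
            i += 1  # leave this token unchunked
            continue
        chunks.append((label, i, i + length))
        i += length
    return chunks


print(longest_match_chunk(["D", "Adj", "N", "V", "P", "D", "N"]))
```

In this input the preposition P is accepted by neither automaton, so it stays outside every chunk.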

Longest Match

• He took chunks from the PTB:
  NP → D N
  NP → D Adj N
  VP → V

• Encoded each rule as an automaton

• Stored the longest matching pattern (the winner)

• If no match for a given word, skipped it (in other words, didn’t chunk it)

• Results: Precision .92, Recall .88
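The slide does not say exactly how these precision and recall figures were scored. A common chunk-level definition, assumed here, counts a predicted chunk as correct only when a gold chunk has the same label and the same boundaries. The spans below are toy values, not Abney's data.

```python
def chunk_prf(predicted, gold):
    """Chunk-level precision and recall over (label, start, end) spans:
    a predicted chunk is correct only if it exactly matches a gold chunk."""
    pred, ref = set(predicted), set(gold)
    correct = len(pred & ref)
    precision = correct / len(pred) if pred else 0.0
    recall = correct / len(ref) if ref else 0.0
    return precision, recall


# Toy spans (hypothetical), not Abney's evaluation data.
gold = [("NP", 0, 3), ("VP", 3, 4), ("NP", 5, 7), ("NP", 8, 10)]
predicted = [("NP", 0, 3), ("VP", 3, 4), ("NP", 5, 6)]
print(chunk_prf(predicted, gold))
```

Skipping words that match no rule tends to cost recall (gold chunks never proposed) more than precision, which is consistent with recall being the lower of the two reported figures.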

An Application

• Data-Driven Linguistics Ontology Development (NSF BCE-0411348)

• One focus: locate linguistically annotated (read: tagged) text and extract linguistically relevant terms from text

• Attempt to discover the “meaning” of the terms

• Intended to build out the content of the ontology (GOLD)

• Focus on Interlinear Glossed Text (IGT)

An Application

• Interlinear Glossed Text (IGT), some examples:

(1) Afisi a-na-ph-a nsomba

hyenas SP-PST-kill-ASP fish

‘The hyenas killed the fish.’ (Baker 1988:254)
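IGT lines are aligned word by word and, within each word, morpheme by morpheme. The following is a minimal sketch of that alignment. It assumes that whitespace separates words and a hyphen separates morphemes on both lines, as in these examples. Real IGT uses further conventions, such as '=' for clitics.

```python
def align_igt(source_line, gloss_line):
    """Pair each morpheme of the object-language line with its gloss.
    Assumes whitespace separates words and '-' separates morphemes."""
    words, glosses = source_line.split(), gloss_line.split()
    if len(words) != len(glosses):
        raise ValueError("word counts differ between the two lines")
    pairs = []
    for word, gloss in zip(words, glosses):
        morphs, morph_glosses = word.split("-"), gloss.split("-")
        if len(morphs) != len(morph_glosses):
            raise ValueError(f"morpheme counts differ for {word!r} / {gloss!r}")
        pairs.extend(zip(morphs, morph_glosses))
    return pairs


print(align_igt("Afisi a-na-ph-a nsomba", "hyenas SP-PST-kill-ASP fish"))
```

The mismatch checks matter in practice: a count mismatch usually signals a formatting error in the source IGT rather than something to align around.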

An Application

• More examples:

(4) a. yerexa-n p'at'uhan-e bats-ets

child-NOM window-ACC open-AOR.3SG

‘The child opened the window.’ (Megerdoomian ??)

(37) t ile-u i p-s pies u .

1pl-TOP buy-NOM meat COP

‘What we need is meat.’ (LaPolla 2000)

to buy is meat.’

An Application

• Problem: How do we ‘discover’ the meaning of the linguistically salient terms, such as NOM, ACC, AOR, 3SG?

• Perhaps we can discover the meanings by examining the contexts in which they occur.

• POS can be a context.

• Problem: POS tags are rarely used in IGT

• How do you assign POS tags to a language you know nothing about?

• IGT gives us aligned text for free!!
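One cheap way to collect each gram together with its context is to read it off the gloss line. The sketch assumes, as a heuristic rather than a standard, that grams are the capitalized gloss pieces. It also assumes that '-' separates morphemes, that '.' separates fused grams, and that the first lowercase piece in a word is the host. Lowercase grams such as 1pl in the LaPolla example slip past this heuristic.

```python
import re


def grams_with_hosts(gloss_line):
    """Return (gram, host) pairs from an IGT gloss line.

    Heuristic (an assumption): a gloss piece is a gram if it is written in
    capitals (e.g. NOM, 3SG); the first non-capitalized piece is the host."""
    pairs = []
    for word in gloss_line.split():
        pieces = re.split(r"[-.]", word)
        hosts = [p for p in pieces if p and not p.isupper()]
        host = hosts[0] if hosts else None
        pairs.extend((p, host) for p in pieces if p.isupper())
    return pairs


print(grams_with_hosts("child-NOM window-ACC open-AOR.3SG"))
```

For the LaPolla gloss, TOP comes out with host 1pl and COP with no host at all, which shows where the capitalization heuristic breaks down.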


An Application

• IGT gives us aligned text for free!!

• POS tag the English translation

• Align it with the glosses and language data

• That helps. We now know that NOM and ACC attach to nouns, not verbs (nominal inflections)

• And AOR and 3SG attach to verbs (verbal inflections)

(4) a. yerexa-n p'at'uhan-e bats-ets

child-NOM window-ACC open-AOR.3SG

‘The child opened the window.’ (Megerdoomian ??)

DT NN VBD DT NN
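The projection step can be sketched as follows. The sketch assumes a deliberately crude alignment rule: a gloss stem matches the first translation word that begins with it, so open matches opened. It also tags opened as VBD. Every gram on an aligned gloss word inherits that word's POS tag, and the result counts host tags per gram.

```python
import re
from collections import Counter, defaultdict


def gram_host_pos(gloss_line, tagged_translation):
    """Project POS tags from a tagged English translation onto the grams of
    a gloss line. Assumed alignment rule: a gloss stem matches the first
    translation word that starts with it (so 'open' matches 'opened')."""
    counts = defaultdict(Counter)
    for word in gloss_line.split():
        pieces = re.split(r"[-.]", word)
        stems = [p for p in pieces if p and not p.isupper()]
        if not stems:
            continue  # a bare gram with no lexical host to align
        stem = stems[0].lower()
        tag = next((t for w, t in tagged_translation if w.lower().startswith(stem)), None)
        if tag is None:
            continue  # no translation word found for this stem
        for gram in (p for p in pieces if p.isupper()):
            counts[gram][tag] += 1
    return counts


translation = [("The", "DT"), ("child", "NN"), ("opened", "VBD"),
               ("the", "DT"), ("window", "NN")]
for gram, tags in gram_host_pos("child-NOM window-ACC open-AOR.3SG", translation).items():
    print(gram, dict(tags))
```

Prefix matching fails for irregular forms (e.g. a gloss "give" against "gave"), so a real system would need a lemmatizer or a better aligner.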

An Application


• In the LaPolla example, NOM attaches not to a noun but to a verb (buy-NOM). It must be some other kind of NOM (presumably a nominalizer rather than a nominative case marker).

An Application

• How we tagged:
  – Globally applied the most frequent tag for each word (a “stupid” tagger)
  – Repaired tags where context dictated a change (e.g., race after TO becomes VB)
  – Technique similar to Brill 1995
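A minimal sketch of this two-pass approach, under stated assumptions. The training data is a toy list of (word, tag) pairs. The single repair rule (NN becomes VB after TO) is written by hand, whereas Brill's method learns its transformation rules from tagging errors.

```python
from collections import Counter, defaultdict


def train_unigram(tagged_corpus):
    """Most-frequent-tag ('stupid') tagger: remember each word's commonest tag."""
    counts = defaultdict(Counter)
    for word, t in tagged_corpus:
        counts[word.lower()][t] += 1
    return {w: c.most_common(1)[0][0] for w, c in counts.items()}


# Hand-written Brill-style contextual rule (an assumption, not a learned rule):
# change tag FROM to TO when the previous tag is PREV.
RULES = [("NN", "VB", "TO")]  # e.g. 'to race': NN -> VB after TO


def tag(words, model, default="NN"):
    """Pass 1: most frequent tag. Pass 2: repair tags using the contextual rules."""
    tags = [model.get(w.lower(), default) for w in words]
    for from_tag, to_tag, prev in RULES:
        for i in range(1, len(tags)):
            if tags[i] == from_tag and tags[i - 1] == prev:
                tags[i] = to_tag
    return list(zip(words, tags))


# Toy training data (hypothetical): 'race' is more often a noun.
corpus = [("the", "DT"), ("race", "NN"), ("race", "NN"), ("race", "VB"),
          ("to", "TO"), ("win", "VB")]
model = train_unigram(corpus)
print(tag(["to", "race"], model))
print(tag(["the", "race"], model))
```

The first pass gets most words right cheaply, and the second pass only touches the few contexts where the frequency-based guess is known to fail.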


An Application

• But can we get more information about NOM, ACC, etc.?

• Can chunking tell us something more about these terms?

• Yes!


An Application

• Chunk phrases, mainly NPs

• Since the relationship (in simple sentences) between NPs and verbs tells us something about the verbs’ arguments (Bird and Loper 2005)…

• We can tap this information to discover more about the linguistic tags


An Application

• Apply Abney 1995’s longest match heuristic to get as many chunks as possible (especially NP)

• Leverage English canonical SVO (NVN) order to identify simple argument structures

• Use these to discover more information about the terms

• Thus…

(4) a. yerexa-n p'at'uhan-e bats-ets

child-NOM window-ACC open-AOR.3SG

‘The child opened the window.’ (Megerdoomian ??)

DT NN VBD DT NN

[NP The child] [VP opened] [NP the window]

An Application

• We know that:
  – NOM attaches to subject NPs, so it may be a case marker indicating the subject
  – ACC attaches to object NPs, so it may be a case marker indicating the object
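The reasoning on this slide can be mechanized roughly as below. The sketch assumes each chunk already carries the grams aligned to it by the earlier projection step. It accepts only the simplest NP VP NP clause and labels the chunks subject, verb, and object by position, following canonical English SVO order.

```python
from collections import Counter, defaultdict


def svo_roles(chunk_labels):
    """Assign roles by position under canonical English SVO (NP VP NP) order.
    Returns None for anything other than a simple NP VP NP clause."""
    if chunk_labels != ["NP", "VP", "NP"]:
        return None
    return ["subject", "verb", "object"]


def gram_roles(sentences):
    """Count how often each gram appears on a chunk in each role.
    Each sentence is a list of (chunk_label, grams) pairs; the grams are
    assumed to have been aligned to chunks upstream."""
    counts = defaultdict(Counter)
    for chunks in sentences:
        roles = svo_roles([label for label, _ in chunks])
        if roles is None:
            continue  # skip anything but simple SVO clauses
        for (_, grams), role in zip(chunks, roles):
            for gram in grams:
                counts[gram][role] += 1
    return counts


armenian = [("NP", ["NOM"]), ("VP", ["AOR", "3SG"]), ("NP", ["ACC"])]
for gram, roles in gram_roles([armenian]).items():
    print(gram, dict(roles))
```

Over many sentences, a gram whose counts concentrate on "subject" is a candidate subject marker; one spread across roles probably marks something else.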


An Application

• What we do next: look at co-occurrence relations (clustering) of
  – Terms with terms
  – Host categories with terms

• To determine more information about the terms

• Done by building feature vectors of the various linguistic grammatical terms (“grams”) representing their contexts

• And measuring relative distances between these vectors (in particular, for terms we know)
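A minimal sketch of the vector comparison follows. It assumes sparse count vectors keyed by context features (host category, role, co-occurring gram) and uses cosine similarity as the distance measure. The slides do not specify the exact features or metric, and the counts below are invented for illustration.

```python
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


# Hypothetical context counts for each gram.
vectors = {
    "NOM": Counter({"host=NN": 8, "role=subject": 6}),
    "ACC": Counter({"host=NN": 7, "role=object": 6}),
    "GEN": Counter({"host=NN": 5, "with=PL": 1}),
    "PAST": Counter({"host=VB": 9, "with=3SG": 4}),
}


def nearest(gram, vectors):
    """Other grams ranked by cosine similarity to `gram`, most similar first."""
    return sorted(((other, cosine(vectors[gram], vec))
                   for other, vec in vectors.items() if other != gram),
                  key=lambda pair: pair[1], reverse=True)


print(nearest("NOM", vectors))
```

Grams that share host categories end up close together, while a verbal gram like PAST, sharing no features with NOM here, scores zero. Comparing an unknown gram against well-understood ones is the "terms we know" idea from the slide.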

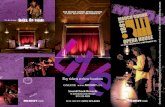

Linguistic “Gram” Space PASS 18 CAUS 19

PRES 13 TNS/ASPECT

FUT 14 PAST 12 VERBAL

F 11 1SG 17 M 10 3SG 16 AGREEMENT DAT 3 GEN 4 STD CASES NOM 1 ACC 2 PL 15 NOMINAL INSTR 8 POSS 9 LOC 5 ABS 7 ERG 6 OBL CASES