CHL_11Q2_IWARP_GA_TB_02_0405 (3)


8/6/2019 CHL_11Q2_IWARP_GA_TB_02_0405 (3)


TECHNICAL BRIEF

Figure 1. The high-performance, dual-port Chelsio T420-LL-CR 10GbE Unified Wire Adapter

Capabilities Integrated to Deliver the Best Performance Overall

Chelsio's second generation iWARP design builds on the RDMA capabilities of T3, with continued MPI support on Linux with OpenFabrics Enterprise Distribution (OFED), and Windows HPC Server 2008. T3 is already a field-proven performer in Purdue University's 1300-node cluster, and the T4 design reduces RDMA latency from T3's already low 6.8 µs to about 3.7 µs through its increased pipeline speed.

This demonstrates the linear scalability of Chelsio's RDMA architecture to deliver comparable or lower latency than InfiniBand DDR or QDR, and to scale effortlessly in real world applications, as connections are added.
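To put the T3 and T4 latency figures in proportion, the short Python sketch below works out the relative improvement. It uses only the two numbers quoted above (6.8 µs and about 3.7 µs). The helper name `relative_reduction` is illustrative and does not come from any Chelsio tool.

```python
# RDMA latency figures quoted in the brief, in microseconds.
T3_LATENCY_US = 6.8
T4_LATENCY_US = 3.7


def relative_reduction(old: float, new: float) -> float:
    """Fractional reduction going from old to new (0.25 means 25% lower)."""
    return (old - new) / old


reduction = relative_reduction(T3_LATENCY_US, T4_LATENCY_US)
ratio = T3_LATENCY_US / T4_LATENCY_US

print(f"T4 latency is {reduction:.1%} lower than T3")   # 45.6% lower
print(f"T3 latency is {ratio:.2f}x the T4 latency")     # 1.84x
```

On these figures, T4 cuts the quoted RDMA latency by a little under half.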

Enhanced Storage Offloads

T4 offers protocol acceleration for both file and block-level storage traffic. For file storage, T4 supports full TOE under Linux and TCP Chimney under Windows. T4's fourth-generation TOE design adds support for IPv6, increasingly prevalent and now a requirement for many government and wide-area applications. For block storage, T4 supports partial or full iSCSI offload of processor-intensive tasks such as protocol data unit (PDU) recovery, header and data digest, cyclic redundancy checking (CRC), and direct data placement (DDP), supporting VMware ESX.
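As an illustration of why digest calculation is worth offloading, here is a minimal software sketch of CRC32C (the Castagnoli polynomial), which the iSCSI specification uses for its header and data digests. The brief itself does not name the polynomial, so that detail comes from the iSCSI standard. The function name `crc32c` is illustrative, and this bit-at-a-time loop is the host-CPU work being avoided, not Chelsio's implementation.

```python
def crc32c(data: bytes) -> int:
    """Bitwise CRC32C (Castagnoli) over data, returned as an unsigned 32-bit int."""
    poly_reflected = 0x82F63B78  # bit-reversed form of polynomial 0x1EDC6F41
    crc = 0xFFFFFFFF             # standard initial value
    for byte in data:
        crc ^= byte
        # Process the byte one bit at a time, least significant bit first.
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ poly_reflected
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF      # standard final inversion


if __name__ == "__main__":
    # "123456789" is the conventional CRC check string.
    print(hex(crc32c(b"123456789")))  # 0xe3069283
```

Every payload byte costs eight shift-and-XOR steps here. Table-driven or hardware-assisted versions are faster, but the cost still grows with every byte of storage traffic unless the adapter computes the digest.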

HPC CONVERGING ON LOW LATENCY IWARP

High Performance Computing cluster architectures are moving away from proprietary and expensive networking technologies towards Ethernet as the performance/latency of TCP/IP continues to lead the way. InfiniBand, the once-dominant interconnect technology for HPC applications leveraging Message Passing Interface (MPI) and remote direct memory access (RDMA), has now been supplanted as the preferred networking protocol in these environments.

Due to the rapid adoption of the x86 platform in high performance parallel computing environments, 48% of the top 500 supercomputers now use Ethernet as their standard networking technology (source: top500.org), while latency-sensitive HPC applications, such as those used in financial trading or modeling environments, leverage IP/Ethernet networks to run the same MPI/RDMA applications using iWARP. Compatible with existing Ethernet switches, iWARP is the proven low latency RDMA-over-Ethernet solution for high-performance computing on TCP/IP, developed by the IETF and supported by the industry's leading 10GbE adapters.

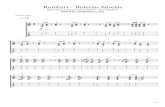

Cost-Effective, Low Latency Clustering

Now, Chelsio has delivered iWARP connectivity with the shortest delay available in a network interface card. In recent tests with Chelsio partners, the T420-LL-CR was found to deliver RDMA Verbs latency of 3.4 µs and average latency of 3.7 µs, as shown in the Intel MPI benchmarks in Figure 2. These charts also demonstrate the smooth scalability provided by the T420-LL-CR's 4th generation T4 ASIC, essential to ensuring continuous low latency operation during periods of heavy use.
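For readers unfamiliar with how a ping-pong latency figure is obtained, the sketch below shows the general method: bounce a fixed-size message between two endpoints many times and report half the average round-trip time, which is how the IMB PingPong test defines latency. This is not the Intel MPI Benchmarks and involves no RDMA or iWARP. It uses plain TCP sockets over the loopback interface with only the Python standard library, and all function names are illustrative. Its results are dominated by interpreter and kernel overhead, so they are not comparable to the adapter figures above.

```python
import socket
import threading
import time


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since TCP may deliver a message in pieces."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def _echo_server(listener: socket.socket, msg_size: int, iterations: int) -> None:
    """The 'pong' side: receive each message and send it straight back."""
    conn, _ = listener.accept()
    with conn:
        conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(iterations):
            conn.sendall(_recv_exact(conn, msg_size))


def pingpong_latency_us(msg_size: int = 1, iterations: int = 1000) -> float:
    """Average one-way latency in microseconds over loopback TCP."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    server = threading.Thread(
        target=_echo_server, args=(listener, msg_size, iterations)
    )
    server.start()

    payload = b"x" * msg_size
    with socket.create_connection(listener.getsockname()) as client:
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        start = time.perf_counter()
        for _ in range(iterations):
            client.sendall(payload)          # ping
            _recv_exact(client, msg_size)    # wait for the pong
        elapsed = time.perf_counter() - start

    server.join()
    listener.close()
    # One iteration is a full round trip; halve it for one-way latency.
    return elapsed / iterations / 2 * 1e6


if __name__ == "__main__":
    for size in (1, 64, 1024):
        print(f"{size:5d} bytes: {pingpong_latency_us(size):8.1f} us")
```

Sweeping `msg_size` over increasing values mirrors the message-size sweep used in the Figure 2 charts.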

Chelsio T420 low latency server connectivity brings cost-effective capacity and versatility to any high-performance computing infrastructure.

...the Chelsio T420 showed Intel MPI Benchmark latency of just 3.7 microseconds...


© 2011 Chelsio Communications, Inc. All rights reserved. CHL_11Q2_IWARP_GA_TB_02_0405

Chelsio and the Chelsio diamond symbol are registered trademarks of Chelsio Communications, Inc., in the United States and/or in other countries. Other brands, products, or service names mentioned are or may be trademarks or service marks of their respective owners.

Corporate Headquarters
370 San Aleso Ave
Sunnyvale, CA 94085
T: 408.962.360

Broadening Chelsio's support for block storage, T4 adds partial and full FCoE offload. With an HBA driver, full offload provides maximum performance as well as compatibility with SAN management software. For software initiators, Chelsio supports the Open-FCoE stack and T4 offloads certain processing tasks much as it does in iSCSI. Unlike iSCSI, FCoE requires enhanced Ethernet support for lossless transport, Priority-based Flow Control (PFC), Enhanced Transmission Selection (ETS) and Data Center Bridging Exchange (DCBX).

Conclusion

These results show how the rigorous requirements of cluster computing and storage can be met or exceeded using iWARP and the Chelsio T420-LL-CR Unified Wire Adapter. Pervasive and reliable 10Gb Ethernet with iWARP delivers a highly scalable ultra-low latency interconnect solution for all HPC environments.

Why risk investing in technologies with a marginal installed base and a limited future, when Chelsio T4-based 10GbE Unified Wire Adapters provide comprehensive support and offloading of iSCSI, FCoE, TOE, NFS/iWARP, Lustre/iWARP or CIFS and NFS traffic, and achieve all the requirements needed for application clustering ranging from dozens to thousands of compute nodes?

So if you prefer to use one card throughout your HPC application, choose the T420-LL-CR: the Unified Wire Adapter that does it all, and does it best.

About Chelsio Communications

Chelsio Communications is the market and technology leader in advanced, low-latency server connectivity, enabling the convergence of networking, storage and clustering traffic over 10Gb Ethernet.

Terminator ASIC technology is proven in more than 100 OEM platforms with the successful deployment of more than 100,000 ports, and now Chelsio is leading the way again, with its recently announced T4 ASIC technology. With Terminator 4, Chelsio has taken the unified wire to the next level, providing full offload for TCP, iSCSI, iWARP and Fibre Channel over Ethernet, relieving servers and storage systems of processing overhead, and bringing its adopters dramatic increases in application performance.

To learn more, contact your Chelsio sales representative at [email protected], or visit us at www.chelsio.com.

Figure 2. Chelsio's T4 ASIC brings ultra-low latencies to iWARP traffic, shown in these results from the Intel Message Passing Interface Benchmark (IMB) suite

[Figure 2 charts: four log-scale panels, horizontal axis ticks from 1 to 4194304 in steps of 4x]

Panel 1: Allreduce, Reduce, Reduce_scatter Latency Avg (µs)
Panel 2: Allgather, Allgatherv, Alltoall, Bcast Latency Avg (µs)
Panel 3: Sendrecv, Exchange Latency (µs) and Throughput (MBps)
Panel 4: PingPong and PingPing Latency (µs) and Throughput (MBps)

Latency data labels shown on the charts: 3.7 µs, 3.7 µs, 3.8 µs, 3.9 µs