Ch 02. Memory Hierarchy Design (1)


Computer Architecture

Professor Yong Ho Song

Spring 2015

Computer Architecture Memory Hierarchy Design

Prof. Yong Ho Song

-


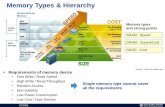

Introduction

Programmers want unlimited amounts of memory with low latency

Fast memory technology is more expensive per bit than slower memory

Solution: organize memory system into a hierarchy

Entire addressable memory space available in largest, slowest memory

Incrementally smaller and faster memories, each containing a subset of the memory below it, proceed in steps up toward the processor

-

Principle of Locality

Temporal and spatial locality ensure that nearly all references can be found in smaller memories

Gives the illusion of a large, fast memory being presented to the processor

Led to hierarchies based on memories of different speeds and sizes

-


Memory Hierarchy

-

Memory Performance Gap

[Figure: memory performance gap. Labels: number of cores, clock frequency, LPDDR4, WideIO SDR, 409.6 GB/s, 25.8 GB/s]

-

Memory Hierarchy Design

Memory hierarchy design becomes more crucial with recent multi-core processors:

Aggregate peak bandwidth grows with # cores:

Intel Core i7 can generate two references per core per clock

Four cores and 3.2 GHz clock

25.6 billion 64-bit data references/second + 12.8 billion 128-bit instruction references/second = 409.6 GB/s!

DRAM bandwidth is only 6% of this (25 GB/s)

Requires:

Multi-port, pipelined caches

Two levels of cache per core

Shared third-level cache on chip
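The 409.6 GB/s figure on this slide can be checked by hand. The working below assumes only the unit conversions 64 bits = 8 bytes and 128 bits = 16 bytes; every other number is taken from the slide.

```latex
\begin{aligned}
\text{data references/s} &= 4\ \text{cores} \times 3.2\times10^{9}\ \text{clocks/s} \times 2 = 25.6\times10^{9} \\
\text{data bandwidth} &= 25.6\times10^{9} \times 8\ \text{B} = 204.8\ \text{GB/s} \\
\text{instruction bandwidth} &= 12.8\times10^{9} \times 16\ \text{B} = 204.8\ \text{GB/s} \\
\text{peak demand} &= 204.8 + 204.8 = 409.6\ \text{GB/s} \\
\frac{25\ \text{GB/s}}{409.6\ \text{GB/s}} &\approx 6.1\%
\end{aligned}
```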

-

Performance and Power

High-end microprocessors have >10 MB on-chip cache

Consumes large amount of area and power budget

Caches account for 25% to 50% of the total power consumption

-

Memory Hierarchy Basics

When a word is not found in the cache, a miss occurs:

Fetch word from lower level in hierarchy, requiring a higher latency reference

Lower level may be another cache or the main memory

Also fetch the other words contained within the block

Takes advantage of spatial locality

Place block into cache in any location within its set, determined by address

Set index = (Block address) MOD (Number of sets)

Takes advantage of temporal locality

-

Memory Hierarchy Basics

n blocks in a set: n-way set associative

Direct-mapped cache: one block per set

Fully associative: all blocks in one set

Writing to cache: two strategies

Write-through

Immediately update lower levels of hierarchy

Write-back

Only update lower levels of hierarchy when an updated block is replaced

Both strategies use a write buffer to make writes asynchronous

-

Memory Hierarchy Basics

Miss rate

Fraction of cache accesses that result in a miss

Causes of misses

Compulsory

Very first reference to a block

Even an infinite-sized cache cannot avoid this type of cache miss

Capacity (in fully associative)

Cache memory is full of loaded blocks

Program working set is much larger than cache capacity

Conflict (not in fully associative)

Program makes repeated references to multiple addresses from different blocks that map to the same location in the cache

Miss to the block which has been replaced

-

3Cs Absolute Miss Rate (SPEC92)

[Figure: miss rate per type (0 to 0.14) versus cache size (1 KB to 128 KB) for 1-way, 2-way, 4-way, and 8-way associativity, broken down into compulsory, capacity, and conflict misses]

Note: compulsory misses are small

-

2:1 Cache Rule

[Figure: miss rate per type (0 to 0.14) versus cache size (1 KB to 128 KB) for 1-way, 2-way, 4-way, and 8-way associativity, broken down into compulsory, capacity, and conflict misses]

Miss rate of a 1-way associative (direct-mapped) cache of size X = miss rate of a 2-way associative cache of size X/2

-

Four Memory Hierarchy Questions

Q1: Where can a block be placed in the upper level? (block placement)

Q2: How is a block found if it is in the upper level? (block identification)

Q3: Which block should be replaced on a miss? (block replacement)

Q4: What happens on a write? (write strategy)

Revised 2015

-


Q1: block placement

[Figure: block placement in direct-mapped and set-associative caches]

-


Q2: block identification

Cache entry

Address tag to see if it matches the block address from the processor

Valid bit to indicate whether or not the entry contains a valid address

Processor address breakdown

Index to select an entry or a set of entries whose tags are compared

Tag to match against the selected entries

Block offset to access the individual byte (or word) within the block
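The address breakdown above can be sketched in C. This is a minimal illustration, not a specific processor's logic: the `cache_geometry` and `cache_addr` types and the `split_address` helper are hypothetical names, and all sizes are assumed to be powers of two.

```c
#include <stdint.h>

/* Hypothetical cache geometry; both fields must be powers of two. */
typedef struct {
    uint32_t block_bytes;   /* bytes per block */
    uint32_t num_sets;      /* number of sets (index range) */
} cache_geometry;

/* The three fields of a processor address as seen by the cache. */
typedef struct {
    uint64_t tag;
    uint32_t index;
    uint32_t offset;
} cache_addr;

/* log2 of a power of two, computed by shifting. */
static unsigned log2_pow2(uint32_t x) {
    unsigned n = 0;
    while (x > 1) { x >>= 1; n++; }
    return n;
}

/* Split an address into tag, index (block address MOD number of sets),
   and block offset. */
static cache_addr split_address(uint64_t addr, cache_geometry g) {
    unsigned offset_bits = log2_pow2(g.block_bytes);
    unsigned index_bits  = log2_pow2(g.num_sets);
    cache_addr a;
    a.offset = (uint32_t)(addr & (g.block_bytes - 1));
    a.index  = (uint32_t)((addr >> offset_bits) & (g.num_sets - 1));
    a.tag    = addr >> (offset_bits + index_bits);
    return a;
}
```

For example, a 64 KB two-way cache with 64-byte blocks has 64 KB / (2 × 64 B) = 512 sets, so the geometry `{64, 512}` gives 6 offset bits and 9 index bits.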

-

Q3: block replacement

Random

To spread allocation uniformly

Some systems generate pseudorandom block numbers to get reproducible behavior

Least recently used (LRU)

To replace the block that has been unused for the longest time

If recently used blocks are likely to be used again, then a good candidate for disposal is the least recently used block

First in, first out (FIFO)

Because LRU can be complicated to calculate, this approximates LRU by determining the oldest block rather than the LRU
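As a rough illustration of LRU victim selection within one set, the sketch below keeps a timestamp per way. The `lru_set` type, the `WAYS` constant, and both helper names are hypothetical; real hardware usually approximates this with a few status bits per set rather than full timestamps.

```c
#include <stdint.h>

#define WAYS 4  /* hypothetical associativity */

/* Per-set bookkeeping: when each way was last accessed. */
typedef struct {
    uint64_t last_used[WAYS];
    int      valid[WAYS];
} lru_set;

/* Record an access to `way` at logical time `now`. */
static void lru_touch(lru_set *s, int way, uint64_t now) {
    s->valid[way] = 1;
    s->last_used[way] = now;
}

/* Pick a victim: the first invalid way if one exists,
   otherwise the way with the oldest access time. */
static int lru_victim(const lru_set *s) {
    int victim = 0;
    for (int w = 0; w < WAYS; w++) {
        if (!s->valid[w]) return w;
        if (s->last_used[w] < s->last_used[victim]) victim = w;
    }
    return victim;
}
```

FIFO would differ only in when the timestamp is written: at fill time rather than on every access.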

-

Q4: write strategy

Handling write hit

Write-through

Easy to implement

Ensure data coherency between multiprocessors and I/O

Avoiding write stall using write buffer

Write-back (requiring a dirty bit)

Better performance

Handling write miss

Write allocate

Write miss looks like a read miss from the memory's viewpoint

No write allocate

The block is modified only in the lower-level memory

Blocks stay out of the cache until the program tries to read the blocks

-

Data Cache Sample: AMD Opteron


64KB Data Cache

Two-way Set Associative Cache

64 Byte Block

-

Memory Access Performance

Average memory access time

For each memory access,

Revised average memory access time

Memory accesses

Instruction fetch

Data access

Average memory access per instruction

1 (instruction fetch rate) + d (data access rate)

d = #data instructions / all instruction count in a given program


% instructions = 1 / (1 + d)

% data = d / (1 + d)
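The formulas on this slide did not survive extraction. The block below assumes they follow the standard textbook form of average memory access time (AMAT), then weights instruction and data accesses by the fractions given above; the subscripts I and D are notation introduced here for instruction and data accesses.

```latex
\begin{aligned}
\text{AMAT} &= \text{Hit time} + \text{Miss rate} \times \text{Miss penalty} \\
\text{AMAT} &= \frac{1}{1+d}\left(\text{Hit time}_{I} + \text{Miss rate}_{I} \times \text{Miss penalty}_{I}\right)
             + \frac{d}{1+d}\left(\text{Hit time}_{D} + \text{Miss rate}_{D} \times \text{Miss penalty}_{D}\right)
\end{aligned}
```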

-


Cache Performance

CPU execution time


Extra CPI due to cache miss
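The equation for this slide is missing from the transcript. A likely reconstruction, assuming the standard textbook form and the 1 + d memory accesses per instruction defined on the previous slide, is:

```latex
\begin{aligned}
\text{CPU time} &= \text{IC} \times \left(\text{CPI}_{\text{execution}} + \text{Extra CPI}\right) \times \text{Clock cycle time} \\
\text{Extra CPI} &= (1 + d) \times \text{Miss rate} \times \text{Miss penalty}
\end{aligned}
```

Here IC is the instruction count and the miss penalty is measured in clock cycles.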

-


Six Basic Cache Optimizations

Larger block size

Reduces compulsory misses

Increases capacity and conflict misses, increases miss penalty

Larger total cache capacity to reduce miss rate

Increases hit time, increases power consumption

Higher associativity

Reduces conflict misses

Increases hit time, increases power consumption

-


Six Basic Cache Optimizations

Higher number of cache levels

Reduces overall memory access time

Giving priority to read misses over writes

Reduces miss penalty

Avoiding address translation in cache indexing

Reduces hit time

-


Larger block size

-

Larger capacity and higher associativity

-

Larger capacity and higher associativity

-

Multi-level Cache

-

Relative execution time by L2 cache size

-

Higher priority to read misses

Case 1: write-through cache

Write buffer

May hold the updated version of a location needed on a read miss

Solution

Make read miss wait until the write buffer is empty

Or, check the write buffer on a read miss and, if there are no conflicts, let the read miss continue

Case 2: write-back cache

Write buffer

Put the replaced dirty block into the write buffer

Then, read in the missed block from the memory system

-

Physical vs. Virtual Caches

Physical caches are addressed with physical addresses

Virtual addresses are generated by the CPU

Address translation is required, which may increase the hit time

Virtual caches are addressed with virtual addresses

Address translation is not required for a hit (only for a miss)

[Figure: CPU core, TLB, cache, and main memory. The core issues a VA, the TLB supplies the PA (on a miss), and a hit returns data from the cache]

-


Physical vs. Virtual Caches

[Figure: physical cache. CPU → TLB (VA to PA) → physical cache → primary memory]

Alternative: place the cache before the TLB

[Figure: virtual cache. CPU → virtual cache (VA) → TLB (PA) → primary memory]

One-step process in case of a hit (+)

Cache needs to be flushed on a context switch unless process identifiers (PIDs) are included in tags (-)

Aliasing problems due to the sharing of pages (-)

Maintaining cache coherence (-)

-


Drawbacks of a Virtual Cache

Protection bits must be associated with each cache block

Whether it is read-only or read-write

Flushing the virtual cache on a context switch

To avoid mixing between virtual addresses of different processes

Can be avoided or reduced using a process identifier tag (PID)

Aliases

Different virtual addresses map to same physical address

Sharing code (shared libraries) and data between processes

Copies of same block in a virtual cache

Updates make duplicate blocks inconsistent

Can't happen in a physical cache

-


Aliasing in Virtual-Address Caches

[Figure: VA1 and VA2 map through the page table to the same PA in the data pages; the cache (tag, data) holds a 1st and a 2nd copy of the data at PA, one tagged VA1 and one tagged VA2]

Two virtual pages share one physical page

Virtual cache can have two copies of same physical data. Writes to one copy are not visible to reads of the other!

General Solution: Disallow aliases to coexist in cache

OS Software solution for direct-mapped cache

VAs of shared pages must agree in cache index bits; this ensures all VAs accessing same PA will conflict in direct-mapped cache

-


Address Translation during Indexing

To look up a cache, we can distinguish between two tasks

Indexing the cache: physical or virtual address can be used

Comparing tags: physical or virtual address can be used

Virtual caches eliminate address translation for a hit

However, they cause many problems (protection, flushing, and aliasing)

Best combination for an L1 cache

Index the cache using virtual address

Address translation can start concurrently with indexing

The page offset is the same in both virtual and physical address

Part of the page offset can be used for indexing, which limits cache size

Compare tags using physical address

Ensure that each cache block is given a unique physical address

-


Concurrent Access to TLB & Cache

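Index L is available without consulting the TLB

Cache and TLB accesses can begin simultaneously

Tag comparison is made after both accesses are completed

Cases: L ≤ k-b, L > k-b (aliasing problem)

[Figure: the VA splits into the VPN and a k-bit page offset; the low b bits are the block offset and the next L bits index a direct-mapped cache of 2^L blocks of 2^b bytes each. The TLB translates the VPN to the PPN, which is compared with the physical tag read from the cache to decide hit or miss]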

-


Problem with L1 Cache size > Page size

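Virtual index now uses the lower a bits of VPN

VA1 and VA2 can map to same PPN

Aliasing problem: index bits = L > k-b

Can the OS ensure that lower a bits of VPN are same in PPN?

[Figure: the VA splits into the VPN (whose low a bits join the index) and a k-bit page offset with a b-bit block offset. The L-bit virtual index selects an entry in the direct-mapped L1 cache, where VA1 and VA2 can hold the same PPN and data in two different entries; the TLB supplies the PPN for the tag comparison]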

-


Anti-Aliasing with Higher Associativity

Set Associative Organization

[Figure: a virtual index of L = k-b bits selects one set across 2^a ways of 2^L blocks each; the TLB translates the VPN to the PPN, and the 2^a physical tags are compared against it in parallel to decide hit or miss]

Using higher associativity: cache size > page size

2^a physical tags are compared in parallel

Cache size = 2^a × 2^L × 2^b > page size (2^k bytes)
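The size relation above says that a virtually indexed, physically tagged cache stays alias-free as long as one way fits within a page (L + b ≤ k). A small sketch of that check follows; the function names are hypothetical and sizes are in bytes.

```c
#include <stdint.h>

/* Returns 1 when index and block-offset bits fit inside the page offset,
   i.e. when one way (cache_bytes / ways) is no larger than a page. */
static int vipt_alias_free(uint64_t cache_bytes, uint32_t ways,
                           uint64_t page_bytes) {
    return cache_bytes / ways <= page_bytes;
}

/* Largest alias-free cache for a given associativity and page size:
   2^a ways times 2^k bytes per way. */
static uint64_t vipt_max_cache_bytes(uint32_t ways, uint64_t page_bytes) {
    return (uint64_t)ways * page_bytes;
}
```

With 4 KB pages, an 8-way cache can therefore reach 32 KB without aliasing, while a 64 KB two-way cache cannot rely on this property alone.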

-

Anti-Aliasing via Second Level Cache

Usually a common L2 cache backs up both Instruction and Data L1 caches

L2 is typically inclusive of both Instruction and Data caches

[Figure: CPU with register file (RF), L1 instruction cache, and L1 data cache, backed by a unified L2 cache and memory]

-


Anti-Aliasing Using L2: MIPS R10000

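[Figure: the VA splits into the VPN (low a bits used in the index) and a k-bit page offset with a b-bit block offset. The L-bit index selects an entry in the direct-mapped L1 cache, where VA1 and VA2 could hold the same PPN and data; the TLB supplies the PPN for the tag comparison, and the PA indexes a direct-mapped L2 that holds the PPN and data]

Suppose VA1 and VA2 (VA1 ≠ VA2) both map to the same PPN and VA1 is already in L1, L2

After VA2 is resolved to PA, a collision will be detected in L2

VA1 will be purged from L1 and L2, and VA2 will be loaded, so there is no aliasing!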

-


Avoiding address translation time

-

Virtually indexed, physically tagged

-

Small and Simple First Level Caches

Small and simple first level caches

Critical timing path:

addressing tag memory, then

comparing tags, then

selecting correct set

Direct-mapped caches can overlap tag compare and transmission of data

Lower associativity reduces power because fewer cache lines are accessed

1st Optimization

-


L1 Size and Associativity

Access time vs. size and associativity

1st Optimization

-


L1 Size and Associativity

Energy per read vs. size and associativity

1st Optimization

-


Way Prediction

To improve hit time, predict the way to pre-set mux

Only a single tag comparison is performed

Miss results in checking the other blocks for matches in the next clock cycle (longer miss penalty)

Prediction accuracy

> 90% for two-way, > 80% for four-way

I-cache has better accuracy than D-cache

Used on ARM Cortex-A8

Way selection

Extend to predict block as well

Increases mis-prediction penalty

2nd Optimization

-


Pipelined Cache Access

Pipeline cache access to improve bandwidth

Examples:

Pentium: 1 cycle

Pentium Pro through Pentium III: 2 cycles

Pentium 4 through Core i7: 4 cycles

Increases branch mis-prediction penalty

Makes it easier to increase associativity

3rd Optimization


-


Non-blocking Caches

Allows the CPU to continue executing instructions while a miss is handled

Some processors allow only 1 outstanding miss ("hit under miss")

Some processors allow multiple misses outstanding ("miss under miss")

Can be used with both in-order and out-of-order processors

In-order processors stall when an instruction that uses the load data is the next instruction to be executed (non-blocking loads)

Out-of-order processors can execute instructions after the load consumer

4th Optimization

-


Miss Status Handling Register

Also called miss buffer

Keeps track of

Outstanding cache misses

Pending load/store accesses that refer to the missing cache block

Fields of a single MSHR

Valid bit

Cache block address (to match incoming accesses)

Control/status bits (prefetch, issued to memory, which subblocks have arrived, etc.)

Data for each subblock

For each pending load/store

Valid, type, data size, byte in block, destination register or store buffer entry address

4th Optimization

-


Miss Status Handling Register 4th Optimization

-


MSHR Operation

On a cache miss:

Search MSHR for a pending access to the same block

Found: Allocate a load/store entry in the same MSHR entry

Not found: Allocate a new MSHR

No free entry: stall

When a subblock returns from the next level in memory

Check which loads/stores are waiting for it

Forward data to the load/store unit

Deallocate load/store entry in the MSHR entry

Write subblock in cache or MSHR

If last subblock, deallocate MSHR (after writing the block in cache)
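The miss and fill steps above can be sketched as follows. This is a simplified model under stated assumptions: the whole block returns at once (no subblocks), the table sizes `NUM_MSHR` and `MAX_TARGETS` are arbitrary, and all type and function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_MSHR    4   /* hypothetical number of MSHR entries */
#define MAX_TARGETS 4   /* hypothetical pending loads/stores per entry */

/* One pending load or store waiting on a missing block. */
typedef struct {
    bool    valid;
    bool    is_store;
    uint8_t byte_in_block;
    uint8_t dest;           /* destination register or store buffer slot */
} mshr_target;

typedef struct {
    bool        valid;
    uint64_t    block_addr; /* matched against incoming misses */
    mshr_target targets[MAX_TARGETS];
} mshr_entry;

typedef enum { MSHR_MERGED, MSHR_ALLOCATED, MSHR_STALL } mshr_result;

/* Store t in the first free target slot; false if the entry is full. */
static bool add_target(mshr_entry *e, mshr_target t) {
    for (int i = 0; i < MAX_TARGETS; i++) {
        if (!e->targets[i].valid) {
            t.valid = true;
            e->targets[i] = t;
            return true;
        }
    }
    return false;
}

/* On a cache miss: merge into a pending entry for the same block,
   otherwise allocate a new entry, otherwise stall. */
static mshr_result mshr_on_miss(mshr_entry file[NUM_MSHR],
                                uint64_t block_addr, mshr_target t) {
    mshr_entry *free_entry = NULL;
    for (int i = 0; i < NUM_MSHR; i++) {
        if (file[i].valid && file[i].block_addr == block_addr)
            return add_target(&file[i], t) ? MSHR_MERGED : MSHR_STALL;
        if (!file[i].valid && free_entry == NULL)
            free_entry = &file[i];
    }
    if (free_entry == NULL)
        return MSHR_STALL;
    free_entry->valid = true;
    free_entry->block_addr = block_addr;
    for (int i = 0; i < MAX_TARGETS; i++)
        free_entry->targets[i].valid = false;
    add_target(free_entry, t);
    return MSHR_ALLOCATED;
}

/* When the block returns: release every waiting load/store, free the
   entry, and report how many targets were served (0 if no entry matched). */
static int mshr_on_fill(mshr_entry file[NUM_MSHR], uint64_t block_addr) {
    for (int i = 0; i < NUM_MSHR; i++) {
        if (file[i].valid && file[i].block_addr == block_addr) {
            int served = 0;
            for (int t = 0; t < MAX_TARGETS; t++) {
                if (file[i].targets[t].valid) {
                    file[i].targets[t].valid = false;
                    served++;
                }
            }
            file[i].valid = false;
            return served;
        }
    }
    return 0;
}
```

A fuller model would track which subblocks have arrived and forward data per target, as the field list on the earlier slide suggests.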

4th Optimization

-


Non-blocking Caches


4th Optimization

-


Multibanked Caches

Organize cache as independent banks to support simultaneous access

ARM Cortex-A8 supports 1-4 banks for L2

Intel i7 supports 4 banks for L1 and 8 banks for L2

Interleave banks according to block address

Works best when accesses naturally spread across banks
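Interleaving by block address can be sketched in one line: consecutive blocks land in consecutive banks. The helper name `bank_of` is hypothetical, and sequential interleaving is assumed.

```c
#include <stdint.h>

/* Bank that holds the block containing addr, assuming sequential
   interleaving: bank = (block address) MOD (number of banks). */
static uint32_t bank_of(uint64_t addr, uint32_t block_bytes,
                        uint32_t num_banks) {
    return (uint32_t)((addr / block_bytes) % num_banks);
}
```

With 64-byte blocks and 4 banks, addresses 0, 64, 128, and 192 map to banks 0, 1, 2, and 3, so a sequential stream spreads evenly across the banks.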

5th Optimization

-


Critical Word First, Early Restart

Critical word first

Request missed word from memory first

Send it to the processor as soon as it arrives

Early restart

Request words in normal order

Send missed word to the processor as soon as it arrives

Effectiveness of these strategies depends on block size and likelihood of another access to the portion of the block that has not yet been fetched

6th Optimization

-


Merging Write Buffer

When storing to a block that is already pending in the write buffer, update write buffer

Reduces stalls due to full write buffer

Multiword writes are usually faster than writes performed one word at a time

[Figure: write buffer contents with no write merging versus with write merging]

7th Optimization

-


Compiler Optimizations

Loop Interchange

Swap nested loops to access memory in sequential order

Blocking

Instead of accessing entire rows or columns, subdivide matrices into blocks

Requires more memory accesses but improves locality of accesses
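The blocking slide itself survives only as a title, so the following is a sketch of the usual textbook example rather than the slide's own code: a blocked matrix multiply `x += y * z`. The matrix size `N`, the blocking factor `B`, and the function names are assumptions chosen for illustration.

```c
#define N 8   /* hypothetical matrix dimension */
#define B 4   /* hypothetical blocking factor */

static int min_int(int a, int b) { return a < b ? a : b; }

/* Blocked x += y * z. The jj/kk loops walk B-wide tiles so that the
   tile of z being reused stays in the cache; x must start zeroed to
   obtain the plain product. */
static void matmul_blocked(double x[N][N], double y[N][N], double z[N][N]) {
    for (int jj = 0; jj < N; jj += B)
        for (int kk = 0; kk < N; kk += B)
            for (int i = 0; i < N; i++)
                for (int j = jj; j < min_int(jj + B, N); j++) {
                    double r = 0.0;
                    for (int k = kk; k < min_int(kk + B, N); k++)
                        r += y[i][k] * z[k][j];
                    x[i][j] += r;   /* accumulate the partial sum per tile */
                }
}
```

Each element of `x` is now updated once per `kk` tile instead of once overall, which is the extra memory traffic the slide mentions; the payoff is that the working set shrinks to roughly one tile of `z` plus a strip of `y`.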

8th Optimization

-


Loop Interchange

Swap nested loops to access memory in sequential order

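    /* Before: the inner loop varies i, so consecutive accesses are a
       full row (100 elements) apart in memory */
    for (k = 0; k < 100; k = k+1)
        for (j = 0; j < 100; j = j+1)
            for (i = 0; i < 5000; i = i+1)
                x[i][j] = 2 * x[i][j];

    /* After: the inner loop varies j, so consecutive accesses touch
       adjacent elements of the same row */
    for (k = 0; k < 100; k = k+1)
        for (i = 0; i < 5000; i = i+1)
            for (j = 0; j < 100; j = j+1)
                x[i][j] = 2 * x[i][j];

[Figure: access order over the i and j axes before and after interchange, with address labels 0x0000, 0x4E20, 0x9C40, 0xEA60]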

8th Optimization

-


Blocking 8th Optimization

-


8th Optimization

-


Hardware Prefetching

Fetch two blocks on miss (include next sequential block)

Prefetched block is placed into the instruction stream buffer

9th Optimization

-


Hardware Prefetching

Instruction Prefetching

Typically, CPU fetches 2 blocks on a miss: the requested block and the next consecutive block

Requested block is placed in instruction cache when it returns, and prefetched block is placed into instruction stream buffer

Data Prefetching

Pentium 4 can prefetch data into L2 cache from up to 8 streams from 8 different 4 KB pages

Prefetching is invoked if there are 2 successive L2 cache misses to a page and the distance between those cache blocks is < 256 bytes

-


Issues in Prefetching

Usefulness: should produce hits

Timeliness: not late and not too early

Cache and bandwidth pollution

[Figure: prefetched data flows from the unified L2 cache into the L1 data and L1 instruction caches of the CPU (with register file, RF)]

-


Hardware Instruction Prefetching

Instruction prefetch in Alpha AXP 21064

Fetch two blocks on a miss: the requested block (i) and the next consecutive block (i+1)

Requested block placed in cache, and next block in instruction stream buffer

If miss in cache but hit in stream buffer, move stream buffer block into cache and prefetch next block (i+2)

[Figure: the unified L2 cache supplies the requested block to the L1 instruction cache and the prefetched instruction block to the stream buffer, both in front of the CPU and register file (RF)]

-


Hardware Data Prefetching

Prefetch-on-miss:

Prefetch b + 1 upon miss on b

One Block Lookahead (OBL) scheme

Initiate prefetch for block b + 1 when block b is accessed

Why is this different from doubling block size?

Can extend to N block lookahead

Strided prefetch

If a sequence of accesses to blocks b, b+N, b+2N is observed, then prefetch b+3N, etc.

Example

IBM Power 5 supports 8 independent streams of strided prefetch per processor, prefetching 12 lines ahead of current access
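A strided prefetcher of the kind described above can be sketched as a small state machine that waits until the same stride is seen twice in a row. This is an illustrative model, not the Power 5 design: the `stride_detector` type, the single-stream limit, and the two-observation threshold are assumptions.

```c
#include <stdbool.h>
#include <stdint.h>

/* State for one access stream, in units of cache blocks. */
typedef struct {
    uint64_t last_block;     /* most recent block number seen */
    int64_t  stride;         /* last observed stride */
    int      confirmations;  /* times the same stride repeated in a row */
    bool     primed;         /* false until the first access arrives */
} stride_detector;

/* Observe an access to `block`. Returns true and sets *prefetch_block
   once a nonzero stride has repeated, i.e. after b, b+N, b+2N the
   function proposes b+3N. */
static bool stride_observe(stride_detector *d, uint64_t block,
                           uint64_t *prefetch_block) {
    bool issue = false;
    if (d->primed) {
        int64_t s = (int64_t)(block - d->last_block);
        if (s != 0 && s == d->stride) {
            d->confirmations++;
        } else {
            d->stride = s;
            d->confirmations = 0;
        }
        if (d->confirmations >= 1) {
            *prefetch_block = block + (uint64_t)d->stride;
            issue = true;
        }
    }
    d->last_block = block;
    d->primed = true;
    return issue;
}
```

Setting the stride to 1 reduces this to the one-block-lookahead scheme; prefetching several strides ahead would extend it toward the N-block lookahead mentioned above.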

-

Hardware Prefetching

Fetch two blocks on miss (include next sequential block)

[Figure: Pentium 4 prefetching]

9th Optimization

-


Compiler Prefetching

Insert prefetch instructions before data is needed

Non-faulting: prefetch doesn't cause exceptions

Register prefetch

Loads data into register

Cache prefetch

Loads data into cache

Combine with loop unrolling and software pipelining

10th Optimization

-


Compiler Prefetching

Insert prefetch instructions before data is needed

Timing is the biggest issue, not predictability

If you prefetch very close to when the data is required, you might be too late

Prefetch too early and you cause pollution

Estimate how long it will take for the data to come into L1, so we can set P appropriately
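A cache prefetch inserted ahead of use might look like the sketch below. It assumes P denotes the prefetch distance in loop iterations and uses the GCC/Clang builtin `__builtin_prefetch`, which is a non-faulting hint; the value 16 and the function name are placeholders, not tuned numbers.

```c
#include <stddef.h>

#define PREFETCH_DISTANCE 16  /* hypothetical P, in array elements */

/* Sum an array while requesting data P elements ahead of the current
   index. The prefetch is only a hint and never changes the result. */
static double sum_with_prefetch(const double *a, size_t n) {
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) {
        if (i + PREFETCH_DISTANCE < n)
            __builtin_prefetch(&a[i + PREFETCH_DISTANCE], 0, 3); /* read, keep in cache */
        sum += a[i];
    }
    return sum;
}
```

In practice P would be chosen as roughly the miss latency divided by the time per loop iteration, and the loop would be unrolled so that one prefetch is issued per cache block rather than per element.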

10th Optimization

-


Software Prefetching Data

Data Prefetch

Load data into register (HP PA-RISC loads)

Cache Prefetch: load into cache

(MIPS IV, PowerPC, SPARC v. 9)

Special prefetching instructions cannot cause faults; a form of speculative execution

Issuing prefetch instructions takes time

Is cost of prefetch issues < savings in reduced misses?

Wider superscalar issue reduces the difficulty of issue bandwidth

-


Summary

-


Memory Technology

Performance metrics

Latency is the concern of caches

Bandwidth is the concern of multiprocessors and I/O

Access time

Time between read request and when desired word arrives

Cycle time

Minimum time between unrelated requests to memory

DRAM used for main memory, SRAM used for cache

-


Memory Technology

SRAM

Requires low power to retain bit

Requires 6 transistors/bit

DRAM

Must be re-written after being read

Must also be periodically refreshed

Every ~ 8 ms

Each row can be refreshed simultaneously

One transistor/bit

Address lines are multiplexed:

Upper half of address: row access strobe (RAS)

Lower half of address: column access strobe (CAS)

-


Memory Technology

Amdahl:

Memory capacity should grow linearly with processor speed

Unfortunately, memory capacity and speed have not kept pace with processors

Some optimizations:

Multiple accesses to same row

Synchronous DRAM

Added clock to DRAM interface

Burst mode with critical word first

Wider interfaces

Double data rate (DDR)

Multiple banks on each DRAM device

-


Memory Optimizations

-


Memory Optimizations

-


Memory Optimizations

DDR (Double Data Rate)

DDR2

Lower power (2.5 V -> 1.8 V)

Higher clock rates (266 MHz, 333 MHz, 400 MHz)

DDR3

1.5 V

800 MHz

DDR4

1-1.2 V

1600 MHz

GDDR5 is graphics memory based on DDR3

-


Memory Optimizations

Graphics memory

Achieve 2-5 X bandwidth per DRAM vs. DDR3

Wider interfaces (32 vs. 16 bit)

Higher clock rate

Possible because they are attached via soldering instead of socketed DIMM modules

Reducing power in SDRAMs

Lower voltage

Low power mode (ignores clock, continues to refresh)

-


Memory Power Consumption

-


Flash Memory

Type of EEPROM

Must be erased (in blocks) before being overwritten

Non volatile

Limited number of write cycles

Cheaper than SDRAM, more expensive than disk

Slower than SRAM, faster than disk

-


Memory Dependability

Memory is susceptible to cosmic rays

Soft errors: dynamic errors

Detected and fixed by error correcting codes (ECC)

Hard errors: permanent errors

Use spare rows to replace defective rows

Chipkill: a RAID-like error recovery technique

-


Virtual Memory

Protection via virtual memory

Keeps processes in their own memory space

Role of architecture:

Provide user mode and supervisor mode

Protect certain aspects of CPU state

Provide mechanisms for switching between user mode and supervisor mode

Provide mechanisms to limit memory accesses

Provide TLB to translate addresses

-


Virtual Memory Concepts

What is Virtual Memory?

Uses disk as an extension to memory system

Main memory acts like a cache to hard disk

Each process has its own virtual address space

Page: a virtual memory block

Page fault: a memory miss

Page is not in main memory, so the page is transferred from disk to memory

Address translation:

CPU and OS translate virtual addresses to physical addresses

Page Size:

Size of a page in memory and on disk

Typical page size = 4 KB to 16 KB

-


Virtual Memory Concepts

A program's address space is divided into pages

All pages have the same fixed size (simplifies their allocation)

Pages are either in main memory or in secondary storage (hard disk)

[Figure: pages of the Program 1 and Program 2 virtual address spaces mapped into main memory]

-


Issues in Virtual Memory

Page Size

Small page sizes ranging from 4KB to 16KB are typical today

Large page size can be 1MB to 4MB (reduces page table size)

Recent processors support multiple page sizes

Placement Policy and Locating Pages

Fully associative placement is typical to reduce page faults

Pages are located using a structure called a page table

Page table maps virtual pages onto physical page frames

Handling Page Faults and Replacement Policy

Page faults are handled in software by the operating system

Replacement algorithm chooses which page to replace in memory

Write Policy

Write-through will not work, since writes take too long

Instead, virtual memory systems use write-back

-

Three Advantages of Virtual Memory

Memory Management

Programs are given contiguous view of memory

Pages have the same size, which simplifies memory allocation

Physical page frames need not be contiguous

Only the Working Set of program must be in physical memory

Stacks and Heaps can grow

Use only as much physical memory as necessary

Protection

Different processes are protected from each other

Different pages can be given special behavior (read only, etc)

Kernel data protected from User programs

Protection against malicious programs

Sharing

Can map same physical page to multiple users (shared memory)

-


Page Table and Address Mapping

Page Table Register contains the address of the page table

Page Table maps virtual page numbers to physical frames

Virtual page number is used as an index into the page table

Page Table Entry (PTE): describes the page and its usage

[Figure: a 32-bit virtual address (bits 31 to 0) splits into a 20-bit virtual page number and a 12-bit page offset. The page table register locates the page table; the virtual page number indexes it to read a valid bit and an 18-bit physical page number (if valid is 0, the page is not present in memory). The physical address (bits 29 to 0) is the physical page number followed by the unchanged page offset]

-


Page Table (cont'd)

Each process has a page table

The page table defines the address space of a process

Address space: set of page frames that can be accessed

Page table is stored in main memory

Can be modified only by the Operating System

Page table register

Contains the physical address of the page table in memory

Processor uses this register to locate page table

Page table entry

Contains information about a single page

Valid bit specifies whether page is in physical memory

Physical page number specifies the physical page address

Additional bits are used to specify protection and page use

-

Size of the Page Table

One-level table is simplest to implement

Each page table entry is typically 4 bytes

With 4 KB pages and a 32-bit virtual address space, we need:

$2^{32} / 2^{12} = 2^{20}$ entries × 4 bytes = 4 MB

With 4 KB pages and a 48-bit virtual address space, we need:

$2^{48} / 2^{12} = 2^{36}$ entries × 4 bytes = $2^{38}$ bytes = 256 GB!

Cannot keep whole page table in memory!

Most of the virtual address space is unused

[Figure: 32-bit virtual address = virtual page number (20 bits) + page offset (12 bits)]

-


Reducing the Page Table Size

Use a limit register to restrict the size of the page table

If virtual page number > limit register, then page is not allocated

Requires that the address space expand in only one direction

Divide the page table into two tables with two limits

One table grows from lowest address up and used for the heap

One table grows from highest address down and used for stack

Does not work well when the address space is sparse

Use a Multiple-Level (Hierarchical) Page Table

Allows the address space to be used in a sparse fashion

Sparse allocation without the need to allocate the entire page table

Primary disadvantage is multiple level address translation

-

Multi-Level Page Table

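[Figure: two-level page table. The 32-bit virtual address splits into p1 (bits 31 to 22, 10-bit L1 index), p2 (bits 21 to 12, 10-bit L2 index), and offset (bits 11 to 0). A processor register holds the root of the current page table; p1 selects an entry in the level 1 page table, which points to a level 2 page table; p2 selects the PTE for the data page. A PTE may refer to a page in primary memory, a page in secondary memory, or a nonexistent page]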
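The two-level walk in this figure can be sketched in C using the 10/10/12 bit split shown. The table types and the `translate` function are hypothetical; a real PTE would also carry protection and usage bits, and a failed lookup would raise a page fault rather than return a flag.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define OFFSET_BITS 12           /* page offset: bits 11 to 0 */
#define L2_BITS     10           /* p2: bits 21 to 12 */
#define ENTRIES     (1u << 10)   /* entries per table at either level */

typedef struct { bool valid; uint32_t ppn; } pte;
typedef struct { pte entries[ENTRIES]; } l2_table;
/* A NULL pointer means no level 2 table is allocated for that region. */
typedef struct { l2_table *tables[ENTRIES]; } l1_table;

/* Walk the two levels. Returns false (a page fault in a real system)
   when the level 2 table is missing or the PTE is not valid. */
static bool translate(const l1_table *root, uint32_t va, uint32_t *pa) {
    uint32_t p1     = va >> (L2_BITS + OFFSET_BITS);
    uint32_t p2     = (va >> OFFSET_BITS) & (ENTRIES - 1);
    uint32_t offset = va & ((1u << OFFSET_BITS) - 1);

    const l2_table *t = root->tables[p1];
    if (t == NULL)
        return false;
    pte e = t->entries[p2];
    if (!e.valid)
        return false;
    *pa = (e.ppn << OFFSET_BITS) | offset;
    return true;
}
```

Only the level 1 table and the level 2 tables that are actually in use need to exist, which is how the hierarchy supports a sparse address space.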

-

Variable-Sized Page Support

[Figure: two-level page table with variable-sized pages. The 32-bit virtual address splits into p1 (bits 31 to 22, 10-bit L1 index), p2 (bits 21 to 12, 10-bit L2 index), and offset (bits 11 to 0). A processor register holds the root of the current page table. A level 1 entry either points to a level 2 page table or maps a 4 MB large page in primary memory directly. A PTE may refer to a page in primary memory, a page in secondary memory, or a nonexistent page]

-


Hashed Page Table

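[Figure: hashed page table. The VPN of the virtual address, together with the PID, is hashed to an index; base of table + index gives the PA of the PTE. Each entry in the table holds VPN, PID, PPN and a link to the next entry in its chain.]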

One table for all processes

Table is only a small fraction of memory

Number of entries is 2 to 3 times the number of page frames, to reduce collision probability

A hash function is used for address translation

Search through a chain of page table entries
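A minimal sketch of a hashed page table follows. A Python list per bucket stands in for the chain built from link fields, and Python's built-in `hash` is an arbitrary stand-in for the hardware hash function; the class and method names are illustrative.

```python
class HashedPageTable:
    """One table shared by all processes, keyed by (PID, VPN)."""

    def __init__(self, num_buckets):
        self.buckets = [[] for _ in range(num_buckets)]

    def _index(self, pid, vpn):
        return hash((pid, vpn)) % len(self.buckets)

    def insert(self, pid, vpn, ppn):
        self.buckets[self._index(pid, vpn)].append((pid, vpn, ppn))

    def lookup(self, pid, vpn):
        # Search the chain for an entry whose PID and VPN both match.
        for e_pid, e_vpn, e_ppn in self.buckets[self._index(pid, vpn)]:
            if e_pid == pid and e_vpn == vpn:
                return e_ppn
        return None  # no mapping: page fault


table = HashedPageTable(num_buckets=8)
table.insert(pid=1, vpn=5, ppn=42)
table.insert(pid=2, vpn=5, ppn=43)   # same VPN, different process
print(table.lookup(1, 5), table.lookup(2, 5), table.lookup(3, 5))
```

Sizing the table at 2 to 3 times the number of page frames keeps the chains short, so most lookups touch one entry.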

-

Handling a Page Fault

Page fault: requested page is not in memory

The missing page is located on disk or created

Page is brought from disk and the page table is updated

Another process may run on the CPU while the first process waits for the requested page to be read from disk

If no free pages are left, a page is swapped out

Pseudo-LRU replacement policy

Reference bit in each page table entry

Each time a page is accessed, set reference bit = 1

OS periodically clears the reference bits; a page whose bit is still 0 has not been used recently and is a candidate for replacement

Page faults are handled completely in software by the OS

It takes milliseconds to transfer a page from disk to memory
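The reference-bit policy can be sketched as below. The class name, the dict of bits, and the fallback when every page was recently used are assumptions for illustration; a real OS keeps these bits in the page table entries.

```python
class ReferenceBits:
    """Tracks one reference bit per resident page."""

    def __init__(self):
        self.ref = {}                 # page -> reference bit (0 or 1)

    def access(self, page):
        self.ref[page] = 1            # set on every access

    def clear_all(self):
        for page in self.ref:         # done periodically by the OS
            self.ref[page] = 0

    def pick_victim(self):
        # Prefer a page that has not been touched since the last clear.
        for page, bit in self.ref.items():
            if bit == 0:
                return page
        # Assumed fallback: every page was used recently, take the first.
        return next(iter(self.ref))


pages = ReferenceBits()
for p in ("a", "b", "c"):
    pages.access(p)
pages.clear_all()
pages.access("b")
print(pages.pick_victim())
```

This only approximates LRU: it separates recently used pages from the rest but does not order them.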

-

Write Policy

Write through does not work

Takes millions of processor cycles to write to disk

Write back

Individual writes are accumulated into a page

The page is copied back to disk only when the page is replaced

Dirty bit

1 if the page has been written

0 if the page never changed
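A small sketch of write-back with a dirty bit follows. The `Page` class, the 4096-byte page size and the dict used as a stand-in for the disk are illustrative assumptions.

```python
PAGE_SIZE = 4096  # assumed 4K pages


class Page:
    def __init__(self):
        self.data = bytearray(PAGE_SIZE)
        self.dirty = False            # 0: the page never changed


def write_byte(page, offset, value):
    page.data[offset] = value
    page.dirty = True                 # 1: the page has been written


def evict(page, disk, page_number):
    """Copy the page back to disk only if it was modified.
    Returns True when a disk write was needed."""
    if page.dirty:
        disk[page_number] = bytes(page.data)
        page.dirty = False
        return True
    return False


disk = {}
clean, modified = Page(), Page()
write_byte(modified, 0, 0xAB)
print(evict(clean, disk, 1), evict(modified, disk, 2))
```

Many writes to the same page cost a single disk transfer at replacement time, and clean pages cost none.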

-

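[Figure: the CPU core sends a VA to the TLB; the TLB sends the PA to the cache; on a hit the cache returns data to the core, on a miss the request goes to main memory.]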

TLB = Translation Lookaside Buffer

Address translation is very expensive

Must translate virtual memory address on every memory access

With a multilevel page table, each translation takes several memory accesses

Solution: TLB for address translation

Keep track of most common translations in the TLB

TLB = Cache for address translation

-

Translation Lookaside Buffer

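[Figure: the virtual address is split into VPN and offset. The VPN is compared against the VPN tags in the TLB (hit?); on a hit the matching entry supplies the PPN, and the physical address is PPN followed by the unchanged offset.]

(VPN = virtual page number)

(PPN = physical page number)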

TLB hit: Fast single cycle translation

TLB miss: Slow page table translation

Must update TLB on a TLB miss
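The hit and miss paths can be sketched as a small cache in front of the page table. The LRU eviction, the flat dict used as the page table, and all names here are assumptions for illustration; real TLBs are hardware structures with their own replacement policies.

```python
from collections import OrderedDict

OFFSET_BITS = 12


class TLB:
    def __init__(self, capacity, page_table):
        self.capacity = capacity
        self.page_table = page_table      # vpn -> ppn (slow path)
        self.entries = OrderedDict()      # cached vpn -> ppn

    def translate(self, va):
        """Return (physical_address, hit)."""
        vpn = va >> OFFSET_BITS
        offset = va & ((1 << OFFSET_BITS) - 1)
        if vpn in self.entries:                   # TLB hit: fast path
            self.entries.move_to_end(vpn)
            return (self.entries[vpn] << OFFSET_BITS) | offset, True
        ppn = self.page_table[vpn]                # TLB miss: page table
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)      # evict the LRU entry
        self.entries[vpn] = ppn                   # update TLB on a miss
        return (ppn << OFFSET_BITS) | offset, False


tlb = TLB(capacity=2, page_table={0: 5, 1: 6, 2: 7})
print(tlb.translate(0x0010))   # first touch of page 0: miss
print(tlb.translate(0x0020))   # same page again: hit
```

The offset bits pass through untouched; only the page number is translated.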

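[Figure label: each TLB entry also carries the flag bits V, R, W, D (presumably valid, read, write, dirty)]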

-

Address Translation & Protection

Every instruction and data access needs address translation and protection checks

Check whether page is read only, writable, or executable

Check whether it can be accessed by the user or kernel only

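[Figure: the virtual address (Virtual Page No. (VPN) + offset) passes through Address Translation to give the physical address (Physical Page No. (PPN) + offset). In parallel, a Protection Check uses the Kernel/User mode and the Read/Write type of the access. Either step can raise an exception.]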

-

Handling TLB Misses and Page Faults

TLB miss: No entry in the TLB matches a virtual address

TLB miss can be handled in software or in hardware

Look up the page table entry in memory to bring it into the TLB

If the page table entry is valid, then load the entry into the TLB

If the page table entry is invalid, then page fault (page is not in memory)

Handling a Page Fault

Interrupt the active process that caused the page fault

Program counter of instruction that caused page fault must be saved

Instruction causing page fault must not modify registers or memory

Transfer control to the operating system to transfer the page

Later, restart the instruction that caused the page fault

-

Handling a TLB Miss

Software (MIPS, Alpha)

TLB miss causes an exception and the operating system walks the page tables and reloads the TLB

A privileged addressing mode is used to access page tables

Hardware (SPARC v8, x86, PowerPC)

A memory management unit (MMU) walks the page tables and reloads the TLB

If a missing (data or PT) page is encountered during the TLB reloading, the MMU gives up and signals a Page-Fault exception for the original instruction. The page fault is handled by the OS software.

-

Address Translation Summary

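[Figure: flowchart of address translation.

TLB Lookup (hardware): on a hit go to Protection Check; on a miss go to Page Table Walk.

Page Table Walk (hardware or software): if the page is in memory, Update TLB and restart instruction; if the page is not in memory, Page Fault (OS loads page, software) and restart instruction.

Protection Check (hardware): if permitted, Physical Address (to cache); if denied, Protection Fault (software), SEGFAULT.]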
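The flow in this summary can be sketched in one function. The dict-based TLB and page table, the entry fields `present`, `writable` and `ppn`, and the two exception classes are all hypothetical names chosen for illustration. Instead of restarting the instruction after a TLB refill, the sketch simply continues to the protection check, which gives the same result.

```python
class PageFault(Exception):
    pass


class ProtectionFault(Exception):
    pass


def translate(va, is_write, tlb, page_table):
    vpn, offset = va >> 12, va & 0xFFF
    entry = tlb.get(vpn)                       # TLB lookup
    if entry is None:                          # miss: page table walk
        entry = page_table.get(vpn)
        if entry is None or not entry["present"]:
            raise PageFault(vpn)               # OS must load the page
        tlb[vpn] = entry                       # update TLB
    if is_write and not entry["writable"]:     # protection check
        raise ProtectionFault(vpn)             # denied
    return (entry["ppn"] << 12) | offset       # permitted: PA to cache


page_table = {
    1: {"present": True, "writable": False, "ppn": 9},
    2: {"present": False, "writable": True, "ppn": 0},
}
tlb = {}
print(hex(translate(0x1abc, False, tlb, page_table)))
```

A write to page 1 raises `ProtectionFault`, and any access to page 2 raises `PageFault` until the OS marks the page present.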

-

TLB, Page Table, Cache Combinations

TLB  | Page Table | Cache    | Possible?  | Under what circumstances?
Hit  | Hit        | Hit      | Yes        | What we want!
Hit  | Hit        | Miss     | Yes        | Although the page table is not checked if the TLB hits
Miss | Hit        | Hit      | Yes        | TLB miss, PA in page table
Miss | Hit        | Miss     | Yes        | TLB miss, PA in page table, but data is not in cache
Miss | Miss       | Miss     | Yes        | Page fault (page is on disk)
Hit  | Miss       | Hit/Miss | Impossible | TLB translation is not possible if page is not in memory
Miss | Miss       | Hit      | Impossible | Data not allowed in cache if page is not in memory
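The table reduces to one rule: the TLB and the cache may only hold information for pages that are resident in memory. A short sketch, with an illustrative function name, enumerates all eight combinations under that rule.

```python
from itertools import product


def is_possible(tlb_hit, page_table_hit, cache_hit):
    """A page table miss means the page is not in memory, so neither
    the TLB nor the cache can hit for it."""
    if not page_table_hit and (tlb_hit or cache_hit):
        return False
    return True


def label(hit):
    return "Hit " if hit else "Miss"


for tlb_hit, pt_hit, cache_hit in product([True, False], repeat=3):
    verdict = "Yes" if is_possible(tlb_hit, pt_hit, cache_hit) else "Impossible"
    print(label(tlb_hit), label(pt_hit), label(cache_hit), verdict)
```

Five of the eight combinations are possible, matching the rows marked Yes.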

-

Address Translation in CPU Pipeline

Software handlers need restartable exception on page fault

Handling a TLB miss needs a hardware or software mechanism to refill TLB

Need mechanisms to cope with the additional latency of a TLB

Slow down the clock

Pipeline the TLB and cache access

Virtual address caches

Parallel TLB/cache access

[Figure: CPU pipeline with address translation. PC, Inst TLB, Inst. Cache, Decode (D), Execute (E), Memory (M) with Data TLB and Data Cache, Writeback (W). Both the Inst TLB and the Data TLB can signal: TLB miss? Page fault? Protection violation?]

-

Virtual Machines

Supports isolation and security

Sharing a computer among many unrelated users

Enabled by raw speed of processors, making the overhead more acceptable

Allows different ISAs and operating systems to be presented to user programs

System Virtual Machines

SVM software is called a virtual machine monitor or hypervisor

Individual virtual machines that run under the monitor are called guest VMs

-

Impact of VMs on Virtual Memory

Each guest OS maintains its own set of page tables

VMM adds a level of memory, called real memory, between physical and virtual memory

VMM maintains a shadow page table that maps guest virtual addresses directly to physical addresses

Requires the VMM to detect the guest's changes to its own page table

This occurs naturally if accessing the page table pointer is a privileged operation
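A shadow page table can be viewed as the composition of two mappings. The sketch below assumes the guest page table maps guest virtual pages to real pages and the VMM keeps a separate real-to-physical map; the function and variable names are illustrative.

```python
def build_shadow_page_table(guest_page_table, real_to_physical):
    """guest_page_table: guest virtual page -> real page (guest OS view).
    real_to_physical:  real page -> physical page (kept by the VMM).
    Returns guest virtual page -> physical page."""
    shadow = {}
    for guest_vpn, real_page in guest_page_table.items():
        if real_page in real_to_physical:
            shadow[guest_vpn] = real_to_physical[real_page]
    return shadow


guest_pt = {0: 10, 1: 11}           # maintained by the guest OS
vmm_map = {10: 200, 11: 305}        # maintained by the VMM
print(build_shadow_page_table(guest_pt, vmm_map))
```

Whenever the guest edits its page table, the VMM must rebuild the affected shadow entries, which is why it needs to detect those changes.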

-

End of Chapter
