
Cohen’s Effect Size 1

Confidence Intervals for Cohen’s Effect Size

by

James Algina

University of Florida

and

H. J. Keselman

University of Manitoba


Abstract

We investigated coverage probability for confidence intervals for Cohen’s effect size and

a variant of Cohen’s effect size constructed by replacing least squares parameters by robust

parameters (means by 20% trimmed means and variances by Winsorized variances). We

investigated confidence intervals constructed by using the noncentral t distribution and the

percentile bootstrap. Our results indicated that when data are nonnormal, coverage probability

for Cohen’s effect size could be inadequate for both methods of constructing confidence

intervals. Using either method, coverage probability was better for the robust effect size. Over

the range of distributions and effect sizes included in the study, coverage probability was best for

the percentile bootstrap confidence interval.


Confidence Intervals for Cohen’s Effect Size

Since at least the ‘60s, some methodologists (e.g., Cohen, 1965; Hays, 1963) have

recommended reporting an effect size in addition to (or, in some cases, in place of) hypothesis

tests. In perhaps the past 15 years or so, there has been renewed emphasis on reporting effect

sizes (ESs) because of editorial policies requiring ESs (e.g., Murphy, 1997; Thompson, 1994)

and official support for the practice. According to the Publication Manual of the American Psychological Association (2001), “it is almost always necessary to include some index of ES or strength of relationship in your Results section” (p. 25). The practice of reporting ESs has also

received support from the APA Task Force on Statistical Inference (Wilkinson and the Task

Force on Statistical Inference, 1999). An interest in reporting confidence intervals (CIs) for ESs

has accompanied the emphasis on ESs. Cumming and Finch (2001), for example, presented a

primer of CIs for ESs. Bird (2002) presented software for calculating approximate CIs for a wide

variety of ANOVA designs.

One of the most commonly reported ESs is Cohen’s d:

$$d = \frac{\bar{Y}_2 - \bar{Y}_1}{S},$$

where $\bar{Y}_j$ is the mean for the jth level ($j = 1, 2$) and S is the square root of the pooled variance, which we refer to as the pooled standard deviation.1 The number of observations in a level is denoted by $n_j$ ($N = n_1 + n_2$). Cohen’s d estimates

$$\delta = \frac{\mu_2 - \mu_1}{\sigma},$$

where $\mu_j$ is the population mean for the jth level and $\sigma$ is the population standard deviation, assumed to be equal for both levels.


It is known (see, for example, Cumming & Finch, 2001, or Steiger & Fouladi, 1997) that when the sample data are drawn from normal distributions, the variances of the two populations are equal, and the scores are independently distributed, an exact CI for the population ES (i.e., $\delta$) can be constructed by using the noncentral t distribution. Figure 1 presents a central and a noncentral t distribution. The distribution on the right is an example of a noncentral t distribution and is the sampling distribution of the t statistic when $\delta$ is not equal to zero. It has two parameters. The first is the familiar degrees of freedom, which is $N - 2$ in our context. The second is the noncentrality parameter

$$\lambda = \sqrt{\frac{n_1 n_2}{n_1 + n_2}}\,\frac{\mu_2 - \mu_1}{\sigma} = \sqrt{\frac{n_1 n_2}{n_1 + n_2}}\,\delta.$$

The noncentrality parameter controls the location of the noncentral t distribution. In fact, the mean of the noncentral t distribution is approximately equal to $\lambda$ (Hedges, 1981), with the accuracy of the approximation improving as N increases. The central t distribution, the sampling distribution of the t statistic when $\delta = 0$, is the special case of the noncentral t distribution that occurs when $\delta$, and therefore $\lambda$, is zero.

To find a 95% (for example) CI for $\delta$, we first use the noncentral t distribution to find a 95% CI for $\lambda$. Multiplying the limits of the interval for $\lambda$ by $\sqrt{(n_1 + n_2)/(n_1 n_2)}$ then yields a 95% CI for $\delta$. The lower limit of the 95% CI for $\lambda$ is the noncentrality parameter for the noncentral t distribution in which the calculated t statistic

$$t = \sqrt{\frac{n_1 n_2}{n_1 + n_2}}\,\frac{\bar{Y}_2 - \bar{Y}_1}{S}$$

is the .975 quantile. The upper limit of the 95% interval for $\lambda$ is the noncentrality parameter for the noncentral t distribution in which the calculated t statistic is the .025 quantile of the distribution (see Steiger & Fouladi, 1997). Means and standard deviations for an example are provided in Table 1. Calculations show that $d = .97$ and $t = 3.14$. The t statistic, along with two noncentral t distributions, is depicted in Figure 2. As Figure 2 indicates, if $\lambda = 5.21$, then $t = 3.14$ is the .025 quantile. Therefore the upper limit of the CI for $\lambda$ is 5.21. If $\lambda = 1.05$, then $t = 3.14$ is the .975 quantile and the lower limit of the CI for $\lambda$ is 1.05. Multiplying both limits by $\sqrt{(n_1 + n_2)/(n_1 n_2)}$ gives .32 and 1.61 as the lower and upper limits, respectively, of a 95% CI for $\delta$.
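The interval construction described above can be sketched in code. The following Python sketch is an illustration only, not the software used in the study. It relies on the standard library alone, evaluates the noncentral t cumulative distribution function by numerically integrating over the chi-square variable in the denominator of t (an implementation choice made here), and finds each limit for $\lambda$ by bisection. The function names `nct_cdf`, `solve_nc`, and `ci_delta` are hypothetical, and the sketch assumes more than 2 degrees of freedom.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def chi2_pdf(w, df):
    """Chi-square density; assumes df > 2 so the density is 0 at w = 0."""
    if w <= 0.0:
        return 0.0
    log_pdf = ((df / 2 - 1) * math.log(w) - w / 2
               - (df / 2) * math.log(2) - math.lgamma(df / 2))
    return math.exp(log_pdf)


def nct_cdf(t, df, nc, panels=1000):
    """P(T <= t) for a noncentral t variable, using Simpson's rule.

    T = (Z + nc) / sqrt(W / df), so P(T <= t) is the average of
    Phi(t * sqrt(w / df) - nc) over the chi-square density of W.
    """
    upper = df + 12 * math.sqrt(2 * df) + 30  # far into the upper tail of W
    step = upper / panels                     # panels must be even
    total = 0.0
    for k in range(panels + 1):
        w = k * step
        weight = 1 if k in (0, panels) else (4 if k % 2 else 2)
        total += weight * norm_cdf(t * math.sqrt(w / df) - nc) * chi2_pdf(w, df)
    return total * step / 3


def solve_nc(t, df, target, lo=-50.0, hi=50.0):
    """Bisection for the nc at which t is the `target` quantile.

    The CDF at a fixed t decreases as nc increases.
    """
    for _ in range(60):
        mid = (lo + hi) / 2
        if nct_cdf(t, df, mid) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def ci_delta(mean1, mean2, sd1, sd2, n1, n2, level=0.95):
    """Cohen's d, t, and noncentral-t limits for lambda and delta."""
    df = n1 + n2 - 2
    s_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (mean2 - mean1) / s_pooled
    scale = math.sqrt(n1 * n2 / (n1 + n2))
    t = scale * d
    alpha = 1 - level
    nc_lower = solve_nc(t, df, 1 - alpha / 2)  # t is the .975 quantile
    nc_upper = solve_nc(t, df, alpha / 2)      # t is the .025 quantile
    return d, t, (nc_lower, nc_upper), (nc_lower / scale, nc_upper / scale)


# Summary statistics from Table 1 (n1 = n2 = 21)
d, t, nc_limits, delta_limits = ci_delta(28.5, 33.8, 4.6, 6.2, 21, 21)
print(d, t)
print(nc_limits, delta_limits)
```

With the Table 1 values, the limits should come out close to those reported in the text (about 1.05 and 5.21 for $\lambda$, and about .32 and 1.61 for $\delta$). Small differences can arise because the sketch carries the unrounded t statistic.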

The use of the noncentral t distribution is based on the assumption that the data are drawn from normal distributions. If this assumption is violated, there is no guarantee that the actual probability coverage for the interval will match the nominal probability coverage. In addition, as pointed out by Wilcox and Keselman (2003), when data are not normal the usual least squares means and standard deviations can be misleading because these statistics are affected by skewed data and by outliers. A better strategy is to replace the least squares estimators by robust estimators, such as trimmed means and Winsorized variances. To calculate a trimmed mean, simply remove an a priori determined percentage of the observations and compute the mean from the remaining observations. If the target percentage to be removed is $2p$, then the number of observations removed from each tail of the distribution is the integer that is just smaller than $p \times n_j$. Denote this integer by $g_j$. The smallest $g_j$ observations are removed, as are the largest $g_j$ observations. We denote a trimmed mean by $\bar{Y}_{tj}$ and refer to it as the p% trimmed mean. To calculate a Winsorized variance, the smallest non-trimmed score replaces the scores trimmed from the lower tail of the distribution and the largest non-trimmed score replaces the observations removed from the upper tail. The non-trimmed and replaced scores are called Winsorized scores. A Winsorized mean is calculated by applying the usual formula for the mean to the Winsorized scores and a Winsorized variance is calculated as the sum of squared deviations of Winsorized scores from the Winsorized mean divided by $n_j - 1$. The Winsorized variance is used because it can be shown that the standard error of a trimmed mean is a function of the Winsorized variance. We denote a Winsorized variance by $S_{Wj}^2$. A common trimming percentage is 20%. See Wilcox (2003) for a justification of 20% trimming and formulas corresponding to our verbal descriptions of the trimmed mean and Winsorized variance.
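The verbal descriptions of the trimmed mean and Winsorized variance can be illustrated with a short Python sketch. It is a minimal illustration, not the authors' code. The function names are hypothetical, and taking the number trimmed from each tail as the floor of $p \times n_j$ is an assumption about how "the integer that is just smaller than" is meant to be applied.

```python
import math


def trim_count(n, p=0.2):
    # Observations removed from each tail (assumed here: floor of p * n)
    return math.floor(p * n)


def trimmed_mean(scores, p=0.2):
    """Mean of the scores left after dropping g from each tail."""
    ordered = sorted(scores)
    g = trim_count(len(ordered), p)
    kept = ordered[g:len(ordered) - g]
    return sum(kept) / len(kept)


def winsorized_variance(scores, p=0.2):
    """Variance of the Winsorized scores, with divisor n - 1."""
    ordered = sorted(scores)
    n = len(ordered)
    g = trim_count(n, p)
    low, high = ordered[g], ordered[n - g - 1]  # extreme non-trimmed scores
    wins = [min(max(y, low), high) for y in ordered]
    w_mean = sum(wins) / n
    return sum((y - w_mean) ** 2 for y in wins) / (n - 1)


# Ten scores with one outlier; 20% trimming removes two from each tail
scores = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print(trimmed_mean(scores))         # mean of 3, 4, 5, 6, 7, 8
print(winsorized_variance(scores))  # variance of 3, 3, 3, 4, 5, 6, 7, 8, 8, 8
```

In this made-up example the outlier of 100 is replaced by 8 before the variance is computed, which is why the Winsorized variance stays small.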

When trimmed means and Winsorized variances are used, we propose using the ES

$$d_R = \sqrt{.4129}\,\frac{\bar{Y}_{t2} - \bar{Y}_{t1}}{S_W},$$

which is a robust ES.2 In $d_R$, $S_W$ is the square root of the pooled Winsorized variance

$$S_W^2 = \frac{(n_1 - 1) S_{W1}^2 + (n_2 - 1) S_{W2}^2}{N - 2}$$

and .4129 is the population Winsorized variance for a standard normal distribution. The population robust ES is

$$\delta_R = \sqrt{.4129}\,\frac{\mu_{t2} - \mu_{t1}}{\sigma_W},$$

where $\mu_{tj}$ is the population trimmed mean for the jth level and $\sigma_W$ is the square root of the population Winsorized variance, which is assumed to be equal for the two groups. Including $\sqrt{.4129}$ in the definition of the robust effect ensures that $\delta_R = \delta$ when the data are drawn from normal distributions with equal variances. Trimmed means are used in $\delta_R$ because outliers have much less influence on trimmed means than on the usual means. The Winsorized variance is used because the sample Winsorized variance is used in hypothesis testing based on trimmed means. We also thought it was important to investigate the robustness of $\delta_R$ because many authors subscribe to the position that inferences pertaining to robust parameters are more valid than inferences pertaining to the usual least squares parameters when dealing with populations that are nonnormal (e.g., Hampel, Ronchetti, Rousseeuw & Stahel, 1986; Huber, 1981; Staudte & Sheather, 1990).
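A minimal Python sketch of the sample robust ES follows. It is illustrative only. The function names are hypothetical, the trimming count is assumed to be the floor of $p \times n_j$, and the rescaling constant is taken here as the square root of the .4129 quoted above, which applies to 20% trimming.

```python
import math


def trimmed_mean(scores, p=0.2):
    ordered = sorted(scores)
    g = math.floor(p * len(ordered))  # assumed trimming count per tail
    kept = ordered[g:len(ordered) - g]
    return sum(kept) / len(kept)


def winsorized_variance(scores, p=0.2):
    ordered = sorted(scores)
    n = len(ordered)
    g = math.floor(p * n)
    low, high = ordered[g], ordered[n - g - 1]
    wins = [min(max(y, low), high) for y in ordered]
    w_mean = sum(wins) / n
    return sum((y - w_mean) ** 2 for y in wins) / (n - 1)


def robust_d(group1, group2, p=0.2, rescale=math.sqrt(0.4129)):
    """Trimmed-mean difference over the pooled Winsorized SD, rescaled.

    The default `rescale` uses the constant quoted in the text for
    20% trimming; it is not appropriate for other trimming proportions.
    """
    n1, n2 = len(group1), len(group2)
    pooled_w_var = ((n1 - 1) * winsorized_variance(group1, p)
                    + (n2 - 1) * winsorized_variance(group2, p)) / (n1 + n2 - 2)
    diff = trimmed_mean(group2, p) - trimmed_mean(group1, p)
    return rescale * diff / math.sqrt(pooled_w_var)


# Made-up data: group 2 is group 1 shifted up by 2 points
group1 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
group2 = [y + 2 for y in group1]
print(robust_d(group1, group2))
```

Because the outlier is trimmed from the means and Winsorized in the variance, the estimate in this example is driven by the 2-point shift rather than by the extreme score.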

A CI for $\delta_R$ can also be constructed by using the noncentral t distribution. To do so, replace the usual t statistic by the statistic due to Yuen and Dixon (1973),

$$t_R = \sqrt{\frac{h_1 h_2}{h_1 + h_2}}\,\frac{\bar{Y}_{t2} - \bar{Y}_{t1}}{\tilde{S}},$$

where $h_j = n_j - 2 g_j$ and

$$\tilde{S}^2 = \frac{(N - 2)\, S_W^2}{h_1 + h_2 - 2}.$$

Note that $h_j$ is the number of observations remaining after trimming. The degrees of freedom for $t_R$ are $h_1 + h_2 - 2$. Once lower and upper limits of the CI for the noncentrality parameter are found, multiplying by

$$\sqrt{.4129}\,\sqrt{\frac{(h_1 + h_2)(N - 2)}{h_1 h_2 (h_1 + h_2 - 2)}}$$

converts the limits into an interval for $\delta_R$.
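The relation between the Yuen and Dixon statistic and the robust ES can be checked numerically. The Python sketch below is an illustration under stated assumptions: the function name and the summary values passed to it are hypothetical, the constant is taken as the square root of .4129, and the formulas are the ones implied by the definitions of $h_j$, the pooled Winsorized variance, and $d_R$.

```python
import math


def yuen_dixon_summary(n1, n2, g1, g2, w_var1, w_var2, trimmed_diff):
    """Return t_R, its degrees of freedom, the conversion factor, and d_R."""
    big_n = n1 + n2
    h1, h2 = n1 - 2 * g1, n2 - 2 * g2  # observations left after trimming
    # Pooled Winsorized variance
    s2_w = ((n1 - 1) * w_var1 + (n2 - 1) * w_var2) / (big_n - 2)
    # Rescaled variance used in the denominator of t_R
    s2_tilde = (big_n - 2) * s2_w / (h1 + h2 - 2)
    t_r = math.sqrt(h1 * h2 / (h1 + h2)) * trimmed_diff / math.sqrt(s2_tilde)
    df = h1 + h2 - 2
    # Factor that turns limits for the noncentrality parameter into limits for delta_R
    factor = math.sqrt(0.4129) * math.sqrt(
        (h1 + h2) * (big_n - 2) / (h1 * h2 * (h1 + h2 - 2)))
    d_r = math.sqrt(0.4129) * trimmed_diff / math.sqrt(s2_w)
    return t_r, df, factor, d_r


# Hypothetical summary values: n = 20 per group, 20% trimming (g = 4)
t_r, df, factor, d_r = yuen_dixon_summary(20, 20, 4, 4, 1.0, 1.0, 0.5)
print(t_r, df)
print(factor * t_r, d_r)  # the two values agree
```

Multiplying $t_R$ by the conversion factor reproduces $d_R$, which is why the same factor maps limits for the noncentrality parameter onto limits for $\delta_R$.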

Study 1

We investigated the robustness of the noncentral t distribution-based CIs for $\delta$ and $\delta_R$ to sampling from nonnormal distributions.

Method

Probability coverage was estimated for all combinations of the following three factors: population distribution (four cases from the family of g and h distributions), sample size ($n_1 = n_2 = 20$ to 100 in steps of 20), and population ESs $\delta$ and $\delta_R$ (0 and .2 to 1.4 in steps of .3). The nominal confidence level for all intervals was .95 and each condition was replicated 5000 times.

The data were generated from the g and h distribution (Hoaglin, 1985). Specifically, we chose to investigate four g and h distributions: (a) $g = h = 0$, the standard normal distribution ($\gamma_1 = \gamma_2 = 0$); (b) $g = .76$ and $h = -.098$, a distribution with skew and kurtosis equal to that for an exponential distribution ($\gamma_1 = 2$, $\gamma_2 = 6$); (c) $g = 0$ and $h = .225$ ($\gamma_1 = 0$ and $\gamma_2 = 154.84$); and (d) $g = .225$ and $h = .225$ ($\gamma_1 = 4.90$ and $\gamma_2 = 4673.80$). The coefficient $\gamma_1$ is a measure of skew and $\gamma_2$ is a measure of kurtosis. As indicated in the description of the first distribution, a normal distribution has $\gamma_1 = \gamma_2 = 0$. Distributions with positive skew typically have $\gamma_1 > 0$ and distributions with negative skew typically have $\gamma_1 < 0$. Short-tailed distributions, such as a uniform distribution, typically have $\gamma_2 < 0$ and long-tailed distributions, such as a t distribution, typically have $\gamma_2 > 0$. The three nonnormal distributions are quite strongly nonnormal. We selected these because we wanted to find out whether the CIs would work well over a wide range of distributions, not merely with distributions that are nearly normal.

To generate data from a g and h distribution, standard unit normal variables $Z_{ij}$ were converted to g and h distributed random variables via

$$Y_{ij} = \frac{\exp(g Z_{ij}) - 1}{g}\,\exp\!\left(\frac{h Z_{ij}^2}{2}\right)$$

when both g and h were non-zero. When g was zero,

$$Y_{ij} = Z_{ij}\,\exp\!\left(\frac{h Z_{ij}^2}{2}\right).$$
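The two transformations can be sketched in Python as follows. This is a minimal illustration rather than the SAS code used in the study. The function names are hypothetical, and Python's `random.Random.gauss` stands in for the normal generator.

```python
import math
import random


def g_and_h(z, g, h):
    """Transform a standard normal score z into a g-and-h score."""
    tail = math.exp(h * z * z / 2)  # h controls tail heaviness
    if g == 0:
        return z * tail             # symmetric case (g = 0)
    return (math.exp(g * z) - 1) / g * tail  # g controls skew


def sample_g_and_h(n, g, h, rng):
    """Draw n g-and-h scores using the supplied random generator."""
    return [g_and_h(rng.gauss(0.0, 1.0), g, h) for _ in range(n)]


rng = random.Random(2024)  # arbitrary seed, for reproducibility only
sample = sample_g_and_h(20, 0.225, 0.225, rng)
print(min(sample), max(sample))
```

Setting both g and h to zero returns the normal scores unchanged, which matches case (a) of the four distributions.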

The $Z_{ij}$ scores were generated by using RANNOR in SAS (SAS Institute Inc., 1999). For simulated participants in treatment 2, the $Y_{i2}$ scores were transformed to

$$Y_{i2} + \delta\sigma. \qquad (1)$$

These transformed scores were used in the CI for $\delta$. For the CI for $\delta_R$, the $Y_{i2}$ scores were transformed to

$$Y_{i2} + \frac{\delta\,\sigma_W}{\sqrt{.4129}}. \qquad (2)$$

This method of generating the scores in treatment 2 resulted in $\delta_R = \delta$. It should be noted that if we had used equation (1) to generate the scores used to calculate CIs for $\delta_R$, then $\delta_R$ would not have been equal to $\delta$. We also investigated the CI for $\delta_R$ using the $Y_{i2}$ scores obtained by equation (1). In these investigations, $\delta_R \neq \delta$. The general pattern of results was the same in the two sets of conditions.

Results

Estimated coverage probabilities for all conditions in Study 1 are reported in Table 2. When $g = h = 0$, that is, when the data were sampled from normal distributions, both CIs had excellent coverage probability. When the data were sampled from nonnormal distributions, both CIs had excellent coverage probability provided the population ES was equal to or less than .20. However, as the population ES increased, coverage probability became less adequate. This decline in adequacy occurred at smaller values of $\delta$ than of $\delta_R$ and was more extreme for $\delta$ than for $\delta_R$, but clearly both CIs degraded as the population ES increased. Nevertheless, across all distributions, coverage probabilities for the CI on $\delta_R$ were between approximately .93 and .96 when $\delta_R \le .80$ and thus provided reasonable coverage probability under some conditions.

Study 2

The evidence from Study 1 indicates that using the noncentral t distribution for CIs for $\delta$ or $\delta_R$, when sampling from nonnormal distributions, can result in coverage probabilities that are not equal to the nominal confidence coefficient. An alternative procedure for constructing CIs is to use the bootstrap. We investigated using the percentile bootstrap to construct CIs for $\delta$ and $\delta_R$.

Method

We used the same design as in Study 1. The number of bootstrap replications was 600. To apply the percentile bootstrap, the first three of the following steps were completed 600 times within each replication of a condition. First, a sample of size $n_1$ was randomly selected with replacement from the scores for the first group. Second, a sample of size $n_2$ was randomly selected with replacement from the scores for the second group. These two samples were combined to form a bootstrap sample. Third, the ES (i.e., either $\hat{\delta}$ or $\hat{\delta}_R$) was calculated from the bootstrap sample. Fourth, the 600 ES estimates were ranked from low to high. The lower limit of the CI was determined by finding the 15th estimate in the rank order [i.e., the .025(600)th estimate]; the upper limit was determined by finding the 585th estimate [i.e., the .975(600)th estimate].
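The four steps can be sketched in Python. This is a minimal illustration, not the simulation code used in the study. The function names and the data are hypothetical, Cohen's d is used as the example statistic (a robust ES function could be passed instead), and the standard library `random.Random.choices` performs the sampling with replacement.

```python
import math
import random


def cohen_d(group1, group2):
    """Mean difference divided by the pooled standard deviation."""
    n1, n2 = len(group1), len(group2)
    m1, m2 = sum(group1) / n1, sum(group2) / n2
    ss1 = sum((y - m1) ** 2 for y in group1)
    ss2 = sum((y - m2) ** 2 for y in group2)
    return (m2 - m1) / math.sqrt((ss1 + ss2) / (n1 + n2 - 2))


def percentile_bootstrap_ci(group1, group2, statistic,
                            n_boot=600, level=0.95, rng=None):
    """Percentile bootstrap CI for a two-group effect size."""
    rng = rng or random.Random()
    estimates = []
    for _ in range(n_boot):
        boot1 = rng.choices(group1, k=len(group1))  # step 1: resample group 1
        boot2 = rng.choices(group2, k=len(group2))  # step 2: resample group 2
        estimates.append(statistic(boot1, boot2))   # step 3: ES for this sample
    estimates.sort()                                # step 4: rank the estimates
    alpha = 1 - level
    lower_rank = max(round(alpha / 2 * n_boot), 1)  # 15 when n_boot = 600
    upper_rank = round((1 - alpha / 2) * n_boot)    # 585 when n_boot = 600
    return estimates[lower_rank - 1], estimates[upper_rank - 1]


# Made-up data: two groups of 20 scores with a large mean difference
group1 = [float(i) for i in range(1, 21)]
group2 = [y + 30.0 for y in group1]
lower, upper = percentile_bootstrap_ci(group1, group2, cohen_d,
                                       rng=random.Random(1))
print(lower, upper)
```

Passing the statistic as a function keeps the resampling logic separate from the choice of ES, so the same routine serves for either estimate.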

Results

Table 3 contains estimated coverage probabilities for percentile bootstrap intervals for $\delta$ and $\delta_R$ for all conditions in Study 2. The results indicate that the CI for $\delta$ performed much less adequately than did the CI for $\delta_R$. In particular, coverage probability for the former CI could be quite poor when the data were nonnormal. Coverage probabilities for the CI for $\delta_R$ ranged from .942 to .971 and were, therefore, near the nominal .95 value for all distributions. Coverage probability for this interval tended to increase as $\delta_R$ increased, but appeared to be largely unaffected by the distribution.

Additional Comparisons of CIs for $\delta_R$

Comparison of Interval Widths for CIs for $\delta_R$. Study 1 and Study 2 indicated that, when data are nonnormal, probability coverage for $\delta$ can be quite poor for both noncentral t distribution-based CIs and percentile bootstrap CIs. When the data were nonnormal, probability coverage for the noncentral t distribution-based CI for $\delta_R$ was adequate when $\delta_R \le .80$ and was adequate under some conditions when $\delta_R > .80$. Probability coverage for the percentile bootstrap CI for $\delta_R$ was good for all conditions investigated. Since probability coverage for both types of CIs for $\delta_R$ is adequate in some conditions, it is important to compare the width of the two types of intervals. Average widths for the two types are reported in Table 4 and show that, in general, the noncentral t distribution-based CI is narrower. The width advantage for the noncentral t distribution-based CI was larger with smaller sample sizes and larger values of $\delta_R$.

Power. For each condition we determined the proportion of times that the intervals for $\delta_R$ did not contain zero. These proportions estimate the power for tests of the hypothesis $H_0\colon \delta_R = 0$ against the non-directional alternative and are reported in Table 5. Typically, but not always, the CI based on the noncentral t distribution is estimated to have more power than the percentile bootstrap CI. However, the estimated power differences were very small.


Discussion

Although the need to report ESs is becoming more widely acknowledged and interest in confidence intervals for ESs is increasing, little appears to be known about the robustness of CIs for ESs. Our research indicates that noncentral t distribution-based CIs for Cohen’s ES (i.e., $\delta$) and for a robust version of Cohen’s ES (i.e., $\delta_R$) may not have adequate coverage probability when the data are sampled from nonnormal distributions. However, the difference between the nominal confidence level and the empirical coverage probability tended to be much smaller for the CI on $\delta_R$ and, depending on one’s tolerance for this difference, one might regard the coverage probability as adequate, particularly when $\delta_R \le .80$.

As a result of the performance of the noncentral t distribution-based CIs, we investigated whether CIs constructed by using the percentile bootstrap would have adequate coverage probability. The results indicated that percentile bootstrap CIs for $\delta$ might not have adequate coverage probability when data are nonnormal. By contrast, percentile bootstrap CIs for $\delta_R$ had adequate coverage probability for the three nonnormal distributions we investigated. Perhaps most important, the coverage probabilities were adequate for the full range of ESs investigated, rather than just for $\delta_R \le .80$.

Although percentile bootstrap CIs for $\delta_R$ had better coverage probability than did the noncentral t distribution-based CI, the latter confidence interval was shorter. Thus, some might argue that additional simulations are needed to determine the conditions under which the two CIs maintain probability coverage close to the nominal level, in order to provide a basis for selecting the CI most appropriate for their data. Unfortunately, visual inspection of one’s data can be misleading with respect to the degree of nonnormality. In Figure 7.1, Wilcox (2001), for example, provides a graph of a very long-tailed distribution that is almost indistinguishable from a graph of the normal distribution. Estimates of measures of skew and kurtosis can be misleading because these estimates tend to have large standard errors unless the sample size is very large. In addition, our results suggest that the size of $\delta_R$, in part, determines which of the two CIs has better coverage probability. Clearly, researchers will only have an estimate of $\delta_R$ and it is not clear how valid the estimate will be as a guide to selecting between the two CI methods. Our point of view is that we should try to find a CI that has good probability coverage over a wide range of distributions and values of $\delta_R$. In fact, this point of view motivated studying distributions that were strongly nonnormal. Since the percentile bootstrap CI for $\delta_R$ best met this criterion, we recommend this confidence interval from among the four we investigated.


Footnotes

1. Other, less popular, measures of ES have been proposed by Hedges and Olkin (1985),

Kraemer and Andrews (1982), McGraw and Wong (1992), Vargha and Delaney (2000), Cliff

(1993, 1996) and Wilcox and Muska (1999) [see Hogarty & Kromrey (2001) for the definitions

of these procedures].

2. Hogarty and Kromrey (2001) suggested a robust statistic of ES similar to the one we present.


References

American Psychological Association. (2001). Publication manual of the American Psychological Association (5th ed.). Washington, DC.

Bird, K. D. (2002). Confidence intervals for effect sizes in analysis of variance. Educational and

Psychological Measurement, 62, 197-226.

Cliff, N. (1993). Dominance statistics: Ordinal analyses to answer ordinal questions.

Psychological Bulletin, 114, 494-509.

Cliff, N. (1996). Answering ordinal questions with ordinal data using ordinal statistics.

Multivariate Behavioral Research, 31, 331-350.

Cohen, J. (1965). Some statistical issues in psychological research. In B.B. Wolman (Ed.),

Handbook of clinical psychology (pp. 95-121). New York: Academic Press.

Cumming, G., & Finch, S. (2001). A primer on the understanding, use, and calculation of confidence intervals that are based on central and noncentral distributions. Educational and Psychological Measurement, 61, 532-574.

Hampel, F. R., Ronchetti, E. M., Rousseeuw, P. J., & Stahel, W. A. (1986). Robust statistics. New York: Wiley.

Hays, W. L. (1963). Statistics. New York: Holt, Rinehart and Winston.

Hedges, L. V. (1981). Distribution theory for Glass's estimator of effect size and related estimators. Journal of Educational Statistics, 6, 107-128.

Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. Orlando, FL: Academic

Press.


Hoaglin, D. C. (1985). Summarizing shape numerically: The g-and-h distributions. In D. C. Hoaglin, F. Mosteller, & J. W. Tukey (Eds.), Exploring data tables, trends, and shapes. New York: Wiley.

Hogarty, K. Y., & Kromrey, J. D. (2001). We’ve been reporting some effect sizes: Can you guess

what they mean? Paper presented at the annual meeting of the American Educational

Research Association (April), Seattle.

Huber, P. J. (1981). Robust statistics. New York: Wiley.

Kraemer, H. C., & Andrews, G. A. (1982). A nonparametric technique for meta-analysis effect

size calculation. Psychological Bulletin, 91, 404-412.

McGraw, K. O., & Wong, S. P. (1992). A common language effect size statistic. Psychological

Bulletin, 111, 361-365.

Murphy, K. R. (1997). Editorial. Journal of Applied Psychology, 82, 3-5.

SAS Institute Inc. (1999). SAS/IML user's guide, version 8. Cary, NC: Author.

Staudte, R. G., & Sheather, S. J. (1990). Robust estimation and testing. New York: Wiley.

Steiger, J. H., & Fouladi, R. T. (1997). Noncentrality interval estimation and the evaluation of statistical models. In L. Harlow, S. Mulaik, & J. H. Steiger (Eds.), What if there were no significance tests? Hillsdale, NJ: Erlbaum.

Thompson, B. (1994). Guidelines for authors. Educational and Psychological Measurement, 54,

837-847.

Vargha, A., & Delaney, H. D. (2000). A critique and improvement of the CL Common Language

effect size statistics of McGraw and Wong. Journal of Educational and Behavioral

Statistics, 25, 101-132.


Wilkinson, L., and the Task Force on Statistical Inference. (1999). Statistical methods in psychology journals. American Psychologist, 54, 594-604.

Wilcox, R. R. (2001). Fundamentals of modern statistical methods. New York: Springer.

Wilcox, R. R. (2003). Applying contemporary statistical techniques. San Diego: Academic Press.

Wilcox, R. R., & Keselman, H. J. (2003). Modern robust data analysis methods: Measures of

central tendency. Psychological Methods, 8, 254-274.

Wilcox, R. R., & Muska, J. (1999). Measuring effect size: A non-parametric analogue of $\omega^2$. British Journal of Mathematical and Statistical Psychology, 52, 93-110.

Yuen, K. K., & Dixon, W. J. (1973). The approximate behaviour and performance of the two-

sample trimmed t. Biometrika, 60, 369-374.


Table 1

Example Means and Standard Deviations

Group   Mean ($\bar{Y}_j$)   SD ($S_j$)

1   28.5   4.6

2   33.8   6.2

Note. $n_1 = n_2 = 21$.


Table 2

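Estimated Coverage Probabilities for Nominal 95% Noncentral t Distribution-Based Confidence Intervals for $\delta$ and $\delta_R$

Distributions: (a) $g = h = 0$; (b) $g = 0$, $h = .225$; (c) $g = .76$, $h = -.098$; (d) $g = .225$, $h = .225$

Population ES (first row of each block)   $n_1 = n_2$   (a) $\delta$   (a) $\delta_R$   (b) $\delta$   (b) $\delta_R$   (c) $\delta$   (c) $\delta_R$   (d) $\delta$   (d) $\delta_R$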

0.00 20 0.949 0.949 0.955 0.952 0.951 0.951 0.952 0.953

40 0.950 0.944 0.955 0.955 0.951 0.953 0.955 0.954

60 0.949 0.951 0.947 0.950 0.945 0.950 0.955 0.948

80 0.950 0.948 0.954 0.952 0.949 0.956 0.951 0.954

100 0.946 0.948 0.955 0.956 0.948 0.945 0.949 0.951

0.20 20 0.949 0.949 0.947 0.951 0.950 0.953 0.954 0.951

40 0.950 0.948 0.950 0.952 0.954 0.945 0.954 0.946

60 0.946 0.947 0.950 0.951 0.954 0.953 0.949 0.951

80 0.950 0.947 0.953 0.952 0.950 0.954 0.943 0.945

100 0.947 0.945 0.952 0.950 0.956 0.952 0.942 0.954

0.50 20 0.949 0.948 0.939 0.947 0.944 0.949 0.930 0.947

40 0.951 0.949 0.935 0.947 0.940 0.943 0.921 0.944

60 0.951 0.951 0.927 0.945 0.941 0.948 0.915 0.945

80 0.948 0.951 0.930 0.948 0.944 0.945 0.918 0.940

100 0.949 0.945 0.925 0.945 0.938 0.946 0.907 0.949

0.80 20 0.948 0.944 0.910 0.940 0.931 0.937 0.899 0.946

40 0.955 0.943 0.903 0.937 0.929 0.937 0.875 0.941

60 0.961 0.955 0.895 0.939 0.923 0.933 0.872 0.939

80 0.942 0.944 0.903 0.947 0.925 0.935 0.861 0.943

100 0.954 0.951 0.892 0.943 0.925 0.939 0.854 0.933

1.10 20 0.950 0.948 0.889 0.937 0.904 0.925 0.853 0.936

40 0.951 0.945 0.872 0.938 0.909 0.923 0.838 0.930

60 0.950 0.945 0.849 0.932 0.912 0.926 0.812 0.928

80 0.951 0.940 0.850 0.934 0.896 0.914 0.804 0.932

100 0.948 0.945 0.851 0.938 0.904 0.926 0.785 0.939


1.40 20 0.954 0.946 0.857 0.926 0.887 0.915 0.822 0.925

40 0.954 0.937 0.841 0.934 0.883 0.907 0.787 0.929

60 0.945 0.945 0.822 0.930 0.879 0.914 0.761 0.928

80 0.948 0.941 0.816 0.934 0.882 0.907 0.748 0.930

100 0.948 0.945 0.805 0.927 0.880 0.915 0.736 0.923


Table 3

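Estimated Coverage Probabilities for Nominal 95% Percentile Bootstrap CIs for $\delta$ and $\delta_R$

Distributions: (a) $g = h = 0$; (b) $g = 0$, $h = .225$; (c) $g = .76$, $h = -.098$; (d) $g = .225$, $h = .225$

Population ES (first row of each block)   $n_1 = n_2$   (a) $\delta$   (a) $\delta_R$   (b) $\delta$   (b) $\delta_R$   (c) $\delta$   (c) $\delta_R$   (d) $\delta$   (d) $\delta_R$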

0.00 20 0.938 0.951 0.924 0.954 0.924 0.946 0.922 0.954

40 0.940 0.945 0.938 0.953 0.936 0.950 0.938 0.954

60 0.945 0.950 0.934 0.951 0.935 0.948 0.939 0.947

80 0.945 0.948 0.943 0.950 0.938 0.955 0.937 0.951

100 0.942 0.947 0.945 0.953 0.942 0.945 0.937 0.949

0.20 20 0.935 0.954 0.919 0.953 0.921 0.950 0.919 0.950

40 0.940 0.947 0.936 0.952 0.939 0.950 0.936 0.950

60 0.939 0.946 0.936 0.951 0.946 0.950 0.937 0.952

80 0.945 0.946 0.944 0.952 0.944 0.949 0.933 0.946

100 0.944 0.947 0.943 0.952 0.947 0.950 0.934 0.952

0.50 20 0.934 0.955 0.902 0.953 0.908 0.954 0.882 0.955

40 0.941 0.951 0.922 0.952 0.925 0.950 0.902 0.952

60 0.946 0.954 0.920 0.952 0.933 0.954 0.907 0.951

80 0.944 0.951 0.922 0.951 0.937 0.953 0.919 0.948

100 0.946 0.946 0.928 0.947 0.935 0.952 0.916 0.953

0.80 20 0.928 0.954 0.870 0.958 0.885 0.957 0.843 0.962

40 0.944 0.953 0.888 0.952 0.913 0.955 0.863 0.957

60 0.953 0.958 0.899 0.950 0.917 0.949 0.877 0.952

80 0.938 0.9466 0.911 0.957 0.926 0.950 0.880 0.954

100 0.950 0.955 0.907 0.951 0.929 0.953 0.875 0.948

1.10 20 0.926 0.961 0.837 0.965 0.860 0.962 0.803 0.966

40 0.938 0.957 0.872 0.958 0.896 0.960 0.831 0.959

60 0.939 0.954 0.873 0.956 0.914 0.957 0.835 0.950

80 0.944 0.952 0.891 0.952 0.914 0.948 0.880 0.954

100 0.944 0.955 0.894 0.958 0.923 0.957 0.847 0.959


1.40 20 0.918 0.966 0.813 0.972 0.840 0.968 0.765 0.969

40 0.938 0.956 0.857 0.966 0.889 0.960 0.798 0.964

60 0.936 0.957 0.858 0.963 0.897 0.964 0.814 0.964

80 0.942 0.957 0.870 0.959 0.912 0.956 0.821 0.961

100 0.942 0.954 0.875 0.957 0.914 0.959 0.828 0.958


Table 4

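Average Width of Noncentral t Distribution-Based (NCT) and Percentile Bootstrap (BOOT) CIs for $\delta_R$

Distributions: (a) $g = h = 0$; (b) $g = 0$, $h = .225$; (c) $g = .76$, $h = -.098$; (d) $g = .225$, $h = .225$

$\delta_R$ (first row of each block)   $n_1 = n_2$   (a) NCT   (a) BOOT   (b) NCT   (b) BOOT   (c) NCT   (c) BOOT   (d) NCT   (d) BOOT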

0.00 20 1.368 1.513 1.368 1.468 1.368 1.470 1.367 1.463

40 0.952 0.995 0.952 0.979 0.952 0.973 0.952 0.976

60 0.774 0.795 0.774 0.787 0.774 0.782 0.774 0.786

80 0.668 0.682 0.668 0.676 0.668 0.673 0.668 0.675

100 0.597 0.606 0.597 0.603 0.597 0.601 0.597 0.601

0.20 20 1.373 1.516 1.374 1.482 1.373 1.484 1.373 1.481

40 0.956 0.999 0.956 0.986 0.956 0.984 0.956 0.984

60 0.777 0.799 0.776 0.793 0.776 0.789 0.776 0.791

80 0.671 0.685 0.671 0.681 0.671 0.680 0.671 0.681

100 0.599 0.609 0.599 0.606 0.599 0.606 0.599 0.606

0.50 20 1.404 1.571 1.401 1.549 1.405 1.578 1.403 1.548

40 0.975 1.028 0.974 1.023 0.975 1.041 0.975 1.027

60 0.792 0.820 0.792 0.821 0.792 0.831 0.792 0.824

80 0.684 0.703 0.684 0.705 0.684 0.712 0.684 0.707

100 0.611 0.625 0.611 0.628 0.611 0.635 0.611 0.630

0.80 20 1.458 1.659 1.453 1.663 1.462 1.749 1.456 1.682

40 1.010 1.083 1.009 1.096 1.010 1.126 1.010 1.102

60 0.819 0.860 0.819 0.874 0.820 0.901 0.818 0.877

80 0.708 0.736 0.707 0.748 0.708 0.773 0.707 0.754

100 0.632 0.653 0.632 0.665 0.631 0.684 0.632 0.669

1.10 20 1.532 1.784 1.527 1.827 1.541 1.958 1.527 1.848

40 1.058 1.153 1.057 1.186 1.060 1.250 1.057 1.196

60 0.857 0.913 0.857 0.945 0.859 0.991 0.858 0.957

80 0.740 0.782 0.740 0.808 0.741 0.852 0.740 0.816

100 0.661 0.694 0.661 0.717 0.661 0.754 0.661 0.722


1.40 20 1.627 1.944 1.618 2.024 1.633 2.183 1.619 2.043

40 1.120 1.239 1.116 1.301 1.122 1.400 1.116 1.317

60 0.906 0.983 0.905 1.027 0.907 1.108 0.905 1.044

80 0.781 0.840 0.781 0.881 0.783 0.940 0.781 0.893

100 0.698 0.747 0.697 0.780 0.698 0.833 0.697 0.792


Table 5

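Estimated Power for Non-directional Tests of $H_0\colon \delta_R = 0$

Distributions: (a) $g = h = 0$; (b) $g = 0$, $h = .225$; (c) $g = .76$, $h = -.098$; (d) $g = .225$, $h = .225$

$\delta_R$ (first row of each block)   $n_1 = n_2$   (a) NCT   (a) BOOT   (b) NCT   (b) BOOT   (c) NCT   (c) BOOT   (d) NCT   (d) BOOT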

0.20 20 0.090 0.089 0.088 0.086 0.083 0.091 0.088 0.084

40 0.131 0.132 0.123 0.120 0.132 0.130 0.117 0.113

60 0.174 0.174 0.164 0.163 0.170 0.169 0.161 0.160

80 0.219 0.217 0.210 0.204 0.215 0.215 0.219 0.214

100 0.260 0.263 0.265 0.258 0.267 0.259 0.257 0.250

0.50 20 0.290 0.302 0.286 0.272 0.315 0.312 0.300 0.277

40 0.538 0.548 0.514 0.507 0.534 0.527 0.533 0.527

60 0.705 0.714 0.702 0.697 0.710 0.697 0.713 0.701

80 0.830 0.833 0.827 0.818 0.831 0.828 0.824 0.815

100 0.907 0.906 0.897 0.891 0.898 0.896 0.898 0.892

0.80 20 0.627 0.654 0.590 0.570 0.620 0.612 0.603 0.577

40 0.905 0.909 0.886 0.880 0.892 0.884 0.894 0.879

60 0.984 0.987 0.976 0.974 0.977 0.975 0.974 0.970

80 0.994 0.994 0.996 0.994 0.995 0.994 0.994 0.994

100 1.000 1.000 1.000 1.000 0.998 0.999 1.000 1.000

1.10 20 0.873 0.891 0.849 0.834 0.862 0.855 0.853 0.825

40 0.996 0.996 0.992 0.991 0.992 0.988 0.989 0.988

60 1.000 1.000 0.999 0.999 0.999 0.999 0.999 0.999

80 1.000 1.000 1.000 1.000 1.000 1.000 1.000 1.000

100 1.000 1.000 1.000 1.000 1.000 1.000 1.000 1.000

1.40 20 0.979 0.985 0.960 0.950 0.962 0.957 0.959 0.947

40 1.000 1.000 1.000 0.999 1.000 1.000 1.000 0.999

60 1.000 1.000 1.000 1.000 1.000 1.000 1.000 1.000

80 1.000 1.000 1.000 1.000 1.000 1.000 1.000 1.000

100 1.000 1.000 1.000 1.000 1.000 1.000 1.000 1.000


[Figure 1 plot: two density curves against t (horizontal axis from about -2 to 8), labeled "Central t" and "Noncentral t with $\lambda = 4$".]

Figure 1. A central and a noncentral t distribution.


- 2 2 4 6 8t

0.1

0.2

0.3

0.4

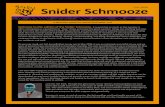

t=3.14

Noncentral t with l =5.21

Noncentral t with l =1.05

.025.025

Figure 2. Graphical representation of finding a confidence interval for the noncentrality

parameter
