BAP: A Benchmark-driven Algebraic Method for the Performance Engineering of Customized Services

Invited Paper

Jerry Rolia
Automated Infrastructure Lab
Hewlett Packard Laboratories
Bristol, [email protected]

Diwakar Krishnamurthy
Department of Engineering
University of Calgary
Calgary, Alberta, [email protected]

Giuliano Casale
SAP Research
Belfast, Northern [email protected]

Stephen Dawson
SAP Research
Belfast, Northern [email protected]

ABSTRACT

This paper describes our joint research on performance engineering methods for services in shared resource utilities. The techniques support the automated sizing of a customized service instance and the automated creation of performance validation tests for the instance. The performance tests permit fine-grained control over inter-arrival time and service time burstiness to validate sizing and facilitate the development and validation of adaptation policies. Our novel research on sizing also takes into account the impact of workload factors that contribute to such burstiness. The methods are automated, integrated, and exploit an algebraic approach to workload modelling that relies on per-service benchmark suites with benchmarks that can be automatically executed within utilities. The benchmarks and their performance results are reused to support a Benchmark-driven Algebraic method for the Performance (BAP) engineering of customized services.

Categories and Subject Descriptors

H.3.4 [Systems and Software]: Performance evaluation (efficiency and effectiveness); B.8.2 [Performance and Reliability]: Performance Analysis and Design Aids; D.2.8 [Metrics]: Performance Measures

General Terms

Performance, Measurement, Design

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.
WOSP/SIPEW’10, January 28–30, 2010, San Jose, California, USA.
Copyright 2009 ACM 978-1-60558-563-5/10/01 ...$10.00.

1. INTRODUCTION

Virtualization has enabled the dynamic provisioning of Software as a Service (SaaS) in large shared resource utilities. Service instances can be configured in portals and automatically rendered onto shared resource pools of computing, network, and storage resources. In such utilities, capacity sizing for a service is less risky than when provisioning dedicated hardware because the capacity offered to a service can be adjusted quickly on the fly post-deployment. However, significant performance risks still remain. Each instance of a service may be customized to use a subset of features so that its performance behaviour is unique. A utility may support hundreds or thousands of service instances, so performance management methods must be automated, the methods must satisfy each instance’s performance requirements, and the methods must be accurate enough to make efficient use of resources. This is a challenge because the relationship between the performance of each service instance and its capacity can be complex, particularly in multi-tier applications with many potential bottlenecks. Furthermore, utilities may dynamically vary the capacity associated with services to make more efficient use of resources and to decrease energy costs. Our joint research has focused on the development of automated techniques to support performance management of customized service instances in shared resource utilities. This paper describes our progress and experiences to date.

The methods we are developing exploit an algebraic approach to workload modelling. Basically, each service is characterized using a number of service-specific benchmarks that can be run and measured in an automated manner within a utility environment. Each benchmark acts as a semantically correct workload with a particular business object mix, where business objects of the service contain logic that causes demands on the system. It is important that such workloads are semantically correct, i.e., that they use business objects in a correct sequence. A customized service instance also has a particular business object mix that is likely different from the mix of any of the benchmarks. Thus it is likely to cause different demands on the system. However, the customized business object mix may be estimated as a linear combination of the pre-existing business object mixes of the benchmarks. Thus we can reuse a subset of benchmarks in certain combinations and in certain orders to match the customized workload mix and other workload properties. The subset of benchmarks acts as an algebraic basis [16]. This enables an algebraic and automated approach to performance engineering for customized service instances.

We have found that by matching a business object mix using the algebraic approach we are able to estimate the resource demands of a customized service instance. This provides workload-specific demand values for parameters of a performance model for the customized service instance. We assume that each service has a pre-existing performance model that has been prepared by a performance analyst. The automation step saves effort by assigning appropriate parameter values for the model for each separate customized service instance. The same linear combination of benchmarks can also be used to automatically prepare customized performance validation tests for a service instance. We have enhanced the tests to consider various aspects of inter-arrival time and service demand burstiness. The tests are used to study the sensitivity of system performance to such workload features. Finally, the burstiness examples shed light on the complexity of the relationship between response times and capacity. We describe a technique we have developed to take into account such burstiness to improve the predictive accuracy of performance models so they can be used to better support performance management.

Section 2 gives an overview of related work. Section 3 describes a method for customizing service instances of SAP ERP systems in a service on demand environment. The method provides a practical motivation for our algebraic approach for workload modelling. Sections 4 and 5 describe recent work on demand estimation and synthesizing performance validation tests. Section 6 demonstrates the use of a layered queueing model (LQM) to predict the behaviour of an SAP ERP system for a sales and delivery workload. It also presents a study for a TPC-W system that shows how a recently developed technique named the Weighted Average Method (WAM) can use traces from performance validation tests with burstiness to improve the accuracy of predictive models. Summary and concluding remarks are offered in Section 7.

2. RELATED WORK

This section describes key works that are related to or have contributed directly to this paper. We refer the reader to more complete related work in [13][28][6][27][14].

This paper presents techniques we have developed using the notion of an algebraic approach to workload modelling. Litoiu et al. [18][19] consider mixes of workloads that cause combinations of bottlenecks in distributed systems to saturate. They employ a linear programming based approach to find combinations of workloads that achieve certain properties. Dujmovic [8] describes benchmark design theory that models benchmarks using an algebraic space and minimizes the number of benchmark tests needed to provide maximum information. Dujmovic’s work also informally describes the concept of interpreting the results of a ratio of different benchmarks to better predict the behaviour of a different but unmeasured system; however, no formal method is given to compute the ratio. Krishnaswamy and Scherson [15] also model benchmarks as an algebraic space but do not consider the problem of finding such a ratio.

Krishnamurthy et al. [12][13] introduce the Synthetic Web Application Tester (SWAT), which includes a method that automatically selects a subset of pre-existing user sessions from a session based e-commerce system, each with a particular URL mix, and computes a ratio of sessions to achieve specific workload characteristics. For example, the technique can reuse the existing sessions to simultaneously match a new URL mix and a particular session length distribution and to prepare a corresponding synthetic workload to be submitted to the system. They showed how such workload features impact the performance behaviour of session based systems. We consider results from [13] in Sections 5.1 and 5.2. BAP exploits the ratio computation technique in this work to automatically find a basis of benchmarks and to compute a ratio of the benchmarks that enables the creation of a customized performance model and performance validation test for a service instance.

Bard and Shatzoff studied the problem of characterizing the resource usage of operating system functions in the 1970s [3]. The system under study did not have the ability to measure the resource demands of such functions directly. The execution rates of the functions and their aggregate resource consumption were measurable and were recorded periodically. This data served as inputs to a regression problem. Bard used the least squares technique to successfully estimate per-function resource consumption for the study. We summarize results for a new Demand Estimation method with Confidence intervals (DEC) that solves the same problem [28] in Section 4. DEC has certain advantages. We refer to that paper’s related work for more on the topic.

Burstiness in service demands has recently been shown to be responsible for major performance degradations in multi-tier systems [23]. Service demand burstiness differs substantially from the well-understood burstiness in the arrival of requests to a system. Arrival burstiness has been systematically examined in networking [17] and there are many benchmarking tools that can shape correlations between arrivals [4, 11, 22, 13]. In contrast, service demand burstiness can be seen as the result of serially correlated service demands placed by consecutive requests on a hardware or software system [26, 23, 21], rather than a feature of the inter-arrival times between requests. It is much harder to model and predict system performance for workloads with service demand burstiness than for traditional workloads [7]. This stresses the need for benchmarking tools that support analytic and simulation techniques to study the performance impact of service demand burstiness. Casale et al. [6] present an approach for supporting the generation of performance validation tests that control service demand burstiness for session based systems. We refer to this approach here as a BURstiness eNabling method (BURN). A summary of results from the approach is considered in Section 5.3.

Queueing Network Models (QNMs) [5] and Mean ValueAnalysis (MVA) [25] have been used to study computer sys-tem performance since the early 1970’s. Researchers haverecently applied them directly to the study of multi-tier sys-tems [32]. Layered Queueing Models (LQM) [29][33] arebased on QNMs, and were developed starting in the 1980’sto consider the performance impact of software interactionsin multi-tier software systems, e.g., systems that have con-tention for software resources such as threads. Tiwari etal.[31] report that layered queuing networks were more ap-propriate for modeling a J2EE application than a Petri-

4

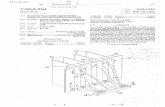

(a) Customized Business Object Mix

(b) Customized Model and Performance Valiation Test

Figure 1: Customized Service Business Object Mix,Performance Model, and Validation Test

Net based approach because they better addressed issues ofscale. Balsamo et al. conclude that extended QNM-basedapproaches, such as LQMs, are the most appropriate mod-eling abstraction for multi-tiered software environments [2].Rolia et al. describe the validation of an LQM for a SAP

ERP system [27]. A summary of results are offered in Sec-tion 6.1. The Weighted Average Method (WAM) was re-cently proposed as a method for taking into account theimpact of inter-arrival time burstiness in predictive analyticmodels [14]. A summary of results of [14] is presented inSection 6.2 and we refer to that paper for more extensiverelated work.

3. CUSTOMIZING SERVICE INSTANCES

This section gives an example of a method for customizing a service instance for an on-demand business process platform. The example motivates the algebraic approach to workload modelling.

Figure 1 illustrates the method. Figure 1(a) shows a service that supports business processes for customers. Examples of business processes include customer relationship management, supply chain management, and sales and distribution [30]. These can be complex processes that implement how a business interacts with its partners and customers.

The service in Figure 1(a) has many business processes, each with many process variants such as order entry, sale, and return. A service could have hundreds or thousands of process variants. Each process variant has a number of process steps, each of which uses business objects¹ in application servers and database servers. The business objects implement application and database logic that determine resource demands on the system. Process variants can be profiled to discover the average number of visits by a variant to each business object. A customized service instance defines which process variants are to be used by a customer. This determines which software modules are available to the end users of the service instance. From a performance perspective, it is also necessary to specify the throughput for each of the variants, e.g., the number of times per hour the variant is started, and a goal for mean response time for interactions with the system. A simple cross product of the throughputs specified when customizing the system and the visit values found when profiling the system gives the expected number of visits to each business object, e.g., per hour. When these values are normalized so that they sum to one, they give the probability of invocation for each business object. This is the customized business object mix M for the customized service instance. It specifies the relative proportion of system functions for the customized service instance in terms of business objects.
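The computation of the customized business object mix M can be illustrated with a minimal sketch. It assumes that the "cross product" amounts to a throughput-weighted sum of per-variant visit counts; the function name, variant names, business object names, and numbers below are hypothetical and are not taken from the case studies.

```python
def business_object_mix(throughputs, visits):
    """Return the normalized business object mix M.

    throughputs: {variant: starts per hour}, chosen when customizing the service.
    visits: {variant: {business_object: mean visits per start}}, from profiling.
    """
    totals = {}
    for variant, rate in throughputs.items():
        for obj, mean_visits in visits[variant].items():
            # Expected visits per hour to obj contributed by this variant.
            totals[obj] = totals.get(obj, 0.0) + rate * mean_visits
    grand_total = sum(totals.values())
    if grand_total <= 0:
        raise ValueError("no visits: cannot normalize the mix")
    # Normalize so that the entries sum to one.
    return {obj: count / grand_total for obj, count in totals.items()}


# Hypothetical example values.
throughputs = {"order_entry": 100.0, "return": 20.0}
visits = {
    "order_entry": {"Customer": 1.0, "SalesOrder": 2.0},
    "return": {"Customer": 1.0, "SalesOrder": 1.0, "Delivery": 3.0},
}
M = business_object_mix(throughputs, visits)
```

In this example the expected visits per hour are 120, 220, and 60, so M assigns probabilities 0.30, 0.55, and 0.15 to the three business objects.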

Figure 1(b) illustrates how the customized business object mix M can be used to automatically compute parameters for a customized performance model and to automatically prepare a performance validation test. Our approach assumes that the service has a performance model that is prepared by an analyst. Further, each service has a suite of benchmarks that can be automatically executed and measured in the utility. For example, there may be N benchmarks. Each benchmark is executed and measured to obtain its business object mix and resource demand values, e.g., CPU time per request, for the service’s performance model. It is possible to automate the execution of such benchmarks in shared resource utilities during times when resources are under-utilized. The measured values can be reused to support performance engineering for different customized service instances.

An Algebraic Approach

The customized business object mix M and benchmark business object mixes can be thought of as vectors. A linear programming algorithm can be used to find a basis Q of the N benchmarks. The basis enables the customized business object mix M to be estimated as a linear combination R of the object mixes of the Q benchmarks. For example, a customized service instance with business object mix M may be similar to a workload with R = 50%, 30%, and 20% of sessions from Q = 3 benchmarks named Ben1, Ben2, and Ben3, respectively. The SWAT algorithm [13] can be used to find the Q benchmarks and to estimate R. SWAT also permits the specification of a matching tolerance for each business object so that those that affect performance most can be matched more exactly.

¹ Our use of business object refers to a class of business object rather than any instance of the class of business object.

Once the ratio R of benchmarks is known, R can be used to estimate the demands for the customized performance model corresponding to the customized business object mix. The demands are simply a linear combination, using R, of the measured benchmark demands. Furthermore, replaying benchmark sessions in proportion R synthesizes the customized object mix. Therefore the benchmarks can also be reused to automatically create performance validation tests. The following two sections give case study results for this approach.
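The following sketch illustrates the idea of matching a mix and reusing R for demand estimation. It is not the SWAT algorithm: a brute-force grid search over non-negative weights that sum to one stands in for the linear programming formulation of [13], and per-object matching tolerances are omitted. The benchmark mixes and demand values are hypothetical, and the sketch assumes that demands can be combined directly with R (for example, because they are expressed per session).

```python
from itertools import product


def combine(ratio, vectors):
    """Linear combination sum_i ratio[i] * vectors[i], element by element."""
    return [sum(r * v[k] for r, v in zip(ratio, vectors))
            for k in range(len(vectors[0]))]


def estimate_ratio(target_mix, benchmark_mixes, steps=20):
    """Grid search for weights R (non-negative, summing to one) that minimize
    the total absolute deviation between the combined mix and target_mix."""
    q = len(benchmark_mixes)
    best_ratio, best_error = None, float("inf")
    for head in product(range(steps + 1), repeat=q - 1):
        if sum(head) > steps:
            continue
        # The last weight takes whatever remains so that the weights sum to one.
        counts = head + (steps - sum(head),)
        ratio = [c / steps for c in counts]
        mix = combine(ratio, benchmark_mixes)
        error = sum(abs(m - t) for m, t in zip(mix, target_mix))
        if error < best_error:
            best_ratio, best_error = ratio, error
    return best_ratio, best_error


# Hypothetical mixes over three business objects for Ben1, Ben2, and Ben3.
benchmark_mixes = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.1, 0.8]]
target_mix = [0.38, 0.32, 0.30]  # customized business object mix M
R, error = estimate_ratio(target_mix, benchmark_mixes)

# Hypothetical measured demands per benchmark: [Web CPU, DB CPU].
benchmark_demands = [[12.0, 5.0], [20.0, 30.0], [8.0, 60.0]]
demands = combine(R, benchmark_demands)
```

With these values the search recovers R = 50%, 30%, and 20%, and the estimated demands are the R-weighted sums of the benchmark demands. A grid search only scales to a handful of benchmarks, which is one reason a linear programming formulation is preferable in practice.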

4. DEMAND ESTIMATION WITH CONFIDENCE (DEC)

Demand estimation in software performance is often conducted using regression techniques. However, regression makes certain assumptions that can lead to inaccuracies in demand predictions. For example, in the current context, regression assumes that visits to objects are not correlated. Yet software systems typically use objects in correlated ways. For example, functions such as add to cart and buy are often used together. This introduces the problem of multicollinearity into the regression problem, which can lead to inaccurate estimates for per-object demands. Furthermore, for confidence interval calculations, regression assumes that the demand caused by a particular mix of objects is deterministic; any variation is assumed to be due to measurement error. The variation is allocated to an error term whose values are assumed to be Normally distributed and are used to compute a confidence interval. Yet computing system resource demands are rarely deterministic and the corresponding errors are not always Normally distributed. Thus confidence interval estimates from regression for computing system applications are not always statistically sound or reliable.

We have proposed a Demand Estimation method with Confidence intervals (DEC) for estimating demands that overcomes these problems [28]. DEC uses the ratio of benchmarks R to estimate demands as illustrated in Figure 1(b). With DEC, confidence interval calculation is straightforward. Several independent experiment replications are conducted for each benchmark. Each benchmark replication yields a benchmark replication demand. The mean of the benchmark replication demands is computed as the overall benchmark mean demand. From the central limit theorem, a benchmark mean demand is approximately Normally distributed if the number of replications is large [10]. Furthermore, a linear combination of Normally distributed independent random variables results in a random variable that is also Normally distributed. Since DEC uses a linear combination of measured benchmark mean demands to predict the resource demand of a business object mix, the predicted demand is also approximately Normally distributed. This allows confidence intervals for the predictions from DEC to be computed on a sound statistical basis.

Figure 2 compares DEC with the Least Squares (LSQ) and Least Absolute Deviation (LAD) regression techniques that are often cited in the literature [28]. The results were obtained from a multi-tier TPC-W system deployed at the University of Calgary that consists of a Web server node, a database server node, and a client node connected by a non-blocking Ethernet switch that provides dedicated 1 Gbps connectivity between any two machines in the setup. The Web and database server nodes are used to execute the TPC-W bookstore application. The client node is dedicated to running the httperf [24] Web request generator that was used to submit benchmarks to the TPC-W system. All nodes in the setup contain a 2.66 GHz Intel Core 2 CPU and 2 GB of RAM. We used the Windows perfmon utility to collect resource usage information from the Web and database server nodes using a sampling interval of 1 second. The CPU demands are much larger than disk and network demands for this system so we focus on demand estimation for these values.

Figures 2(a) and 2(b) give cumulative distribution functions (CDFs) of the relative error of estimated CPU demands with respect to measured CPU demands for customized business object mixes, for the Web server and database nodes respectively. The figures present results for thirty cases. Figures 2(c) and 2(d) give the CDFs of two-sided confidence intervals for the same cases.

In Figure 2(a), the results of DEC, LSQ and LAD are very similar, with a maximum error below 15%. It must be noted that across all the cases the Web server node demand varied only by a factor of 1.5. There is no clear advantage for any technique.

Figure 2(b) gives the corresponding results for the DB CPU demand estimates. The demand values varied by a factor of over 200 for these cases and were important for the performance of the system. The figure shows that DEC offered much lower errors for 30% of the cases.

Figures 2(c) and (d) show that DEC’s confidence interval predictions are more in line with measured confidence intervals than those predicted by the LSQ and LAD methods. DEC’s confidence interval calculations are statistically sound. Note that LAD’s reported confidence intervals are tighter than LSQ confidence intervals due to the nature of the LAD algorithm.

To summarize, DEC is a new technique for estimating resource demands that relies on the algebraic approach to workload modelling. It does not suffer from the problem of multicollinearity and provides statistically sound confidence interval calculations that appear to be tighter and closer to measured confidence intervals than the regression based techniques. The demands predicted by DEC can be used in customized performance models. The next section considers the creation of performance validation tests.
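The confidence interval reasoning used by DEC can be sketched as follows. This is an illustrative reading of the description in this section, not the implementation from [28]: it assumes independent benchmarks, uses the sample variance of the replication demands, and applies a Normal quantile (1.96 for an approximate 95% interval). The function name and the replication values are hypothetical, and only four replications per benchmark are shown for brevity, whereas the Normal approximation calls for a large number of replications.

```python
from math import sqrt
from statistics import mean, variance


def dec_prediction(ratio, replications, z=1.96):
    """Predict a demand and a two-sided confidence interval.

    ratio: weights R, one per benchmark.
    replications: one list of measured replication demands per benchmark.
    z: Normal quantile (1.96 gives an approximate 95% interval).
    """
    predicted = 0.0
    var_of_prediction = 0.0
    for weight, samples in zip(ratio, replications):
        n = len(samples)
        if n < 2:
            raise ValueError("need at least two replications per benchmark")
        predicted += weight * mean(samples)
        # The variance of a benchmark mean demand is s^2 / n; for independent
        # benchmarks the weighted variances add.
        var_of_prediction += weight ** 2 * variance(samples) / n
    half_width = z * sqrt(var_of_prediction)
    return predicted, (predicted - half_width, predicted + half_width)


# Hypothetical replication demands for three benchmarks.
replications = [
    [10.0, 12.0, 11.0, 11.0],
    [20.0, 22.0, 21.0, 21.0],
    [30.0, 30.0, 33.0, 27.0],
]
predicted, (low, high) = dec_prediction([0.5, 0.3, 0.2], replications)
```

The predicted demand is the R-weighted sum of the benchmark mean demands, and the interval width shrinks as the number of replications per benchmark grows.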

5. CUSTOMIZED PERFORMANCE VALIDATION TESTS

Just as it is possible to automatically render service instances into shared resource pools, it is also possible to automatically generate and execute performance tests that validate the performance of such instances [9]. Customized performance validation tests can be used to stress such systems to verify that they are able to satisfy performance requirements.

This section gives examples of performance validation tests that match a customized workload mix, that match a mix and session length distribution, that match a mix and think time distribution, and that match a mix and service demand burstiness. The techniques are based on the algebraic approach to workload modelling.

Sections 5.1 and 5.2 rely on case study data from a multi-tier TPC-W system at Carleton University as described in [13]. The Carleton system had a topology similar to the University of Calgary system described in Section 4 but with different software and hardware. Section 5.3 relies on case study data from the multi-tier TPC-W system at the University of Calgary.

Figure 2: Comparison of Demand Prediction and CI Errors between DEC and Regression Techniques. Panels: (a) Web CPU Demand Error CDF; (b) DB CPU Demand Error CDF; (c) Web CPU Demand CI CDF; (d) DB CPU Demand CI CDF. Horizontal axes: percentiles. Vertical axes: relative error in percentage for (a) and (b), confidence interval width in percentage for (c) and (d). Series: DEC, LSQ, and LAD, plus Measured in (c) and (d).

5.1 Matching Functional Mix with SWAT

Once computed, the ratio R of the Q chosen benchmarks can be used to automate the creation of a performance validation test. As illustrated in Figure 1(b), sessions in the test are simply drawn from the Q benchmarks according to the probabilities in R. Figure 3 compares two experiment runs for the TPC-W system. The original workload is generated using the TPC-W emulated browser workload generator. Forty thousand sessions were generated with 838 unique business object mixes and a certain overall business object mix. Next we treated the 838 unique sessions from the original workload, i.e., sessions with different object mixes, as our set of benchmarks. Using the linear programming method, a subset of Q = 420 of the benchmarks was chosen as the basis along with the ratio R. A new trace of forty thousand sessions was generated using the Q benchmarks and the ratio R. This corresponds to the synthetic workload in the figure, and corresponds to a performance validation test.

Figure 3(a) shows the response time distributions for the original and synthetic workloads [13]. They are nearly overlapping. Figure 3(b) shows the distribution for the number of concurrent users. Again they are almost identical. The synthetic workload matched the business object mix M, the response time distribution, device utilization values, and session population distribution. A smaller set of benchmarks, i.e., fewer than Q benchmarks, would approximate the business object mix and performance measures.
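Drawing sessions from the chosen benchmarks according to the probabilities in R can be sketched in a few lines. The function name, benchmark names, and seed are hypothetical; the ratio reuses the three-benchmark example of Section 3 rather than the 420-benchmark basis of the case study.

```python
import random


def synthesize_trace(benchmarks, ratio, num_sessions, seed=None):
    """Draw num_sessions benchmark names with probabilities given by ratio."""
    rng = random.Random(seed)
    return rng.choices(benchmarks, weights=ratio, k=num_sessions)


# Hypothetical example: forty thousand sessions from three benchmarks.
names = ["Ben1", "Ben2", "Ben3"]
trace = synthesize_trace(names, [0.5, 0.3, 0.2], 40000, seed=1)
shares = {name: trace.count(name) / len(trace) for name in names}
```

For a trace of this length the observed shares should lie close to R, so the synthesized trace approximates the customized business object mix while each replayed session remains semantically correct.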

5.2 Inter-arrival Time Burstiness with SWAT

The approach of Section 5.1 has been enhanced in two ways to evaluate the impact of inter-arrival time burstiness on the TPC-W system. For the first enhancement, burstiness due to session length distribution was considered. To support this, additional constraints were added to the linear programming formulation so that the sessions for an experiment satisfied both a customized business object mix and a desired session length distribution as specified using a probability distribution function (pdf). Each point in the sample space of the pdf corresponds to a session length range. For example, the first point may have probability 0.1 and correspond to sessions with length 1 to 3. The second point may have probability 0.9 and correspond to sessions with length 4 to 12. The linear programming solution was repeated for each different point in the sample space. The mix that corresponds to each sample point has its selected benchmarks weighted by both the corresponding point’s probability from the pdf and the benchmark’s corresponding R value from its solution of the linear program. The benchmarks from all the points contribute to an integrated workload mix for the whole session length distribution.

Figure 3: Comparison of Original Workload and Performance Validation Test. Panels: (a) Response Time CDF; (b) Concurrent Session Population CDF.

For the second enhancement, we considered burstiness due to think time distributions. Table 1 shows the impact on mean response time and the 95th percentile of response time for several experiments [13]. The empirical workload had think times and session lengths that corresponded to data collected from a large e-commerce system [1]. Exponential corresponds to the exponential distribution for think times and session lengths. Heavy-tailed session length used a bounded Pareto distribution for session lengths and the empirical distribution for think times. Heavy-tailed think time used a bounded Pareto distribution for think times and the empirical distribution for session lengths. In all cases, the mean session length and mean think time were the same. Furthermore, the per-resource utilizations and throughput were the same for each of the runs. The table shows that heavy-tailed session lengths could increase mean response time by over 25% and the 95th percentile of response time by nearly 50% even though the overall resource utilizations and request throughput were the same for each run. Heavy-tailed think times also had a significant impact. This demonstrates aspects of the complex relationship between software performance and capacity.

Figure 4 compares the response time CDF and the CDF for the number of concurrent sessions for the empirical and heavy-tailed session length distribution cases. Figure 4(a) shows more large response times and fewer small response times for the heavy-tailed case. Figure 4(b) suggests that the increases in response times arise because arriving sessions have a higher probability of arriving when there are more concurrent sessions being served than for the empirical case. Similar results are available for heavy-tailed think time distributions [13] but are omitted here due to space limitations.

This subsection has shown that the algebraic approach to workload modelling can be used to create performance validation tests that match workload mix as well as customizable session length and think time distributions. The methods can be employed to evaluate the sensitivity of a system to such workload features using automatically generated performance validation tests.
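The weighting used for the session length enhancement, where each selected benchmark is weighted by its range's probability and by its R value, can be sketched as follows. The session length ranges and probabilities follow the example in the text; the function name, benchmark names, and R values are hypothetical, and the per-range ratios are assumed to come from separate solutions of the linear program.

```python
def integrate_mixes(length_pdf, ratios_by_range):
    """Combine per-range benchmark ratios into one workload mix.

    length_pdf: {length_range: probability}; probabilities sum to one.
    ratios_by_range: {length_range: {benchmark: R value}}, one solution of
        the linear program per session length range.
    """
    if abs(sum(length_pdf.values()) - 1.0) > 1e-9:
        raise ValueError("session length probabilities must sum to one")
    combined = {}
    for length_range, probability in length_pdf.items():
        for benchmark, r_value in ratios_by_range[length_range].items():
            # Weight by the range probability and by the benchmark's R value.
            combined[benchmark] = (combined.get(benchmark, 0.0)
                                   + probability * r_value)
    return combined


length_pdf = {(1, 3): 0.1, (4, 12): 0.9}
# Hypothetical benchmarks and R values for each session length range.
ratios_by_range = {
    (1, 3): {"BenA": 0.4, "BenB": 0.6},
    (4, 12): {"BenC": 0.7, "BenD": 0.3},
}
workload = integrate_mixes(length_pdf, ratios_by_range)
```

Because each per-range ratio sums to one and the range probabilities sum to one, the integrated weights also sum to one and can be used directly as sampling probabilities for sessions.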

5.3 Service Demand Burstiness with BURN

Service demand burstiness is an interesting feature of systems [23]. Changes in workload mix can cause bottleneck shifts in systems with potentially significant impact on response times. We have extended the linear programming approach further to control bottleneck switching in multi-tier systems. The extension takes as input a measure of burstiness called the index of dispersion [21]. The index of dispersion is the product of the squared coefficient of variation of the service demands and a term that depends on the lag-k autocorrelation coefficients of the service demands. We use the index of dispersion to control, in a statistical manner, the ordering of sessions [6]. We refer to this as a BURstiness eNabling method (BURN). A low index of dispersion behaves like the algorithm of Section 5.1 with sessions chosen from benchmarks at random using the ratio R. A high index of dispersion results in the same business object mix but increases the likelihood of sessions from the same benchmark appearing in sequence. If different benchmarks have different bottlenecks then this can cause switches in bottlenecks. The full algorithm for controlling service demand burstiness is given in [6].
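To make the burstiness measure concrete, the sketch below estimates an index of dispersion from a sequence of service demands. It assumes the commonly used form I = SCV (1 + 2 Σ ρ_k), where SCV is the squared coefficient of variation and ρ_k is the lag-k autocorrelation coefficient, with the sum truncated at a chosen maximum lag. This is a simplified estimator for illustration and is not the BURN algorithm of [6]; the function names and demand values are hypothetical.

```python
from statistics import mean


def autocorrelation(samples, lag):
    """Sample lag-k autocorrelation coefficient of a sequence."""
    m = mean(samples)
    denom = sum((x - m) ** 2 for x in samples)
    numer = sum((samples[i] - m) * (samples[i + lag] - m)
                for i in range(len(samples) - lag))
    return numer / denom


def index_of_dispersion(samples, max_lag):
    """Estimate I = SCV * (1 + 2 * sum of autocorrelations up to max_lag)."""
    m = mean(samples)
    var = sum((x - m) ** 2 for x in samples) / len(samples)
    scv = var / (m * m)
    rho_sum = sum(autocorrelation(samples, k) for k in range(1, max_lag + 1))
    return scv * (1 + 2 * rho_sum)


# Two hypothetical demand sequences with the same values in different orders.
alternating = [1.0, 9.0] * 50            # light and heavy sessions interleaved
grouped = [1.0] * 50 + [9.0] * 50        # sessions of the same kind in sequence
low_burstiness = index_of_dispersion(alternating, max_lag=10)
high_burstiness = index_of_dispersion(grouped, max_lag=10)
```

Both sequences have the same mean demand and the same mix, yet grouping sessions of the same kind produces positive autocorrelations and a much larger index of dispersion, which mirrors the behaviour described for high burstiness tests.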

Figure 5 demonstrates the impact of service demand burstiness [6]. Two tests were run against the TPC-W system as described in Section 4. The first test had an index of dispersion of 1.14 which corresponds to low burstiness, with the squared coefficient of variation of service demands approaching a value of one. The second case had an index of dispersion of 50 which corresponds to high burstiness. Figures 5(a) and (b) show examples of time-varying CPU usage for the front server and database server. For the low burstiness case resource usage is consistent over time. For the high burstiness case we see heavy use of the server node CPU and then a switch in bottleneck to the database node CPU. Note that for both the server node CPU and database node CPU, the average CPU utilization over the entire run was the same for the two tests.

Figures 5(c) and (d) give the mean number of requests over time for the three types of benchmark sessions that make up the workload. For the low burstiness case the numbers are consistent over time. For the high burstiness case we see the proportion of requests of each type changing, which explains the shift in bottleneck to the DB CPU.

Table 1: Effects of Session Length and Think Time Distributions

| Workload | Mean R | 95p R | 95p R Workload / 95p R Empirical |
|---|---|---|---|
| Empirical | 0.86 | 2.90 | 1.0 |
| Exponential | 0.83 | 2.83 | 0.98 |
| Heavy-tailed session length | 1.09 | 4.27 | 1.47 |
| Heavy-tailed think time | 0.96 | 3.40 | 1.17 |

Figure 4: Comparison of Empirical Workload and Inter-arrival Burstiness due to Session Length Distribution. Panels: (a) Response Time CDF; (b) Concurrent Session Population CDF.

Figures 5(e) and (f) illustrate mean response times for the three types of benchmark sessions for the low and high burstiness cases. The high burstiness scenario causes much greater variability in response times for all classes of requests. Finally, Figure 5(g) shows the CDF of response times over all requests for the two tests. Even though the device utilizations and request throughputs were the same, burstiness has a significant impact on the distribution of response times.

To summarize, the algebraic approach to workload modelling can help to control service demand burstiness in automated performance validation tests. Such tests can be used to evaluate the sensitivity of a system to such workload features.

6. PREDICTIVE MODELS

The previous sections considered computing the parameters of a performance model for a customized service instance and the automated creation of performance validation tests. This section focuses on examples of performance models and their predictive accuracy. The first example demonstrates a validated model for a deployment of the SAP ERP platform for a sales and distribution workload. SAP ERP is a complex business process execution environment used by many of the world's medium and large sized businesses. Though validated, the workload for the system is relatively simple. Such models can yield inaccurate results for systems subject to more complex bursty workload behaviour. Our second example is a model for the TPC-W system deployed at Carleton University. For this system we demonstrate that a recently developed method can use burstiness information derived from performance validation tests along with the performance model to improve the accuracy of the model's performance predictions. By taking into account such burstiness with performance models we are better able to use performance modelling to help guide choices of more time consuming measurement based validation tests.

6.1 LQM for an SAP ERP System

Figure 6 illustrates a layered queueing model [29] (LQM) for an SAP ERP system [27]. The process for customizing an instance of an SAP ERP service, as described in Section 3, leads to specific resource demand parameters for the model as determined by a customer's choices of business process variants and business process variant throughputs.

In the system under study, the system node had two virtual CPUs, each with its own physical CPU. An application server and a database server were both assigned to the system node. The application server is composed of a dispatcher and pools of separate operating system processes, called work processes, which serve requests forwarded by the dispatcher [30]. The dispatcher assigns each request to an idle work process. Service within a work process is non-preemptive. Work processes may access the database in a synchronous manner, during which the work process remains unavailable to serve other requests due to a lack of preemptive capabilities. Requests are served by three pools of work processes. The most important pool serves dialog step requests, which are responsible for processing and updating information and data that are displayed on the client side through the graphical user interface; this information is exchanged between client and ERP application by means of a proprietary communication protocol. Each dialog step request alternates cycles of CPU-intensive activities and synchronous calls to the database for data retrieval. Additionally, dialog work processes generate asynchronous calls to the database that start being served only after completion of the dialog step that requests them. These asynchronous calls are of type update and update2 and are handled by separate pools of work processes. Update2 requests are processed at a lower priority by the platform.

Figure 5: Performance effects of service demand burstiness causing dynamic bottleneck switch
(a) I = 1.14, CPU U No Burstiness (utilization per utilization sample; series: server, db)
(b) I = 50, CPU U High Burstiness (utilization per utilization sample; series: server, db)
(c) Mean Num Requests No Burstiness (number of requests in the system per sample period of arrival; series: bro, shp, ord)
(d) Mean Num Requests High Burstiness (same axes and series)
(e) Mean R Request Type No Burstiness
(f) Mean R Request Type High Burstiness (mean response time [ms] per sample period of arrival; series: bro, shp, ord)
(g) CDF Response Times (CCDF 1−F(t) against request response time t; series: no burstiness, burstiness I=50)

Figure 6: LQM of SAP ERP System

Tables 2 and 3 offer measured and predicted results for the SAP ERP system for a sales and distribution workload [27]. The tables show the measured CPU utilization, measured dialog request response time, and predicted mean dialog response time for the six and three work process cases, respectively. The results show that the performance model is well able to predict the behaviour of the system. For example, measured and predicted mean dialog response times are generally affected in the same proportion as utilizations increase and threading levels increase.

In [27], we showed that features including multi-threading for software servers, multiple processors per server, synchronous and asynchronous remote procedure calls, multiple phases of processing, and priority of access to CPU resources were needed to validate a model for the system. We note, however, that the workload for the system was not complex. It did not involve different types of workloads. Session lengths were always the same. Session inter-arrival and departure times were assumed to be exponentially distributed. Previous sections showed that both inter-arrival and service demand burstiness can have a significant impact on performance. That burstiness affects the relationship between response time and utilization. Predictive models such as LQMs and QNMs do not have the ability to take into account such burstiness. The next section describes a technique developed to take into account such burstiness to improve the accuracy of performance predictions.
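To make concrete why a conventional queueing network model cannot see burstiness, the sketch below implements exact single-class Mean Value Analysis for a closed network, in the spirit of [25]. It is far simpler than the LQM used for the SAP ERP system and is offered only as an illustration; the function name and the demand values in the usage example are hypothetical. Note that its only workload inputs are mean service demands, a mean think time, and a population, so two workloads with the same means but different burstiness yield the same prediction.

```python
def mva_response_time(demands, think_time, population):
    """Exact single-class MVA for a closed network of queueing stations.

    demands     -- mean service demand per request at each station (seconds)
    think_time  -- mean think time between requests (seconds)
    population  -- number of customers circulating in the network
    Returns the predicted mean response time per request.
    """
    queue = [0.0] * len(demands)  # mean queue lengths with zero customers
    response = 0.0
    for n in range(1, population + 1):
        # An arriving customer sees the queue left by n - 1 customers.
        residence = [d * (1.0 + q) for d, q in zip(demands, queue)]
        response = sum(residence)
        throughput = n / (think_time + response)
        queue = [throughput * r for r in residence]
    return response


if __name__ == "__main__":
    # Hypothetical demands for an application CPU and a database CPU.
    demands = [0.020, 0.015]
    for pop in (10, 50, 100, 150):
        print(pop, mva_response_time(demands, think_time=10.0, population=pop))
```

A function of this shape, mapping a customer population to a mean response time, is also the kind of performance model that a trace-driven method can query repeatedly.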

6.2 WAM: Performance Modelling with Complex Workloads

This section describes the Weighted Average Method (WAM) for taking into account burstiness in predictive performance models [14]. To date we have used the technique to study inter-arrival time burstiness in systems where per-request think times are an order of magnitude or more greater than per-request response times.

WAM takes as input a performance model, e.g., an LQM that models customer sessions competing for system resources, and a trace of sessions, e.g., as generated for a performance validation test. WAM walks over the trace of sessions and uses the performance model to estimate the session population distribution for the test. Initially WAM assumes there are zero sessions in the system, as is the case at the start of a performance test. As requests from new sessions arrive, WAM increments its count of the number of concurrent sessions. For each request in a session, WAM estimates the response time as the mean request response time found using the performance model, with the current count of concurrent sessions as the customer population in the model. The request's subsequent think time is taken as an input from the trace. When the estimated response time of the last request in a session has elapsed, WAM decrements its count of concurrent sessions by one. When all the sessions in the trace have been processed, WAM outputs its estimate for the session population distribution. This is used with the performance model to predict system measures such as the mean request response time.
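The walk over the trace described above can be sketched as a small event-driven loop. This is a minimal illustration under stated assumptions, not the implementation from [14]: the trace format (an arrival time plus a list of think times per session), the function names, and in particular the way the population distribution is combined with the model in `wam_mean_response_time` (a time-weighted average over non-empty populations) are all hypothetical choices made for this example.

```python
import heapq
from collections import defaultdict


def wam_population_distribution(sessions, response_time):
    """Estimate the fraction of time spent at each session population.

    sessions       -- list of (arrival_time, think_times); a session issues
                      len(think_times) + 1 requests (assumed trace format)
    response_time  -- performance model: population -> mean response time
    """
    events = []  # (time, tie_breaker, kind, session index, request index)
    seq = 0
    for sid, (arrival, _thinks) in enumerate(sessions):
        heapq.heappush(events, (arrival, seq, "request", sid, 0))
        seq += 1

    population = 0  # zero sessions at the start of the test
    last_time = None
    time_at = defaultdict(float)
    while events:
        now, _, kind, sid, req = heapq.heappop(events)
        if last_time is not None:
            time_at[population] += now - last_time
        last_time = now

        if kind == "departure":
            population -= 1
            continue
        if req == 0:
            population += 1  # first request of a new session
        # Model estimate with the current session count as the population.
        r = response_time(population)
        thinks = sessions[sid][1]
        if req < len(thinks):
            # Think time after the request comes from the trace.
            next_event = (now + r + thinks[req], seq, "request", sid, req + 1)
        else:
            # Last request: the session leaves once its response completes.
            next_event = (now + r, seq, "departure", sid, req)
        heapq.heappush(events, next_event)
        seq += 1

    total = sum(time_at.values())
    return {n: t / total for n, t in sorted(time_at.items())}


def wam_mean_response_time(distribution, response_time):
    """Weighted average of model predictions over non-empty populations.

    Assumption: weights are the time fractions from the distribution.
    """
    busy = {n: p for n, p in distribution.items() if n > 0}
    weight = sum(busy.values())
    return sum(p * response_time(n) for n, p in busy.items()) / weight


if __name__ == "__main__":
    # Toy trace and a toy model in which response time grows with population.
    trace = [(0.0, [10.0]), (5.0, [])]
    model = lambda n: float(n)
    dist = wam_population_distribution(trace, model)
    print(dist, wam_mean_response_time(dist, model))
```

Because the model is passed in as a plain function of the population, the same loop works with any predictive model that can be evaluated at a given number of concurrent sessions.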

To demonstrate the effectiveness of WAM at improving the accuracy of predictions of performance models with bursty workloads, we conducted a case study with data from the TPC-W system deployed at Carleton University [14]. The case study considers various scenarios with inter-arrival time burstiness. We now summarize results for four approaches: an LQM alone (LQM), without taking into account burstiness; an LQM paired with a Markov Chain Birth Death process (Markov Chain Birth Death LQM) that models session population distribution but assumes session arrival and departure times are exponentially distributed [20]; WAM with the session population distribution computed empirically (Empirical WAM LQM) using measured response times from the trace, but that estimates overall mean response time using the corresponding measured session population distribution with the LQM; and WAM with the session population distribution computed using an LQM for request response time estimates and for the overall mean response time calculation (Monte Carlo WAM LQM).

Empirical WAM LQM gives the best possible results for the approach. Monte Carlo WAM LQM offers a constructive method that approximates Empirical WAM LQM and can also be used to conduct sensitivity analyses by either modifying think time distributions or using re-generated traces with different session length distributions. Clearly, in such cases measured response time values are no longer known so Empirical WAM cannot be applied.

Table 4 summarizes the error measures for mean response times for thirty-nine experiments, including seventeen experiment replications that employed bounded Pareto session length and think time distributions [14]. Table 5 includes only the results for the seventeen cases with inter-arrival time burstiness. The results offer the mean absolute error, maximum error, and trend error for each approach. The mean absolute error is a weighted measure of error; the trend error is the greatest difference between error values (including sign). A larger trend error suggests less confidence in the ability of the model to predict the impact of changes to the system.
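The three error measures can be illustrated with a short sketch. It assumes that errors are signed percentage differences between predicted and measured mean response times and that the mean absolute error is an unweighted average; the text describes a weighted measure without giving the weights, so that simplification, the function name, and the sample values are assumptions made here for illustration only.

```python
def error_measures(measured, predicted):
    """Return (mean absolute error, maximum error, trend error) in percent.

    Assumptions: signed error is 100 * (predicted - measured) / measured,
    the mean absolute error is unweighted, and the trend error is the
    largest signed error minus the smallest signed error.
    """
    errors = [100.0 * (p - m) / m for m, p in zip(measured, predicted)]
    mean_abs = sum(abs(e) for e in errors) / len(errors)
    max_err = max(abs(e) for e in errors)
    trend = max(errors) - min(errors)
    return mean_abs, max_err, trend


if __name__ == "__main__":
    # Hypothetical measured and predicted mean response times.
    measured = [100.0, 100.0, 200.0]
    predicted = [110.0, 90.0, 210.0]
    print(error_measures(measured, predicted))
```

A model that overestimates in some experiments and underestimates in others can have a modest mean absolute error yet a large trend error, which is why the trend error is read as a measure of confidence in predicted changes.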


Table 2: Measured and predicted mean dialog response times for 6 dialog work processes

Pop    Measured CPU U    Measured DialogResp    LQM DialogResp
10     0.08              142.12                 139.67
50     0.36              151.77                 149.14
75     0.49              157.15                 158.42
100    0.60              165.36                 170.63
150    0.76              209.62                 241.19
175    0.85              293.11                 329.66

Table 3: Measured and predicted mean dialog response times for 3 dialog work processes

Pop    Measured CPU U    Measured DialogResp    LQM DialogResp
10     0.08              142.08                 135.62
50     0.36              154.55                 154.96
75     0.49              164.23                 167.94
100    0.60              190.56                 180.59
150    0.76              320.32                 322.84
175    0.84              473.23                 586.71

Table 4 shows that the LQM alone has the greatest errors. While the Markov Chain Birth Death approach does model session population distribution, it does not model burstiness so it does not improve the accuracy of predictions. The Empirical WAM approach decreases all measures of errors by 10% or more by using the empirical session population distribution along with the LQM. Monte Carlo WAM LQM offers results that are nearly as accurate as those of Empirical WAM. Table 5 gives similar results but only includes the cases with inter-arrival time burstiness.

Figure 7 illustrates some interesting results for four replicates of a case with heavy-tailed session length distributions. For this case system behaviour was different for each of the four runs. Figures 7(a) and (b) illustrate session population pdfs for two of the four runs. Clearly the pdfs are very different. The pdfs for the other two runs were also different. This was despite the fact that the runs were for many hours each. Significant burstiness can cause a range of system behaviours such that there is no steady state behaviour. Figure 7(c) shows the measured and estimated mean request response times for the LQM, Markov Chain Birth Death LQM, and Monte Carlo LQM approaches for the four runs. The Monte Carlo LQM approach outperforms the other approaches. The Markov Chain Birth Death LQM approach models session population distribution but not the run-specific impact of burstiness on the distribution.

To summarize, this section described the WAM method. It has been used with traces of sessions, similar to those from performance validation tests, along with performance models to predict the impact of inter-arrival time burstiness on systems. The approach is trace based and as a result is compatible with the algebraic method for workload modelling.

7. SUMMARY AND CONCLUSIONS

This paper describes our progress to date on BAP, a Benchmark-driven Algebraic method for the Performance engineering of customized services in shared resource utilities. We have described a customization process for service instances that enables parameters for performance models and performance validation tests to be automatically generated. An algebraic approach is used for workload modelling. This enabled an integrated set of methods named SWAT, DEC, BURN, and WAM that support performance modelling and performance testing and that take into account important features such as inter-arrival time and service demand burstiness.

The examples presented in the paper have shown that the relationship between software performance, in terms of response times, and capacity, in terms of utilizations, is complex. There can be many different behaviours for systems with the same throughputs and utilization values. While this has certainly been demonstrated in other related fields, we are not aware of other comprehensive methods such as those presented here for studying these issues for multi-tier software systems.

Our future work includes further case studies that apply the methods to enterprise systems. We intend to further develop WAM to support more kinds of burstiness, to support the development and validation of adaptation policies for complex applications, to predict the behaviour of services in adaptive environments, and to explore the notion of benchmark design to better choose sets of benchmarks to characterize services.

8. ACKNOWLEDGMENTS

The authors thank Amir Kalbasi and Min Xu of the University of Calgary, Stephan Kraft of SAP Research, and Sven Graupner of HP Labs for their collaboration and support of this work.

Table 4: WAM vs BirthDeath for Modelling Session Population Distribution for All Experiments

Approach                         Abs Error %    Max Error %    Trend Error %
LQM                              15.2           32.4           42.5
Markov Chain Birth Death LQM     13.7           32.6           45.1
Empirical WAM LQM                5.79           15.5           28.2
Monte Carlo WAM LQM              7.2            18.4           33.2

Table 5: WAM vs BirthDeath for Modelling Session Population Distribution for Bursty Experiments

Approach                         Abs Error %    Max Error %    Trend Error %
LQM                              21.2           32.4           28.0
Birth Death LQM                  19.2           32.6           30.1
Empirical WAM LQM                4.93           13.8           25.5
Monte Carlo WAM LQM              7.1            18.4           31.2

9. REFERENCES

[1] M. Arlitt, D. Krishnamurthy, and J. Rolia. Characterizing the scalability of a large web-based shopping system. ACM Transactions on Internet Technology, 1(1):44–69, 2001.

[2] S. Balsamo, A. D. Marco, P. Inverardi, and M. Simeoni. Model-based performance prediction in software development: A survey. IEEE Transactions on Software Engineering, 30(5):295–310, May 2004.

[3] Y. Bard and M. Shatzoff. Statistical methods in computer performance analysis. In K. Chandy and R. Yeh, editors, Current Trends in Programming Methodology, Volume III, Software Modelling. Prentice-Hall, Englewood Cliffs, N.J., 1978.

[4] P. Barford and M. Crovella. Generating representative web workloads for network and server performance evaluation. ACM Performance Evaluation Review, 26(1):151–160, 1998.

[5] J. P. Buzen. Computational algorithms for closed queueing networks with exponential servers. Comm. of the ACM, 16(9):527–531, 1973.

[6] G. Casale, A. Kalbasi, D. Krishnamurthy, and J. Rolia. Automatic stress testing of multi-tier systems by dynamic bottleneck switch generation. To appear in Proc. of USENIX Middleware, December 2009.

[7] G. Casale, N. Mi, and E. Smirni. Bound analysis of closed queueing networks with workload burstiness. In Proc. of ACM SIGMETRICS 2008, pages 13–24. ACM Press, 2008.

[8] J. J. Dujmovic. Universal benchmark suites. In Proc. of MASCOTS, pages 197–205, 1999.

[9] S. Gaisbauer, J. Kirschnick, N. Edwards, and J. Rolia. VATS: Virtualized-aware automated test service. In Proc. of Quantitative Evaluation of Systems, 2008.

[10] R. Jain. The Art of Computer Systems Performance Analysis: Techniques for Experimental Design, Measurement, Simulation, and Modeling. John Wiley & Sons, 1991.

[11] K. Kant, V. Tewary, and R. Iyer. An internet traffic generator for server architecture evaluation. In Proc. CAECW, January 2001.

[12] D. Krishnamurthy. Synthetic Workload Generation for Stress Testing Session-Based Systems. Ph.D. Thesis, Department of Systems and Computer Engineering, Carleton University, Ottawa, January 2004.

[13] D. Krishnamurthy, J. Rolia, and S. Majumdar. A synthetic workload generation technique for stress testing session-based systems. IEEE Trans. on Software Engineering, 32(11):868–882, Nov 2006.

[14] D. Krishnamurthy, J. Rolia, and M. Xu. WAM: The weighted average method for predicting the performance of systems with bursts of customer sessions. To appear in IEEE Transactions on Software Engineering, 2009.

[15] U. Krishnaswamy and D. Scherson. A framework for computer performance evaluation using benchmark sets. IEEE Trans. on Computers, 49(12):1325–1338, Dec 2000.

[16] P. Lax. Linear Algebra. John Wiley & Sons, New York, 1997.

[17] W. E. Leland, M. S. Taqqu, W. Willinger, and D. V. Wilson. On the self-similar nature of Ethernet traffic. IEEE/ACM Trans. on Networking, 2(1):1–15, 1994.

[18] M. Litoiu, J. Rolia, and G. Serazzi. Designing process replication and threading policies: A quantitative approach. In Proc. 10th International Conference on Modelling Techniques and Tools for Computer Performance Evaluation, LNCS, volume 1469, pages 15–26, 1998.

[19] M. Litoiu, J. Rolia, and G. Serazzi. Designing process replication and activation, a quantitative approach. IEEE Transactions on Software Engineering, 26(12):1168–1178, 2000.

[20] D. Menasce and V. A. F. Almeida. Capacity Planning for Web Performance: Metrics, Models, and Methods. Prentice Hall, 1998.

[21] N. Mi, G. Casale, L. Cherkasova, and E. Smirni. Burstiness in multi-tier applications: Symptoms, causes, and new models. In V. Issarny and R. E. Schantz, editors, Middleware, volume 5346 of Lecture Notes in Computer Science, pages 265–286. Springer, 2008.

[22] N. Mi, G. Casale, L. Cherkasova, and E. Smirni. Injecting realistic burstiness to a traditional client-server benchmark. In Proc. of ICAC, June 2009.

[23] N. Mi, Q. Zhang, A. Riska, E. Smirni, and E. Riedel. Performance impacts of autocorrelated flows in multi-tiered systems. Performance Evaluation, 64(9-12):1082–1101, 2007.

[24] D. Mosberger and T. Jin. httperf: A tool for measuring web server performance. In Proc. of Workshop on Internet Server Performance, June 1998.

[25] M. Reiser and S. S. Lavenberg. Mean-value analysis of closed multichain queueing networks. Journal of the ACM, 27(2):312–322, 1980.

[26] A. Riska and E. Riedel. Long-range dependence at the disk drive level. In Proc. of the 3rd Conf. on Quantitative Evaluation of Systems (QEST), pages 41–50. IEEE Press, 2006.

[27] J. Rolia, G. Casale, D. Krishnamurthy, S. Dawson, and S. Kraft. Predictive modelling of SAP ERP applications: Challenges and solutions. To appear in Proc. of the Workshop on Run-time Models for Self-managing Systems and Applications, Oct 2009.


[28] J. Rolia, D. Krishnamurthy, A. Kalbasi, and S. Dawson. Resource demand modeling for multi-tier services. To appear in Proc. of the Workshop on Software and Performance, Jan 2009.

[29] J. A. Rolia and K. C. Sevcik. The method of layers. IEEE Trans. on Software Engineering, 21(8):689–700, 1995.

[30] T. Schneider. SAP Performance Optimization Guide. SAP Press, 2003.

[31] N. Tiwari and P. Mynampati. Experiences of using LQN and QPN tools for performance modeling of a J2EE application. In Proc. of Computer Measurement Group (CMG) Conference, pages 537–548, 2006.

[32] B. Urgaonkar, G. Pacifici, P. J. Shenoy, M. Spreitzer, and A. N. Tantawi. An analytical model for multi-tier internet services and its applications. In Proc. of ACM SIGMETRICS, pages 291–302. ACM Press, 2005.

[33] M. Woodside, J. E. Nielsen, D. C. Petriu, and S. Majumdar. The stochastic rendezvous network model for performance of synchronous client-server-like distributed software. IEEE Transactions on Computers, 44(1):20–34, January 1995.

Figure 7: Predicted and Measured Mean Response Times with Heavy Tail Distribution
(a) Session Pop Distribution Example 1
(b) Session Pop Distribution Example 2
(c) Mean Response Time Comparison for Four Runs
