arXiv:1806.09178v3 [stat.ML] 8 Jun 2019 · Dino Oglic Department of Informatics King’s College...


Journal of Machine Learning Research (2019) Submitted ; Published

Towards a Unified Analysis of Random Fourier Features

Zhu Li, Department of Statistics, University of Oxford, Oxford, OX1 3LB, UK

Jean-Francois Ton, Department of Statistics, University of Oxford, Oxford, OX1 3LB, UK

Dino Oglic, Department of Informatics, King’s College London, London, WC2R 2LS, UK

Dino Sejdinovic, Department of Statistics, University of Oxford, Oxford, OX1 3LB, UK

Editor:

Abstract

Random Fourier features is a widely used, simple, and effective technique for scaling up kernel methods. The existing theoretical analysis of the approach, however, remains focused on specific learning tasks and typically gives pessimistic bounds which are at odds with the empirical results. We tackle these problems and provide the first unified risk analysis of learning with random Fourier features using the squared error and Lipschitz continuous loss functions. In our bounds, the trade-off between the computational cost and the expected risk convergence rate is problem specific and expressed in terms of the regularization parameter and the number of effective degrees of freedom. We study both the standard random Fourier features method, for which we improve the existing bounds on the number of features required to guarantee the corresponding minimax risk convergence rate of kernel ridge regression, as well as a data-dependent modification which samples features proportional to ridge leverage scores and further reduces the required number of features. As ridge leverage scores are expensive to compute, we devise a simple approximation scheme which provably reduces the computational cost without loss of statistical efficiency.

Keywords: Kernel methods, random Fourier features, stationary kernels, kernel ridge regression, Lipschitz continuous loss, support vector machines, logistic regression, ridge leverage scores.

1. Introduction

Kernel methods are one of the pillars of machine learning (Schölkopf and Smola, 2001; Schölkopf et al., 2004), as they give us a flexible framework to model complex functional relationships in a principled way and also come with well-established statistical properties and theoretical guarantees (Caponnetto and De Vito, 2007; Steinwart and Christmann, 2008). The key ingredient, known as

arXiv:1806.09178v4 [stat.ML] 5 Dec 2019


kernel trick, allows implicit computation of an inner product between rich feature representations of data through the kernel evaluation $k(x, x') = \langle \varphi(x), \varphi(x') \rangle_{\mathcal{H}}$, while the actual feature mapping $\varphi : \mathcal{X} \to \mathcal{H}$ between a data domain $\mathcal{X}$ and some high and often infinite dimensional Hilbert space $\mathcal{H}$ is never computed. However, such convenience comes at a price: due to operating on all pairs of observations, kernel methods inherently require computation and storage which is at least quadratic in the number of observations, and hence often prohibitive for large datasets. In particular, the kernel matrix has to be computed, stored, and often inverted. As a result, a flurry of research into scalable kernel methods and the analysis of their performance emerged (Rahimi and Recht, 2007; Mahoney and Drineas, 2009; Bach, 2013; Alaoui and Mahoney, 2015; Rudi et al., 2015; Rudi and Rosasco, 2017; Rudi et al., 2017; Zhang et al., 2015). Among the most popular frameworks for fast approximations to kernel methods are random Fourier features (RFF) due to Rahimi and Recht (2007). The idea of random Fourier features is to construct an explicit feature map which is of a dimension much lower than the number of observations, but with the resulting inner product which approximates the desired kernel function $k(x, y)$. In particular, random Fourier features rely on Bochner’s theorem (Bochner, 1932; Rudin, 2017) which tells us that any bounded, continuous and shift-invariant kernel is a Fourier transform of a bounded positive measure, called spectral measure. The feature map is then constructed using samples drawn from the spectral measure. Essentially, any kernel method can then be adjusted to operate on these explicit feature maps (i.e., primal representations), greatly reducing the computational and storage costs, while in practice mimicking performance of the original kernel method.

Despite their empirical success, the theoretical understanding of statistical properties of random Fourier features is incomplete, and the question of how many features are needed, in order to obtain a method with performance provably comparable to the original one, remains without a definitive answer. Currently, there are two main lines of research addressing this question. The first line considers the approximation error of the kernel matrix itself (e.g., see Rahimi and Recht, 2007; Sriperumbudur and Szabó, 2015; Sutherland and Schneider, 2015, and references therein) and bases performance guarantees on the accuracy of this approximation. However, all of these works require $\Omega(n)$ features ($n$ being the number of observations), which translates to no computational savings at all and is at odds with empirical findings. Realizing that the approximation of kernel matrices is just a means to an end, the second line of research aims at directly studying the risk and generalization properties of random Fourier features in various supervised learning scenarios. Arguably, the first such result is already in Rahimi and Recht (2009), where supervised learning with Lipschitz continuous loss functions is studied. However, the bounds therein still require a pessimistic $\Omega(n)$ number of features and, due to the Lipschitz continuity requirement, the analysis does not apply to kernel ridge regression (KRR), one of the most commonly used kernel methods. In Bach (2017b), the generalization properties are studied from a function approximation perspective, showing for the first time that fewer features could preserve the statistical properties of the original method, but in the case where a certain data-dependent sampling distribution is used instead of the spectral measure. These results also do not apply to kernel ridge regression and the mentioned sampling distribution is typically itself intractable. Avron et al. (2017) study the empirical risk of kernel ridge regression and show that it is possible to use $o(n)$ features and have the empirical risk of the linear ridge regression estimator based on random Fourier features close to the empirical risk of the original kernel estimator, also relying on a modification to the sampling distribution. However, this result is for the empirical risk only, does not provide any expected risk convergence rates, and a tractable method to sample from a modified distribution is proposed for the Gaussian kernel only. A highly refined analysis of kernel ridge regression is given by Rudi and Rosasco (2017), where it is shown that $\Omega(\sqrt{n} \log n)$ features suffice for an optimal $O(1/\sqrt{n})$ learning error in a minimax sense (Caponnetto and De Vito, 2007). Moreover, the number of features can be reduced even further if a data-dependent sampling distribution is employed. While these are groundbreaking results, guaranteeing computational savings without any loss of statistical efficiency, they require some technical assumptions that are difficult to verify. Moreover, to what extent the bounds can be improved by utilizing data-dependent distributions still remains unclear. Finally, it does not seem straightforward to generalize the approach of Rudi and Rosasco (2017) to kernel support vector machines (SVM) and/or kernel logistic regression (KLR). Recently, Sun et al. (2018) have provided novel bounds for random Fourier features in the SVM setting, assuming Massart’s low noise condition and that the target hypothesis lies in the corresponding reproducing kernel Hilbert space. The bounds, however, require the sample complexity and the number of features to be exponential in the dimension of the instance space and this can be problematic for high dimensional instance spaces. The theoretical results are also restricted to the hinge loss (without means to generalize to other loss functions) and require optimized features.

In this paper, we address the gaps mentioned above by making the following contributions:

• We devise a simple framework for the unified analysis of generalization properties of random Fourier features, which applies to kernel ridge regression, as well as to kernel support vector machines and logistic regression.

• For the plain random Fourier features sampling scheme, we provide, to the best of our knowledge, the sharpest results on the number of features required. In particular, we show that already with $\Omega(\sqrt{n} \log d_K^\lambda)$ features, we incur no loss of learning accuracy in kernel ridge regression, where $d_K^\lambda$ corresponds to the notion of the number of effective degrees of freedom (Bach, 2013) with $d_K^\lambda \ll n$ and $\lambda := \lambda(n)$ is the regularization parameter. In addition, $\Omega(1/\lambda)$ features are sufficient to ensure an $O(\sqrt{\lambda})$ expected risk rate in kernel support vector machines and kernel logistic regression.

• In the case of a modified data-dependent sampling distribution, the so called empirical ridge leverage score distribution, we demonstrate that $\Omega(d_K^\lambda)$ features suffice for the learning risk to converge at $O(\lambda)$ rate in kernel ridge regression ($O(\sqrt{\lambda})$ in kernel support vector machines and kernel logistic regression).

• In our refined analysis of kernel ridge regression, we show that the excess risk convergence rate of the estimator based on random Fourier features can (depending on the decay rate of the spectrum of kernel function) be upper bounded by $O(\frac{\log n}{n})$ or even $O(\frac{1}{n})$, which implies much faster convergence than the $O(\frac{1}{\sqrt{n}})$ rate featuring in most of previous bounds.

• Similarly, our refined analysis for Lipschitz continuous loss demonstrates that under a realizable case (defined subsequently) one could achieve an $O(\frac{\log n}{\sqrt{n}})$ excess risk convergence rate with only $O(\sqrt{n})$ features. To the best of our knowledge, this is the first result offering non-trivial computational savings for approximations in problems with Lipschitz loss functions.

• Finally, as the empirical ridge leverage scores distribution is typically costly to compute, we give a fast algorithm to generate samples from the approximated empirical leverage distribution. Utilizing these samples one can significantly reduce the computation time during the in sample prediction and testing stages, $O(n)$ and $O(\log n \log\log n)$, respectively. We also include a proof that gives a trade-off between the computational cost and the expected risk of the algorithm, showing that the statistical efficiency can be preserved while provably reducing the required computational cost.

2. Background

In this section, we provide some notation and preliminary results that will be used throughout the paper. Henceforth, we denote the Euclidean norm of a vector $a \in \mathbb{R}^n$ with $\|a\|_2$ and the operator norm of a matrix $A \in \mathbb{R}^{n_1 \times n_2}$ with $\|A\|_2$. Furthermore, we denote with $\|A\|_F$ the Frobenius norm of a matrix or operator $A$. Let $\mathcal{H}$ be a Hilbert space with $\langle \cdot, \cdot \rangle_{\mathcal{H}}$ as its inner product and $\|\cdot\|_{\mathcal{H}}$ as its norm. We use $\mathrm{Tr}(\cdot)$ to denote the trace of an operator or a matrix. Given a measure $d\rho$, we use $L_2(d\rho)$ to denote the space of square-integrable functions with respect to $d\rho$.

2.1 Random Fourier Features

Random Fourier features is a widely used, simple, and effective technique for scaling up kernel methods. The underlying principle of the approach is a consequence of Bochner’s theorem (Bochner, 1932), which states that any bounded, continuous and shift-invariant kernel is a Fourier transform of a bounded positive measure. This measure can be transformed/normalized into a probability measure which is typically called the spectral measure of the kernel. Assuming the spectral measure $d\tau$ has a density function $p(\cdot)$, the corresponding shift-invariant kernel can be written as

$$k(x, y) = \int_{\mathcal{V}} e^{-2\pi i v^T (x - y)} \, d\tau(v) = \int_{\mathcal{V}} \left(e^{-2\pi i v^T x}\right) \left(e^{-2\pi i v^T y}\right)^* p(v) \, dv, \tag{1}$$

where $c^*$ denotes the complex conjugate of $c \in \mathbb{C}$. Typically, the kernel is real valued and we can ignore the imaginary part in this equation (e.g., see Rahimi and Recht, 2007). The principle can be further generalized by considering the class of kernel functions which can be decomposed as

$$k(x, y) = \int_{\mathcal{V}} z(v, x) z(v, y) p(v) \, dv, \tag{2}$$

where $z : \mathcal{V} \times \mathcal{X} \to \mathbb{R}$ is a continuous and bounded function with respect to $v$ and $x$. The main idea behind random Fourier features is to approximate the kernel function by its Monte-Carlo estimate

$$\tilde{k}(x, y) = \frac{1}{s} \sum_{i=1}^{s} z(v_i, x) z(v_i, y), \tag{3}$$

with reproducing kernel Hilbert space $\tilde{\mathcal{H}}$ (not necessarily contained in the reproducing kernel Hilbert space $\mathcal{H}$ corresponding to the kernel function $k$) and $\{v_i\}_{i=1}^{s}$ sampled independently from the spectral measure. In Bach (2017a, Appendix A), it has been established that a function $f \in \mathcal{H}$ can be expressed as $^{1}$:

$$f(x) = \int_{\mathcal{V}} g(v) z(v, x) p(v) \, dv \quad (\forall x \in \mathcal{X}) \tag{4}$$

1. It is not necessarily true that for any g ∈ L2(dτ), there exists a corresponding f ∈ H.


where $g \in L_2(d\tau)$ is a real-valued function such that $\|g\|^2_{L_2(d\tau)} < \infty$ and $\|f\|_{\mathcal{H}}$ is equal to the minimum of $\|g\|_{L_2(d\tau)}$, over all possible decompositions of $f$. Thus, one can take an independent sample $\{v_i\}_{i=1}^{s} \sim p(v)$ (we refer to this sampling scheme as plain RFF) and approximate a function $f \in \mathcal{H}$ at a point $x_j \in \mathcal{X}$ by

$$\tilde{f}(x_j) = \sum_{i=1}^{s} \alpha_i z(v_i, x_j) := z_{x_j}(v)^T \alpha \quad \text{with } \alpha \in \mathbb{R}^s.$$

In standard estimation problems, it is typically the case that for a given set of instances $\{x_i\}_{i=1}^{n}$ one approximates $f_x = [f(x_1), \cdots, f(x_n)]^T$ by

$$\tilde{f}_x = [z_{x_1}(v)^T \alpha, \cdots, z_{x_n}(v)^T \alpha]^T := Z\alpha,$$

where $Z \in \mathbb{R}^{n \times s}$ with $z_{x_j}(v)^T$ as its $j$th row.

As the latter approximation is simply a Monte Carlo estimate, one could also pick an importance weighted probability density function $q(\cdot)$ and sample features $\{v_i\}_{i=1}^{s}$ from $q$ (we refer to this sampling scheme as weighted RFF). Then, the function value $f(x_j)$ can be approximated by

$$\tilde{f}_q(x_j) = \sum_{i=1}^{s} \beta_i z_q(v_i, x_j) := z_{q,x_j}(v)^T \beta,$$

with $z_q(v_i, x_j) = \sqrt{p(v_i)/q(v_i)} \, z(v_i, x_j)$ and $z_{q,x_j}(v) = [z_q(v_1, x_j), \cdots, z_q(v_s, x_j)]^T$. Hence, a Monte-Carlo estimate of $f_x$ can be written in a matrix form as $\tilde{f}_{q,x} = Z_q \beta$, where $Z_q \in \mathbb{R}^{n \times s}$ with $z_{q,x_j}(v)^T$ as its $j$th row.

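Let $\tilde{K}$ and $\tilde{K}_q$ be Gram-matrices with entries $\tilde{K}_{ij} = \tilde{k}(x_i, x_j)$ and $\tilde{K}_{q,ij} = \tilde{k}_q(x_i, x_j)$ such that

$$\tilde{K} = \frac{1}{s} Z Z^T \quad \text{and} \quad \tilde{K}_q = \frac{1}{s} Z_q Z_q^T.$$

If we now denote the $j$th column of $Z$ by $z_{v_j}(x)$ and the $j$th column of $Z_q$ by $z_{q,v_j}(x)$, then the following equalities can be derived easily from Eq. (3):

$$\mathbb{E}_{v \sim p}(\tilde{K}) = K = \mathbb{E}_{v \sim q}(\tilde{K}_q) \quad \text{and} \quad \mathbb{E}_{v \sim p}\left[z_v(x) z_v(x)^T\right] = K = \mathbb{E}_{v \sim q}\left[z_{q,v}(x) z_{q,v}(x)^T\right].$$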
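The plain and weighted sampling schemes can be illustrated numerically. The sketch below is not taken from the paper: it assumes a Gaussian kernel $k(x, y) = \exp(-\|x - y\|^2 / (2\sigma^2))$, uses the real-valued Rahimi and Recht construction $z((w, b), x) = \sqrt{2}\cos(w^T x + b)$ with $w \sim N(0, I/\sigma^2)$ and $b \sim U[0, 2\pi]$ (a Fourier convention without the $2\pi$ factor of Eq. (1)), and picks a wider Gaussian as a hypothetical proposal $q$. The function names and the `proposal_std` parameter are illustrative choices.

```python
import numpy as np

def gaussian_kernel(X, Y, sigma=1.0):
    """Exact Gram matrix of k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq = np.sum(X**2, 1)[:, None] + np.sum(Y**2, 1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def rff_features(X, s, sigma=1.0, proposal_std=None, rng=None):
    """Return Z (n x s) whose j-th row holds the s features of x_j.

    proposal_std=None : plain RFF, frequencies drawn from p = N(0, I / sigma^2).
    proposal_std=c    : weighted RFF, frequencies drawn from q = N(0, c^2 I) and
                        each feature rescaled by sqrt(p(w) / q(w)).
    """
    rng = np.random.default_rng(rng)
    d = X.shape[1]
    std_p = 1.0 / sigma
    std_q = std_p if proposal_std is None else proposal_std
    W = rng.normal(0.0, std_q, size=(s, d))        # sampled frequencies
    b = rng.uniform(0.0, 2.0 * np.pi, size=s)      # random phases
    Z = np.sqrt(2.0) * np.cos(X @ W.T + b)         # z(v_i, x_j), bounded by sqrt(2)
    if proposal_std is not None:
        sq_norm = np.sum(W**2, axis=1)
        # log p(w) - log q(w) for two isotropic zero-mean Gaussians
        log_ratio = d * np.log(std_q / std_p) \
            - 0.5 * sq_norm * (1.0 / std_p**2 - 1.0 / std_q**2)
        Z = Z * np.exp(0.5 * log_ratio)            # multiply by sqrt(p/q)
    return Z

data_rng = np.random.default_rng(0)
X = data_rng.normal(size=(40, 2))
K = gaussian_kernel(X, X)

Z = rff_features(X, s=20000, rng=1)                      # plain RFF
K_plain = Z @ Z.T / Z.shape[1]                           # (1/s) Z Z^T

Zq = rff_features(X, s=20000, proposal_std=2.0, rng=2)   # weighted RFF
K_weighted = Zq @ Zq.T / Zq.shape[1]                     # (1/s) Z_q Z_q^T

print(np.abs(K_plain - K).max(), np.abs(K_weighted - K).max())
```

Both printed deviations shrink as `s` grows, which reflects the unbiasedness of the two estimators; the weighted variant typically has a larger variance for this arbitrary choice of proposal.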

Since we now conduct regularized empirical risk minimization in $\tilde{\mathcal{H}}$, we need to control the norm of $f$ in that space. The next proposition gives an upper bound on this norm.

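Proposition 1. Assume that the reproducing kernel Hilbert space $\mathcal{H}$ with kernel $k$ admits a decomposition as in Eq. (2) and let $\tilde{\mathcal{H}} := \{ f \mid f = \sum_{i=1}^{s} \alpha_i z(v_i, \cdot), \ \forall \alpha_i \in \mathbb{R} \}$. Then, for all $f \in \tilde{\mathcal{H}}$ it holds that $\|f\|^2_{\tilde{\mathcal{H}}} \le s \|\alpha\|_2^2$, where $\tilde{\mathcal{H}}$ is the reproducing kernel Hilbert space with kernel $\tilde{k}$ (see Eq. 3).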

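Proof. Let us define a space of functions as

$$\mathcal{H}_1 := \{ f \mid f(x) = \alpha z(v, x), \ \alpha \in \mathbb{R} \}.$$

We now show that $\mathcal{H}_1$ is a reproducing kernel Hilbert space with kernel defined as $k_1(x, y) = (1/s) z(v, x) z(v, y)$, where $s$ is a constant. Define a map $M : \mathbb{R} \to \mathcal{H}_1$ such that $M\alpha = \alpha z(v, \cdot)$, $\forall \alpha \in \mathbb{R}$. The map $M$ is a bijection, i.e., for any $f \in \mathcal{H}_1$ there exists a unique $\alpha_f \in \mathbb{R}$ such that $M^{-1} f = \alpha_f$. Now, we define an inner product on $\mathcal{H}_1$ as

$$\langle f, g \rangle_{\mathcal{H}_1} = \langle \sqrt{s} M^{-1} f, \sqrt{s} M^{-1} g \rangle_{\mathbb{R}} = s \alpha_f \alpha_g.$$

It is easy to show that this is a well defined inner product and, thus, $\mathcal{H}_1$ is a Hilbert space.

For any instance $y$, $k_1(\cdot, y) = (1/s) z(v, \cdot) z(v, y) \in \mathcal{H}_1$, since $(1/s) z(v, y) \in \mathbb{R}$ by definition. Take any $f \in \mathcal{H}_1$ and observe that

$$\langle f, k_1(\cdot, y) \rangle_{\mathcal{H}_1} = \langle \sqrt{s} M^{-1} f, \sqrt{s} M^{-1} k_1(\cdot, y) \rangle_{\mathbb{R}} = s \langle \alpha_f, (1/s) z(v, y) \rangle_{\mathbb{R}} = \alpha_f z(v, y) = f(y).$$

Hence, we have demonstrated the reproducing property for $\mathcal{H}_1$ and $\|f\|^2_{\mathcal{H}_1} = s \alpha_f^2$.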

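Now, suppose we have a sample of features $\{v_i\}_{i=1}^{s}$. For each $v_i$, we define the reproducing kernel Hilbert space

$$\mathcal{H}_i := \{ f \mid f(x) = \alpha z(v_i, x), \ \alpha \in \mathbb{R} \}$$

with the kernel $k_i(x, y) = (1/s) z(v_i, x) z(v_i, y)$. Denoting with

$$\tilde{\mathcal{H}} = \oplus_{i=1}^{s} \mathcal{H}_i = \Big\{ f : f = \sum_{i=1}^{s} f_i, \ f_i \in \mathcal{H}_i \Big\}$$

and using the fact that the direct sum of reproducing kernel Hilbert spaces is another reproducing kernel Hilbert space (Berlinet and Thomas-Agnan, 2011), we have that $\tilde{k}(x, y) = \sum_{i=1}^{s} k_i(x, y) = (1/s) \sum_{i=1}^{s} z(v_i, x) z(v_i, y)$ is the kernel of $\tilde{\mathcal{H}}$ and that the squared norm of $f \in \tilde{\mathcal{H}}$ is defined as

$$\|f\|^2_{\tilde{\mathcal{H}}} = \min_{f_i \in \mathcal{H}_i \,\mid\, f = \sum_{i=1}^{s} f_i} \ \sum_{i=1}^{s} \|f_i\|^2_{\mathcal{H}_i} = \min_{\alpha_i \in \mathbb{R} \,\mid\, f_i = \alpha_i z(v_i, \cdot)} \ \sum_{i=1}^{s} s \alpha_i^2 = \min_{\alpha_i \in \mathbb{R} \,\mid\, f_i = \alpha_i z(v_i, \cdot)} s \|\alpha\|_2^2.$$

Hence, we have that $\|f\|^2_{\tilde{\mathcal{H}}} \le s \|\alpha\|_2^2$.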

An importance weighted density function based on the notion of ridge leverage scores is introduced in Alaoui and Mahoney (2015) for landmark selection in the Nyström method (Nyström, 1930; Smola and Schölkopf, 2000; Williams and Seeger, 2001). For landmarks selected using that sampling strategy, Alaoui and Mahoney (2015) establish a sharp convergence rate of the low-rank estimator based on the Nyström method. This result motivates the pursuit of a similar notion for random Fourier features. Indeed, Bach (2017b) proposes a leverage score function based on an integral operator defined using the kernel function and the marginal distribution of a data-generating process. Building on this work, Avron et al. (2017) propose the ridge leverage function with respect to a fixed input dataset, i.e.,

$$l_\lambda(v) = p(v) \, z_v(x)^T (K + n\lambda I)^{-1} z_v(x). \tag{5}$$


From our assumption on the decomposition of a kernel function, it follows that there exists a constant $z_0$ such that $|z(v, x)| \le z_0$ (for all $v$ and $x$) and $z_v(x)^T z_v(x) \le n z_0^2$. We can now deduce the following inequality using a result from Avron et al. (2017, Proposition 4):

$$l_\lambda(v) \le p(v) \frac{z_0^2}{\lambda} \quad \text{with} \quad \int_{\mathcal{V}} l_\lambda(v) \, dv = \mathrm{Tr}\left[K (K + n\lambda I)^{-1}\right] := d_K^\lambda.$$

The quantity $d_K^\lambda$ is known for implicitly determining the number of independent parameters in a learning problem and, thus, it is called the effective dimension of the problem (Caponnetto and De Vito, 2007) or the number of effective degrees of freedom (Bach, 2013; Hastie, 2017).

We can now observe that $q^*(v) = l_\lambda(v)/d_K^\lambda$ is a probability density function. In Avron et al. (2017), it has been established that sampling according to $q^*(v)$ requires fewer Fourier features compared to the standard spectral measure sampling. We refer to $q^*(v)$ as the empirical ridge leverage score distribution and, in the remainder of the manuscript, refer to this sampling strategy as leverage weighted RFF.
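The two quantities above are cheap to evaluate on a small sample, which can help build intuition. The following is an illustrative sketch only: the Gaussian kernel, the cosine feature with $z_0 = \sqrt{2}$, and the function names `effective_dof` and `leverage_ratio` are assumptions made for this example, not part of the paper. It computes $d_K^\lambda$ from the eigenvalues of $K$ and the ratio $l_\lambda(v)/p(v) = z_v(x)^T (K + n\lambda I)^{-1} z_v(x)$ for one sampled feature.

```python
import numpy as np

def effective_dof(K, lam):
    """d_K^lambda = Tr[K (K + n*lam*I)^{-1}], computed from the eigenvalues of K."""
    n = K.shape[0]
    eig = np.clip(np.linalg.eigvalsh(K), 0.0, None)   # guard against tiny negatives
    return float(np.sum(eig / (eig + n * lam)))

def leverage_ratio(z_v, K, lam):
    """z_v(x)^T (K + n*lam*I)^{-1} z_v(x), i.e. l_lambda(v) / p(v) from Eq. (5)."""
    n = K.shape[0]
    return float(z_v @ np.linalg.solve(K + n * lam * np.eye(n), z_v))

rng = np.random.default_rng(0)
n, lam = 50, 0.1
X = rng.normal(size=(n, 2))
sq_dist = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)
K = np.exp(-0.5 * sq_dist)                     # Gaussian kernel, unit bandwidth (assumed)

# One random cosine feature evaluated on all n points; |z| <= z0 = sqrt(2).
w, b = rng.normal(size=2), rng.uniform(0.0, 2.0 * np.pi)
z_v = np.sqrt(2.0) * np.cos(X @ w + b)

d_eff = effective_dof(K, lam)
ratio = leverage_ratio(z_v, K, lam)
print(d_eff, ratio, 2.0 / lam)                 # ratio should not exceed z0^2 / lambda
```

For this bounded feature the ratio stays below $z_0^2/\lambda$, in line with the inequality above, and $d_K^\lambda$ is far smaller than $n$ whenever the spectrum of $K$ decays quickly.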

2.2 Rademacher Complexity

To characterize the stability of a learning algorithm, we need to take into account the complexity of the space of functions. Below, we first introduce a particular measure of the complexity over function spaces known as Rademacher complexity. We then present two lemmas that demonstrate how the Rademacher complexity of a reproducing kernel Hilbert space can be linked to its kernel and how the excess risk can be bounded through the Rademacher complexity.

Definition 1. Let $P_x$ be a probability distribution on a set $\mathcal{X}$ and suppose that $x_1, \cdots, x_n$ are independent samples selected according to $P_x$. Let $\mathcal{H}$ be a class of functions mapping $\mathcal{X}$ to $\mathbb{R}$. Then, the random variable known as the empirical Rademacher complexity is defined as

$$\hat{R}_n(\mathcal{H}) = \mathbb{E}_\sigma \left[ \sup_{f \in \mathcal{H}} \left| \frac{2}{n} \sum_{i=1}^{n} \sigma_i f(x_i) \right| \ \Big| \ x_1, \cdots, x_n \right]$$

where $\sigma_1, \cdots, \sigma_n$ are independent uniform $\pm 1$-valued random variables. The corresponding Rademacher complexity is then defined as the expectation of the empirical Rademacher complexity, i.e.,

$$R_n(\mathcal{H}) = \mathbb{E}\left[\hat{R}_n(\mathcal{H})\right].$$

The following lemma provides the Rademacher complexity for a certain RKHS with kernel k.

Lemma 1. (Bartlett and Mendelson, 2002) Let $\mathcal{H}$ be a reproducing kernel Hilbert space of functions mapping from $\mathcal{X}$ to $\mathbb{R}$ that corresponds to a positive definite kernel $k$. Let $\mathcal{H}_0$ be the unit ball of $\mathcal{H}$, centered at the origin. Then, we have that $R_n(\mathcal{H}_0) \le (1/n) \mathbb{E}_X \sqrt{\mathrm{Tr}(K)}$, where $K$ is the Gram matrix for kernel $k$ over an independent and identically distributed sample $X = \{x_1, \cdots, x_n\}$.

Lemma 2 states that the expected excess risk convergence rate of a particular estimator in $\mathcal{H}$ not only depends on the number of data points, but also on the complexity of $\mathcal{H}$.


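Lemma 2. (Bartlett and Mendelson, 2002, Theorem 8) Let $\{x_i, y_i\}_{i=1}^{n}$ be an independent and identically distributed sample from a probability measure $P$ defined on $\mathcal{X} \times \mathcal{Y}$ and let $\mathcal{H}$ be the space of functions mapping from $\mathcal{X}$ to $\mathcal{A}$. Denote a loss function with $l : \mathcal{Y} \times \mathcal{A} \to [0, 1]$ and define the expected risk function for all $f \in \mathcal{H}$ to be $\mathcal{E}(f) = \mathbb{E}_P(l(y, f(x)))$, together with the corresponding empirical risk function $\hat{\mathcal{E}}(f) = (1/n) \sum_{i=1}^{n} l(y_i, f(x_i))$. Then, for a sample size $n$, for all $f \in \mathcal{H}$ and $\delta \in (0, 1)$, with probability $1 - \delta$, we have that

$$\mathcal{E}(f) \le \hat{\mathcal{E}}(f) + R_n(l \circ \mathcal{H}) + \sqrt{\frac{8 \log(2/\delta)}{n}}$$

where $l \circ \mathcal{H} = \{ (x, y) \to l(y, f(x)) - l(y, 0) \mid f \in \mathcal{H} \}$.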

3. Theoretical Analysis

In this section, we provide a unified analysis of the generalization properties of learning with random Fourier features. We start with a bound for learning with the mean squared error loss function and then extend our results to problems with Lipschitz continuous loss functions. Before presenting the results, we briefly review the standard problem setting for supervised learning with kernel methods.

Let $\mathcal{X}$ be an instance space, $\mathcal{Y}$ a label space, and $\rho(x, y) = \rho_{\mathcal{X}}(x) \rho(y \mid x)$ a probability measure on $\mathcal{X} \times \mathcal{Y}$ defining the relationship between an instance $x \in \mathcal{X}$ and a label $y \in \mathcal{Y}$. A training sample is a set of examples $\{(x_i, y_i)\}_{i=1}^{n}$ sampled independently from the distribution $\rho$, known only through the sample. The distribution $\rho_{\mathcal{X}}$ is called the marginal distribution of a data-generating process. The goal of a supervised learning task defined with a kernel function $k$ (and the associated reproducing kernel Hilbert space $\mathcal{H}$) is to find a hypothesis$^{2}$ $f : \mathcal{X} \to \mathcal{Y}$ such that $f \in \mathcal{H}$ and $f(x)$ is a good estimate of the label $y \in \mathcal{Y}$ corresponding to a previously unseen instance $x \in \mathcal{X}$. While in regression tasks $\mathcal{Y} \subset \mathbb{R}$, in classification tasks it is typically the case that $\mathcal{Y} = \{-1, 1\}$. As a result of the representer theorem, an empirical risk minimization problem in this setting can be expressed as (Schölkopf and Smola, 2001)

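$$f^\lambda := \arg\min_{f \in \mathcal{H}} \ \frac{1}{n} \sum_{i=1}^{n} l(y_i, (K\alpha)_i) + \lambda \alpha^T K \alpha, \tag{6}$$

where $f = \sum_{i=1}^{n} \alpha_i k(x_i, \cdot)$ with $\alpha \in \mathbb{R}^n$, $l : \mathcal{Y} \times \mathcal{Y} \to \mathbb{R}_+$ is a loss function, $K$ is the kernel matrix, and $\lambda$ is the regularization parameter. The hypothesis $f^\lambda$ is an empirical estimator and its ability to describe $\rho$ is measured by the expected risk (Caponnetto and De Vito, 2007)

$$\mathcal{E}(f^\lambda) = \int_{\mathcal{X} \times \mathcal{Y}} l(y, f^\lambda(x)) \, d\rho(x, y).$$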

Similar to Rudi and Rosasco (2017) and Caponnetto and De Vito (2007), we have assumed$^{3}$ the existence of $f_{\mathcal{H}} \in \mathcal{H}$ such that $\mathcal{E}(f_{\mathcal{H}}) = \inf_{f \in \mathcal{H}} \mathcal{E}(f)$. The assumption implies that there exists some ball of radius $R > 0$ containing $f_{\mathcal{H}}$ in its interior. Our theoretical results do not require prior knowledge of this constant and hold uniformly over all finite radii. To simplify our derivations and constant terms in our bounds, we have (without loss of generality) assumed that $R = 1$.

2. Throughout the paper, we assume (without loss of generality) that our hypothesis space is the unit ball in a reproducing kernel Hilbert space $\mathcal{H}$, i.e., $\|f\|_{\mathcal{H}} \le 1$. This is a standard assumption, characteristic of the analysis of random Fourier features (e.g., see Rudi and Rosasco, 2017).

3. The existence of $f_{\mathcal{H}}$ depends on the complexity of $\mathcal{H}$ which is related to the conditional distribution $\rho(y \mid x)$ and the marginal distribution $\rho_{\mathcal{X}}$. For more details, please see Caponnetto and De Vito (2007) and Rudi and Rosasco (2017).

3.1 Learning with the Squared Error Loss

In this section, we consider learning with the squared error loss, i.e., $l(y, f(x)) = (y - f(x))^2$. For this particular loss function, the optimization problem from Eq. (6) is known as kernel ridge regression. The problem can be reduced to solving a linear system $(K + n\lambda I)\alpha = Y$, with $Y = [y_1, \cdots, y_n]^T$. Typically, an approximation of the kernel function based on random Fourier features is employed in order to effectively reduce the computational cost and scale kernel ridge regression to problems with millions of examples. More specifically, for a vector of observed labels $Y$ the goal is to find a hypothesis $\tilde{f}_x = Z_q \beta$ that minimizes $\|Y - \tilde{f}_x\|_2^2$ while having good generalization properties. In order to achieve this, one needs to control the complexity of hypotheses defined by random Fourier features and avoid over-fitting. According to Proposition 1, $\|\tilde{f}\|^2_{\tilde{\mathcal{H}}}$ can be upper bounded by $s \|\beta\|_2^2$, where $s$ is the number of sampled features. Hence, the learning problem with random Fourier features and the squared error loss can be cast as

$$\beta_\lambda := \arg\min_{\beta \in \mathbb{R}^s} \ \frac{1}{n} \|Y - Z_q \beta\|_2^2 + \lambda s \|\beta\|_2^2. \tag{7}$$

This is a linear ridge regression problem in the space of Fourier features and the optimal hypothesis is given by $f_\beta^\lambda = Z_q \beta_\lambda$, where setting the gradient of the objective in Eq. (7) to zero gives $\beta_\lambda = (Z_q^T Z_q + n s \lambda I)^{-1} Z_q^T Y$. Since $Z_q \in \mathbb{R}^{n \times s}$, the computational and space complexities are $O(s^3 + ns^2)$ and $O(ns)$, respectively. Thus, significant savings can be achieved using estimators with $s \ll n$. To assess the effectiveness of such estimators, it is important to understand the relationship between the expected risk and the choice of $s$.
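The relation between Eq. (7) and kernel ridge regression with the approximate kernel can be checked numerically. The sketch below is only an illustration under stated assumptions: the feature matrix is a random stand-in for $Z_q$, and the function names are hypothetical. It solves the first-order condition of Eq. (7), which reads $(Z_q^T Z_q + n s \lambda I)\beta = Z_q^T Y$, and compares the fitted values $Z_q \beta_\lambda$ with those obtained from the $n \times n$ system $(\tilde{K}_q + n\lambda I)\alpha = Y$ with $\tilde{K}_q = (1/s) Z_q Z_q^T$.

```python
import numpy as np

def rff_ridge(Z, y, lam):
    """Minimizer of (1/n)||y - Z b||^2 + lam * s * ||b||^2, an s x s linear solve."""
    n, s = Z.shape
    A = Z.T @ Z + n * s * lam * np.eye(s)
    return np.linalg.solve(A, Z.T @ y)

def krr_train_predictions(K, y, lam):
    """In-sample predictions K alpha with (K + n*lam*I) alpha = y, an n x n solve."""
    n = K.shape[0]
    alpha = np.linalg.solve(K + n * lam * np.eye(n), y)
    return K @ alpha

rng = np.random.default_rng(0)
n, s, lam = 30, 8, 0.05
Z = rng.normal(size=(n, s))          # stand-in for the feature matrix Z_q (assumed)
y = rng.normal(size=n)

beta = rff_ridge(Z, y, lam)
K_tilde = Z @ Z.T / s                # approximate Gram matrix (1/s) Z Z^T

primal = Z @ beta                                  # costs O(s^3 + n s^2)
dual = krr_train_predictions(K_tilde, y, lam)      # costs O(n^3)
grad = -(2.0 / n) * Z.T @ (y - Z @ beta) + 2.0 * lam * s * beta
print(np.abs(primal - dual).max(), np.abs(grad).max())
```

The two sets of fitted values agree up to rounding, so the $s \times s$ solve gives the same estimator as kernel ridge regression on the approximate kernel while avoiding the $n \times n$ system.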

3.1.1 WORST CASE ANALYSIS

In this section, we assume that the unit ball of the reproducing kernel Hilbert space contains the hypothesis $f_{\mathcal{H}}$ and provide a bound on the required number of random Fourier features with respect to the worst case minimax rate of the corresponding kernel ridge regression problem. The following theorem gives a general result while taking into account both the number of features $s$ and a sampling strategy for selecting them.

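Theorem 1. Assume a kernel function $k$ has a decomposition as in Eq. (2) and let $|y| \le y_0$ be bounded with $y_0 > 0$. Denote with $\lambda_1 \ge \cdots \ge \lambda_n$ the eigenvalues of the kernel matrix $K$ and assume the regularization parameter satisfies $0 \le n\lambda \le \lambda_1$. Let $\tilde{l} : \mathcal{V} \to \mathbb{R}$ be a measurable function such that $\tilde{l}(v) \ge l_\lambda(v)$ ($\forall v \in \mathcal{V}$) and $d_{\tilde{l}} = \int_{\mathcal{V}} \tilde{l}(v) \, dv < \infty$. Suppose $\{v_i\}_{i=1}^{s}$ are sampled independently from the probability density function $q(v) = \tilde{l}(v)/d_{\tilde{l}}$. If the unit ball of $\mathcal{H}$ contains the optimal hypothesis $f_{\mathcal{H}}$ and

$$s \ge 5 d_{\tilde{l}} \log \frac{16 d_K^\lambda}{\delta},$$

then for all $\delta \in (0, 1)$, with probability $1 - \delta$, the excess risk of $f_\beta^\lambda$ can be upper bounded as

$$\mathcal{E}(f_\beta^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \le 2\lambda + O\left(\frac{1}{\sqrt{n}}\right) + \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}). \tag{8}$$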


Theorem 1 expresses the trade-off between the computational and statistical efficiency through the regularization parameter $\lambda$, the effective dimension of the problem $d_K^\lambda$, and the normalization constant of the sampling distribution $d_{\tilde{l}}$. The regularization parameter can be considered as some function of the number of training examples (Caponnetto and De Vito, 2007; Rudi and Rosasco, 2017) and we use its decay rate as the sample size increases to quantify the complexity of the target regression function $f_\rho(x) = \int y \, d\rho(y \mid x)$. In particular, Caponnetto and De Vito (2007) have shown that the minimax risk convergence rate for kernel ridge regression is $O(\frac{1}{\sqrt{n}})$. Setting $\lambda \propto \frac{1}{\sqrt{n}}$, we observe that the estimator $f_\beta^\lambda$ attains the worst case minimax rate of kernel ridge regression.

To prove Theorem 1, we need Theorem 2 and Lemma 3 to analyse the learning risk. In Theorem 2, we give a general result that provides an upper bound on the approximation error between any function $f \in \mathcal{H}$ and its estimator based on random Fourier features. As discussed in Section 2, we would like to approximate a function $f \in \mathcal{H}$ at observation points with preferably as small function norm as possible. The estimation of $f_x$ can be formulated as the following optimization problem:

$$\min_{\beta \in \mathbb{R}^s} \ \frac{1}{n} \|f_x - Z_q \beta\|_2^2 + \lambda s \|\beta\|_2^2.$$

Below we provide the desired upper bound on the approximation error of the estimator based on random Fourier features (proof presented in Appendix B).

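Theorem 2. Let $\lambda_1 \ge \cdots \ge \lambda_n$ be the eigenvalues of the kernel matrix $K$ and assume that the regularization parameter satisfies $0 \le n\lambda \le \lambda_1$. Let $\tilde{l} : \mathcal{V} \to \mathbb{R}$ be a measurable function such that $\tilde{l}(v) \ge l_\lambda(v)$ ($\forall v \in \mathcal{V}$) and

$$d_{\tilde{l}} = \int_{\mathcal{V}} \tilde{l}(v) \, dv < \infty.$$

Suppose $\{v_i\}_{i=1}^{s}$ are sampled independently according to the probability density function $q(v) = \tilde{l}(v)/d_{\tilde{l}}$. If

$$s \ge 5 d_{\tilde{l}} \log \frac{16 d_K^\lambda}{\delta},$$

then for all $\delta \in (0, 1)$ and $\|f\|_{\mathcal{H}} \le 1$, with probability greater than $1 - \delta$, it holds that

$$\sup_{\|f\|_{\mathcal{H}} \le 1} \ \inf_{\sqrt{s}\|\beta\|_2 \le \sqrt{2}} \ \frac{1}{n} \|f_x - Z_q \beta\|_2^2 \le 2\lambda. \tag{9}$$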

The next lemma, following Rudi and Rosasco (2017), is important in demonstrating the risk convergence rate as it illustrates the relationship between $Y$, $f^\lambda$ and $f_\beta^\lambda$. The proof is given in Appendix C.

Lemma 3. Assuming that the conditions of Theorem 1 hold, let $f^\lambda$ and $f_\beta^\lambda$ be the empirical estimators from problems (6) and (7), respectively. In addition, suppose that $\{v_i\}_{i=1}^{s}$ are independent samples selected according to a probability measure $\tau_q$ with probability density function $q(v)$ such that $p(v)/q(v) > 0$ almost surely. Then, we have

$$\langle Y - f^\lambda, f_\beta^\lambda - f^\lambda \rangle = 0.$$

Equipped with Theorem 2 and Lemma 3, we are now ready to prove Theorem 1.


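Proof. The proof relies on the decomposition of the expected risk $\mathcal{E}(f_\beta^\lambda)$ as follows

$$\begin{aligned}
\mathcal{E}(f_\beta^\lambda) = {} & \mathcal{E}(f_\beta^\lambda) - \hat{\mathcal{E}}(f_\beta^\lambda) && (10) \\
& + \hat{\mathcal{E}}(f_\beta^\lambda) - \hat{\mathcal{E}}(f^\lambda) && (11) \\
& + \hat{\mathcal{E}}(f^\lambda) - \mathcal{E}(f^\lambda) && (12) \\
& + \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}) && (13) \\
& + \mathcal{E}(f_{\mathcal{H}}).
\end{aligned}$$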

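For (10), the bound is based on the Rademacher complexity of the reproducing kernel Hilbert space $\tilde{\mathcal{H}}$, where $\tilde{\mathcal{H}}$ corresponds to the approximated kernel $\tilde{k}$. We can upper bound the Rademacher complexity of this hypothesis space with Lemma 1. As $l(y, f(x))$ is the squared error loss function with $y$ and $f(x)$ bounded, we have that $l$ is a Lipschitz continuous function with some constant $L > 0$. Hence,

$$\begin{aligned}
(10) & \le R_n(l \circ \tilde{\mathcal{H}}) + \sqrt{\frac{8 \log(2/\delta)}{n}} \\
& \le \sqrt{2} L \frac{1}{n} \mathbb{E}_X \sqrt{\mathrm{Tr}(\tilde{K})} + \sqrt{\frac{8 \log(2/\delta)}{n}} \\
& \le \sqrt{2} L \frac{1}{n} \sqrt{\mathbb{E}_X \mathrm{Tr}(\tilde{K})} + \sqrt{\frac{8 \log(2/\delta)}{n}} \\
& \le \sqrt{2} L \frac{1}{n} \sqrt{n z_0^2} + \sqrt{\frac{8 \log(2/\delta)}{n}} \\
& \le \frac{\sqrt{2} L z_0}{\sqrt{n}} + \sqrt{\frac{8 \log(2/\delta)}{n}} \in O\left(\frac{1}{\sqrt{n}}\right),
\end{aligned} \tag{14}$$

where in the last inequality we applied Lemma 2 to $\tilde{\mathcal{H}}$, which is a reproducing kernel Hilbert space with radius $\sqrt{2}$. For (12), a similar reasoning can be applied to the unit ball in the reproducing kernel Hilbert space $\mathcal{H}$.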


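For (11), we observe that

$$\begin{aligned}
\hat{\mathcal{E}}(f_\beta^\lambda) - \hat{\mathcal{E}}(f^\lambda) & = \frac{1}{n} \|Y - f_\beta^\lambda\|_2^2 - \frac{1}{n} \|Y - f^\lambda\|_2^2 \\
& = \frac{1}{n} \inf_{\|f_\beta\|} \|Y - f_\beta\|_2^2 - \frac{1}{n} \|Y - f^\lambda\|_2^2 \\
& = \frac{1}{n} \inf_{\|f_\beta\|} \left( \|Y - f^\lambda\|_2^2 + \|f^\lambda - f_\beta\|_2^2 + 2 \langle Y - f^\lambda, f^\lambda - f_\beta \rangle \right) - \frac{1}{n} \|Y - f^\lambda\|_2^2 \\
& \le \frac{1}{n} \inf_{\|f_\beta\|} \|f^\lambda - f_\beta\|_2^2 + \frac{2}{n} \inf_{\|f_\beta\|} \langle Y - f^\lambda, f^\lambda - f_\beta \rangle \\
& \le \frac{1}{n} \inf_{\|f_\beta\|} \|f^\lambda - f_\beta\|_2^2 + \frac{2}{n} \langle Y - f^\lambda, f^\lambda - f_\beta^\lambda \rangle \\
& = \frac{1}{n} \inf_{\|f_\beta\|} \|f^\lambda - f_\beta\|_2^2 \\
& \le \sup_{\|f\|} \inf_{\|f_\beta\|} \frac{1}{n} \|f - f_\beta\|_2^2 \\
& \le 2\lambda,
\end{aligned}$$

where the last equality follows from Lemma 3 and in the last step we employ Theorem 2. Combining the three results, we derive

$$\mathcal{E}(f_\beta^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \le 2\lambda + O\left(\frac{1}{\sqrt{n}}\right) + \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}). \tag{15}$$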

As a consequence of Theorem 1, we have the following bounds on the number of required features for the two strategies: leverage weighted RFF (Corollary 1) and plain RFF (Corollary 2).

Corollary 1. If the probability density function from Theorem 1 is the empirical ridge leverage score distribution $q^*(v)$, then the upper bound on the risk from Eq. (8) holds for all $s \ge 5 d_K^\lambda \log \frac{16 d_K^\lambda}{\delta}$.

Proof. For Corollary 1, we set $\tilde{l}(v) = l_\lambda(v)$ and deduce

$$d_{\tilde{l}} = \int_{\mathcal{V}} l_\lambda(v) \, dv = d_K^\lambda.$$

Theorem 1 and Corollary 1 have several implications on the choice of λ and s. First, we couldpick λ ∈ O(n−1/2) that implies the worst case minimax rate for kernel ridge regression (Caponnettoand De Vito, 2007; Rudi and Rosasco, 2017; Bartlett et al., 2005) and observe that in this cases is proportional to dλK log dλK. As dλK is determined by the learning problem (i.e., the marginaldistribution ρX ), we can consider several different cases. In the best case (e.g., the Gaussian kernelwith a sub-Gaussian marginal distribution ρX ), the eigenvalues of K exhibit a geometric/exponential

12

TOWARDS A UNIFIED ANALYSIS OF RANDOM FOURIER FEATURES

decay, i.e., λi ∝ R0ri (R0 is some constant). From Bach (2017b), we know that dλK ≤ log(R0/λ),

implying s ≥ log2 n. Hence, significant savings can be obtained with O(n log4 n+ log6 n) computa-tional and O(n log2 n) storage complexities of linear ridge regression over random Fourier features,as opposed to O(n3) and O(n2) costs (respectively) in the kernel ridge regression setting.

In the case of a slower decay (e.g., $\mathcal{H}$ is a Sobolev space of order $t \ge 1$) with $\lambda_i \propto R_0 i^{-2t}$, we have $d_K^\lambda \le (R_0/\lambda)^{1/(2t)}$ and $s \ge n^{1/(4t)} \log n$. Hence, even in this case a substantial computational saving can be achieved. Furthermore, in the worst case with $\lambda_i$ close to $R_0 i^{-1}$, our bound implies that $s \ge \sqrt{n} \log n$ features are sufficient, recovering the result from Rudi and Rosasco (2017).

Corollary 2. If the probability density function from Theorem 1 is the spectral measure $p(v)$ from Eq. (2), then the upper bound on the risk from Eq. (8) holds for all $s \ge 5 \frac{z_0^2}{\lambda} \log\frac{16 d_K^\lambda}{\delta}$.

Proof. For Corollary 2, we set $l(v) = p(v)\frac{z_0^2}{\lambda}$ and derive

$$
d_l = \int_{\mathcal{V}} p(v)\frac{z_0^2}{\lambda}\,dv = \frac{z_0^2}{\lambda}.
$$

Corollary 2 addresses plain random Fourier features and states that if $s$ is chosen to be greater than $\sqrt{n} \log d_K^\lambda$ and $\lambda \propto 1/\sqrt{n}$, then the minimax risk convergence rate is guaranteed. When the eigenvalues have an exponential decay, we obtain the same convergence rate with only $s \ge \sqrt{n} \log\log n$ features, which is an improvement compared to a result by Rudi and Rosasco (2017) where $s \ge \sqrt{n} \log n$. For the other two cases, we derive $s \ge \sqrt{n} \log n$ and recover the results from Rudi and Rosasco (2017).
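The feature counts in Corollaries 1 and 2 can be evaluated numerically once the effective number of degrees of freedom is known. The following is a minimal sketch, assuming $d_K^\lambda = \mathrm{Tr}\,K(K + n\lambda I)^{-1}$ and treating the bound $z_0^2$ on the squared features as a user-supplied value. The function names, the Gaussian kernel with unit bandwidth, and the synthetic data are illustrative assumptions, not part of the paper.

```python
import numpy as np


def effective_dimension(K, lam):
    """Return Tr[K (K + n*lam*I)^{-1}] for a symmetric PSD Gram matrix K."""
    n = K.shape[0]
    eigvals = np.clip(np.linalg.eigvalsh(K), 0.0, None)  # guard tiny negatives
    return float(np.sum(eigvals / (eigvals + n * lam)))


def features_leverage(d_eff, delta):
    """Feature count suggested by Corollary 1: 5 * d * log(16 * d / delta)."""
    return int(np.ceil(5.0 * d_eff * np.log(16.0 * d_eff / delta)))


def features_plain(z0_sq, lam, d_eff, delta):
    """Feature count suggested by Corollary 2: 5 * (z0^2 / lam) * log(16 * d / delta)."""
    return int(np.ceil(5.0 * z0_sq / lam * np.log(16.0 * d_eff / delta)))


# Hypothetical example: 200 one-dimensional Gaussian inputs.
rng = np.random.default_rng(0)
n = 200
X = rng.normal(size=(n, 1))
K = np.exp(-0.5 * (X - X.T) ** 2)  # Gaussian kernel, unit bandwidth (illustrative)
lam = n ** -0.5                    # the worst case minimax choice discussed above
delta = 0.1
d_eff = effective_dimension(K, lam)
s_leverage = features_leverage(d_eff, delta)
s_plain = features_plain(1.0, lam, d_eff, delta)
print(d_eff, s_leverage, s_plain)
```

For a kernel with unit diagonal and $z_0^2 = 1$, the trace bound $d_K^\lambda \le 1/\lambda$ implies that the plain RFF count is never smaller than the leverage weighted one, which is the gap the two corollaries describe.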

3.1.2 REFINED ANALYSIS

In this section, we provide a more refined analysis with expected risk convergence rates faster than $O(1/\sqrt{n})$, depending on the spectrum decay of the kernel function and/or the complexity of the target regression function.

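Theorem 3. Suppose that the conditions from Theorem 1 apply and let

$$
s \ge 5 d_l \log\frac{16 d_K^\lambda}{\delta}.
$$

Then, for all $D > 1$ and $\delta \in (0, 1)$, with probability $1 - \delta$, the excess risk of $f_\beta^\lambda$ can be upper bounded as

$$
\mathcal{E}(f_\beta^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \le 2 r^*_{\mathcal{H}} + \frac{2\lambda D}{D - 1} + O\!\left(\frac{1}{n}\right) + \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}). \tag{16}
$$

Furthermore, denoting the eigenvalues of the normalized kernel matrix $(1/n)K$ with $\{\lambda_i\}_{i=1}^n$, we have that

$$
r^*_{\mathcal{H}} \le \min_{0 \le h \le n}\left(\frac{h}{n} \cdot \frac{e_7}{n^2\lambda^2} + \sqrt{\frac{1}{n}\sum_{i > h}\lambda_i}\right), \tag{17}
$$

where $e_7 > 0$ is a constant and $\lambda_1 \ge \cdots \ge \lambda_n$.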


LI, TON, OGLIC AND SEJDINOVIC

Theorem 3 covers a wide range of cases and can provide sharper risk convergence rates. In particular, note that $r^*_{\mathcal{H}}$ is of order $O(1/\sqrt{n})$, which happens when the spectrum decays approximately as $1/n$ and $h = 0$. In this case, the excess risk converges with the rate $O(1/\sqrt{n})$, which corresponds to the considered worst case minimax rate. On the other hand, if the eigenvalues decay exponentially, then setting $h = \lceil \log n \rceil$ implies that $r^*_{\mathcal{H}} \le O(\log n / n)$. Furthermore, setting $\lambda \propto \frac{\log n}{n}$, we can show that the excess risk converges at a much faster rate of $O(\log n / n)$. In the best case, when the kernel function has only finitely many positive eigenvalues, we have that $r^*_{\mathcal{H}} \le O(1/n)$ by letting $h$ be any fixed value larger than the number of positive eigenvalues. In this case, we obtain the fastest rate of $O(1/n)$ for the regularization parameter $\lambda \propto \frac{1}{n}$.
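The bound in Eq. (17) is a minimum over the cut-off $h$, so it can be evaluated directly from a spectrum. The sketch below assumes the reading $\frac{h}{n}\cdot\frac{e_7}{n^2\lambda^2} + \sqrt{\frac{1}{n}\sum_{i>h}\lambda_i}$ of the right-hand side; the constant `e7 = 1.0` and the finite-rank spectrum are hypothetical choices made only for illustration.

```python
import numpy as np


def local_complexity_bound(eigvals, lam, e7=1.0):
    """Evaluate min_h (h/n) * e7 / (n*lam)^2 + sqrt((1/n) * sum_{i>h} eig_i).

    Returns the minimal value and the minimising cut-off h in {0, ..., n}.
    """
    eig = np.sort(np.asarray(eigvals, dtype=float))[::-1]  # descending order
    n = eig.size
    # tail[h] = sum of eigenvalues with (1-based) index greater than h.
    tail = np.concatenate([np.cumsum(eig[::-1])[::-1], [0.0]])
    tail = np.clip(tail, 0.0, None)
    h = np.arange(n + 1)
    values = (h / n) * e7 / (n * lam) ** 2 + np.sqrt(tail / n)
    best = int(np.argmin(values))
    return float(values[best]), best


# Hypothetical finite-rank spectrum: three non-zero eigenvalues, lam = 1/n.
n = 100
eigvals = np.zeros(n)
eigvals[:3] = 1.0
value, best_h = local_complexity_bound(eigvals, lam=1.0 / n)
print(value, best_h)
```

With three non-zero eigenvalues and $\lambda = 1/n$, the minimum is attained at $h = 3$, where the tail sum vanishes and only the $h/n$ term remains, matching the $O(1/n)$ rate of the finite-rank case.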

To prove Theorem 3, we rely on the notion of local Rademacher complexity and adjust our notation so that it is easier to cross-reference relevant auxiliary claims from Bartlett et al. (2005). Suppose $P$ is a probability measure on $\mathcal{X} \times \mathcal{Y}$ and let $\{(x_i, y_i)\}_{i=1}^n$ be an independent sample from $P$. For any reproducing kernel Hilbert space $\mathcal{H}$ and a loss function $l$, we define the transformed function class as $l_{\mathcal{H}} := \{l(f(x), y) \mid f \in \mathcal{H}\}$. We also abbreviate the notation and denote with $l_f = l(f(x), y)$ and $\mathbb{E}_n(f) = \frac{1}{n}\sum_{i=1}^n f(x_i)$. For the reproducing kernel Hilbert space $\mathcal{H}$, we denote the solution of the kernel ridge regression problem by $\hat{f}$.

In general, Theorem 1 is not sharp because, when analysing Eqs. (10) and (12), we used the global Rademacher complexity of the whole RKHS. However, as pointed out by Bartlett et al. (2005), regularised ERM is likely to return a function that is close to $f_{\mathcal{H}}$. Hence, instead of estimating the global function space complexity, we could just analyse the local space around $f_{\mathcal{H}}$. Specifically, we would like to apply Theorem 4 to Eqs. (10) and (12) to compute the local Rademacher complexity. In order to do so, we need to find a proper sub-root function (see Appendix D for its definition and properties). Below, we first state the theorem for local Rademacher complexity. We then present Lemmas 4 and 11 in order to prove Theorem 5 and find the proper sub-root function. By combining Theorems 4 and 5 and applying them to Eqs. (10) and (12), we obtain Theorem 3.

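Theorem 4. (Bartlett et al., 2005, Theorem 4.1) Let $\mathcal{H}$ be a class of functions with ranges in $[-1, 1]$ and assume that there is some constant $B_0$ such that for all $f \in \mathcal{H}$, $\mathbb{E}(f^2) \le B_0 \mathbb{E}(f)$. Let $\psi_n$ be a sub-root function and let $r^*$ be the fixed point of $\psi_n$, i.e., $\psi_n(r^*) = r^*$. Fix any $\delta > 0$, and assume that for any $r \ge r^*$,

$$
\psi_n(r) \ge e_1 R_n\{f \in \mathrm{star}(\mathcal{H}, 0) \mid \mathbb{E}_n(f^2) \le r\} + \frac{e_2\delta}{n}
$$

and

$$
\mathrm{star}(\mathcal{H}, f_0) = \{f_0 + \alpha(f - f_0) \mid f \in \mathcal{H} \wedge \alpha \in [0, 1]\}.
$$

Then, for all $f \in \mathcal{H}$ and $D > 1$, with probability greater than $1 - 3e^{-\delta}$,

$$
\mathbb{E}(f) \le \frac{D}{D - 1}\mathbb{E}_n(f) + \frac{6D}{B} r^* + \frac{e_3\delta}{n},
$$

where $e_1$, $e_2$ and $e_3$ are some constants.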

As stated before, in order to obtain a sharper rate, we need to compute the local Rademacher complexity. The following lemma allows us to compute it through the eigenvalues of the Gram matrix.


Lemma 4. (Bartlett et al., 2005, Lemma 6.6) Let $k$ be a positive definite kernel function with reproducing kernel Hilbert space $\mathcal{H}$ and let $\lambda_1 \ge \cdots \ge \lambda_n$ be the eigenvalues of the normalized Gram-matrix $(1/n)K$. Then, for all $r > 0$

$$
R_n\{f \in \mathcal{H} \mid \mathbb{E}_n(f^2) \le r\} \le \left(\frac{2}{n}\sum_{i=1}^n \min\{r, \lambda_i\}\right)^{1/2}.
$$
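The right-hand side of Lemma 4 depends only on the radius $r$ and the eigenvalues of the normalized Gram matrix, so it can be tabulated directly. The sketch below evaluates it on a hypothetical geometrically decaying spectrum and checks the two properties that make it usable as a sub-root function: it is non-decreasing in $r$, and its ratio to $\sqrt{r}$ is non-increasing. The function name and the spectrum are assumptions for illustration.

```python
import numpy as np


def lemma4_bound(eigvals, r):
    """Return sqrt((2/n) * sum_i min(r, eig_i)) for the given radius r."""
    eigvals = np.asarray(eigvals, dtype=float)
    n = eigvals.size
    return float(np.sqrt(2.0 / n * np.sum(np.minimum(r, eigvals))))


# Hypothetical spectrum with geometric decay: eig_i = 2^{-i}, i = 1..50.
eigvals = 2.0 ** -np.arange(1, 51)
radii = np.array([1e-4, 1e-3, 1e-2, 1e-1])
bounds = np.array([lemma4_bound(eigvals, r) for r in radii])
ratios = bounds / np.sqrt(radii)  # should not increase with r
print(bounds, ratios)
```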

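To apply Theorem 4, our task is to find a proper sub-root function. To this end, we let $l$ be the squared error loss function and observe that for all $f \in \mathcal{H}$ it holds that

$$
\begin{aligned}
\mathbb{E}_n(l_f^2) &\ge (\mathbb{E}_n(l_f))^2 && (x^2 \text{ is convex}) \\
&\ge (\mathbb{E}_n l_f)^2 - (\mathbb{E}_n l_{\hat{f}})^2 \\
&= (\mathbb{E}_n l_f + \mathbb{E}_n l_{\hat{f}})(\mathbb{E}_n l_f - \mathbb{E}_n l_{\hat{f}}) \\
&\ge 2\,\mathbb{E}_n l_{\hat{f}}\;\mathbb{E}_n(l_f - l_{\hat{f}}) \\
&\ge \frac{2}{B}\,\mathbb{E}_n l_{\hat{f}}\;\mathbb{E}_n(f - \hat{f})^2. && (\text{Lemma 11 in Appendix D})
\end{aligned}
\tag{18}
$$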

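The third inequality holds because $\hat{f}$ achieves the minimal empirical risk. The last inequality is a consequence of Lemma 11 applied to the empirical probability distribution $P_n$. Hence, to obtain a lower bound on $\mathbb{E}_n l_f^2$ expressed solely in terms of $\mathbb{E}_n(f - \hat{f})^2$, we need to find a lower bound of $\mathbb{E}_n l_{\hat{f}}$. First, observe that it holds

$$
\mathbb{E}_n l_{\hat{f}} = \frac{1}{n}\|Y - K(K + n\lambda I)^{-1}Y\|^2.
$$

Then, using this expression we derive

$$
\begin{aligned}
\mathbb{E}_n l_{\hat{f}} &= \frac{1}{n}\|Y - K(K + n\lambda I)^{-1}Y\|^2 \\
&= n\lambda^2\, Y^T (K + n\lambda I)^{-2} Y \\
&\ge \frac{n\lambda^2}{(\lambda_1 + n\lambda)^2}\, Y^T Y \\
&= \left(\frac{n\lambda}{\lambda_1 + n\lambda}\right)^2 \frac{1}{n}\sum_{i=1}^n y_i^2 \\
&\ge \left(\frac{n\lambda}{\lambda_1 + n\lambda}\right)^2 \sigma_y^2 && \Big(\text{with } \tfrac{1}{n}\textstyle\sum_{i=1}^n y_i^2 \ge \sigma_y^2\Big) \\
&= \sigma_y^2 \left(\frac{1}{1 + \frac{\lambda_1}{n\lambda}}\right)^2 \\
&\ge \sigma_y^2 \left(\frac{1}{\frac{\lambda_1}{n\lambda} + \frac{\lambda_1}{n\lambda}}\right)^2 && \Big(\text{assuming } \tfrac{\lambda_1}{n\lambda} \ge 1\Big) \\
&= \frac{\sigma_y^2}{4}\left(\frac{n\lambda}{\lambda_1}\right)^2 \\
&= e_4 (n\lambda)^2,
\end{aligned}
\tag{19}
$$

where $e_4 = \left(\frac{\sigma_y}{2\lambda_1}\right)^2$ is a constant. The last equality follows because $\lambda_1$ is independent of $n$ and $\lambda$, as well as bounded. Hence, Eq. (18) becomes

$$
\mathbb{E}_n l_f^2 \ge \frac{2 e_4 (n\lambda)^2}{B}\,\mathbb{E}_n(f - \hat{f})^2 =: e_5 (n\lambda)^2\,\mathbb{E}_n(f - \hat{f})^2.
$$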
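The first three lines of Eq. (19) rest on the identity $Y - K(K + n\lambda I)^{-1}Y = n\lambda (K + n\lambda I)^{-1}Y$ and on bounding the spectrum of $K$ by its largest eigenvalue. The following sketch checks both numerically on synthetic data. The kernel, the sample, and the value of $\lambda$ are hypothetical, and $\lambda_1$ is taken here to be the largest eigenvalue of the matrix $K$ that appears in the resolvent.

```python
import numpy as np

rng = np.random.default_rng(1)
n, lam = 50, 0.1
X = rng.uniform(size=(n, 1))
K = np.exp(-(X - X.T) ** 2)      # illustrative Gaussian Gram matrix
Y = rng.normal(size=n)

A = K + n * lam * np.eye(n)
w = np.linalg.solve(A, Y)        # (K + n*lam*I)^{-1} Y

# First line of Eq. (19): empirical squared loss of the ridge solution.
residual = Y - K @ w
empirical_risk = residual @ residual / n

# Second line: n * lam^2 * Y^T (K + n*lam*I)^{-2} Y, using symmetry of A.
resolvent_form = n * lam ** 2 * (w @ w)

# Third and fourth lines: lower bound through the largest eigenvalue of K.
lam_1 = np.linalg.eigvalsh(K)[-1]
lower_bound = (n * lam / (lam_1 + n * lam)) ** 2 * (Y @ Y) / n

print(empirical_risk, resolvent_form, lower_bound)
```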

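As a result of this, we have the following inclusion for the two function classes

$$
\{l_f \in l_{\mathcal{H}} \mid \mathbb{E}_n l_f^2 \le r\} \subseteq \left\{l_f \in l_{\mathcal{H}} \;\Big|\; \mathbb{E}_n(f - \hat{f})^2 \le \frac{r}{e_5 (n\lambda)^2}\right\}.
$$

Recall that for a function class $\mathcal{H}$, we denote its empirical Rademacher complexity by $R_n(\mathcal{H})$. Then, we have the following inequality

$$
\begin{aligned}
R_n\{l_f \in l_{\mathcal{H}} \mid \mathbb{E}_n l_f^2 \le r\}
&\le R_n\left\{l_f \in l_{\mathcal{H}} \;\Big|\; \mathbb{E}_n(f - \hat{f})^2 \le \frac{r}{e_5 n^2\lambda^2}\right\} \\
&= R_n\left\{l_f - l_{\hat{f}} \;\Big|\; \mathbb{E}_n(f - \hat{f})^2 \le \frac{r}{e_5 n^2\lambda^2} \wedge l_f \in l_{\mathcal{H}}\right\} \\
&\le L\, R_n\left\{f - \hat{f} \;\Big|\; \mathbb{E}_n(f - \hat{f})^2 \le \frac{r}{e_5 n^2\lambda^2} \wedge f \in \mathcal{H}\right\} \\
&\le L\, R_n\left\{f - g \;\Big|\; \mathbb{E}_n(f - g)^2 \le \frac{r}{e_5 n^2\lambda^2} \wedge f, g \in \mathcal{H}\right\} \\
&\le 2L\, R_n\left\{f \in \mathcal{H} \;\Big|\; \mathbb{E}_n f^2 \le \frac{1}{4 e_5}\frac{r}{n^2\lambda^2}\right\} \\
&= 2L\, R_n\left\{f \in \mathcal{H} \;\Big|\; \mathbb{E}_n f^2 \le \frac{e_6 r}{n^2\lambda^2}\right\},
\end{aligned}
\tag{20}
$$

where the last inequality is due to Bartlett et al. (2005, Corollary 6.7). Now, combining Lemma 4 and Eq. (20) we can derive the following theorem which gives us the proper sub-root function $\psi_n$. The theorem is proved in Appendix E.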

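Theorem 5. Assume $\{(x_i, y_i)\}_{i=1}^n$ is an independent sample from a probability measure $P$ defined on $\mathcal{X} \times \mathcal{Y}$, with $\mathcal{Y} \in [-1, 1]$. Let $k$ be a positive definite kernel with the reproducing kernel Hilbert space $\mathcal{H}$ and let $\lambda_1 \ge \cdots \ge \lambda_n$ be the eigenvalues of the normalized kernel Gram-matrix. Denote the squared error loss function by $l(f(x), y) = (f(x) - y)^2$ and fix $\delta > 0$. If

$$
\psi_n(r) = 2L e_1 \left(\frac{2}{n}\sum_{i=1}^n \min\{r, \lambda_i\}\right)^{1/2} + \frac{e_3\delta}{n},
$$

then for all $l_f \in l_{\mathcal{H}}$ and $D > 1$, with probability $1 - 3e^{-\delta}$,

$$
\mathcal{E}(f) = \mathbb{E}(l_f) \le \frac{D}{D - 1}\mathbb{E}_n l_f + \frac{6D}{B} r^* + \frac{e_3\delta}{n}.
$$

Moreover, the fixed point $r^*$ defined with $r^* = \psi_n(r^*)$ can be upper bounded by

$$
r^* \le \min_{0 \le h \le n}\left(\frac{h}{n} \cdot \frac{e_7}{n^2\lambda^2} + \sqrt{\frac{1}{n}\sum_{i > h}\lambda_i}\right),
$$

where $e_7$ is a constant, and $\lambda$ is the regularization parameter used in kernel ridge regression.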


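We are now ready to deliver the proof of Theorem 3.

Proof. We decompose $\mathcal{E}(f_\beta^\lambda)$ with $D > 1$ as follows

$$
\begin{aligned}
\mathcal{E}(f_\beta^\lambda) ={}& \mathcal{E}(f_\beta^\lambda) - \frac{D}{D - 1}\hat{\mathcal{E}}(f_\beta^\lambda) \\
&+ \frac{D}{D - 1}\hat{\mathcal{E}}(f_\beta^\lambda) - \frac{D}{D - 1}\hat{\mathcal{E}}(f^\lambda) \\
&+ \frac{D}{D - 1}\hat{\mathcal{E}}(f^\lambda) - \mathcal{E}(f^\lambda) \\
&+ \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \\
&+ \mathcal{E}(f_{\mathcal{H}}).
\end{aligned}
$$

Hence,

$$
\begin{aligned}
\mathcal{E}(f_\beta^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \le{}& \left|\mathcal{E}(f_\beta^\lambda) - \frac{D}{D - 1}\hat{\mathcal{E}}(f_\beta^\lambda)\right| && (21) \\
&+ \frac{D}{D - 1}\left(\hat{\mathcal{E}}(f_\beta^\lambda) - \hat{\mathcal{E}}(f^\lambda)\right) && (22) \\
&+ \left|\frac{D}{D - 1}\hat{\mathcal{E}}(f^\lambda) - \mathcal{E}(f^\lambda)\right| && (23) \\
&+ \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}). && (24)
\end{aligned}
$$

We have already demonstrated that

$$
\text{Eq. (22)} \le \frac{2D}{D - 1}\lambda.
$$

For Eqs. (21) and (23) we apply Theorem 5. However, note that $f_\beta^\lambda$ and $f^\lambda$ belong to different reproducing kernel Hilbert spaces, which we denote by $\tilde{\mathcal{H}}$ and $\mathcal{H}$, respectively. As a result, we have

$$
\text{Eq. (21)} \le r^*_{\tilde{\mathcal{H}}} + O(1/n), \qquad \text{Eq. (23)} \le r^*_{\mathcal{H}} + O(1/n).
$$

Now, combining these inequalities together we deduce

$$
\begin{aligned}
\mathcal{E}(f_\beta^\lambda) - \mathcal{E}(f_{\mathcal{H}}) &\le r^*_{\tilde{\mathcal{H}}} + r^*_{\mathcal{H}} + \frac{2D}{D - 1}\lambda + O(1/n) + \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \\
&\le 2 r^*_{\mathcal{H}} + \frac{2D}{D - 1}\lambda + O(1/n) + \mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}).
\end{aligned}
$$

The last inequality holds because the eigenvalues of the Gram-matrix for the reproducing kernel Hilbert space $\tilde{\mathcal{H}}$ decay faster than the eigenvalues of $\mathcal{H}$. As a result of this, we have that $r^*_{\tilde{\mathcal{H}}} \le r^*_{\mathcal{H}}$.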


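Now, Theorem 5 implies that

$$
r^*_{\mathcal{H}} \le \min_{0 \le h \le n}\left(\frac{h}{n} \cdot \frac{e_7}{n^2\lambda^2} + \sqrt{\frac{1}{n}\sum_{i > h}\lambda_i}\right). \tag{25}
$$

There are two cases worth discussing here. On the one hand, if the eigenvalues of $K$ decay exponentially, we have

$$
r^*_{\mathcal{H}} \le O\!\left(\frac{\log n}{n}\right)
$$

by substituting $h = \lceil \log n \rceil$. Now, according to Caponnetto and De Vito (2007),

$$
\mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \in O\!\left(\frac{\log n}{n}\right),
$$

and, thus, if we set $\lambda \propto \frac{\log n}{n}$ then the expected risk rate can be upper bounded by

$$
\mathcal{E}(f_\beta^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \in O\!\left(\frac{\log n}{n}\right).
$$

On the other hand, if $K$ has finitely many (say $t$) non-zero eigenvalues, we then have that

$$
r^*_{\mathcal{H}} \in O\!\left(\frac{1}{n}\right),
$$

by substituting $h \ge t$. Moreover, in this case, $\mathcal{E}(f^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \in O(\frac{1}{n})$ and setting $\lambda \propto \frac{1}{n}$, we deduce that

$$
\mathcal{E}(f_\beta^\lambda) - \mathcal{E}(f_{\mathcal{H}}) \le O\!\left(\frac{1}{n}\right).
$$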

3.2 Learning with a Lipschitz Continuous Loss

We next consider kernel methods with Lipschitz continuous loss, examples of which include kernel support vector machines and kernel logistic regression. Similar to the squared error loss case, we approximate $y_i$ with $g_\beta(x_i) = z_{q,x_i}(v)^T\beta$ and formulate the following learning problem

$$
g_\beta^\lambda = \operatorname*{arg\,min}_{\beta \in \mathbb{R}^s} \frac{1}{n}\sum_{i=1}^n L(y_i, z_{q,x_i}(v)^T\beta) + \lambda s\|\beta\|_2^2.
$$

3.2.1 WORST CASE ANALYSIS

The following theorem describes the trade-off between the selected number of features $s$ and the expected risk of the estimator, providing an insight into the choice of $s$ for Lipschitz continuous loss functions.


Theorem 6. Suppose that all the assumptions from Theorem 1 apply to the setting with a Lipschitz continuous loss. If

$$
s \ge 5 d_l \log\frac{16 d_K^\lambda}{\delta},
$$

then for all $\delta \in (0, 1)$, with probability $1 - \delta$, the expected risk of $g_\beta^\lambda$ can be upper bounded as

$$
\mathcal{E}(g_\beta^\lambda) \le \mathcal{E}(g_{\mathcal{H}}) + \sqrt{2\lambda} + O\!\left(\frac{1}{\sqrt{n}}\right). \tag{26}
$$

This theorem, similar to Theorem 1, describes the relationship between $s$ and $\mathcal{E}(g_\beta^\lambda)$ in the Lipschitz continuous loss case. However, a key difference here is that the expected risk can only be upper bounded by $\sqrt{\lambda}$, requiring $\lambda \propto \frac{1}{n}$ in order to preserve the convergence properties of the risk.

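Proof. The proof is similar to that of Theorem 1. In particular, we decompose the expected learning risk as

$$
\begin{aligned}
\mathcal{E}(g_\beta^\lambda) ={}& \mathcal{E}(g_\beta^\lambda) - \hat{\mathcal{E}}(g_\beta^\lambda) && (27) \\
&+ \hat{\mathcal{E}}(g_\beta^\lambda) - \hat{\mathcal{E}}(g_{\mathcal{H}}) && (28) \\
&+ \hat{\mathcal{E}}(g_{\mathcal{H}}) - \mathcal{E}(g_{\mathcal{H}}) && (29) \\
&+ \mathcal{E}(g_{\mathcal{H}}).
\end{aligned}
$$

Now, (27) and (29) can be upper bounded similar to Theorem 1, through the Rademacher complexity bound from Lemma 2. For (28), we have

$$
\begin{aligned}
\hat{\mathcal{E}}(g_\beta^\lambda) - \hat{\mathcal{E}}(g_{\mathcal{H}})
&= \frac{1}{n}\sum_{i=1}^n l(y_i, g_\beta^\lambda(x_i)) - \frac{1}{n}\sum_{i=1}^n l(y_i, g_{\mathcal{H}}(x_i)) \\
&= \frac{1}{n}\inf_{\|g_\beta\|}\sum_{i=1}^n l(y_i, g_\beta(x_i)) - \frac{1}{n}\sum_{i=1}^n l(y_i, g_{\mathcal{H}}(x_i)) \\
&\le \inf_{\|g_\beta\|}\frac{1}{n}\sum_{i=1}^n |g_\beta(x_i) - g_{\mathcal{H}}(x_i)| \\
&\le \inf_{\|g_\beta\|}\sqrt{\frac{1}{n}\sum_{i=1}^n |g_\beta(x_i) - g_{\mathcal{H}}(x_i)|^2} \\
&\le \sup_{\|g\|}\inf_{\|g_\beta\|}\sqrt{\frac{1}{n}\|g - g_\beta\|_2^2} \\
&\le \sqrt{2\lambda}.
\end{aligned}
$$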

Corollaries 3 and 4 provide bounds for the cases of leverage weighted and plain RFF, respectively. The proofs are similar to the proofs of Corollaries 1 and 2.

Corollary 3. If the probability density function from Theorem 6 is the empirical ridge leverage score distribution $q^*(v)$, then the upper bound on the risk from Eq. (26) holds for all $s \ge 5 d_K^\lambda \log\frac{16 d_K^\lambda}{\delta}$.


In the three considered cases for the effective dimension of the problem $d_K^\lambda$, Corollary 3 states that the statistical efficiency is preserved if the leverage weighted RFF strategy is used with $s \ge \log^2 n$, $s \ge n^{1/(2t)} \log n$, and $s \ge n \log n$, respectively. Again, significant computational savings can be achieved if the eigenvalues of the kernel matrix $K$ have either a geometric/exponential or a polynomial decay.

Corollary 4. If the probability density function from Theorem 6 is the spectral measure $p(v)$ from Eq. (2), then the upper bound on the risk from Eq. (26) holds for all $s \ge 5 \frac{z_0^2}{\lambda} \log\frac{16 d_K^\lambda}{\delta}$.

Corollary 4 states that $n \log n$ features are required to attain the $O(n^{-1/2})$ convergence rate of the expected risk with plain RFF, recovering results from Rahimi and Recht (2009). Similar to the analysis in the squared error loss case, Theorem 6 together with Corollaries 3 and 4 allows theoretically motivated trade-offs between the statistical and computational efficiency of the estimator $g_\beta^\lambda$.

3.2.2 REFINED ANALYSIS

It is generally difficult to achieve a better trade-off between computational cost and prediction accuracy in the Lipschitz continuous loss case, and doing so requires further assumptions. For a loss function $l$, it suffices to assume that the Bayes classifier function $g_l^*$ is contained in the RKHS $\mathcal{H}$.

Theorem 7. Suppose that all the assumptions from Theorem 1 apply to the setting with a Lipschitz continuous loss. In addition, assume that $\lambda < 1/2$ and $g_l^* \in \mathcal{H}$ with $\mathcal{E}(g_l^*) = R^*$. Then, for $\delta \in (0, 1)$, with probability greater than $1 - 2e^{-\delta}$,

$$
\mathcal{E}(g_\beta^\lambda) - \mathcal{E}(g_l^*) \le C_3 \frac{s}{n}\log\frac{1}{\lambda} + \frac{2 c_1 \delta}{n},
$$

where $C_3$ is a constant with respect to $s$, $n$ and $\lambda$.

In the realizable case, i.e., when the Bayes classifier belongs to the RKHS spanned by the features, Theorem 7 describes a refined relationship between the learning risk and the number of features. In addition, it implicitly states how the complexity of $g_l^*$ can affect the learning risk convergence rate. Basically, if choosing $s = O(\sqrt{n})$ is sufficient to make the RKHS $\mathcal{H}$ large enough to include $g_l^*$, and we let $\lambda = O(1/\sqrt{n})$, we can then achieve the learning rate of $O(\frac{\log n}{\sqrt{n}})$ with only $O(\sqrt{n})$ features. To our knowledge, this is the first result that shows we can obtain computational savings in the Lipschitz continuous loss case. Furthermore, if $g_l^*$ has low complexity in the sense that $\mathcal{H}$ can include $g_l^*$ with only finitely many features $c_s$, $c_s < \infty$, then we can achieve the $O(1/n)$ convergence rate with only $c_s$ features. That being said, we are in the realizable case, which is somewhat limiting. Also, we lack a specific way to describe the exact complexity of $g_l^*$ in terms of the number of features. Hence, extending our analysis to the unrealizable case and analyzing the complexity of $g_l^*$ would be interesting future directions.

To prove Theorem 7, we need the help of the following results on the analysis of the local Rademacher complexities from Bartlett et al. (2005).

Theorem 8. (Bartlett et al., 2005, Theorem 3.3) Let $\mathcal{F} := \{f : f(x) \in [a, b]\}$ be a class of functions and $B$ be a constant. Assume that there is a functional $T : \mathcal{F} \to \mathbb{R}^+$ such that $\mathrm{Var}(f) \le T(f) \le B\,\mathbb{E}(f)$ for all $f \in \mathcal{F}$ and for $\alpha \in [0, 1]$, $T(\alpha f) \le \alpha^2 T(f)$. Suppose $\psi$ is a sub-root function with fixed point $r^*$ and it satisfies for all $r \ge r^*$,

$$
\psi(r) \ge B R_n\{f \in \mathrm{star}(\mathcal{F}, 0) : T(f) \le r\},
$$

then we have $\forall f \in \mathcal{F}$, there is a constant $D > 1$ such that

$$
\mathbb{E}(f) \le \frac{D}{D - 1}\mathbb{E}_n(f) + \frac{6D}{B} r^* + \frac{c_1\delta}{n},
$$

with probability greater than $1 - e^{-\delta}$.

We would like to apply Theorem 8 to the decomposition of the learning risk in the Lipschitz continuous loss case, namely Eqs. (27), (28) and (29). This requires three steps. The first step is to find a proper $T$ functional, and the second one is to find the sub-root function $\psi$ associated with $T$. The final step is to find the unique fixed point of $\psi$. The following is devoted to solving these three problems.

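To this end, we first notice that for all $l_f \in l_{\mathcal{H}}$, we have that $l_f \in [0, l_b]$ for some constant $l_b$ as the loss is upper bounded. In addition, since we assume $z(v, x) < \infty$, we have for all $f \in \mathcal{H}$, $\|f\|_{\mathcal{H}} < \infty$. We let

$$
\forall f \in \mathcal{H}, \quad T(l_f) = \mathbb{E}(l_f^2) + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E}(l_{f^*}),
$$

where $\lambda \in [0, \infty)$ is the regularization parameter and $f^*$ is the Bayes classifier. Now immediately, we have $\mathrm{Var}(l_f) \le T(l_f)$. Also we have

$$
\begin{aligned}
T(l_f) &= \mathbb{E}(l_f^2) + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E}(l_{f^*}) \\
&\le l_b\,\mathbb{E}(l_f) + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E}(l_f) \\
&\le (l_b + \lambda\|f\|_{\mathcal{H}}^2)\,\mathbb{E}(l_f) \\
&\le B\,\mathbb{E}(l_f),
\end{aligned}
$$

where $B < \infty$ is some constant. Also, it is easy to verify for $\alpha \in [0, 1]$, $T(\alpha l_f) \le \alpha^2 T(l_f)$.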

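Now that we have a proper $T$ functional, our next job is to find the sub-root function $\psi$. We notice that

$$
\begin{aligned}
T(l_f) &= \mathbb{E} l_f^2 + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E} l_{f^*} \\
&\ge (\mathbb{E} l_f)^2 + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E} l_{f^*} \\
&\ge (\mathbb{E} l_f)^2 - (\mathbb{E} l_{f^*})^2 + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E} l_{f^*} \\
&= (\mathbb{E} l_f + \mathbb{E} l_{f^*})(\mathbb{E} l_f - \mathbb{E} l_{f^*}) + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E} l_{f^*} \\
&\ge 2\,\mathbb{E} l_{f^*}(\mathbb{E} l_f - \mathbb{E} l_{f^*}) + \lambda\|f\|_{\mathcal{H}}^2\,\mathbb{E} l_{f^*} \\
&= 2\,\mathbb{E} l_{f^*}\Big(\mathbb{E} l_f - \mathbb{E} l_{f^*} + \frac{\lambda}{2}\|f\|_{\mathcal{H}}\Big).
\end{aligned}
$$

Since $\mathbb{E} l_{f^*} = \mathcal{E}(f^*) = R^*$, we have that

$$
\{l_f : T(l_f) \le r\} \subseteq \Big\{l_f : \mathbb{E} l_f - \mathbb{E} l_{f^*} + \frac{\lambda}{2}\|f\|_{\mathcal{H}} \le \frac{r}{2R^*}\Big\}.
$$

As a result,

$$
\begin{aligned}
R_n\{l_f : T(l_f) \le r\} &\le R_n\Big\{l_f : \mathbb{E} l_f - \mathbb{E} l_{f^*} + \frac{\lambda}{2}\|f\|_{\mathcal{H}} \le \frac{r}{2R^*}\Big\} \\
&= R_n\Big\{l_f - l_{f^*} : \mathbb{E} l_f - \mathbb{E} l_{f^*} + \frac{\lambda}{2}\|f\|_{\mathcal{H}} \le \frac{r}{2R^*}\Big\}.
\end{aligned}
$$

Now if we can upper bound the Rademacher complexity and the bound happens to be a sub-root function, then we could apply Theorem 8 directly. With this aim, we now define two function spaces as follows:

$$
\mathcal{H}_r := \Big\{f \in \mathcal{H} : \mathbb{E} l_f - \mathbb{E} l_{f^*} + \frac{\lambda}{2}\|f\|_{\mathcal{H}} \le \frac{r}{2R^*}\Big\},
\qquad
l_{\mathcal{H}_r} := \{l_f - l_{f^*} : f \in \mathcal{H}_r\}.
$$

Our job is to find the upper bound of $R_n(l_{\mathcal{H}_r})$. To do this, we use the theory of entropy numbers.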

Definition 2. (Steinwart and Christmann, 2008) Let $n \ge 1$ be an integer and $(E, \|\cdot\|)$ be a semi-normed space. We define the entropy number for $E$ as

$$
e_n(E, \|\cdot\|) := \inf\Big\{\varepsilon > 0 : \exists\, a_1, \ldots, a_{2^{n-1}} \in E \ \text{s.t.}\ E \subset \bigcup_{i=1}^{2^{n-1}} B(a_i, \varepsilon)\Big\},
$$

where $B(a, \varepsilon)$ is the ball with center $a$ and radius $\varepsilon$ under the norm $\|\cdot\|$.

Also, given a function space $\mathcal{F}$ and a sequence of numbers $D := \{x_1, \ldots, x_n\}$, we define the semi-norm as $\|f\|_D = \big(\frac{1}{n}\sum_i f^2(x_i)\big)^{1/2}$, $\forall f \in \mathcal{F}$. Equipped with the entropy number, the next two lemmas give us control over the empirical Rademacher complexity and the expected Rademacher complexity. The proofs (in Appendix F) are similar to Sun et al. (2018) with slight differences in the conditions of each function space.

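Lemma 5. Assume $\lambda < \frac{1}{2}$. Then we have that

$$
R_n(l_{\mathcal{H}_r}) \le L\sqrt{\frac{s \log 16\, \log_2(1/\lambda)}{n}}\,\big(\rho + 9 c_2\sqrt{r}\big),
$$

where $\rho = \sup_{l_{\mathcal{H}_r}}\|l_f\|_D$ and $c_2$ is some constant.

The next lemma gives us an upper bound on the expected Rademacher complexity.

Lemma 6. Assume $\lambda < 1/2$. Then we have

$$
R_n(l_{\mathcal{H}_r}) \le C_1\sqrt{\frac{s}{n}\log_2\frac{1}{\lambda}}\,\sqrt{r} + C_2\frac{s}{n}\log_2\frac{1}{\lambda}.
$$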

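Armed with the expected Rademacher complexity, we are now ready to prove Theorem 7.

Proof. We decompose the learning risk as follows:

$$
\begin{aligned}
\mathcal{E}(g_\beta^\lambda) ={}& \mathcal{E}(g_\beta^\lambda) - \frac{D}{D - 1}\hat{\mathcal{E}}(g_\beta^\lambda) \\
&+ \frac{D}{D - 1}\hat{\mathcal{E}}(g_\beta^\lambda) - \frac{D}{D - 1}\hat{\mathcal{E}}(g^*) && (30) \\
&+ \frac{D}{D - 1}\hat{\mathcal{E}}(g^*) - \mathcal{E}(g^*) && (31) \\
&+ \mathcal{E}(g^*).
\end{aligned}
$$

Since we assume $g_{\mathcal{H}} \in \mathcal{H}$, we have Eq. (30) $\le 0$. Hence, the learning risk is now:

$$
\begin{aligned}
\mathcal{E}(g_\beta^\lambda) \le{}& \mathcal{E}(g_\beta^\lambda) - \frac{D}{D - 1}\hat{\mathcal{E}}(g_\beta^\lambda) + \frac{D}{D - 1}\hat{\mathcal{E}}(g^*) - \mathcal{E}(g^*) + \mathcal{E}(g^*) \\
\le{}& \left|\mathcal{E}(g_\beta^\lambda) - \frac{D}{D - 1}\hat{\mathcal{E}}(g_\beta^\lambda)\right| && (32) \\
&+ \left|\mathcal{E}(g^*) - \frac{D}{D - 1}\hat{\mathcal{E}}(g^*)\right| && (33) \\
&+ \mathcal{E}(g^*).
\end{aligned}
$$

We now would like to apply Theorem 8 to Eqs. (32) and (33). To this end, we have already discussed that $T(l_g)$ is a proper $T$ functional. Moreover, if we let

$$
\psi(r) = B C_1\sqrt{\frac{s}{n}\log\frac{1}{\lambda}}\,\sqrt{r} + B C_2\frac{s}{n}\log\frac{1}{\lambda},
$$

we can easily check that $\psi(r)$ is a sub-root function and $\psi(r) \ge B R_n\{l_g : T(l_g) \le r\}$. Applying Theorem 8 to Eqs. (32) and (33), we have

$$
\mathcal{E}(g_\beta^\lambda) - \mathcal{E}(g_{\mathcal{H}}) \le \frac{12D}{B} r^* + \frac{2 c_1\delta}{n},
$$

with probability greater than $1 - 2e^{-\delta}$, where $r^*$ is the unique fixed point of $\psi(r)$. By letting $\psi(r) = r$ and solving the equation, we can easily show that

$$
r^* \le B^2 C_1^2\frac{s}{n}\log\frac{1}{\lambda} + 2 B C_2\frac{s}{n}\log\frac{1}{\lambda}.
$$

Hence, the excess risk can be upper bounded as

$$
\mathcal{E}(g_\beta^\lambda) - \mathcal{E}(g^*) \le C_3\frac{s}{n}\log\frac{1}{\lambda} + \frac{2 c_1\delta}{n}.
$$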
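The fixed point used in the last step has a closed form. Writing $\psi(r) = a\sqrt{r} + b$ with $a = BC_1\sqrt{(s/n)\log(1/\lambda)}$ and $b = BC_2 (s/n)\log(1/\lambda)$, the equation $\psi(r) = r$ is a quadratic in $\sqrt{r}$, and its positive root satisfies $r^* \le a^2 + 2b$, which is the stated bound. The sketch below checks this numerically; the values of $B$, $C_1$, $C_2$, $n$, $s$ and $\lambda$ are hypothetical placeholders, since the paper does not specify the constants.

```python
import math


def psi(r, a, b):
    """Sub-root function of the form a * sqrt(r) + b."""
    return a * math.sqrt(r) + b


def fixed_point(a, b):
    """Positive solution of r = a * sqrt(r) + b, via the quadratic in sqrt(r)."""
    root = 0.5 * (a + math.sqrt(a * a + 4.0 * b))
    return root * root


# Hypothetical constants; s = O(sqrt(n)) and lam = O(1/sqrt(n)) as in the discussion.
B, C1, C2 = 1.0, 1.0, 1.0
n = 10_000
s = int(math.sqrt(n))
lam = 1.0 / math.sqrt(n)

rate = (s / n) * math.log(1.0 / lam)
a = B * C1 * math.sqrt(rate)
b = B * C2 * rate

r_star = fixed_point(a, b)
upper = a * a + 2.0 * b   # B^2 C1^2 (s/n) log(1/lam) + 2 B C2 (s/n) log(1/lam)
print(r_star, upper)
```

The inequality $r^* \le a^2 + 2b$ follows from $a\sqrt{a^2 + 4b} \le a^2 + 2b$, which holds after squaring both sides.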

3.3 A Fast Approximation of Leverage Weighted RFF

As discussed in Sections 3.1 and 3.2, sampling according to the empirical ridge leverage score distribution (i.e., leverage weighted RFF) could speed up kernel methods. However, computing ridge leverage scores is as costly as inverting the Gram matrix. To address this computational shortcoming, we propose a simple algorithm to approximate the empirical ridge leverage score distribution and the leverage weights. In particular, we propose to first sample a pool of $s$ features from the spectral measure $p(\cdot)$ and form the feature matrix $Z_s \in \mathbb{R}^{n \times s}$ (Algorithm 1, lines 1-2). Then, the algorithm associates an approximate empirical ridge leverage score to each feature (Algorithm 1, lines 3-4) and samples a set of $l \ll s$ features from the pool proportional to the computed scores (Algorithm 1, line 5). The output of the algorithm can be compactly represented via the feature matrix $Z_l \in \mathbb{R}^{n \times l}$ such that the $i$th row of $Z_l$ is given by $z_{x_i}(v) = [\sqrt{l/p_1}\,z(v_1, x_i), \cdots, \sqrt{l/p_l}\,z(v_l, x_i)]^T$.

Algorithm 1 APPROXIMATE LEVERAGE WEIGHTED RFF

Input: sample of examples $\{(x_i, y_i)\}_{i=1}^n$, shift-invariant kernel function $k$, and regularization parameter $\lambda$

Output: set of features $\{(v_1, p_1), \cdots, (v_l, p_l)\}$ with $l$ and each $p_i$ computed as in lines 3–4

1: sample $s$ features $\{v_1, \ldots, v_s\}$ from $p(v)$

2: create a feature matrix $Z_s$ such that the $i$th row of $Z_s$ is $[z(v_1, x_i), \cdots, z(v_s, x_i)]^T$

3: associate with each feature $v_i$ a real number $p_i$ such that $p_i$ is equal to the $i$th diagonal element of the matrix $Z_s^T Z_s\big((1/s) Z_s^T Z_s + n\lambda I\big)^{-1}$

4: $l \leftarrow \sum_{i=1}^s p_i$ and $M \leftarrow \{(v_i, p_i/l)\}_{i=1}^s$

5: sample $l$ features from $M$ using the multinomial distribution given by the vector $(p_1/l, \cdots, p_s/l)$

The computational cost of Algorithm 1 is dominated by the operations in step 3. As $Z_s \in \mathbb{R}^{n \times s}$, the multiplication of matrices $Z_s^T Z_s$ costs $O(ns^2)$ and inverting $Z_s^T Z_s + n\lambda I$ costs only $O(s^3)$. Hence, for $s \ll n$, the overall runtime is only $O(ns^2 + s^3)$. Moreover, $Z_s^T Z_s = \sum_{i=1}^n z_{x_i}(v) z_{x_i}(v)^T$, so the product can be accumulated one rank-one matrix $z_{x_i}(v) z_{x_i}(v)^T$ at a time. Thus, the algorithm only needs to store an $s \times s$ matrix and can avoid storing $Z_s$, which would incur a cost of $O(n \times s)$.
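The steps of Algorithm 1 can be sketched in NumPy as follows. This is an illustrative reading rather than the authors' implementation: it assumes a Gaussian kernel, the feature map $z(v, x) = \sqrt{2}\cos(v^T x + b)$ with a uniformly random offset $b$, and it exposes the number of re-sampled features as a hypothetical argument `n_select` instead of deriving it from the sum of the scores. All function and variable names are made up for this example.

```python
import numpy as np


def approx_leverage_rff(X, lam, s, n_select, bandwidth=1.0, seed=0):
    """Sketch of Algorithm 1 for a Gaussian kernel (assumed feature map)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    # Line 1: pool of s frequencies from the spectral measure of the kernel.
    V = rng.normal(scale=1.0 / bandwidth, size=(s, d))
    offsets = rng.uniform(0.0, 2.0 * np.pi, size=s)
    # Line 2: feature matrix Z_s of shape (n, s).
    Z = np.sqrt(2.0) * np.cos(X @ V.T + offsets)
    # Line 3: p_i is the i-th diagonal entry of Z^T Z ((1/s) Z^T Z + n*lam*I)^{-1}.
    G = Z.T @ Z
    A = G / s + n * lam * np.eye(s)
    # G and A^{-1} commute, so solving A M = G yields the same matrix.
    scores = np.clip(np.diag(np.linalg.solve(A, G)), 0.0, None)
    # Line 4: normalise the scores into a multinomial distribution over the pool.
    total = scores.sum()
    probs = scores / total
    # Line 5: re-sample features from the pool proportional to the scores.
    idx = rng.choice(s, size=n_select, replace=True, p=probs)
    return V[idx], offsets[idx], scores[idx], total, Z


# Hypothetical usage on synthetic two-dimensional inputs.
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 2))
lam, s = 0.05, 20
V_sel, b_sel, p_sel, total, Z = approx_leverage_rff(X, lam, s, n_select=8)
print(V_sel.shape, total)
```

Under this literal reading of line 3, the scores sum to $s$ times the effective dimension of the approximate kernel matrix $(1/s) Z_s Z_s^T$, which is why the sketch leaves the number of selected features to the caller. Only the $s \times s$ matrix `G` is needed for the scores, in line with the cost discussion above.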

The following theorem gives the convergence rate for the expected risk of Algorithm 1 in the kernel ridge regression setting.

Theorem 9. Suppose the conditions from Theorem 1 apply to the regression problem defined with a shift-invariant kernel $k$, a sample of examples $\{(x_i, y_i)\}_{i=1}^n$, and a regularization parameter $\lambda$. Let $s$ be the number of random Fourier features in the pool of features from Algorithm 1, sampled using the spectral measure $p(\cdot)$ from Eq. (2) and the regularization parameter $\lambda$. Denote with $f_l^{\lambda^*}$ the ridge regression estimator obtained using a regularization parameter $\lambda^*$ and a set of random Fourier features $\{v_i\}_{i=1}^l$ returned by Algorithm 1. If

$$
s \ge \frac{7 z_0^2}{\lambda}\log\frac{16 d_K^\lambda}{\delta} \quad \text{and} \quad l \ge 5 d_{\tilde{K}}^{\lambda^*}\log\frac{16 d_{\tilde{K}}^{\lambda^*}}{\delta},
$$

then for all $\delta \in (0, 1)$, with probability $1 - \delta$, the expected risk of $f_l^{\lambda^*}$ can be upper bounded as

$$
\mathcal{E}(f_l^{\lambda^*}) \le \mathcal{E}(f_{\mathcal{H}}) + 2\lambda + 2\lambda^* + O\!\left(\frac{1}{\sqrt{n}}\right).
$$

Moreover, this upper bound holds for $l \in \Omega(\frac{s}{n\lambda})$.

Theorem 9 bounds the expected risk of the ridge regression estimator over random features generated by Algorithm 1. We can now observe that using the minimax choice of the regularization parameter for kernel ridge regression $\lambda, \lambda^* \propto n^{-1/2}$, the number of features that Algorithm 1 needs to sample from the spectral measure of the kernel $k$ is $s \in \Omega(\sqrt{n}\log n)$. Then, the ridge regression estimator $f_l^{\lambda^*}$ converges with the minimax rate to the hypothesis $f_{\mathcal{H}} \in \mathcal{H}$ for $l \in \Omega(\log n \cdot \log\log n)$. This is a significant improvement compared to the spectral measure sampling (plain RFF), which requires $\Omega(n^{3/2})$ features for in-sample training and $\Omega(\sqrt{n}\log n)$ for out-of-sample test predictions.

Proof. Suppose the examples $\{(x_i, y_i)\}_{i=1}^n$ are independent and identically distributed and that the kernel $k$ can be decomposed as in Eq. (2). Let $\{v_i\}_{i=1}^s$ be an independent sample selected according to $p(v)$. Then, using these $s$ features we can approximate the kernel as

$$
\tilde{k}(x, y) = \frac{1}{s}\sum_{i=1}^s z(v_i, x) z(v_i, y) = \int_{\mathcal{V}} z(v, x) z(v, y)\,d\hat{P}(v), \tag{34}
$$

where $\hat{P}$ is the empirical measure on $\{v_i\}_{i=1}^s$. Denote the reproducing kernel Hilbert space associated with kernel $\tilde{k}$ by $\tilde{\mathcal{H}}$ and suppose that kernel ridge regression was performed with the approximate kernel $\tilde{k}$. From Theorem 1 and Corollary 2, it follows that if

$$
s \ge \frac{7 z_0^2}{\lambda}\log\frac{16 d_K^\lambda}{\delta},
$$

then for all $\delta \in (0, 1)$, with probability $1 - \delta$, the risk convergence rate of the kernel ridge regression estimator based on random Fourier features can be upper bounded by

$$
\mathcal{E}(f_\alpha^\lambda) \le 2\lambda + O\!\left(\frac{1}{\sqrt{n}}\right) + \mathcal{E}(f_{\mathcal{H}}). \tag{35}
$$

Let $f_{\tilde{\mathcal{H}}}$ be the function in the reproducing kernel Hilbert space $\tilde{\mathcal{H}}$ achieving the minimal risk, i.e., $\mathcal{E}(f_{\tilde{\mathcal{H}}}) = \inf_{f \in \tilde{\mathcal{H}}}\mathcal{E}(f)$. We now treat $\tilde{k}$ as the actual kernel that can be decomposed via the expectation with respect to the empirical measure in Eq. (34) and re-sample features from the set $\{v_i\}_{i=1}^s$, but this time the sampling is performed using the optimal ridge leverage scores. As $\tilde{k}$ is the actual kernel, it follows from Eq. (5) that the leverage function in this case can be defined by

$$
l_\lambda(v) = p(v)\, z_v(x)^T(\tilde{K} + n\lambda I)^{-1} z_v(x).
$$

Now, observe that

$$
l_\lambda(v_i) = p(v_i)\,[Z_s^T(\tilde{K} + n\lambda I)^{-1} Z_s]_{ii},
$$

where $[A]_{ii}$ denotes the $i$th diagonal element of matrix $A$. As $\tilde{K} = (1/s) Z_s Z_s^T$, the Woodbury inversion lemma implies that

$$
l_\lambda(v_i) = p(v_i)\,\Big[Z_s^T Z_s\Big(\frac{1}{s} Z_s^T Z_s + n\lambda I\Big)^{-1}\Big]_{ii}.
$$
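The last step replaces an $n \times n$ inverse by an $s \times s$ one. For the approximate kernel matrix $(1/s) Z_s Z_s^T$, the identity $Z_s^T\big(\tfrac{1}{s} Z_s Z_s^T + n\lambda I\big)^{-1} Z_s = Z_s^T Z_s\big(\tfrac{1}{s} Z_s^T Z_s + n\lambda I\big)^{-1}$ holds for the full matrices, not only for their diagonals. A minimal numerical check, assuming a random matrix in place of the actual feature matrix (the sizes and $\lambda$ are arbitrary):

```python
import numpy as np

rng = np.random.default_rng(0)
n, s, lam = 40, 15, 0.1
Z = rng.normal(size=(n, s))          # stand-in for the feature matrix Z_s

# Left-hand side: uses the n x n approximate kernel matrix (1/s) Z Z^T.
K_tilde = Z @ Z.T / s
left = Z.T @ np.linalg.solve(K_tilde + n * lam * np.eye(n), Z)

# Right-hand side: uses only the s x s matrix Z^T Z, as in line 3 of Algorithm 1.
G = Z.T @ Z
right = G @ np.linalg.inv(G / s + n * lam * np.eye(s))

max_gap = np.max(np.abs(left - right))
print(max_gap)
```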

If we let $l_\lambda(v_i) = p_i$, then the optimal distribution for $\{v_i\}_{i=1}^s$ is multinomial with individual probabilities $q(v_i) = p_i / (\sum_{j=1}^s p_j)$. Hence, we can re-sample $l$ features according to $q(v)$ and perform linear ridge regression using the sampled leverage weighted features. Denoting this estimator with $f_l^{\lambda^*}$ and the corresponding number of degrees of freedom with $d_{\tilde{K}}^\lambda = \mathrm{Tr}\,\tilde{K}(\tilde{K} + n\lambda I)^{-1}$, we deduce (using Theorem 1 and Corollary 1)

$$
\mathcal{E}(f_l^{\lambda^*}) \le 2\lambda^* + O\!\left(\frac{1}{\sqrt{n}}\right) + \mathcal{E}(f_{\tilde{\mathcal{H}}}), \tag{36}
$$

with the number of features $l \propto d_{\tilde{K}}^\lambda$. As $f_{\tilde{\mathcal{H}}}$ is the function achieving the minimal risk over $\tilde{\mathcal{H}}$, we can conclude that $\mathcal{E}(f_{\tilde{\mathcal{H}}}) \le \mathcal{E}(f_\alpha^\lambda)$. Now, combining Eqs. (35) and (36), we obtain the final bound on $\mathcal{E}(f_l^{\lambda^*})$.

Theorem 10 extends this generalization analysis to kernel support vector machines and logistic regression. Compared to the previous result, the convergence rate of the expected risk is, however, at a slightly slower $O(\sqrt{\lambda} + \sqrt{\lambda^*})$ rate due to the difference in the employed loss function (see Section 3.2).

Theorem 10. Suppose the conditions from Theorem 6 apply to a learning problem with Lipschitz continuous loss, a shift-invariant kernel $k$, a sample of examples $\{(x_i, y_i)\}_{i=1}^n$, and a regularization parameter $\lambda$. Let $s$ be the number of random Fourier features in the pool of features from Algorithm 1, sampled using the spectral measure $p(\cdot)$ from Eq. (2) and the regularization parameter $\lambda$. Denote with $g_l^{\lambda^*}$ the estimator obtained using a regularization parameter $\lambda^*$ and a set of random Fourier features $\{v_i\}_{i=1}^l$ returned by Algorithm 1. If

$$
s \ge \frac{5 z_0^2}{\lambda}\log\frac{16 d_K^\lambda}{\delta} \quad \text{and} \quad l \ge 5 d_{\tilde{K}}^{\lambda^*}\log\frac{16 d_{\tilde{K}}^{\lambda^*}}{\delta},
$$

then for all $\delta \in (0, 1)$, with probability $1 - \delta$, the expected risk of $g_l^{\lambda^*}$ can be upper bounded as

$$
\mathcal{E}(g_l^{\lambda^*}) \le \mathcal{E}(g_{\mathcal{H}}) + \sqrt{2\lambda} + \sqrt{2\lambda^*} + O\!\left(\frac{1}{\sqrt{n}}\right).
$$

We conclude by pointing out that the proposed algorithm provides an interesting new trade-off between the computational cost and prediction accuracy. In particular, one can pay an upfront cost (same as plain RFF) to compute the leverage scores, re-sample significantly fewer features and employ them in the training, cross-validation, and prediction stages. This can reduce the computational cost for predictions at test points from $O(\sqrt{n}\log n)$ to $O(\log n \cdot \log\log n)$. Moreover, in the case where the number of features with approximated leverage scores is the same as in plain RFF, the prediction accuracy would be significantly improved, as demonstrated in our experiments.

4. Numerical Experiments

In this section, we report the results of our numerical experiments (on both simulated and real-world datasets) aimed at validating our theoretical results and demonstrating the utility of Algorithm 1. We first verify our results through a simulation experiment. Specifically, we consider a spline kernel of order $r$ where $k_{2r}(x, y) = 1 + \sum_{m=1}^\infty \frac{1}{m^{2r}}\cos 2\pi m(x - y)$ (also considered by Bach, 2017b; Rudi and Rosasco, 2017). If the marginal distribution of $X$ is uniform on $[0, 1]$, we can show that $k_{2r}(x, y) = \int_0^1 z(v, x) z(v, y) q^*(v)\,dv$, where $z(v, x) = k_r(v, x)$ and $q^*(v)$ is also uniform on $[0, 1]$.
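The spline kernel series can be evaluated by truncation. The sketch below is an illustrative assumption about how one might compute it (the truncation level `n_terms` and the function name are not from the paper). For $r = 1$ it compares the truncated sum against the standard closed form $\sum_{m \ge 1} \cos(2\pi m t)/m^2 = \pi^2(t^2 - t + 1/6)$ for $t \in [0, 1]$, which follows from the Fourier series of the second Bernoulli polynomial.

```python
import numpy as np


def spline_kernel(x, y, r, n_terms=2000):
    """Truncated series 1 + sum_{m=1}^{n_terms} cos(2*pi*m*(x - y)) / m^(2r)."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    m = np.arange(1, n_terms + 1, dtype=float)   # float avoids integer overflow
    terms = np.cos(2.0 * np.pi * diff[..., None] * m) / m ** (2 * r)
    return 1.0 + terms.sum(axis=-1)


# Closed form for r = 1 at t = x - y in [0, 1].
t = 0.3
closed_form = 1.0 + np.pi ** 2 * (t ** 2 - t + 1.0 / 6.0)
approx = float(spline_kernel(t, 0.0, r=1))
print(approx, closed_form)
```

The truncation error for $r = 1$ is bounded by the tail $\sum_{m > 2000} m^{-2} < 1/2000$, and it shrinks faster for larger $r$.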


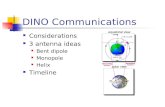

We let $y$ be a Gaussian random variable with mean $f(x) = k_t(x, x_0)$ (for some $x_0 \in [0, 1]$) and variance $\sigma^2$. We sample features according to $q^*(v)$ to estimate $f$ and compute the excess risk. By Theorem 1 and Corollary 1, if the number of features is proportional to $d_K^\lambda$ and $\lambda \propto n^{-1/2}$, we should expect the excess risk to converge at $O(n^{-1/2})$, or at $O(n^{-1/3})$ if $\lambda \propto n^{-1/3}$. Figure 1 demonstrates this with different values of $r$ and $t$.

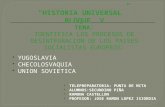

Next, we make a comparison between the performances of leverage weighted (computed according to Algorithm 1) and plain RFF on real-world datasets. We use four datasets from Chang and Lin (2011) and Dheeru and Karra Taniskidou (2017) for this purpose, including two for regression and two for classification: CPU, KINEMATICS, COD-RNA and COVTYPE. Except KINEMATICS, the other three datasets were used in Yang et al. (2012) to investigate the difference between the Nyström method and plain RFF. We use the ridge regression and SVM package from Pedregosa et al. (2011) as a solver to perform our experiments. We evaluate the regression tasks using the root mean squared error and the classification ones using the average percentage of misclassified examples. The Gaussian/RBF kernel is used for all the datasets with hyper-parameter tuning via 5-fold inner cross validation. We have repeated all the experiments 10 times and reported the average test error for each dataset. Figure 2 compares the performances of leverage weighted and plain RFF. In regression tasks, we observe that the upper bound of the confidence interval for the root mean squared error corresponding to leverage weighted RFF is below the lower bound of the confidence interval for the error corresponding to plain RFF. Similarly, the lower bound of the confidence interval for the classification accuracy of leverage weighted RFF is (most of the time) higher than the upper bound on the confidence interval for plain RFF. This indicates that leverage weighted RFFs perform statistically significantly better than plain RFFs in terms of the learning accuracy and/or prediction error.

5. Discussion

We have investigated the generalization properties of learning with random Fourier features in the context of different kernel methods: kernel ridge regression, support vector machines, and kernel logistic regression. In particular, we have given generic bounds on the number of features required for consistency of learning with two sampling strategies: leverage weighted and plain random Fourier features. The derived convergence rates account for the complexity of the target hypothesis and the structure of the reproducing kernel Hilbert space with respect to the marginal distribution of a data-generating process. In addition to this, we have also proposed an algorithm for fast approximation of empirical ridge leverage scores and demonstrated its superiority in both theoretical and empirical analyses.

Figure 1: The log-log plot of the theoretical and simulated risk convergence rates, averaged over 100 repetitions.

For kernel ridge regression, Avron et al. (2017) and Rudi and Rosasco (2017) have extensively analyzed the performance of learning with random Fourier features. In particular, Avron et al. (2017) have shown that $o(n)$ features are enough to guarantee a good estimator in terms of its empirical risk. The authors of that work have also proposed a modified data-dependent sampling distribution and demonstrated that a further reduction in the number of random Fourier features is possible for leverage weighted sampling. However, their results do not provide a convergence rate for the expected risk of the estimator, which could still potentially imply that computational savings come at the expense of statistical efficiency. Furthermore, the modified sampling distribution can only be used in the 1D Gaussian kernel case. While Avron et al. (2017) focus on bounding the empirical risk of an estimator, Rudi and Rosasco (2017) give a comprehensive study of the generalization properties of random Fourier features for kernel ridge regression by bounding the expected risk of an estimator. The latter work shows for the first time that $\Omega(\sqrt{n} \log n)$ features are sufficient to guarantee the (kernel ridge regression) minimax rate and observes that further improvements to this result are possible by relying on a data-dependent sampling strategy. However, such a distribution is defined in a complicated way and it is not clear how one could devise a practical algorithm by sampling from it. While in our analysis of learning with random Fourier features we also bound the expected risk of an estimator, the analysis is not restricted to kernel ridge regression and covers other kernel methods such as support vector machines and kernel logistic regression. In addition to this, our derivations are much simpler than those of Rudi and Rosasco (2017) and provide sharper bounds in some cases. More specifically, we have demonstrated that $\Omega(\sqrt{n} \log \log n)$ features are sufficient to attain the minimax rate in the case where the eigenvalues of the Gram matrix have a geometric/exponential decay. In other cases, we have recovered the results from Rudi and Rosasco (2017). Another important difference with respect to this work is that we consider a data-dependent sampling distribution based on empirical ridge leverage scores, showing that it can further reduce the number of features and in this way provide a more effective estimator.
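As a rough feel for the gap between the two feature counts quoted above, the short sketch below evaluates $\sqrt{n} \log n$ and $\sqrt{n} \log \log n$ for a few sample sizes. This is only arithmetic on the leading terms: the constants hidden by the asymptotic notation are ignored, natural logarithms are assumed, and the function names are hypothetical.

```python
import math

def features_log(n):
    """Leading term sqrt(n) * log(n), constants ignored."""
    return math.sqrt(n) * math.log(n)

def features_loglog(n):
    """Leading term sqrt(n) * log(log(n)), constants ignored."""
    return math.sqrt(n) * math.log(math.log(n))

for n in (10**4, 10**6, 10**8):
    ratio = features_log(n) / features_loglog(n)
    print(f"n={n:>9}: sqrt(n) log n = {features_log(n):10.0f}, "
          f"sqrt(n) log log n = {features_loglog(n):10.0f}, ratio = {ratio:.1f}")
```

For $n = 10^6$ the two leading terms are roughly 13,800 and 2,600, so the saving grows with $n$ even though both counts remain far below $n$.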

Figure 2: Comparison of leverage weighted and plain RFFs, with weights computed according to Algorithm 1.

In addition to the squared error loss, we also investigate the properties of learning with random Fourier features using Lipschitz continuous loss functions. Both Rahimi and Recht (2009) and Bach (2017b) have studied this problem setting and shown that $\Omega(n)$ features are needed to ensure an $O(1/\sqrt{n})$ expected risk convergence rate. Moreover, Bach (2017b) has defined an optimal sampling distribution by referring to the leverage score function based on the integral operator and shown that the number of features can be significantly reduced when the eigenvalues of a Gram matrix exhibit a fast decay. The $\Omega(n)$ requirement on the number of features is too restrictive and precludes any computational savings. Also, the optimal sampling distribution is typically intractable. In our analysis, by assuming the realizable case, we have demonstrated for the first time that $O(\sqrt{n})$ features suffice to guarantee an $O(1/\sqrt{n})$ risk convergence rate. In extreme cases, where the complexity of the target function is small, a constant number of features is enough to guarantee fast risk convergence. Moreover, we also provide a much simpler form of the empirical leverage score distribution and demonstrate that the number of features can be significantly smaller than $n$, without incurring any loss of statistical efficiency.

Having given risk convergence rates for learning with random Fourier features, we provide a fast and practical algorithm for sampling them in a data-dependent way, such that they approximate the ridge leverage score distribution. In the kernel ridge regression setting, our theoretical analysis demonstrates that compared to spectral measure sampling significant computational savings can be achieved while preserving the statistical properties of the estimator. We further test our findings on several different real-world datasets and verify this empirically. An interesting extension of our empirical analysis would be a thorough and comprehensive comparison of the proposed leverage weighted sampling scheme to other recently proposed data-dependent strategies for selecting good features (e.g., Rudi et al., 2018; Zhang et al., 2018), as well as a comparison to the Nyström method.
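The data-dependent sampling idea summarized above can be sketched as follows. This is an illustration only and should not be read as a transcription of Algorithm 1: it assumes that approximate ridge leverage scores are taken as the diagonal of $C (C + \lambda I)^{-1}$ with $C = Z^\top Z / n$ computed from an initial pool of plain random features, that the number of resampled features is set to the rounded-up sum of these scores, and that resampled columns are importance weighted so that the pool's kernel approximation is preserved in expectation. All function names and parameter values are hypothetical.

```python
import numpy as np

def ridge_leverage_probs(Z, lam):
    """Approximate ridge leverage scores of the columns (features) of Z.

    Returns the normalized sampling probabilities and the sum of the scores.
    """
    n, s = Z.shape
    C = Z.T @ Z / n
    M = np.linalg.solve(C + lam * np.eye(s), C)   # (C + lam I)^{-1} C
    scores = np.clip(np.diag(M), 0.0, None)       # guard against round-off
    total = float(scores.sum())
    return scores / total, total

def resample_features(Z, q, l, rng):
    """Draw l columns of Z with probabilities q and importance weight them."""
    idx = rng.choice(Z.shape[1], size=l, replace=True, p=q)
    # Dividing by sqrt(l * q) keeps E[Z_new Z_new^T] equal to Z Z^T.
    return Z[:, idx] / np.sqrt(l * q[idx]), idx

rng = np.random.default_rng(0)
n, d, s = 300, 2, 100
X = rng.uniform(-1.0, 1.0, size=(n, d))
# Initial pool: plain random Fourier features for a Gaussian kernel.
W = rng.normal(scale=np.sqrt(2.0), size=(s, d))
b = rng.uniform(0.0, 2.0 * np.pi, size=s)
Z = np.sqrt(2.0 / s) * np.cos(X @ W.T + b)

q, d_eff = ridge_leverage_probs(Z, lam=1e-2)
l = max(1, int(np.ceil(d_eff)))                   # illustrative choice of l
Z_lev, idx = resample_features(Z, q, l, rng)
print(f"pool size s={s}, sum of scores={d_eff:.2f}, resampled features l={l}")
```

The resampled matrix `Z_lev` can then be passed to any linear solver in place of `Z`; the cost of computing the scores is dominated by the $s \times s$ linear system rather than anything of size $n \times n$.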

Acknowledgments: We thank Fadhel Ayed, Qinyi Zhang and Anthony Caterini for fruitful discussion on some of the results as well as for proofreading of this paper. This work was supported by the EPSRC and MRC through the OxWaSP CDT programme (EP/L016710/1). Dino Oglic was supported in part by EPSRC grant EP/R012067/1. Zhu Li was supported in part by Huawei UK.

References

Ahmed Alaoui and Michael W Mahoney. Fast randomized kernel ridge regression with statistical guarantees. In Advances in Neural Information Processing Systems, pages 775–783, 2015.

Haim Avron, Michael Kapralov, Cameron Musco, Christopher Musco, Ameya Velingker, and Amir Zandieh. Random Fourier features for kernel ridge regression: Approximation bounds and statistical guarantees. In International Conference on Machine Learning, pages 253–262, 2017.

Francis Bach. Sharp analysis of low-rank kernel matrix approximations. In Conference on Learning Theory, pages 185–209, 2013.

Francis Bach. Breaking the curse of dimensionality with convex neural networks. Journal of Machine Learning Research, 18(19):1–53, 2017a.

Francis Bach. On the equivalence between kernel quadrature rules and random feature expansions. Journal of Machine Learning Research, 18(21):1–38, 2017b.

Peter L Bartlett and Shahar Mendelson. Rademacher and Gaussian complexities: Risk bounds and structural results. Journal of Machine Learning Research, 3(Nov):463–482, 2002.

Peter L Bartlett, Olivier Bousquet, Shahar Mendelson, et al. Local Rademacher complexities. The Annals of Statistics, 33(4):1497–1537, 2005.

Alain Berlinet and Christine Thomas-Agnan. Reproducing kernel Hilbert spaces in probability and statistics. Springer Science & Business Media, 2011.

Salomon Bochner. Vorlesungen über Fouriersche Integrale. In Akademische Verlagsgesellschaft, 1932.

Andrea Caponnetto and Ernesto De Vito. Optimal rates for the regularized least-squares algorithm. Foundations of Computational Mathematics, 7(3):331–368, 2007.

Chih-Chung Chang and Chih-Jen Lin. LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology, 2:27:1–27:27, 2011. Software available at http://www.csie.ntu.edu.tw/~cjlin/libsvm.

Dua Dheeru and Efi Karra Taniskidou. UCI machine learning repository, 2017. URL http://archive.ics.uci.edu/ml.

Trevor J Hastie. Generalized additive models. In Statistical models in S, pages 249–307. Routledge, 2017.

Michael W Mahoney and Petros Drineas. CUR matrix decompositions for improved data analysis. Proceedings of the National Academy of Sciences, 106(3):697–702, 2009.

Evert J. Nyström. Über die praktische Auflösung von Integralgleichungen mit Anwendungen auf Randwertaufgaben. Acta Mathematica, 1930.

Dino Oglic and Thomas Gärtner. Greedy feature construction. In Advances in Neural Information Processing Systems 29, pages 3945–3953. Curran Associates, Inc., 2016.

F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830, 2011.

Ali Rahimi and Benjamin Recht. Random features for large-scale kernel machines. In Advances in Neural Information Processing Systems, pages 1177–1184, 2007.

Ali Rahimi and Benjamin Recht. Weighted sums of random kitchen sinks: Replacing minimization with randomization in learning. In Advances in Neural Information Processing Systems, pages 1313–1320, 2009.

Alessandro Rudi and Lorenzo Rosasco. Generalization properties of learning with random features. In Advances in Neural Information Processing Systems, pages 3218–3228, 2017.

Alessandro Rudi, Raffaello Camoriano, and Lorenzo Rosasco. Less is more: Nyström computational regularization. In Advances in Neural Information Processing Systems, pages 1657–1665, 2015.

Alessandro Rudi, Luigi Carratino, and Lorenzo Rosasco. Falkon: An optimal large scale kernel method. In Advances in Neural Information Processing Systems, pages 3891–3901, 2017.

Alessandro Rudi, Daniele Calandriello, Luigi Carratino, and Lorenzo Rosasco. On fast leverage score sampling and optimal learning. In Advances in Neural Information Processing Systems, pages 5672–5682, 2018.

Walter Rudin. Fourier analysis on groups. Courier Dover Publications, 2017.

Bernhard Schölkopf and Alexander J. Smola. Learning with kernels: Support vector machines, regularization, optimization, and beyond. MIT Press, 2001.

Bernhard Schölkopf, Koji Tsuda, and Jean-Philippe Vert. Kernel methods in computational biology. MIT Press, 2004.

Alexander J. Smola and Bernhard Schölkopf. Sparse greedy matrix approximation for machine learning. In Proceedings of the 17th International Conference on Machine Learning, 2000.

Bharath Sriperumbudur and Zoltán Szabó. Optimal rates for random Fourier features. In Advances in Neural Information Processing Systems, pages 1144–1152, 2015.

Ingo Steinwart and Andreas Christmann. Support vector machines. Springer Science & Business Media, 2008.

Yitong Sun, Anna Gilbert, and Ambuj Tewari. But how does it work in theory? Linear SVM with random features. In Advances in Neural Information Processing Systems, pages 3379–3388, 2018.

Dougal J Sutherland and Jeff Schneider. On the error of random Fourier features. In Proceedings of the Thirty-First Conference on Uncertainty in Artificial Intelligence, pages 862–871. AUAI Press, 2015.

Joel A Tropp. An introduction to matrix concentration inequalities. Foundations and Trends® in Machine Learning, 8(1-2):1–230, 2015.

Christopher K. I. Williams and Matthias Seeger. Using the Nyström method to speed up kernel machines. In Advances in Neural Information Processing Systems 13. 2001.

Tianbao Yang, Yu-Feng Li, Mehrdad Mahdavi, Rong Jin, and Zhi-Hua Zhou. Nyström method vs random Fourier features: A theoretical and empirical comparison. In Advances in Neural Information Processing Systems, pages 476–484, 2012.

Jian Zhang, Avner May, Tri Dao, and Christopher Ré. Low-precision random Fourier features for memory-constrained kernel approximation. arXiv preprint arXiv:1811.00155, 2018.

Yuchen Zhang, John Duchi, and Martin Wainwright. Divide and conquer kernel ridge regression: A distributed algorithm with minimax optimal rates. The Journal of Machine Learning Research, 16(1):3299–3340, 2015.
