
ACTIVE DEEP LEARNING FOR EFFICIENT AERIAL IMAGE LABELLING IN DISASTER RESPONSE

Jordan Yap*, Arash Vahdat*, Ferda Ofli†, Patrick Meier‡, Greg Mori*

*School of Computing Science, Simon Fraser University, Canada

† Qatar Computing Research Institute, Hamad bin Khalifa University, Doha, Qatar

‡ Humanitarian UAV Network (UAViators)

ABSTRACT

Efficient analysis of aerial imagery has great potential to assist in disaster response efforts. In this paper we develop methods based on active learning to detect objects of interest. We formulate an approach based on fine-tuning deep networks for object recognition based on labels obtained from a human user. We demonstrate that this approach can effectively adapt features to recognize objects in aerial images taken after a disaster strikes.

Index Terms— active learning, object recognition

1. INTRODUCTION

When a disaster happens, relief efforts need to begin coordinating immediately; delays of days or weeks could have dire consequences for those affected. It is essential to determine which areas have been most seriously affected and where to deploy limited relief resources for maximum effectiveness. A satellite can quickly scan a disaster zone, or multiple UAVs can be sent to fly over a large area and collect images in a short amount of time. Processing this image data to determine disaster impact is a daunting task given the large volume and diversity of potential image data. One approach is human labelling: people could manually look at each captured image and determine which regions contain damage and which do not. The damage resulting from a typhoon or hurricane can be spread over a vast area, so image data collected from such disasters can require a massive amount of manual human effort to analyze. Efforts based on crowd-sourcing could be used, but the scale of the data makes a timely, cost-effective response very difficult.

Instead, automatic methods for analyzing the imagery could be used. One could create a training dataset of disaster-zone imagery and use it to build a computer vision model. However, a priori learning of computer vision models that can accurately analyze generic disaster-zone imagery is problematic. Intra-class variation and the peculiarities of the specific disaster region will confound these models. To address this, in this work we develop an approach based on active learning. Active learning methods incrementally build a training dataset by querying a human user for labels. Given a specific dataset such as the Typhoon Haiyan imagery, we query human users for labels. These labels are used to train automated classification algorithms, and the process is iterated. In the Typhoon Haiyan situation, a key assessment is the number of coconut trees destroyed by the typhoon. Coconut trees are a primary food and income source for people in this region. Hence, the goal of our approach is to detect healthy and destroyed coconut trees. Human users can provide labels by clicking on images (per-image) or labelling individual bounding boxes that are extracted from an image (per-region). In this paper we explore deep learning approaches for active learning. We examine the effectiveness of fine-tuning deep features and the trade-offs of human annotation on a per-image and per-region basis in an active learning setting.

The authors would like to thank Nvidia for providing the GPUs used in this paper as well as Sky Eye Media for providing the UAV imagery used in the Typhoon Haiyan dataset.

In summary, the contributions of this paper are: (1) developing active learning algorithms based on deep learning, (2) exploring active learning from per-image and per-region labels, and (3) demonstrating the application of active learning to assisting disaster relief efforts.

2. PREVIOUS WORK

In this paper we develop active deep learning algorithms for analyzing aerial disaster imagery. There is substantial literature in these areas, and we briefly review previous work below.

Object Recognition: State-of-the-art approaches for object recognition utilize large labelled image collections and deep learning techniques. ImageNet [1] is the standard dataset for object recognition, containing a diverse set of object categories, and focused on internet images. Deep learning approaches [2–5] leverage this labelled dataset to successfully classify images. Further, the features learned from ImageNet are effective for transfer to other analysis tasks. In this paper we explore active learning methods for fine-tuning deep networks.

Active Learning: Active learning refers to settings where the learning algorithm can query a human labeller to provide additional data [6]. In the recent vision literature, active learning has been used to build a collection of labelled data while being efficient with human labelling effort (e.g. [7–9]). As an example, Russakovsky et al. [10] use a Markov decision process to model cost-accuracy tradeoffs between human labelling and automated algorithm labelling for object detection.

Aerial Image Analysis: Remote sensing image analysis is an active area of research. An overview is provided in Mather and Koch [11]. Analysis spans a range from detecting/segmenting objects and classifying regions to analyzing human behaviour, and includes damage assessment datasets (e.g. [12]). Examples of recent work include Blanchart et al. [13], who utilize SVM-based active learning to analyze aerial images in a coarse-to-fine setting. Bruzzone and Prieto [14] is an example of a change detection-based analysis technique. Zhang et al. [15] develop coding schemes for classifying aerial images by land use. Oreifej et al. [16] recognize people from aerial images.

3. METHODS

3.1. Dataset

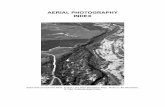

We use a new dataset of images captured in the aftermath of Typhoon Haiyan over a region in Javier, Leyte, Philippines. A UAV was flown overhead at a constant altitude, with each image capturing an area of 250 m² at a resolution of 1024 by 1024 pixels. Fig. 1 shows an example image.

Fig. 1: Sample image captured from a UAV (N10°46′20″ E124°59′00″)

There are 438 images in the dataset, mostly containing foliage, but roads, lakes, vehicles and buildings are also frequently present. We manually annotated 2200 ‘Healthy’ coconut trees (fronds of the coconut tree remain intact) and 5700 ‘Destroyed’ trees (fronds and pieces of the trunk strewn across the ground). Images are processed with a sliding window approach: a fixed 256x256 region is scanned across the entire image at a step size of 100 pixels. The standard criterion of intersection-over-union (IOU) greater than or equal to 0.5 is used to determine correct detections. This approach results in 23450 ‘Other’ background regions in total.
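The scanning and matching steps described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, and dropping windows that would overrun the image border is an assumption, since the paper does not say how edges are handled.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def sliding_windows(image_size: int = 1024, window: int = 256, step: int = 100) -> List[Box]:
    """Enumerate square windows that fit fully inside a square image."""
    # Assumption: windows that would extend past the border are skipped.
    offsets = range(0, image_size - window + 1, step)
    return [(x, y, x + window, y + window) for y in offsets for x in offsets]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def is_match(window_box: Box, annotation: Box, threshold: float = 0.5) -> bool:
    """Apply the IOU >= 0.5 criterion quoted in the text."""
    return iou(window_box, annotation) >= threshold
```

Under these assumptions each axis has eight window positions, so the per-image region count of this sketch need not agree with the totals reported in the paper.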

3.2. Active Deep Learning

We have developed an active learning method utilizing deep learning; our system fine-tunes a deep neural network with the regions that have been labelled by an oracle. Compared to an active learning system that uses fixed features (e.g. extracted from a pre-trained AlexNet [2]), we show that by continuously fine-tuning the network our system can suggest better images to the oracle for labelling on the next iteration, and so outperform a system using fixed features alone.

Our method follows the notation used by Mozafari et al. [17] and has two components. A querying strategy determines which image the oracle should label next, based on how much that label is expected to increase classification performance. A classification algorithm predicts which class a datapoint belongs to with a confidence score w_i ∈ [0, 1], where a score of 1 means the classifier is 100% certain that a given region is destroyed. The goal is to obtain a classifier that over time learns to classify regions accurately while presenting as few images as possible to the oracle for labelling.

To initialize training of the classifier, at least one region from each of the possible classes must be labelled. The classifier used in our system is a deep network [2] pre-trained on the ImageNet dataset. The processing pipeline is illustrated in Fig. 2 (Red - Destroyed, Blue - Healthy, Black - Other).

Fig. 2: Pipeline for Active Deep Learning: 1) Fine-tune network with labelled images 2) Get oracle to label the most uncertain unlabelled image using 3) Image labelling OR Region labelling 5) Add newly labelled data to training set

3.3. Querying Strategy

In order to obtain the largest increase in classification accuracy at each iteration when labelled images are added, a querying strategy uses classification scores to determine which image will be labelled next. In this work, the most-uncertain approach is used to choose the next image to label. This approach is equivalent to a min-margin approach, in which we choose to label the images that the classifier is most uncertain of [18]. For our experiments we run each region through our classifier to obtain a probability score. We then average the scores of all regions in an image to obtain an overall uncertainty score w_x for an image x. In our case we want the oracle to label images where the average probability of all the regions in that image is closest to 0.5 (most uncertain). We do this using Eqn 1, where U denotes the set of unlabelled images:

$$\operatorname*{arg\,min}_{x \in U} \; \lvert w_x - 0.5 \rvert \qquad (1)$$

The image found to be most uncertain is then labelled by a user, and regions from that image are added to the set used for fine-tuning our deep network.
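As a rough illustration of this selection rule, the sketch below averages per-region probabilities into w_x and ranks images by |w_x − 0.5|. The function names, the dictionary layout and the example scores are all hypothetical; only the averaging and the arg-min criterion come from the text.

```python
from typing import Dict, List


def image_score(region_scores: List[float]) -> float:
    """Average the per-region probabilities of one image to obtain w_x."""
    return sum(region_scores) / len(region_scores)


def most_uncertain(unlabelled: Dict[str, List[float]], k: int = 1) -> List[str]:
    """Return the k image ids whose averaged score lies closest to 0.5."""
    ranked = sorted(unlabelled, key=lambda x: abs(image_score(unlabelled[x]) - 0.5))
    return ranked[:k]


# Made-up region probabilities for three unlabelled images.
scores = {
    "img_a": [0.9, 0.8, 0.95],
    "img_b": [0.4, 0.6, 0.55],
    "img_c": [0.1, 0.2, 0.3],
}
print(most_uncertain(scores))       # img_b has the mean closest to 0.5
print(most_uncertain(scores, k=2))  # two images per query, as in the image-based runs
```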

3.4. Fine-Tuning the Deep Network

All labelled regions at a given iteration are used to fine-tune our model, adapting it to the types of images found in the dataset. Fine-tuning is done for a fixed number of epochs of training; in this way, a system can interleave training and querying a user for additional labelled data.
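The interleaving of fine-tuning and querying might be organised as in the skeleton below. It is only a sketch under stated assumptions: `fine_tune` and `score_images` are hypothetical callables standing in for the network training and scoring steps, and adding an image id to the labelled set stands in for the oracle supplying labels.

```python
from typing import Callable, Dict, List, Set

Scores = Dict[str, float]  # image id -> averaged score w_x


def active_learning_loop(
    pool: Set[str],
    initial_labelled: Set[str],
    fine_tune: Callable[[Set[str]], None],
    score_images: Callable[[Set[str]], Scores],
    rounds: int,
    per_round: int = 2,
) -> List[List[str]]:
    """Alternate fine-tuning and querying; return the images queried in each round."""
    labelled = set(initial_labelled)  # copy so the caller's set is not modified
    history: List[List[str]] = []
    for _ in range(rounds):
        unlabelled = pool - labelled
        if not unlabelled:
            break
        fine_tune(labelled)  # assumed to run a fixed number of epochs internally
        scores = score_images(unlabelled)
        # Rank by distance from 0.5; the id breaks ties deterministically.
        ranked = sorted(unlabelled, key=lambda x: (abs(scores[x] - 0.5), x))
        picked = ranked[:per_round]
        labelled.update(picked)  # stands in for the oracle labelling these images
        history.append(picked)
    return history
```

Passing the two steps in as callables keeps the loop independent of any particular deep learning framework, which is a design choice of this sketch rather than something stated in the paper.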

4. RESULTS

Performance Evaluation: For our experiments we used the Typhoon Haiyan dataset imagery, and performance is evaluated by Area Under the Precision-Recall Curve (PR-AUC). During each iteration of testing our system performs the designated number of epochs of fine-tuning and selects the image or regions whose score is closest to 0.5 (the most uncertain). To be able to perform multiple comparable trials, the initial regions used for training were fixed to be the same for all experiments, and pre-collected labels were used in place of an oracle to conduct our active learning experiments. We compare the performance of our active deep learning system after 5 and 100 epochs of fine-tuning to a softmax classifier that uses fixed fc7 deep features extracted from a pre-trained AlexNet that has not been fine-tuned.
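The paper does not say how the area under the precision-recall curve is computed. One common estimate is average precision, a step-wise sum over the ranked detections; the sketch below uses that estimate as an assumption, with a hypothetical function name and made-up scores in the usage lines.

```python
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Step-wise area under the precision-recall curve (average precision)."""
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one positive example")
    # Rank detections from most to least confident.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    true_positives = 0
    area = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            true_positives += 1
            # Each positive raises recall by 1/positives; weight by precision at this rank.
            area += (true_positives / rank) / positives
    return area


# Made-up example: positives ranked first and third out of four detections.
ap = average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])
print(round(ap, 4))  # (1/1 + 2/3) / 2
```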

Figures 3 and 4 show the performance using image-based and region-based labelling. We compare having the oracle label an entire image with having it label each region individually, because the small region size may cause the oracle to make more incorrect decisions. Image-based labelling also appears to be the faster task, since the oracle only has to click on areas of destroyed and healthy trees and does not need to label the many ‘Other’ (background) regions that exist. Our experiments show that the image-based method increases performance faster and with less querying of the oracle than the region-based method.

We examine two problems: detecting trees and detecting destroyed trees. Each of these tasks is useful for a damage assessment, either identifying ratios or absolute counts of destroyed trees. The tree detection task (Tree vs. Non-tree) combines the ‘Destroyed’ and ‘Healthy’ regions into one class (Tree), compared against the ‘Other’ class. For detecting destroyed trees, ‘Destroyed’ regions are compared to the combined ‘Healthy’ and ‘Other’ classes.
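The two binary tasks amount to two different groupings of the three region labels. A small sketch of that mapping follows; the function names are hypothetical, and the class strings are taken from the text.

```python
from typing import List

CLASSES = ("Destroyed", "Healthy", "Other")


def to_tree_task(label: str) -> int:
    """Tree vs. Non-tree: 'Destroyed' and 'Healthy' together form the positive class."""
    if label not in CLASSES:
        raise ValueError(f"unknown label: {label}")
    return 1 if label in ("Destroyed", "Healthy") else 0


def to_destroyed_task(label: str) -> int:
    """Destroyed vs. rest: only 'Destroyed' is positive."""
    if label not in CLASSES:
        raise ValueError(f"unknown label: {label}")
    return 1 if label == "Destroyed" else 0


region_labels: List[str] = ["Destroyed", "Healthy", "Other", "Destroyed"]
print([to_tree_task(l) for l in region_labels])
print([to_destroyed_task(l) for l in region_labels])
```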

4.1. Image-based Labelling Performance

4.1.1. Detecting destroyed trees

Fig. 3 shows the performance when the oracle labels the two most uncertain images on each iteration. In the task of classifying destroyed trees (Fig. 3, left), fine-tuning the network yields a considerable improvement, up to 18%, over using fixed features. Fixed features initially give comparable performance, but once more images have been labelled and the network has been allowed to fine-tune, the two approaches begin to diverge after 10 labelled images.

4.1.2. Tree detection

We can see in Fig. 3 (right) that increasing the number of epochs of fine-tuning only helps for the first few iterations. After 12 labelled images, the performance using 5 epochs of fine-tuning matches that of 100 epochs of fine-tuning. Both of these Active Deep Learning methods are significantly better than using fixed features.

4.2. Region-based Labelling Performance

4.2.1. Detecting destroyed trees

In Fig. 4 we have the oracle label the 10 most uncertain regions on each iteration. As with image-based labelling, once more regions have been labelled fine-tuning makes a significant improvement. For example, after 400 labelled regions performance is significantly above the baseline with fixed features. Fine-tuning for 100 epochs between queries gives an increase in performance over fine-tuning for just 5 epochs.

4.2.2. Tree detection

Results using a region-based labelling method for the less challenging Tree vs. Non-tree problem are shown in Fig. 4 (right). Fine-tuning can outperform using fixed features, but the difference is not as pronounced as in the other settings described previously. Fine-tuning for 100 epochs seems to be better than just 5, but the difference is very small (∼2% higher PR-AUC).

Fig. 3: Image Based - Querying 2 Images / Iteration

Fig. 4: Region Based - Querying 10 Regions / Iteration

Fig. 5 shows qualitative results. Typical failure cases include background foliage similar to coconut trees. This includes false positive healthy tree detections arising from other plants, and false positive destroyed tree detections from densely scattered shrubbery similar to destroyed trees. These challenges are also present for human annotators; obtaining “ground truth” for this problem is difficult.

5. CONCLUSION

In this work we explored fine-tuning the deep neural network that provides the features used in an active learning system. Compared to systems that use fixed features, our work shows that fine-tuning a deep neural network yields significant performance improvements, especially during early stages of training. We also discuss two ways of having a user label an image in the Typhoon Haiyan dataset and acknowledge the trade-offs between them. More broadly, developing such image analysis systems has direct humanitarian applications around the world for assessing the impact of major disasters on food security in tropical countries. The benefits of these systems, and the need for further research, are set to grow given the challenges climate change poses.

Fig. 5: Real world output of our system in action. Red/Blue - Ground Truth Destroyed/Healthy Regions. Yellow/Green - Classified Destroyed/Healthy Regions.

6. REFERENCES

[1] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei, “ImageNet large scale visual recognition challenge,” International Journal of Computer Vision (IJCV), pp. 1–42, 2015.

[2] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton, “Imagenet classification with deep convolutional neural networks,” in NIPS, 2012.

[3] Pierre Sermanet, David Eigen, Xiang Zhang, Michael Mathieu, Rob Fergus, and Yann LeCun, “Overfeat: Integrated recognition, localization and detection using convolutional networks,” ICLR, 2014.

[4] Karen Simonyan and Andrew Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

[5] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich, “Going deeper with convolutions,” in CVPR, 2015.

[6] David Cohn, Les Atlas, and Richard Ladner, “Improving generalization with active learning,” Mach. Learn., vol. 15, no. 2, pp. 201–221, May 1994.

[7] Rong Yan, Jie Yang, and Alexander Hauptmann, “Automatically labeling video data using multi-class active learning,” in International Conference on Computer Vision (ICCV), 2003.

[8] S. Bandla and K. Grauman, “Active learning of an action detector from untrimmed videos,” in International Conference on Computer Vision (ICCV), 2013.

[9] Carl Vondrick and Deva Ramanan, “Video annotation and tracking with active learning,” in Advances in Neural Information Processing Systems 24, 2011.

[10] Olga Russakovsky, Li-Jia Li, and Li Fei-Fei, “Best of both worlds: human-machine collaboration for object annotation,” in CVPR, 2015.

[11] Paul Mather and Magaly Koch, Computer processing of remotely-sensed images: an introduction, John Wiley & Sons, 2011.

[12] “Remote sensing damage assessment: UNITAR/UNOSAT, EC JRC and World Bank,” 2010.

[13] Pierre Blanchart, Marin Ferecatu, and Mihai Datcu, “Cascaded active learning for object retrieval using multiscale coarse to fine analysis,” in International Conference on Image Processing (ICIP), 2011.

[14] L. Bruzzone and D. Prieto, “An adaptive semiparametric and context-based approach to unsupervised change detection in multitemporal remote-sensing images,” IEEE Trans. Image Processing, vol. 11, no. 4, pp. 452–466, 2002.

[15] Hui Zhang, Jinfang Zhang, and Fanjiang Xu, “Land use and land cover classification base on image saliency map cooperated coding,” in International Conference on Image Processing (ICIP), 2015.

[16] O. Oreifej, R. Mehran, and M. Shah, “Human identity recognition in aerial images,” in CVPR, 2010.

[17] Barzan Mozafari, Purna Sarkar, Michael Franklin, Michael Jordan, and Samuel Madden, “Scaling up crowd-sourcing to very large datasets: A case for active learning,” Proc. VLDB Endow., vol. 8, no. 2, pp. 125–136, Oct. 2014.

[18] Greg Schohn and David Cohn, “Less is more: Active learning with support vector machines,” in Proceedings of the Seventeenth International Conference on Machine Learning, San Francisco, CA, USA, 2000, ICML ’00, pp. 839–846, Morgan Kaufmann Publishers Inc.