4.1 Introduction - YunTech

4.1 Introduction

4.2 Design of a Multilayer Perceptron with 1 Hidden Layer to Solve the XOR Classification Problem

4.3 Gradient and Gradient Descent Method in Optimization

4.4 Multilayer Perceptron (MLP) and Forward Computation

4.5 Back-propagation Learning Rule (BP)

4.6 Experiment of XOR Classification & Discussions

4.7 On Hidden Nodes for Neural Nets

4.8 Application - NETtalk: A Parallel Network That Learns to Read Aloud

4.9 Functional-Link Net

4.1 Introduction

對於Figure 4.1-1 Exclusive-OR的問題,我們不能利

用一層的Perceptron或一層的分辨函數去求得分辨

的區域。但我們可利用多層的Perceptron去解問題

而得到答案。

Fig. 4.1-1. Exclusive OR problem.

4.1

4.2

第3頁

4.3

4.4

4.5

4.6

4.7

4.8

4.9

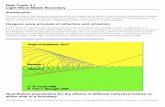

4.1 IntroductionConsider the Exclusive-OR (XOR) problem shown in

Figure 4.1-1. We cannot just use one layer of perceptron

or discriminant function to achieve classification.

However, we can solve this problem by using the

multilayer perceptron.

Fig. 4.1-1. Exclusive OR problem.

• Weighting coefficient adjustment is based on the gradient descent method.

Fig. 4.1-2 Two-layer Perceptron of neural network.

4.2 Multilayer Perceptron with 1 Hidden Layer to Solve the XOR Classification Problem

XOR is a two-class mapping problem in 2-D space. Its corresponding 2-2-1 neural network is shown in Fig. 4.1-3. We need to determine the weighting coefficients of the network so that it can solve the XOR two-class problem.

Fig. 4.1-3 2-2-1 neural network.

• In 2-D space, we can draw 2 parallel lines, L1 and L2, to separate the 2 classes.

• As the 2-2-1 network shows, a single hidden layer with two hidden nodes is enough to solve the XOR problem.

• The first layer determines the two lines (one per perceptron).

Line equations for L1, L2

• Each of L1 and L2 separates the space into two half-planes (+1 or -1).

• The perceptron in the second layer separates the combinations of +1 and -1 (its inputs) into 2 classes.

• There are 4 combinations of (P1, P2) in total: (0, 0), (0, 1), (1, 1), (1, 0).
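To make the two-line construction concrete, here is a minimal Python sketch of a 2-2-1 network with hard-limiter nodes. The line equations (x1 + x2 - 0.5 = 0 and x1 + x2 - 1.5 = 0) and the output-node weights are assumptions chosen for illustration; they are not taken from the slides, whose figures give their own coefficients.

```python
def hardlimiter(s):
    """Hard-limiter activation: +1 if s >= 0, otherwise -1."""
    return 1 if s >= 0 else -1


def xor_net(x1, x2):
    """2-2-1 network with assumed (hypothetical) weights."""
    # Hidden node 1, assumed line L1: x1 + x2 - 0.5 = 0
    p1 = hardlimiter(x1 + x2 - 0.5)
    # Hidden node 2, assumed line L2: x1 + x2 - 1.5 = 0
    p2 = hardlimiter(x1 + x2 - 1.5)
    # Output node (assumed weights): +1 only when the point lies
    # between the two lines, i.e. p1 = +1 and p2 = -1
    return hardlimiter(p1 - p2 - 1.0)


for point in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(point, xor_net(*point))
```

With these assumed weights, the points (0, 1) and (1, 0) fall between the two parallel lines and are mapped to +1, while (0, 0) and (1, 1) are mapped to -1.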

The activation function of node 1 and node 2 is the hard limiter, so each output value is +1 or -1. Let P1 and P2 denote the output values of node 1 and node 2, respectively.

4 cases/combinations of (P1, P2)

Case 1: If L1 and L2 partition the space as shown in the figure below,

then the mapping from the input training patterns to the hidden nodes and to the desired class outputs is given in the following table:

Plotting the hidden node outputs and their desired outputs in another space gives the figure below, which is a "Not AND, Not OR" partition of the space. Here the P1 axis represents the output of perceptron P1, and the P2 axis represents the output of perceptron P2.

The complete 2-2-1 network and its weighting coefficients are shown in the figure below:

Case 2: If L1 and L2 partition the space as shown in the figure below,

then the mapping from the input training patterns to the hidden nodes and to the desired class outputs is given in the following table:

Plotting the hidden node outputs and their desired outputs in another space gives the figure below, which is a "Not AND" partition of the space.

The 2-2-1 network and all the weighting coefficients are shown below:

Case 3: If L1 and L2 partition the space as shown in the figure below,

then the mapping from the input training patterns to the hidden nodes and to the desired class outputs is given in the following table:

Plotting the hidden node outputs and their desired outputs in another space gives the figure below, which is an "OR" partition of the space.

The 2-2-1 network and all the weighting coefficients are shown below:

Case 4: If L1 and L2 partition the space as shown in the figure below,

then the mapping from the input training patterns to the hidden nodes and to the desired class outputs is given in the following table:

Plotting the hidden node outputs and their desired outputs in another space gives the figure below, which is an "OR" partition of the space.

The 2-2-1 network and all the weighting coefficients are shown below:

4.3 Gradient and Gradient Descent Method in Optimization

The gradient descent method is used in the back-propagation learning rule of the perceptron and the multilayer perceptron.

(A) Gradient

Example 4-1

Fig.4.3-1 Function and gradient at different location.

Example 4-2

Fig. 4.3-2 (a) Function, (b) Gradient at different locations.

(B) Gradient descent method

Fig. 4.3-3 Optimal solution by gradient descent.

Fig. 4.3-4 Function of 2 variables.

Gradient descent method: find the minimum of a given function, like walking downhill into a valley.

Drawback: the search may settle at a local minimum.
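The downhill search and its local-minimum drawback can be illustrated with a short Python sketch. The test function f(x) = x^4 - 2x^2 + 0.5x, the step size eta, and the starting points are assumptions chosen for illustration and do not come from the slides.

```python
def f(x):
    # Assumed example function with two valleys (one global, one local)
    return x**4 - 2 * x**2 + 0.5 * x


def grad_f(x):
    # Derivative of the assumed function above
    return 4 * x**3 - 4 * x + 0.5


def gradient_descent(x0, eta=0.01, steps=2000):
    """Repeatedly step against the gradient: x <- x - eta * f'(x)."""
    x = x0
    for _ in range(steps):
        x -= eta * grad_f(x)
    return x


x_right = gradient_descent(2.0)    # starts on the right slope
x_left = gradient_descent(-2.0)    # starts on the left slope
print(x_right, f(x_right))
print(x_left, f(x_left))
```

Both runs stop where the gradient vanishes, but the run started at x = 2 ends in the shallower valley on the right, while the run started at x = -2 reaches the deeper valley on the left. Which minimum is found depends on the starting point.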

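(C) Chain Rule

Given $E(z(y(x)))$,

$$\frac{\partial E}{\partial x} = \frac{\partial E}{\partial z}\,\frac{\partial z}{\partial y}\,\frac{\partial y}{\partial x}$$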
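As a small worked instance of the chain rule, assume (purely for illustration; these functions are not from the slides) that $E = z^2$, $z = 3y$, and $y = x + 1$:

```latex
\begin{aligned}
\frac{\partial E}{\partial z} &= 2z, \qquad
\frac{\partial z}{\partial y} = 3, \qquad
\frac{\partial y}{\partial x} = 1, \\
\frac{\partial E}{\partial x}
  &= \frac{\partial E}{\partial z}\,\frac{\partial z}{\partial y}\,\frac{\partial y}{\partial x}
   = 2z \cdot 3 \cdot 1 = 6z = 18(x + 1).
\end{aligned}
```

Substituting directly gives $E = 9(x + 1)^2$, whose derivative is also $18(x + 1)$, so the two routes agree.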

4.4 Multilayer Perceptron (MLP) and Forward Computation

Fig. 4.4-1 Two-layer perceptron of neural network.

• The system is shown in Fig. 4.4-1. The index of a node in the input layer is i.

• There are I+1 input nodes; the J+1 nodes in the hidden layer are indexed by j, and the K nodes in the output layer are indexed by k.

• The input vector has I features: x1, x2, …, xI. A constant input of 1 must be added at the input layer, so that each node computes a linear combination of the variables plus a constant term. The same is done at each hidden layer.

• Fig. 4.4-2 shows the mathematical computation.

Fig. 4.4-2 Two-layer perceptron of neural network.

• w1 is a matrix whose jth row holds the weighting coefficients connecting the input y to the jth hidden node in the first layer.

• w2 is a matrix whose jth row holds the weighting coefficients connecting the output o1 of the first layer to the jth node in the second layer.

At the input layer, $o_i = x_i$ and $o_{I+1} = 1$.

At the hidden layer, for the j-th node,

$$s_j = \sum_{i=1}^{I+1} w_{ji}\, o_i, \qquad o_j = f(s_j)$$
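The forward computation can be sketched in Python as follows. This is a minimal illustration, not the slides' own program: the sigmoid is an assumed choice for the activation f, and the function and variable names (forward, w1, w2) are hypothetical.

```python
import math


def sigmoid(s):
    """Assumed activation function f; the slides only write f(s)."""
    return 1.0 / (1.0 + math.exp(-s))


def forward(x, w1, w2, f=sigmoid):
    """Forward pass of an I-J-K network.

    w1: J rows of I+1 weights (hidden layer), w2: K rows of J+1 weights
    (output layer). The last weight in each row multiplies the constant 1.
    """
    o_in = list(x) + [1.0]                      # o_i = x_i, o_{I+1} = 1
    o_hid = [f(sum(w * o for w, o in zip(row, o_in))) for row in w1]
    o_hid_aug = o_hid + [1.0]                   # constant 1 at the hidden layer
    o_out = [f(sum(w * o for w, o in zip(row, o_hid_aug))) for row in w2]
    return o_hid, o_out


hidden, output = forward([1.0, 2.0],
                         [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
                         [[0.0, 0.0, 0.0]])
print(hidden, output)
```

With all weights set to zero every net input s is 0, so each sigmoid node outputs 0.5 regardless of the input.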

4.5 Back-propagation Learning Rule (BP)

• During learning, the adjustment of the weighting coefficients propagates from the output layer back to the inner hidden layers, so it is called the back-propagation (BP) learning algorithm.

• The multilayer perceptron is also called the back-propagation learning neural network (BPNN).

Fig. 4.5-1 Back-propagation learning in multilayer perceptron.

4.5.1 Analysis

(A) One hidden layer case (I-J-K network)

Given an input training pattern vector x and its desired output vector d, we can compute the actual output vector o. Consider the sum of squared errors $E = \frac{1}{2}\,\|\mathbf{d} - \mathbf{o}(t)\|^2$ and the weighting coefficient $w_{kj}$.

Fig. 4.5-2 Adjust weighting coefficient $w_{kj}$ between output and hidden layer.
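The derivation on the original slides is not preserved in this transcript. As a hedged sketch, the standard gradient-descent update for $w_{kj}$ follows from the chain rule, assuming $s_k = \sum_j w_{kj}\, o_j$, $o_k = f(s_k)$, and a learning rate written here as $\eta$ (the symbols $\eta$ and $\delta_k$ are assumptions, not taken from the slides):

```latex
\begin{aligned}
E &= \tfrac{1}{2} \sum_{k=1}^{K} (d_k - o_k)^2, \\
\frac{\partial E}{\partial w_{kj}}
  &= \frac{\partial E}{\partial o_k}\,\frac{\partial o_k}{\partial s_k}\,\frac{\partial s_k}{\partial w_{kj}}
   = -(d_k - o_k)\, f'(s_k)\, o_j, \\
\Delta w_{kj} &= -\eta\, \frac{\partial E}{\partial w_{kj}} = \eta\, \delta_k\, o_j,
\qquad \delta_k = (d_k - o_k)\, f'(s_k).
\end{aligned}
```

For a sigmoid activation, $f'(s_k) = o_k (1 - o_k)$, so $\delta_k$ can be computed from the outputs alone.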

Fig. 4.5-3 Adjust weighting coefficient 𝑤𝑗𝑖 between hidden and input layers.
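The slide equations for this step are not preserved in the transcript. As a hedged sketch under the usual assumptions ($s_j = \sum_i w_{ji}\, o_i$, $o_j = f(s_j)$, $s_k = \sum_j w_{kj}\, o_j$, $o_k = f(s_k)$, $E = \tfrac{1}{2}\sum_k (d_k - o_k)^2$, with the learning rate $\eta$ and the symbols $\delta_k$, $\delta_j$ introduced here for illustration), the update for $w_{ji}$ sums the error signals of all output nodes fed by hidden node j:

```latex
\begin{aligned}
\frac{\partial E}{\partial w_{ji}}
  &= \sum_{k=1}^{K} \frac{\partial E}{\partial o_k}\,\frac{\partial o_k}{\partial s_k}\,
     \frac{\partial s_k}{\partial o_j}\,\frac{\partial o_j}{\partial s_j}\,\frac{\partial s_j}{\partial w_{ji}}
   = -\Bigl[\sum_{k=1}^{K} (d_k - o_k)\, f'(s_k)\, w_{kj}\Bigr] f'(s_j)\, o_i, \\
\Delta w_{ji} &= -\eta\, \frac{\partial E}{\partial w_{ji}} = \eta\, \delta_j\, o_i,
\qquad \delta_j = f'(s_j) \sum_{k=1}^{K} \delta_k\, w_{kj},
\qquad \delta_k = (d_k - o_k)\, f'(s_k).
\end{aligned}
```

The output-layer error signals $\delta_k$ are thus propagated backward through the weights $w_{kj}$, which is where the name back-propagation comes from.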

(B) Two hidden layers case (B-I-J-K network)

Fig. 4.5-4 Adjust weighting $w_{ib}$ between hidden and input layers.

3. Adjust weighting $w_{ib}$ between hidden (index i) and input (index b) layers.

(C) Three hidden layers case (A-B-I-J-K) and n hidden layers case

4.5.2 Back-propagation learning algorithm of one-hidden layer perceptron (I)

Algorithm: Back-propagation learning rule for a perceptron with one hidden layer (I) (the error is calculated after each input sample)
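The algorithm's detailed steps are not preserved in this transcript. The following Python sketch shows one plausible form of a per-sample update, assuming a sigmoid activation, the squared error E = 1/2 * sum (d_k - o_k)^2, and a learning rate eta; the function names and starting weights are hypothetical.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def forward(x, w1, w2):
    """Return augmented input, augmented hidden outputs, and outputs."""
    o_i = list(x) + [1.0]
    o_j = [sigmoid(sum(w * o for w, o in zip(row, o_i))) for row in w1] + [1.0]
    o_k = [sigmoid(sum(w * o for w, o in zip(row, o_j))) for row in w2]
    return o_i, o_j, o_k


def train_on_sample(x, d, w1, w2, eta=0.5):
    """Update w1 and w2 in place for one sample; return the error before the update."""
    o_i, o_j, o_k = forward(x, w1, w2)
    error = 0.5 * sum((dk - ok) ** 2 for dk, ok in zip(d, o_k))
    # Output-layer error signals: (d_k - o_k) * f'(s_k), with f' = o(1 - o)
    delta_k = [(dk - ok) * ok * (1.0 - ok) for dk, ok in zip(d, o_k)]
    # Hidden-layer error signals, computed with the weights before they change
    delta_j = [o_j[j] * (1.0 - o_j[j])
               * sum(delta_k[k] * w2[k][j] for k in range(len(w2)))
               for j in range(len(w1))]
    for k, row in enumerate(w2):
        for j in range(len(row)):
            row[j] += eta * delta_k[k] * o_j[j]
    for j, row in enumerate(w1):
        for i in range(len(row)):
            row[i] += eta * delta_j[j] * o_i[i]
    return error


# Hypothetical starting weights for a 2-2-1 network
w1 = [[0.1, -0.2, 0.0], [0.3, 0.1, 0.0]]
w2 = [[0.2, -0.1, 0.0]]
first_error = train_on_sample([0.0, 1.0], [1.0], w1, w2)
for _ in range(200):
    last_error = train_on_sample([0.0, 1.0], [1.0], w1, w2)
print(first_error, last_error)
```

In a full training run the function would be called once per training pattern, cycling through all patterns until the error falls below a chosen threshold.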

(A) Major steps of algorithm

(B) Flowchart of programming

4.5.3 Back-propagation learning algorithm of one-hidden layer perceptron (II)

Algorithm: Back-propagation learning rule for a perceptron with one hidden layer (II) (the error is calculated after one iteration is complete)

Input: N augmented vectors y1, …, yN, and the corresponding desired output vectors d1, …, dN.

Output: The weighting coefficients wji and wkj for the first and second layers, respectively.
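A minimal Python sketch of this variant accumulates the error and the gradients over all N patterns and updates the weights once per iteration. The sigmoid activation, the learning rate eta, the function names, and the starting weights are assumptions made for illustration; the slides' own steps are not preserved in this transcript.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def batch_error_and_gradients(ys, ds, w_ji, w_kj):
    """Return total error E and dE/dw for both layers, summed over all patterns."""
    g_ji = [[0.0] * len(row) for row in w_ji]
    g_kj = [[0.0] * len(row) for row in w_kj]
    total_error = 0.0
    for y, d in zip(ys, ds):
        # y is already augmented with a trailing 1
        o_j = [sigmoid(sum(w * v for w, v in zip(row, y))) for row in w_ji] + [1.0]
        o_k = [sigmoid(sum(w * v for w, v in zip(row, o_j))) for row in w_kj]
        total_error += 0.5 * sum((dk - ok) ** 2 for dk, ok in zip(d, o_k))
        delta_k = [(dk - ok) * ok * (1.0 - ok) for dk, ok in zip(d, o_k)]
        delta_j = [o_j[j] * (1.0 - o_j[j])
                   * sum(delta_k[k] * w_kj[k][j] for k in range(len(w_kj)))
                   for j in range(len(w_ji))]
        for k in range(len(w_kj)):
            for j in range(len(o_j)):
                g_kj[k][j] -= delta_k[k] * o_j[j]     # dE/dw_kj = -delta_k * o_j
        for j in range(len(w_ji)):
            for i in range(len(y)):
                g_ji[j][i] -= delta_j[j] * y[i]       # dE/dw_ji = -delta_j * y_i
    return total_error, g_ji, g_kj


def train_one_iteration(ys, ds, w_ji, w_kj, eta=0.5):
    """One weight update after all N patterns; returns the error before the update."""
    error, g_ji, g_kj = batch_error_and_gradients(ys, ds, w_ji, w_kj)
    for row, g_row in zip(w_kj, g_kj):
        for j in range(len(row)):
            row[j] -= eta * g_row[j]
    for row, g_row in zip(w_ji, g_ji):
        for i in range(len(row)):
            row[i] -= eta * g_row[i]
    return error


# XOR patterns as augmented vectors, with hypothetical starting weights
ys = [[0.0, 0.0, 1.0], [0.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
ds = [[0.0], [1.0], [1.0], [0.0]]
w_ji = [[0.5, -0.4, 0.1], [0.3, 0.8, -0.2]]
w_kj = [[0.7, -0.6, 0.05]]
print(train_one_iteration(ys, ds, w_ji, w_kj))
```

Compared with the per-sample rule, this version follows the gradient of the total error over the whole training set, at the cost of one update per pass through the data.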

Steps:

(A) Major steps of algorithm

(B) Flowchart of programming

4.6 Experiment of XOR Classification & Discussions

• A 2-3-2 multilayer perceptron (2 inputs, 1 hidden layer with 3 hidden nodes, and 2 output nodes; effectively 3-4-2 once the constant inputs are counted) is applied to the XOR classification problem.

• The training samples are the 4 points of XOR.

• After learning, we input test points covering the square area and obtain the two classification regions shown below.

Fig. 4.6-1 Classification result of Exclusive-OR using 2-3-2 perceptron.

(a) Error vs. number of iterations (Error threshold is set at 0.01).

(b) Two class regions.

Discussion:

7. Universal Approximation Theorem

The theorem can be applied to multilayer perceptrons,

Multilayer perceptron and decision regions in 2-D space

4.7 On Hidden Nodes for Neural Nets

In 1989, Mirchandani and Cao published the paper "On hidden nodes for neural nets", whose main contribution is the following theorem.

Theorem: For an input of d dimensions and H hidden nodes, the maximum number M of linearly separable regions can be determined as

$$M(H, d) = \sum_{k=0}^{d} C(H, k) = C(H, 0) + C(H, 1) + \cdots + C(H, d)$$

where $C(H, k) = \dfrac{H!}{(H-k)!\,k!}$ and $C(H, k) = 0$ for $H < k$.
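The bound in the theorem is easy to evaluate numerically. The short Python sketch below uses the standard library function math.comb, which returns 0 when k exceeds H and so matches the convention C(H, k) = 0 for H < k; the function name max_regions is a hypothetical choice.

```python
from math import comb


def max_regions(H, d):
    """M(H, d) = sum of C(H, k) for k = 0, ..., d."""
    return sum(comb(H, k) for k in range(d + 1))


for H in range(1, 5):
    print(H, max_regions(H, 2))
```

For a 2-D input, two hidden nodes give at most 4 regions and three hidden nodes give at most 7.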

The remainder of this chapter is omitted.