
426 IEEE TRANSACTIONS ON BIOMEDICAL CIRCUITS AND SYSTEMS, VOL. 4, NO. 6, DECEMBER 2010

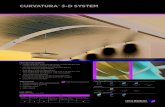

3-D System-on-System (SoS) Biomedical-Imaging Architecture for Health-Care Applications

Sang-Jin Lee, Student Member, IEEE, Omid Kavehei, Student Member, IEEE, Yoon-Ki Hong, Tae Won Cho, Member, IEEE, Younggap You, Member, IEEE, Kyoungrok Cho, Member, IEEE, and Kamran Eshraghian

Abstract: This paper presents the implementation of a 3-D architecture for a biomedical-imaging system based on a multilayered system-on-system structure. The architecture consists of a complementary metal–oxide semiconductor image sensor layer, memory, 3-D discrete wavelet transform (3D-DWT), 3-D Advanced Encryption Standard (3D-AES), and an RF transmitter as an add-on layer. Multilayer silicon (Si) stacking permits fabrication and optimization of individual layers by different processing technologies to achieve optimal performance. Utilization of a through silicon via (TSV) scheme can address the required low-power operation as well as high-speed performance. Potential benefits of 3-D vertical integration include an improved form factor as well as a reduction in the total wiring length, multifunctionality, power efficiency, and flexible heterogeneous integration. The proposed imaging architecture was simulated by using Cadence Spectre and Synopsys HSPICE, while implementation was carried out with Cadence Virtuoso and Mentor Graphics Calibre.

Index Terms: 3D-AES, 3-D discrete wavelet transform (3D-DWT), biomedical imaging, system-on-system (SoS), unary computation.

I. INTRODUCTION

MOBILE HEALTH-CARE monitoring systems and services are rapidly growing as the result of advances in silicon complementary metal–oxide semiconductor (CMOS) scaling as well as rapid improvements in the availability of broadband communication systems and networks. Video images, such as magnetic resonance imaging (MRI), computed tomography (CT), and X-rays introduce heavy demand on the storage capacity of the memory layer of a processing engine. Contemporary research into future digital biomedical-imaging technology depicted in Fig. 1 is conjectured to influence the manner in which radiologists, medical practitioners, and specialists interact. The dynamic and bandwidth requirement of

Manuscript received March 31, 2010; revised July 25, 2010; accepted September 09, 2010. Date of current version November 24, 2010. This work was supported by the World Class University (WCU) Project of MEST and KOSEF through Chungbuk National University under Grant no. R33-2008-000-1040-0. This paper was recommended by Associate Editor A. Bermak.

S.-J. Lee, Y.-K. Hong, T. W. Cho, Y. You, K. Cho, and K. Eshraghian are with the College of Electrical and Computer Engineering, Chungbuk National University, Cheongju 361-763, Chungbuk, South Korea (e-mail: [email protected]; [email protected]; [email protected]; [email protected]; [email protected]; [email protected]).

O. Kavehei is with the College of Electrical and Computer Engineering, Chungbuk National University, Cheongju 361-763, Chungbuk, South Korea. He is also with the School of Electrical and Electronic Engineering, University of Adelaide, Adelaide SA 5005, Australia (e-mail: [email protected]).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TBCAS.2010.2079330

Fig. 1. Proposed ubiquitous mobile digital biomedical-imaging system as part of health-care monitoring and management.

medical sensor data vary significantly from 10 ksamples/s at 20 b/sample for CT, to more than 100 Msamples/s at 16 b/sample for MRI. Medical sensor data rates are increasing exponentially. It is conjectured that next generations of CT and MRI systems are likely to generate 4 Gb/s to more than 200 Gb/s of sampled data [1]. Usually, compression algorithms offer either lossy or lossless operations. As a consequence, several approaches, such as discrete cosine transform (DCT)-based JPEG, MPEG, and H.26x have been implemented to address the complexity associated with the massive amount of data required to be transported in one form or another. Wavelet-based image processing, such as the discrete wavelet transform (DWT), has emerged as an alternative to DCT-based schemes. The approach permits implementation of a highly scalable image compression with inherently superior features, such as progressive lossy-to-lossless performance within a single data stream, the ability to enhance the quality associated with selected spatial regions in selected quality layers, and the absence of blocky artifacts at low bit rates. Substantial clinical research has been devoted to applications of image compression for biomedical equipment and, as a consequence, the medical imaging standard Digital Imaging and Communications in Medicine (DICOM) employs JPEG 2000 [2], [3]. Privacy protection has also been an important issue as medical image data are transmitted through public communication networks. Encryption is an effective way to protect medical information records against modification and eavesdropping of communication channels and, hence, can protect the security and integrity of stored data against tampering.
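The raw data rates implied by the sampling figures quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration; the helper name `data_rate_bps` is hypothetical, and it assumes a single uncompressed sample stream per modality.

```python
def data_rate_bps(samples_per_s, bits_per_sample):
    """Raw (uncompressed) data rate in bits per second."""
    return samples_per_s * bits_per_sample

# Figures quoted in the text: CT at 10 ksamples/s x 20 b, MRI at 100 Msamples/s x 16 b.
ct_rate = data_rate_bps(10e3, 20)
mri_rate = data_rate_bps(100e6, 16)

print(f"CT : {ct_rate / 1e3:.0f} kb/s")   # 200 kb/s
print(f"MRI: {mri_rate / 1e9:.1f} Gb/s")  # 1.6 Gb/s
```

Under these assumptions the MRI stream alone is already in the Gb/s range, which is the motivation for compressing close to the sensor.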

The conventional 2-D systems-on-a-chip (SoC) technology [4] that has characterized implementation strategies of the industry over the last decade has many challenges in terms of

1932-4545/$26.00 © 2010 IEEE

LEE et al.: 3-D SOS BIOMEDICAL-IMAGING ARCHITECTURE 427

Fig. 2. Three-dimensional stacked IC technology featuring 5-µm TSV technology, enabling the interconnection of global wires between different dies [7].

area utilization, long signal paths, and the related complexity of signal routing that requires a large number of inputs/outputs (I/Os), resulting in an increase in power consumption. Consequently, a number of constraints are encountered in the design of high-speed and low-power portable multimedia-based biomedical-imaging systems.

Recent advances in 3-D multilayered fabrication, together with the progress in vertical interconnect technology, such as that of the through silicon via (TSV) shown in Fig. 2, make the 3-D architectural mapping a viable option for gigascale integrated systems [5]–[7] demanded by the more futuristic meta-health cards and the like [8]. Therefore, the combination of 3-D integrated architectures with multilayer silicon die stacking is a promising solution to the severe problem faced by the integrated-circuit (IC) industry as geometries are scaled below 32 nm. Three-dimensional VLSI technology increases packing density while having the potential for providing significant improvement in propagation delay and reduced power consumption when compared to its equivalent 2-D counterpart. For example, propagation delay and energy dissipation associated with interconnects are reduced by up to 50% at a 45-nm feature size [9]. Hence, multilayer Si die stacking is an imminent scheme for mobile health-care monitoring that can address low-power requirements, small footprint/volume, high reliability, as well as the option for high-speed performance. Through utilization of TSV technology, chips can be individually optimized and, hence, vertically stacked. The length of a TSV can range from 20 to 50 µm [10], which is shorter than the interconnects of conventional 2-D SoC designs. The smaller size and shorter physical dimension reduce parasitic effects, resulting in faster and cooler circuits. TSVs are good thermal conductors. Therefore, strategic positioning of TSVs in adjacent layers, inclusion of dummy thermal TSVs, and placement of TSVs within hotspots can enhance thermal conduction of central layers at the cost of utilizing additional chip area. Other options are also available, such as an on-chip thermoelectric thin-film cooler, which has limited promise [11]. However, these exotic techniques are more expensive and have limited applications.

Although scaled transistors show an improved performance across the 90–22-nm range, the wiring interconnects dominate the behavior [5], [12]–[15]. The improvement gained, which is the result of scaled transistors, is insignificant when compared with the influence of scaled interconnects. The 3-D ICs offer a promising solution, reducing the interconnect length and the footprint, without the need for scaled transistors [16]. As a consequence, the focus of this paper is on the realization of a multilayered SoS architecture that consists of an image capture layer (upper layer), memory, 3-D DWT (3D-DWT), 3-D Advanced Encryption Standard (3D-AES), and an RF transmitter that can be implemented as an add-on layer to facilitate secure data transmission over public communication channels. For the image capture layer, many alternatives are feasible that can produce the necessary data stream [17]. The focus in our approach, however, is directed toward the design and simulation of a single-inverter pulsewidth modulation (PWM) readout structure that provides a digital data stream to the next layer and consumes about 5% of the total power.

This paper is organized as follows. Section II generalizes the overall 3-D system architecture for image capture, data compression, and encryption. Section III introduces the image-capturing layer, followed by Section IV where the 3-D image-processing and encryption layers are presented. Performance and related analysis are given in Section V. Section VI concludes this paper.

II. 3-D MULTILAYERED PHYSICAL ARCHITECTURE

The 3-D portable health-care monitoring system comprises multiple Si dies that accommodate system layers, such as the CMOS image sensor (CIS), memory layer, 3D-DWT [18], and 3D-AES [19] blocks, and an add-on RF transmitter. Fig. 3 illustrates the logical architecture as well as the approach toward the segmentation of the layers.

The approach pursued in the design of the CIS layer is based on a single-inverter digital pixel sensor (DPS). In this arrangement, the pixel's output signal is compatible with a unary (in coding the lengths of zeros in a bit vector) data-computation framework in anticipation that this approach will prove advantageous in future designs [20]. In order to represent a decimal number $N$ in the unary number system, an arbitrarily chosen symbol representing "1" is repeated $N$ times. For example, decimal "6" as an 8-b word in unary is represented by "11111100." The significant feature to be noted is that there is only one switching transition from "1" to "0." The unary computation has the potential to improve the frame rate of the CIS and provide further improvement in power dissipation due to the reduction in switching activity. The stored image frames in the memory layer (Fig. 3) are forwarded to the 3D-DWT layer, which consists of a series of decompositions and embedded block coding with optimized truncation (EBCOT). Subsequently, the compressed image data are encrypted by the 3D-AES layer and can be transmitted by the RF transmitter to a host computer. The memory layer may be implemented by using the more conventional SRAM. However, due to the possible requirement for very low power and ultra-high capacity memory, inclusion of the memristor (a portmanteau of memory and resistor) creates new possibilities [21] in implementing this layer. The significant features of the memristor are its ability to remember its state after the removal of the power source, its nanoscale feature size that would allow realization of terabit memories at low power, and its compatibility with CMOS processing technology [5].
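The unary representation described above can be illustrated with a short sketch. The helper names `to_unary` and `count_transitions` are hypothetical and only reproduce the worked example in the text (decimal 6 as an 8-b unary word); they are not part of the authors' design flow.

```python
def to_unary(value, width):
    """Encode `value` as `width` bits: `value` ones followed by zeros."""
    if not 0 <= value <= width:
        raise ValueError("value must lie between 0 and width")
    return "1" * value + "0" * (width - value)

def count_transitions(bits):
    """Count positions where adjacent bits differ (switching events)."""
    return sum(1 for a, b in zip(bits, bits[1:]) if a != b)

unary_word = to_unary(6, 8)        # "11111100"
binary_word = format(6, "08b")     # "00000110"

print(unary_word, count_transitions(unary_word))    # 11111100 1
print(binary_word, count_transitions(binary_word))  # 00000110 2
```

Whatever the encoded value, a unary word contains at most one transition, which is the property the text relies on to argue for reduced switching activity.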

In 3-D implementation, the average global interconnect wiring length and, hence, the overhead delay increase by a square root of the number of layers [22]. Therefore, the smaller feature size and shorter physical dimension of TSV technology, when adopted as the interconnect between layers, reduce the parasitic effects, resulting in faster and cooler circuits. To address the possible dissipation problem of the central layers that could


Fig. 3. Proposed 3-D multilayer secure imaging system where the interlayer interconnections are based on the TSV technology. (a) Logical architecture. (b) Physical implementation using TSVs for connecting the layers.

lead to a rise in temperature, the strategy in our proposed architecture is to change the thermal profile of the central core by interleaving 3D-DWT and 3D-AES into two layers (Fig. 3). This approach distributes the switching activity between two layers and, as a consequence, changes the thermal profile of the core.

Moreover, a significant aspect of the proposed 3-D architecture for medical applications is the possibility for compression to take place at the sensor level [23]. The advantage offered appears in terms of performance (bandwidth/memory requirements) and computational complexity improvements [23], [24].

III. IMAGE CAPTURING: CMOS IMAGE SENSOR LAYER

Conventional CIS usually includes pixel arrays, gain amplifiers, and analog-to-digital converters (ADC) implemented on the same Si die. The overall performance of the CIS can be enhanced if the high-speed digital processor layer and the analog layer are optimized separately and connected by TSV [14]. An asynchronous event-driven signal processor can manage communication between the layers.

Fig. 4. Proposed pixel with conditional reset transistors: M2 and M3.

A. CMOS Image Sensor (CIS) Layer

To improve the dynamic range of the CIS, as an initial step we augmented the conventional CIS design [25] with the addition of conditional reset transistors M2 and M3 in the pixel, as illustrated in Fig. 4. The M3 transistor selects only one pixel to reset conditionally in a given column by the c_reset signal, which is a column-select common signal. The M1 transistor resets the photodiode at the end of every integration period, which prevents pixels from blooming. The source follower transistor M4 amplifies the photodiode's output signal and applies it to a column line of the CIS (pixel out).

1) Conditional Reset Algorithm: The pixel output is sampled at exponentially increasing exposure times $T, 2T, \ldots, 2^{k}T$ and is digitized to $m$ bits [26]. The digitized samples for each pixel are combined into an $(m+k)$-bit binary number, which is further converted to a floating-point number with an exponent ranging from 0 to $k$ and an $m$-bit mantissa. This increases the dynamic range by a factor of $2^{k}$, while providing $m$ bits of resolution for each exponent range of illumination. Since analog-to-digital (A/D) conversion is carried out at each sampling instance, there is a potential problem of blooming due to pixel saturation. A higher dynamic range, however, can be obtained by reducing the sample-to-sample interval [25], [27].
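The multiple-exposure, floating-point idea described above can be sketched behaviorally as follows. This is a simplified model under stated assumptions, not the circuit of [26]: the pixel response is taken to be linear, `intensity` is normalized so that 1.0 saturates the pixel at the shortest exposure, and the symbols `k` (number of exposure doublings) and `m` (mantissa bits) as well as the function names are illustrative choices.

```python
def float_encode(intensity, k=4, m=8):
    """Return (mantissa, exponent) using the longest unsaturated exposure."""
    full_scale = (1 << m) - 1
    chosen = 0
    for j in range(k + 1):                  # exposures T, 2T, ..., 2^k * T
        if intensity * (1 << j) < 1.0:      # sample at exposure 2^j * T not saturated
            chosen = j
        else:
            break
    mantissa = min(full_scale, int(intensity * (1 << chosen) * (1 << m)))
    exponent = k - chosen                   # brighter pixels get larger exponents
    return mantissa, exponent

def float_decode(mantissa, exponent, k=4, m=8):
    """Map (mantissa, exponent) back to a normalized intensity estimate."""
    return mantissa / (1 << m) * (1 << exponent) / (1 << k)

for value in (0.5, 0.01):
    code = float_encode(value)
    print(value, code, float_decode(*code))
```

In this model a dim pixel (0.01) is quantized at the longest exposure and keeps `m` bits of resolution, so the smallest resolvable step shrinks by a factor of 2^k relative to a single exposure.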

In the conditional reset-based pixel, the combination of the photodiode and the fd node's parasitic capacitances was estimated to be 48 fF, and the estimated value of conversion gain [25] was 3.3 µV/e⁻. The pixel output voltage of 1.6 V was refreshed at the 5-ms integration time frames. The photocurrent and the total number of electrons were calculated to be 15.3 pA and 500 000, respectively [25]. The product of the conversion gain and the total number of electrons gives a voltage drop of 1.6 V at the pixel output [25]. The pixel area and fill factor are 16 × 16 µm² and 34%, respectively [25]. To improve the performance of the CIS layer, we redesigned and simulated a 128 × 128 pixel structure (Fig. 5) using a Hynix 0.25-µm standard CMOS process and commercial design tools. Using the conditional reset algorithm, the sensor's dynamic range increases with the number of samples taken during an integration period and is limited only by the speed of the readout circuitry.
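The pixel figures quoted above are mutually consistent, which can be checked with the elementary relations conversion gain = q/C and number of electrons = I·t/q. The sketch below uses only the values stated in the text plus the elementary charge; the variable names are illustrative.

```python
Q_E = 1.602176634e-19        # elementary charge in coulombs

c_node = 48e-15              # photodiode + fd node capacitance (F), from the text
i_photo = 15.3e-12           # photocurrent (A), from the text
t_int = 5e-3                 # integration time (s), from the text

conversion_gain = Q_E / c_node           # volts per electron
electrons = i_photo * t_int / Q_E        # electrons collected per frame
v_drop = conversion_gain * electrons     # equals i_photo * t_int / c_node

print(f"conversion gain: {conversion_gain * 1e6:.2f} uV per electron")
print(f"electrons      : {electrons:.0f}")
print(f"voltage drop   : {v_drop:.2f} V")
```

The results (about 3.3 µV per electron, slightly under 500 000 electrons, and about 1.6 V) agree with the rounded values given in the paragraph.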


Fig. 5. CMOS image sensor chip layout. (a) CIS chip layout used for extraction and simulations. (b) Individual pixel layout.

Fig. 6. New pixel circuit with an inverter acting as an A/D converter. The "0" to "1" transition at the output of the inverter can occur only once, which implies a reduction in switching activity and, hence, an improvement in power dissipation.

B. Advanced CIS Pixel

The circuit depicted in Fig. 4 has an advantage in dynamic range. However, the pixel area is larger than that of the conventional structure. The need for inclusion of an A/D converter also influences area utilization, speed, and power consumption. To overcome the area/speed limitations and to address the need for the CIS layer to be connected directly to the next layer, a new digital pixel sensor (DPS) approach, shown in Fig. 6, is investigated. The pixel output in this novel circuit is a pulsewidth-modulated signal that transfers illumination levels directly to the next layer. The amount of charge induced by the incident illumination determines the output state of the inverter. Pixel information at an 8-b depth has 256 steps of luminance. Within the unary number system, there is only one transition in 256 steps, which implies that it is possible to reduce the dynamic power dissipation of the circuit [20]. Unary arithmetic is therefore an important strategy for the design of future processors for low-power and portable applications. The M3 transistor shown in Fig. 6 is used to cancel the offset voltage while the M2 transistor is OFF. The M3 and reset transistors are ON simultaneously. Since the inverter's output is not fully charged, depending on the illumination level, it gradually charges as the input to the inverter decreases. As a consequence, it is possible to increase the frame rate by reducing the integration time.

The new pixel downloads frame data to the processor layer through TSVs in parallel. Since the integration time can be several milliseconds, the pixel has adequate time to accumulate the input charge even under low-level incident illumination. Moreover, the dynamic switching "0" to "1" transition occurs

Fig. 7. Physical architecture of a multilayered CIS, which also includes the memory layer as well as the compression (3D-DWT) and encryption (3D-AES) layers.

only once at the output data stream of the pixel as shown in Fig. 6.

The physical architecture of a multilayered CIS, which also includes the compression (3D-DWT) and encryption (3D-AES) layers, is shown in Fig. 7. The pixel array and peripheral circuitry are implemented in separate layers and linked by TSV interconnects. The upper layer comprises pixel arrays, row drivers and decoders, and RGB sample-and-hold circuitry. The lower layers perform image storage in the memory, such as SRAM or memristor [21], image compression with DWT, and encryption by AES.

IV. 3-D IMAGE SIGNAL-PROCESSING ARCHITECTURE

The DWT algorithm has been widely used for image-processing techniques [18]. Traditionally, the wavelet scheme in image processing is based on the 2D-DWT, where the focus is on treating only still images as planes. In contrast, the 3D-DWT expands the 2D-DWT to 3-D for the compression of volumetric data, such as that created by CT and MRI scanners. The 3D-DWT has the advantage of allowing a series of 2-D images to be further compressed in the third dimension by exploiting correlation between the adjacent images in the series. The outcome provides better compression ratios as well as the absence of blocking artifacts [28], [29]. Therefore, such an approach is implemented in a variety of applications, including image compression and noise reduction demanded by MRI and CT scans. The algorithm for DWT is 3-D while that of AES is 2-D. However, in order to optimize the thermal profile of the core layer, the 2D-AES algorithm is implemented in two layers, which gives a computational advantage gained through much shorter TSV communication paths and, hence, further power reduction.

A. 3-D-DWT Architecture

A 3-D image is an extension of 2-D images along the temporal direction. A 3-D-DWT performs a spatial-temporal decomposition along two spatial directions and one temporal direction on the image sequences. The captured image sequences pass through 3D-DWT decomposition and are coded through the embedded block coder. The 3-D-DWT repeats the decomposition of the 1-D DWT. The DWT computation is described by

$$y_L(n) = \sum_{i=0}^{K-1} h(i)\,x(2n-i) \tag{1}$$

$$y_H(n) = \sum_{i=0}^{K-1} g(i)\,x(2n-i) \tag{2}$$

where $y_L(n)$ and $y_H(n)$ are the low- and high-pass subband filter outputs, respectively. The functions $h(i)$ and $g(i)$ represent impulse responses of the low-pass and high-pass filters, respectively. $K$ is the filter length, and $x$ and $y$ are the related input and output signals [30]. Equation (1) describes a filter for the low-frequency range, yielding approximate information, while (2) shows a filter for the high-frequency range, delivering detailed information. The decomposed approximate information is further decomposed into another set of approximate and detailed information with a lower degree of resolution.
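A minimal sketch of one level of 1-D subband analysis follows. It assumes the common textbook form in which each output sample is a filtered, downsampled-by-two version of the input, y(n) = Σ h(i)·x(2n − i), with periodic extension at the boundary. For brevity it uses 2-tap Haar filters rather than the Daubechies 9/7 filters adopted in the paper; the function name `analysis_1d` is hypothetical.

```python
def analysis_1d(x, h, g):
    """One level of 1-D DWT analysis: filter with h (low) and g (high), keep every 2nd sample."""
    n_in = len(x)
    low, high = [], []
    for n in range(n_in // 2):
        # Periodic extension: indices wrap around the signal boundary.
        low.append(sum(h[i] * x[(2 * n - i) % n_in] for i in range(len(h))))
        high.append(sum(g[i] * x[(2 * n - i) % n_in] for i in range(len(g))))
    return low, high

# Haar filters (an assumption for illustration only).
h_haar = [0.5, 0.5]     # averaging: approximate information
g_haar = [0.5, -0.5]    # differencing: detailed information

low, high = analysis_1d([4, 6, 10, 12], h_haar, g_haar)
print(low, high)        # [8.0, 8.0] [-4.0, 2.0]
```

Applying `analysis_1d` again to `low` gives the next, coarser decomposition level, which mirrors the repeated decomposition of the approximate information described above.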

Fig. 8 illustrates one level of 3D-DWT, where the H-pass and the L-pass represent a high-pass filter and a low-pass filter, respectively. The downsampled LLL (1-leveled low-pass signal) component can be decomposed further through multilevel DWT decomposition processes. The LLL component is the low-resolution version of the original image sequence and has most of its features. The levels of image decomposition depend on the system configuration dictated by the desired system performance. Conventional 3D-DWT is inefficient since it requires accessing all of the image frames at the same time and, therefore, a significant amount of memory space is needed to perform the DWT process [31]. The concept of the group of frames (GoF), which is similar to the group of pictures in MPEG, is introduced here to overcome the drawbacks associated with conventional 3-D-DWT. Furthermore, 2-D physical implementation of the 3-D-DWT algorithm has limitations in terms of compression efficiency due to frame access. Therefore, a new architecture based on a two-layered system is introduced which addresses the frame-access issue. The 3-D physical implementation using TSV permits access to all frames on the same time axis, thus providing better data-compression efficiency.
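One level of a separable 3-D decomposition into eight subbands (LLL through HHH) can be sketched as below. This is an illustration under assumptions: it uses averaging/differencing Haar filters instead of the paper's Daubechies 9/7 filters, it orders the subband letters as (time, vertical, horizontal), which may differ from the labeling in Fig. 8, and the name `dwt3d_one_level` is hypothetical.

```python
from itertools import product

def dwt3d_one_level(vol):
    """vol[t][y][x] with even dimensions -> dict of 8 half-size subbands."""
    n_t, n_y, n_x = len(vol), len(vol[0]), len(vol[0][0])
    bands = {
        "".join(name): [[[0.0] * (n_x // 2) for _ in range(n_y // 2)]
                        for _ in range(n_t // 2)]
        for name in product("LH", repeat=3)
    }
    for t, y, x in product(range(n_t // 2), range(n_y // 2), range(n_x // 2)):
        for key in product((0, 1), repeat=3):          # 0 = low-pass, 1 = high-pass
            acc = 0.0
            for dt, dy, dx in product((0, 1), repeat=3):
                # Haar high-pass negates the second sample along that axis.
                sign = -1 if (key[0] * dt + key[1] * dy + key[2] * dx) % 2 else 1
                acc += sign * vol[2 * t + dt][2 * y + dy][2 * x + dx]
            name = "".join("LH"[k] for k in key)
            bands[name][t][y][x] = acc / 8.0
    return bands

# Two 2x2 frames that differ only along the time axis.
frames = [[[1, 1], [1, 1]], [[3, 3], [3, 3]]]
bands = dwt3d_one_level(frames)
print(bands["LLL"], bands["HLL"])   # [[[2.0]]] [[[-1.0]]]
```

For this input all energy lands in LLL and in the temporal-detail band, which illustrates how correlation between adjacent frames is exposed by the third decomposition axis.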

B. DWT Functional Block and Filter Design

The functional block composition used to implement one-level 3-D-DWT is illustrated in Fig. 9, using the algorithm highlighted in Fig. 8. The DWT functional block consists of filter bank-1s (FB-1s), filter bank-2s (FB-2s), and their related interconnections. The nine bank-1 filters downsample along one axis; two bank-2 filters and the next four bank-1 filters then decompose images along the other two axes, respectively. The filter architecture is based on the Daubechies 9/7 filter [31]. The key parameters in the design of filters are the peak signal-to-noise ratio (PSNR) and the occupied chip area. Cao et al. achieved a high level of accuracy in fixed-point implementation by using 13-b coefficients [32]. Our approach is based on a modified version of Cao's filter bank-1, which maps the adder/compressor array into two parallel streams in order to generate the high and the low subband filter outputs simultaneously. Fig. 10 highlights the modified filter bank-1

Fig. 8. One-level 3-D-DWT decomposition where H and L represent a high-pass filter and a low-pass filter, respectively. The LLL component is the low-resolution version of the original image sequence and has most of its features.

Fig. 9. Logical architecture of the 3-D-DWT of filter bank-1 and filter bank-2.

having a serial input. Filter bank-2 is the same as filter bank-1, except that it takes nine pixel data inputs in parallel. The 3-D-DWT decomposes the sequence of images along the time axis, the vertical axis, and the horizontal axis simultaneously. This implies that 81 pixels are processed in three dimensions at a time.

C. Unary-to-Binary Converter

A unary-to-binary (U2B) converter is needed to save data from the CMOS image sensor to the memory layer, which can be implemented as either an SRAM or a memristor-based structure. The proposed U2B converter consists of a controller, register array, and clock source. As shown in Fig. 11, the converted data are temporarily saved in an 8-b register, based on S. Agarwal's flip-flop [33], to adjust timing. The DWT processing utilizes binary computation. However, the binary computation can be replaced with unary system processing [34], [35].
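The behavior of a counter-based unary-to-binary conversion can be sketched as follows. This is a behavioral model only, assuming one unary sample per clock tick and a counter value that is latched at the single "0" to "1" transition of the pixel output; the function name `unary_to_binary` and the stream format are hypothetical and do not describe the register-level design of Fig. 11.

```python
def unary_to_binary(stream):
    """Return the clock count at the first 0 -> 1 transition of a unary stream."""
    previous = 0
    for count, bit in enumerate(stream):
        if previous == 0 and bit == 1:
            return count          # value latched into the register
        previous = bit
    return len(stream)            # no transition seen within the window

stream = [0, 0, 0, 1, 1, 1, 1, 1]          # pixel output flips on the fourth tick
code = unary_to_binary(stream)
print(code, format(code, "08b"))           # 3 00000011
```

For an 8-b pixel depth the window would span 256 ticks; the short stream here is used only to keep the example readable.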

D. 3-D-AES-Based Image Encryption

The medical images are encrypted with the block cipher algorithm AES, which has a 128-b input data block with a cipher key of length 128, 192, or 256 b [19]. The block cipher can


Fig. 10. Modified filter bank-1 design. The adder/compressor array is divided into two parallel structures in order for the high and the low subband filter outputs to be generated simultaneously.

Fig. 11. Logical architecture for the unary-to-binary converter.

perform encipher and decipher operations using the repeated operation of a substitution-permutation network (SPN) on 128 b of data. Each time the SPN is used, it is supplied with a different round key. These are generated by a function known as key expansion. The first round comprises a 128-b XOR of the plaintext with the key to form a new 128-b state. Each middle round operates on the state by performing four operations: sub-bytes, shift-rows, mix-columns, and add-round-key. In the decryption procedure, the order of transformations is reversed. Mix-columns and inverse mix-columns are not included in the last round of the function [19]. Fig. 12 shows the block diagram for AES [36].
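The round structure described above can be written out as a simple schedule. The sketch below lists only the order of transformations for encryption and does not implement the cipher; the round counts (10, 12, or 14 for 128-, 192-, or 256-b keys) follow the AES standard, and the function name `encryption_schedule` is hypothetical.

```python
ROUNDS_BY_KEY_BITS = {128: 10, 192: 12, 256: 14}

def encryption_schedule(key_bits):
    """Return the list of transformations applied in each AES encryption step."""
    n_rounds = ROUNDS_BY_KEY_BITS[key_bits]
    schedule = [["AddRoundKey"]]                         # initial XOR with the key
    for _ in range(n_rounds - 1):                        # middle rounds
        schedule.append(["SubBytes", "ShiftRows", "MixColumns", "AddRoundKey"])
    schedule.append(["SubBytes", "ShiftRows", "AddRoundKey"])  # last round: no MixColumns
    return schedule

for step, operations in enumerate(encryption_schedule(128)):
    print(step, " -> ".join(operations))
```

Each entry consumes one round key, so a 128-b key needs 11 round keys from key expansion.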

The 3-D-AES is implemented in two layers, reducing the data-path length. The functional blocks in the upper and bottom layers are partitioned in accordance with their area utilization and power density. In this architecture, the "MixColumn" multiplier and the "S-box 1" have approximately five times higher power density than other functional blocks [36]. Therefore, the MixColumn multiplier and the "S-box 1" are distributed through two layers and positioned so that they are

Fig. 12. Logical architecture for the 3-D-AES functional block.

not in the proximity of neighboring blocks that have high power density.

V. FUNCTIONAL MODULE SIMULATIONS AND PERFORMANCE ANALYSIS

In this section, we present simulation results and analyze the performance of the individual modules to be implemented in the proposed 3-D biomedical imager.

A. Pixel Dynamic Range and Power Evaluation

The inverter-based pixel structure was designed using Samsung semiconductor 0.13-µm CMOS technology. Fig. 13 shows the relationship between the photodiode normalized capacitance with respect to sensitivity (V/sec-lux) and effective photoreceptor area (µm²). A set of active pixel sensors with different photodiode dimensions and a 4-µm pixel pitch was simulated. The cell areas were varied between 1 and 6.25 µm², and the photodiode capacitances, which are related to the accumulated charge at each pixel, were noted. Fig. 13 plots the normalized capacitance for each pixel together with the sensitivity of the pixel. As the photodiode area increases, sensitivity decreases and more effective charge becomes available. However, there is a highly nonlinear relationship between the photodiode junction capacitance and photodiode area when there is relatively low sensitivity and a high fill factor (effective photoreceptor area/total pixel area).

The power analysis for the single-inverter pixel was also carried out using the same technology. Simulations show that the average power consumption to capture a frame (where the total photocurrent varies from 10 pA to 10 nA across the 128 × 128 sensor) is 1.36 mW. Fig. 14 also shows the delay between the triggering of the integration time and the switching point at the output of the inverter. The delay decreases as the light intensity increases, and an adaptive approach to adjusting the integration time will significantly help to reduce the power consumption of the pixel.

B. CIS Readout Architectures and Related Analysis

There are several options for the implementation of the readout part of the CIS. In El-Desouki's work [37], readout is classified in terms of pixel-by-pixel ADC (PBP-ADC), per-column ADC (PC-ADC), and per-pixel ADC (PP-ADC). Fig. 15 highlights these three different architectures of CIS. Their respective frame rates (FR) in each case can be modeled


Fig. 13. Relationship between the photodiode-normalized capacitance with respect to sensitivity and effective photodiode area.

Fig. 14. Delay between the starting point of the integration time and the inverter's output switching point as a function of photocurrent. The plotted quantities are the total current that passes through the photodiode, the photocurrent, the dark current, the integration time (here, its triggering point), and the inverter's output.

by

(3)

(4)

(5)

where the model parameters are the number of rows and the number of columns in the pixel array, the time necessary for the ADC to complete one conversion, the time required by the chip I/O to send out the converted digital result, the number of digital bits, and the number of parallel outputs [37], [38].

In our proposed CIS architecture, corresponds to. The remaining parameters are kept the same for

simulations. If unary computation is considered for the CIS

Fig. 15. Different readout architectures of CIS. (a) Pixel-by-pixel ADC (PBP-ADC). (b) Per-column ADC (PC-ADC). (c) Per-pixel ADC (PP-ADC) architectures.

then, the frame rate (FR) is modified to

Proposed (6)

Thus, the FR of readout for the proposed architectures can be estimated as the resolution increases. This is illustrated in Fig. 16, where three of the model parameters are fixed at 2 ms, 50 MHz, and 32, respectively. The bandwidth of the output I/O bus is assumed to be 32 b for the purpose of comparison with other architectures. The results shown in Fig. 16 indicate that for high-resolution imaging, that is, higher than 10 K, the unary architecture performs better than the conventional approach. Thus, our proposed inverter-based architecture can sustain a higher frame rate at high resolution than the conventional architecture.

C. Dynamic Power Estimation for TSV Data Transition

Since TSV interconnection plays an important role in the proposed architecture, we address the issue of dynamic power dissipation in this section. The conventional CIS outputs image data in the binary format at the output of the A/D converter, while the inverter-based CMOS image sensor outputs image data in the unary format. As a preprocessor, we employ a data format converter that converts data from unary to binary at the front stage of the DWT using a 256-b counter. When the unary data changes from "0" to "1," the controller writes a count value onto the registers.

The dynamic power dissipation is expressed as

$$P_{dyn} = \alpha\, C_L\, V_{DD}^{2}\, f \tag{7}$$

Fig. 16. Relationship between frame rate and resolution of different CIS readout architectures. The readout architecture is classified in terms of pixel-by-pixel ADC (PBP-ADC), per-column ADC (PC-ADC), and per-pixel ADC (PP-ADC), also showing the corresponding proposed inverter-based architecture with unary computation domain.

TABLE I. PHYSICAL DIMENSION AND CHARACTERISTICS OF TSV

where $C_L$ is the load capacitance of the TSV, $V_{DD}$ is the operating voltage, $f$ is the frame rate, and $\alpha$ is the transition probability. Table I shows the physical dimension and characteristics of the TSV based on Marchal et al. [7], which are used for the dynamic power analysis. A TSV with a 5-µm diameter has a capacitance of 239.5 fF and is chosen to carry out the simulation work. The operating voltage and the frame rate are 1.2 V and 50 MHz, respectively. Data-format transitions of the binary format differ from those of the unary format. For transmitting 8-b data in binary, the worst-case number of transitions is 8, while in unary the transition occurs, at most, once during data transmission.
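The TSV figures quoted above can be combined into a rough per-via power estimate. The sketch assumes the standard switching-power relation P = α·C·V²·f, with α the transition probability; whether the paper's expression includes an additional constant factor cannot be confirmed from the text, so the absolute numbers are indicative only. The function names are hypothetical.

```python
def dynamic_power(c_load, v_dd, freq, alpha):
    """Switching power in watts, assuming P = alpha * C * V^2 * f."""
    return alpha * c_load * v_dd ** 2 * freq

def bit_transitions(previous_word, next_word):
    """Number of bit lines that toggle between two successive binary words."""
    return bin(previous_word ^ next_word).count("1")

C_TSV = 239.5e-15    # F, 5-um diameter TSV (from the text)
V_DD = 1.2           # V (from the text)
FREQ = 50e6          # Hz (from the text)

worst_case = dynamic_power(C_TSV, V_DD, FREQ, alpha=1.0)
print(f"per-TSV power at alpha = 1: {worst_case * 1e6:.2f} uW")   # 17.24 uW
print("binary worst case toggles:", bit_transitions(0x00, 0xFF))  # 8
```

In a binary bus up to eight lines can toggle for one 8-b sample, whereas a unary stream toggles at most once, which is the basis of the comparison in this section.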

In order to simulate the behavior of the TSV and estimate its dynamic power dissipation, (7) is employed and two medical images (MRI and CT) are adopted for simulation. In addition, two nonmedical images (Stefan and Container) in the QCIF (176 × 144) format with 64 frames, for both the binary and unary data formats, are also included. Stefan shows higher dynamic power dissipation than the medical images since its image content changes dynamically. The unary format maintains constant power dissipation as the data switches only once. The simulation results, plotted as dynamic power versus frame number, are shown in Fig. 17.
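As a rough numerical illustration of this estimate, the sketch below evaluates the standard dynamic-power relation (transition probability × load capacitance × voltage squared × frequency) with the TSV values quoted in this section (239.5 fF, 1.2 V, 50 MHz) and counts bit transitions between successive 8-b binary values. The function names are hypothetical, and deriving the transition probability from the Hamming distance between consecutive samples is an assumption; the text does not state how the simulation obtained it.

```python
# Sketch of the TSV dynamic-power estimate under stated assumptions.
C_TSV = 239.5e-15   # load capacitance of one 5-um TSV, in farads
V_DD = 1.2          # operating voltage, in volts
F_OP = 50e6         # rate quoted in the text, in hertz


def dynamic_power(alpha, c_load=C_TSV, v_dd=V_DD, freq=F_OP):
    """Dynamic power of one TSV line, in watts, for transition probability alpha."""
    return alpha * c_load * v_dd ** 2 * freq


def binary_transitions(prev, curr):
    """Number of the 8 binary lines that toggle between two 8-b values."""
    return bin((prev ^ curr) & 0xFF).count("1")


def mean_binary_activity(values):
    """Average fraction of the 8 lines toggling per transfer (assumed alpha)."""
    pairs = list(zip(values, values[1:]))
    if not pairs:
        return 0.0
    toggles = sum(binary_transitions(a, b) for a, b in pairs)
    return toggles / (8 * len(pairs))


if __name__ == "__main__":
    # Worst case for binary: every line toggles on every transfer.
    print(dynamic_power(1.0))                  # about 1.72e-05 W per line
    print(binary_transitions(0x7F, 0x80))      # all 8 lines toggle
    print(mean_binary_activity([0, 255, 0]))   # activity of 1.0
```

For the unary format, the line toggles at most once per transmitted value regardless of the data, so under the same assumptions its activity does not depend on image content.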

D. Comparison Between 2-D and 3-D-DWT Implementation

Fig. 17. Dynamic power dissipation for TSV using two medical images (MRI and CT) and two nonmedical images (Stefan and Container) for the binary and unary implementations. Separate plot markers denote the binary and unary simulations.

The sample MRI and CT medical images shown in Fig. 18 are the original and reconstructed test images that were adopted for simulations. The DWT images were compressed with quantization and embedded block coding [39]. Raw data for all cases is formatted as UXGA (1600 × 1200) with 8-b depth and 64 frames. The PSNRs and the related mean-square error (MSE) for 2-D and 3-D-DWT are modeled by (8) and (9) [40]

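$$\mathrm{PSNR} = 10 \log_{10}\!\left(\frac{255^{2}}{\mathrm{MSE}}\right) \tag{8}$$

$$\mathrm{MSE} = \frac{1}{N F}\sum_{k=1}^{F}\sum_{i=1}^{N}\left(x_{k,i} - \hat{x}_{k,i}\right)^{2} \tag{9}$$

where MSE is the quadratic average difference between the original and the restored images, $x_{k,i}$ and $\hat{x}_{k,i}$ are the original and restored values of pixel $i$ in frame $k$, $N$ represents the number of pixels in a frame, and $F$ is the number of frames. A comparison between several approaches, ranging from lossless to near-lossless, is shown in Table II. The 3-D approach for the medical test MRI and CT images offers approximately 20% improvement in PSNR over the corresponding 2-D compression when the compression ratio is kept at 2:1.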

The compression ratio has been calculated as the ratio between the number of bits occupied by the unpacked image and the number of bits in the compressed image. Fig. 19 shows the PSNR comparison between 2-D and 3-D-DWT for Ultra eXtended Graphics Array (UXGA, 1600 × 1200) MRI and CT images with 64 frames. The PSNR for 3-D-DWT is 61.0 dB, which is higher than the 51.2 dB of the corresponding 2-D-DWT under the 2:1 compression ratio. The “LLL” component carries the largest amount of image information. The 3-D-DWT allows a more aggressive compression to take place while retaining the “LLL” impact on PSNR.
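The quality and compression metrics used in this comparison can be sketched as follows. The code assumes the usual definitions: MSE averaged over every pixel of every frame, PSNR computed against a peak value of 255 for 8-b data, and the compression ratio as raw bits over compressed bits. The function names and the representation of each frame as a flat list of pixel values are illustrative choices, not taken from the paper.

```python
import math


def mse_3d(original, restored):
    """Mean-square error over all pixels of all frames.

    Each argument is a list of frames; each frame is a flat list of pixels.
    """
    total = 0
    count = 0
    for frame_o, frame_r in zip(original, restored):
        for a, b in zip(frame_o, frame_r):
            total += (a - b) ** 2
            count += 1
    return total / count


def psnr(mse, peak=255):
    """Peak signal-to-noise ratio in dB (peak of 255 assumes 8-b depth)."""
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)


def compression_ratio(raw_bits, compressed_bits):
    """Bits in the unpacked image divided by bits in the compressed image."""
    return raw_bits / compressed_bits


if __name__ == "__main__":
    original = [[10, 20], [30, 40]]   # two tiny frames, two pixels each
    restored = [[10, 22], [30, 40]]
    error = mse_3d(original, restored)
    print(error, psnr(error))
    raw = 1600 * 1200 * 8 * 64        # UXGA, 8-b depth, 64 frames
    print(compression_ratio(raw, raw // 2))
```

With the figures reported above, the relative gain of 3-D-DWT over 2-D-DWT is (61.0 − 51.2) / 51.2, or roughly 19%, consistent with the stated improvement of approximately 20%.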

E. Power Estimation for 3-D Implementation

We designed a 128 × 128 CMOS image sensor pixel array with the proposed single-inverter architecture. We estimate the power of the CIS, U2B, memory, 3-D-DWT, and AES blocks. The average power consumption of the CIS is 1.36 mW at 200 MHz with a 1.2-V supply. For the 3-D-DWT, we employed Cao’s filter architecture [32], which has a 9/7 tap filter that consists of 27 adders. In this design, we replaced the adders with Kavehei’s circuit [44], which has a power consumption on the order of 2.2 mW. The 3-D-AES is based on Panu’s architecture [36], which consumes 5.6 mW at 152 MHz. Table III shows the estimated power consumption of the functional blocks of our proposed 3-D architecture. All power estimations are simulated using Samsung semiconductor 0.13-μm standard CMOS technology and 1.2 V.

Fig. 18. Test images [UXGA (1600 × 1200) with 8-b depth and 64 frames] of the (a) original CT and MRI and (b) CT and MRI reconstructed by the proposed algorithm.

TABLE II PERFORMANCE COMPARISON OF THE PROPOSED ARCHITECTURE AND EXISTING METHODS IN TERMS OF COMPRESSION RATIO AND AVERAGE PSNR

VI. CONCLUSION

This paper presents an SoS architecture that targets biomedical imaging and secure transmission over various communication networks. Multiple chips in conventional systems are integrated into a consolidated 3-D structure, employing TSV technology. Individual dies can be fabricated with optimal Si process technology suitably adapted to targeted applications.

Fig. 19. PSNR comparison result of the 2-D and 3D-DWT for (a) MRI and (b)CT images.

TABLE III POWER ESTIMATION FOR THE PROPOSED ARCHITECTURE

The architecture yields several advantages over the conventional single-die approach. High performance and low power are among the major potential advantages of this multilayered structure. Speed improvement is achieved due to the shorter interconnects. The system dissipates less power because power-hungry interchip I/O circuitry is replaced with inter-die land-TSV structures that need no signal amplification. The load of each circuit is much smaller than that of conventional I/O ports and, therefore, the power consumption is lower. Several options for the readout part of the CIS were studied. The results derived from simulations indicate that for high-resolution applications, the proposed CIS architecture based on unary computation shows better performance than conventional approaches. Furthermore, the proposed 3-D architecture can maintain a high resolution of over 10 million pixels at a relatively high frame rate. The comparison between 2-D and 3-D architectures for MRI and CT images showed that the 3-D implementation provides an improvement in PSNR of about 20% over the corresponding 2-D compression. The 3-D integration is perhaps the best available option to continue with Moore’s law.

ACKNOWLEDGMENT

The authors would like to thank iDataMap Pty Ltd. for their contribution.


REFERENCES

[1] A. Wegener, “Compression of medical sensor data [Exploratory DSP],” IEEE Signal Process. Mag., vol. 27, no. 4, pp. 125–130, Jul. 2010.

[2] D. Clunie, DICOM Standard 2009. [Online]. Available: http://www.dclunie.com/dicom-status/status.html

[3] D. Dhouib, A. Nait-Ali, C. Olivier, and M. S. Naceur, “Performance evaluation of wavelet based coders on brain MRI volumetric medical datasets for storage and wireless transmission,” Int. J. Biol., Biomed. Med. Sci., vol. 3, pp. 147–156, 2008.

[4] F. Catthoor, N. D. Dutt, and C. E. Kozyrakis, “How to solve the current memory access and data transfer bottlenecks: At the processor architecture or at the compiler level,” in Proc. Conf. Des., Autom. Test Eur., New York, 2000, pp. 426–435.

[5] International Technology Roadmap for Semiconductors (ITRS) Interconnect, 2008. [Online]. Available: http://public.itrs.net

[6] E. Beyne, “3D Interconnection and packaging: Impending reality or still a dream?,” in Proc. IEEE Int. Solid-State Circuits Conf., 2004, vol. 1, pp. 138–139.

[7] P. Marchal, B. Bougard, G. Katti, M. Stucchi, W. Dehaene, A. Papanikolaou, D. Verkest, B. Swinnen, and E. Beyne, “3-D technology assessment: Path-finding the technology/design sweet-spot,” Proc. IEEE, vol. 97, no. 1, pp. 96–107, Jan. 2009.

[8] K. Eshraghian, RadCard (DICOM Datacard) iDataMap, 2010. [Online]. Available: http://www.idatamap.com

[9] M. Bamal, S. List, M. Stucchi, A. Verhulst, M. Van Hove, R. Cartuyvels, G. Beyer, and K. Maex, “Performance comparison of interconnect technology and architecture options for deep submicron technology nodes,” in Proc. Int. Interconnect Technol. Conf., 2006, pp. 202–204.

[10] V. F. Pavlidis and E. G. Friedman, “Interconnect-based design methodologies for three-dimensional integrated circuits,” Proc. IEEE, vol. 97, no. 1, pp. 123–140, Jan. 2009.

[11] I. Chowdhury, R. Prasher, K. Lofgreen, G. Chrysler, S. Narasimhan, R. Mahajan, D. Koester, R. Alley, and R. Venkatasubramanian, “On-chip cooling by superlattice-based thin-film thermoelectric,” Nature Nanotechnol., vol. 4, pp. 235–238, 2009.

[12] J. A. Davis, R. Venkatesan, A. Kaloyeros, M. Beylansky, S. J. Souri, K. Banerjee, K. C. Saraswat, A. Rahman, R. Reif, and J. D. Meindl, “Interconnect limits on gigascale integration (GSI) in the 21st century,” Proc. IEEE, vol. 89, no. 3, pp. 305–324, Mar. 2001.

[13] G. Metze, M. Khbels, N. Goldsman, and B. Jacob, “Heterogeneous Integration,” Tech Trend Notes, vol. 12, no. 2, p. 3, Sep. 2003.

[14] R. S. Patti, “Three-dimensional integrated circuits and the future of system-on-chip designs,” Proc. IEEE, vol. 94, no. 6, pp. 1214–1224, Jun. 2006.

[15] S. Gupta, M. Hilbert, S. Hong, and R. Patti, “Techniques for producing 3D ICs with high-density interconnect,” presented at the 21st Int. VLSI Multilevel Interconnection Conf., Waikoloa Beach, HI, Sep. 2004.

[16] M. Koyanagi, T. Fukushima, and T. Tanaka, “High-density through silicon vias for 3-D LSIs,” Proc. IEEE, vol. 97, no. 1, pp. 49–59, Jan. 2009.

[17] T. Miyoshi, Y. Arai, M. Hirose, R. Ichimiya, Y. Ikemoto, T. Kohriki, T. Tsuboyama, and Y. Unno, “Performance study of SOI monolithic pixel detectors for X-ray application,” Nucl. Instrum. Meth. Phys. Res. Section A: Accel., Spectrometers, Detectors Assoc. Equip., Apr. 2010, to be published.

[18] R. M. Jiang and D. Crookes, “Area-efficient high-speed 3D-DWT processor architecture,” Electron. Lett., vol. 43, no. 9, 2007.

[19] Advanced Encryption Standard (AES), National Institute of Standard and Technology (NIST), FIPS PUB 197, Nov. 2001, Fed. Inf. Process. Std. Publ.

[20] S. Xue and B. Oelmann, “Unary-prefixed encoding of lengths of consecutive zeros in bit vector,” Electron. Lett., vol. 41, no. 6, pp. 346–347, Mar. 2005.

[21] O. Kavehei, A. Iqbal, Y. S. Kim, K. Eshraghian, S. F. Al-Sarawi, and D. Abbott, “The fourth element: Characteristics, modelling and electromagnetic theory of the memristor,” Proc. Roy. Soc. A: Math., Phys. Eng. Sci., vol. 466, no. 2120, pp. 2175–2202, 2010.

[22] J. W. Joyner, P. Zarkesh-Ha, and J. D. Meindl, “Global interconnect design in a three-dimensional system-on-a-chip,” IEEE Trans. Very Large Scale Integr. (VLSI) Syst., vol. 12, no. 4, pp. 367–372, Apr. 2004.

[23] C. Posch, D. Matolin, and R. Wohlgenannt, “A QVGA 143 dB dynamic range asynchronous address-event PWM dynamic image sensor with lossless pixel-level video compression,” in Proc. IEEE Int. Solid-State Circuits Conf. Dig. Tech. Papers, Feb. 7–11, 2010, pp. 400–401.

[24] F. Luisier, C. Vonesch, T. Blu, and M. Unser, “Fast interscale wavelet denoising of Poisson-corrupted images,” Signal Process., vol. 90, no. 2, pp. 415–427, Feb. 2010.

[25] S. H. Yang and K. R. Cho, “High dynamic range CMOS image sensor with conditional reset,” in Proc. IEEE Custom Integrated Circuits Conf., 2002, pp. 265–268.

[26] D. X. D. Yang, A. El Gamal, B. Fowler, and H. Tian, “A 640 × 512 CMOS image sensor with ultrawide dynamic range floating-point pixel-level ADC,” IEEE J. Solid-State Circuits, vol. 34, no. 12, pp. 1821–1834, Dec. 1999.

[27] Z. Milin and A. Bermak, “Compressive acquisition CMOS image sensor: From the algorithm to hardware implementation,” IEEE Trans. Very Large Scale Integr. (VLSI) Syst., vol. 18, no. 3, pp. 490–500, Mar. 2010.

[28] R. M. Jiang and D. Crookes, “FPGA implementation of 3D discrete wavelet transform for real-time medical imaging,” in Proc. Circuit Theory and Design, Aug. 2007, pp. 519–522.

[29] H. Y. Yoo, K. Lee, and B. D. Kwon, “Implementation of 3D discrete wavelet scheme for space-borne imagery classification and its application,” in Proc. IEEE Int. Geoscience and Remote Sensing Symp., Jul. 23–28, 2007, pp. 3437–3440.

[30] T. Huang, P. C. Tseng, and L. G. Chen, “Flipping structure: An efficient VLSI architecture for lifting-based discrete wavelet transform,” IEEE Trans. Signal Process., vol. 52, no. 4, pp. 1080–1089, Apr. 2004.

[31] O. Fatemi and S. Bolouki, “Pipeline, memory-efficient and programmable architecture for 2D discrete wavelet transform using lifting scheme,” in Proc. Inst. Elect. Eng., Circuits, Devices and Systems, Dec. 2005, vol. 9, pp. 703–708.

[32] X. Cao, Q. Xie, C. Peng, Q. Wang, and D. Yu, “An efficient VLSI implementation of distributed architecture for DWT,” in Proc. IEEE 8th Workshop Multimedia Signal Processing, Oct. 2006, pp. 364–367.

[33] S. Agarwal, P. Ramanathan, and P. T. Vanathi, “Comparative analysis of low power high performance flip–flops in the 0.13 μm technology,” in Proc. Int. Conf. Advanced Computing and Communications, Dec. 18–21, 2007, pp. 209–213.

[34] Y. K. Hong, K. T. Kim, Y. G. Kim, Y. H. Kim, K. R. Cho, T. W. Cho, Y. You, and K. Eshraghian, “Three-dimensional discrete wavelet transform (DWT) based on unary arithmetic,” in Proc. 14th World Multi-Conf. Systemics, Cybernetics and Informatics, Orlando, FL, 2010, vol. 1, pp. 30–33.

[35] S. Y. Kim, K. T. Kim, K. R. Cho, T. W. Cho, and Y. You, “Unary computation for biomedical data,” in Proc. 14th World Multi-Conf. Systemics, Cybernetics and Informatics, Orlando, FL, 2010, vol. 1, pp. 34–38.

[36] H. Panu, A. Timo, H. Marko, and D. H. Timo, “Design and implementation of low-area and low-power AES encryption hardware core,” in Proc. 9th EUROMICRO Conf. Digital System Design, 2006, pp. 577–583.

[37] M. El-Desouki, M. J. Deen, Q. Fang, L. Liu, F. Tse, and D. Armstrong, “CMOS image sensors for high speed applications,” in Sensors (Peterboroug 2009), Jan. 2009, vol. 9, pp. 430–444.

[38] S. Chen, F. Boussaid, and A. Bermak, “Robust intermediate read-out for deep submicron technology CMOS image sensors,” IEEE Sensors J., vol. 8, no. 3, pp. 286–294, Mar. 2008.

[39] K. Mei, N. Zheng, C. Huang, Y. Liu, and Q. Zeng, “VLSI design of a high-speed and area-efficient JPEG2000 encoder,” IEEE Trans. Circuits Syst. Video Technol., vol. 17, no. 8, pp. 1065–1078, Aug. 2007.

[40] A. B. Watson, “What’s wrong with mean-squared error?,” in Digital Images and Human Vision. Cambridge, MA: MIT Press, 1993, pp. 207–220.

[41] S. Chokchaitam, M. Iwahashi, and S. Jitapunkul, “A new unified lossless/lossy image compression based on a new integer DCT,” IEICE Trans. Inf. Syst., vol. E88-D, no. 7, pp. 1598–1606, Jul. 2005.

[42] Y. Xie, X. Tang, and M. Sun, “Image compression based on classification row by row and LZW encoding,” in Proc. Congr. Image and Signal Processing, May 27–30, 2008, vol. 1, pp. 617–621.

[43] V. Sanchez, P. Nasiopoulos, and R. Abugharbieh, “Efficient lossless compression of 4-D medical images based on the advanced video coding scheme,” IEEE Trans. Inf. Technol. Biomed., vol. 12, no. 4, pp. 442–446, Jul. 2008.

[44] O. Kavehei, M. R. Azghadi, K. Navi, and A. P. Mirbaha, “Design of robust and high-performance 1-bit CMOS full adder for nanometer design,” in Proc. IEEE Computer Soc. Annu. Symp. VLSI, Apr. 7–9, 2008, pp. 10–15.


Sang-Jin Lee (S’10) received the B.S. degree in chemical engineering and the M.S. degree in information and communication engineering from the Chungbuk National University, Cheongju, Korea, in 2008 and 2010, respectively, where he is currently pursuing the Ph.D. degree.

His current research interests include the design of multilayer system-on-systems technology, complementary metal–oxide semiconductor image sensors, as well as cryptographic and embedded systems.

Mr. Lee is a member of the World Class University (WCU) Program at Chungbuk National University, Korea.

Omid Kavehei (S’05) received the M.S. degree in computer systems engineering from the National University of Iran (Shahid Beheshti University), Tehran, Iran, in 2005 and is currently pursuing the Ph.D. degree in electrical and electronic engineering at the University of Adelaide, Adelaide, Australia.

Since 2009, he has been a Visiting Scholar at Chungbuk National University (CBNU), South Korea. His research interests include emerging nonvolatile memory systems and bio-inspired information processing.

Dr. Kavehei received an Endeavor International Postgraduate Research Scholarship from the Australian Government. He was a recipient of the D. R. Stranks Travelling Fellowship, Simon Rockliff (DSTO) Scholarship, Research Abroad Scholarship, and the World Class University (WCU) Program Research Scholarships.

Yoon-Ki Hong received the B.S. degree in electronic engineering from the Chungbuk National University, Cheongju, Korea, in 2009, where he is currently pursuing the M.S. degree.

His current research interests include discrete wavelet transform and circuit design. He is also a member of the World Class University (WCU) Program at Chungbuk National University, Korea.

Tae Won Cho (M’92) received the B.S. degree in electronic engineering from Seoul National University, Seoul, Korea, in 1973, the M.S. degree in electrical engineering from the University of Louisville, Louisville, KY, in 1986, and the Ph.D. degree in electrical engineering from the University of Kentucky in 1992.

From 1973 to 1983, he was with Gold Star, Korea. Since 1992, he has been a Professor at the College of Electrical and Computer Engineering, Chungbuk National University, Chungbuk, South Korea. Also, he is a representative of the Research Institute of Ubiquitous Bio-Information Technology and CEO of Youbicom. His research interests are very-large scale integrated design, low-power circuits, computer system architecture, and embedded systems.

Dr. Cho is a member of the Institute of Electronics Engineers of Korea.

Younggap You (M’81) received the B.S. degree in electronic engineering from Sogang University, Seoul, Korea, in 1975, and the M.S. and Ph.D. degrees in electrical engineering from the University of Michigan, Ann Arbor, in 1981 and 1986, respectively.

From 1975 to 1979, he was with the Agency for Defense Development, Korea, where he was involved in high-speed logic design. He was a Principal Engineer at LG Semiconductor Inc. (now Hynix Semiconductor Inc.) from 1986 through 1988. Currently, he is a Professor and Dean of the College of Electrical and Computer Engineering at Chungbuk University, Cheongju, Korea. His research interests are fault-tolerant computing, cryptography, silicon die-stacking architectures, and systems testing.

Dr. You is a member of the World Class University Program of the Chungbuk University. He has 25 patents, and authored five technical books, including Fundamentals of DRAM Design and Analysis (2004). He is a member of The Institute of Electronics Engineers of Korea.

Kyoungrok Cho (S’89–M’92) received the B.S. degree in electronic engineering from Kyoungpook National University, Taegu, Korea, in 1977, and the M.S. and Ph.D. degrees in electrical engineering from the University of Tokyo, Tokyo, Japan, in 1989 and 1992, respectively.

From 1979 to 1986, he was with the TV research center of LG Electronics, Korea. Currently, he is a Professor in the College of Electrical and Computer Engineering, Chungbuk National University, Korea. His research interests are in the field of high-speed and low-power circuit design, system-on-a-chip platform design for communication systems, prospective complementary metal–oxide semiconductor image sensors, memristor-based circuits, and the design of multilayer system-on-systems technology. Currently, he is Director of the World Class University (WCU) Program at Chungbuk National University, Korea. He is also a Director of the IC Design Education Center at the Chungbuk National University. During 1999 and 2006, he spent two years at Oregon State University as a Visiting Scholar.

Prof. Cho was the recipient of The Institute of Electronics Engineers of Korea (IEEK) award in 2004. He is a member of The IEEK.

Kamran Eshraghian received the B.Tech., M.Eng.Sc., and Ph.D. degrees from the University of Adelaide, Adelaide, South Australia, and the Dr.-Ing. e.h. degree from the University of Ulm, Germany.

He is best known in the international arena as being one of the fathers of complementary metal–oxide semiconductor very-large scale integrated (VLSI) systems, having influenced two generations of researchers in academia and industry. In 1979, he joined the Department of Electrical and Electronic Engineering at the University of Adelaide, after spending about ten years with Philips Research, both in Australia and Europe. In 1994, he was invited to take up the Foundation Chair of Computer, Electronics and Communications Engineering in Western Australia, and became Head of the School of Engineering and Mathematics, and Distinguished University Professor, and subsequently became the Director of the Electron Science Research Institute. In 2004, he became Founder/President of Elabs as part of his vision for horizontal integration of nanoelectronics with those of bio- and photon-based technologies, thus creating a new design domain for system-on-system integration. Currently, he is the President of Innovation Labs and is the Chairman of the Board of Directors of two high-technology companies. In 2007, he was visiting Professor of Engineering and the holder of the inaugural Ferrero Family Chair in Electrical Engineering at UC Merced prior to his move in 2009 to Chungbuk National University, Korea, as Distinguished Professor, World Class University (WCU) program. He has co-authored six textbooks and has lectured widely in VLSI and multitechnology systems. He has founded six high-technology companies, providing intimate links between university research and industry.

Prof. Eshraghian is a Fellow and Life Member of the Institution of Engineers, Australia. In 2004, he was awarded for his research into the integration of nanoelectronics with that of lightwave technology.