3 Basic Properties of Matrices

Transcript of 3 Basic Properties of Matrices

3

Basic Properties of Matrices

In this chapter, we build on the notions introduced on page 5, and discussa wide range of basic topics related to matrices with real elements. Some ofthe properties carry over to matrices with complex elements, but the readershould not assume this. Occasionally, for emphasis, we will refer to “real”matrices, but unless it is stated otherwise, we are assuming the matrices arereal.

The topics and the properties of matrices that we choose to discuss aremotivated by applications in the data sciences. In Chapter 8, we will considerin more detail some special types of matrices that arise in regression analysisand multivariate data analysis, and then in Chapter 9 we will discuss somespecific applications in statistics.

3.1 Basic Definitions and Notation

It is often useful to treat the rows or columns of a matrix as vectors. Termssuch as linear independence that we have defined for vectors also apply torows and/or columns of a matrix. The vector space generated by the columnsof the n×m matrix A is of order n and of dimension m or less, and is calledthe column space of A, the range of A, or the manifold of A. This vector spaceis denoted by

V(A)

orspan(A).

(The argument of V(·) or span(·) can be either a matrix or a set of vectors.Recall from Section 2.1.3 that if G is a set of vectors, the symbol span(G)denotes the vector space generated by the vectors in G.) We also define therow space of A to be the vector space of order m (and of dimension n orless) generated by the rows of A; notice, however, the preference given to thecolumn space.

52 3 Basic Properties of Matrices

Many of the properties of matrices that we discuss hold for matrices withan infinite number of elements, but throughout this book we will assume thatthe matrices have a finite number of elements, and hence the vector spacesare of finite order and have a finite number of dimensions.

Similar to our definition of multiplication of a vector by a scalar, we definethe multiplication of a matrix A by a scalar c as

cA = (caij).

The aii elements of a matrix are called diagonal elements; an elementaij with i < j is said to be “above the diagonal”, and one with i > j issaid to be “below the diagonal”. The vector consisting of all of the aii’s iscalled the principal diagonal or just the diagonal. The elements ai,i+ck

arecalled “codiagonals” or “minor diagonals”. If the matrix has m columns, theai,m+1−i elements of the matrix are called skew diagonal elements. We useterms similar to those for diagonal elements for elements above and belowthe skew diagonal elements. These phrases are used with both square andnonsquare matrices. The diagonal begins in the first row and first column(that is, a11), and ends at akk, where k is the minimum of the number of rowsand the number of columns.

If, in the matrix A with elements aij for all i and j, aij = aji, A is saidto be symmetric. A symmetric matrix is necessarily square. A matrix A suchthat aij = −aji is said to be skew symmetric. The diagonal entries of a skewsymmetric matrix must be 0. If aij = aji (where a represents the conjugateof the complex number a), A is said to be Hermitian. A Hermitian matrix isalso necessarily square, and, of course, a real symmetric matrix is Hermitian.A Hermitian matrix is also called a self-adjoint matrix.

Diagonal, Hollow, and Diagonally Dominant Matrices

If all except the principal diagonal elements of a matrix are 0, the matrix iscalled a diagonal matrix. A diagonal matrix is the most common and mostimportant type of sparse matrix. If all of the principal diagonal elements of amatrix are 0, the matrix is called a hollow matrix. A skew symmetric matrixis hollow, for example. If all except the principal skew diagonal elements of amatrix are 0, the matrix is called a skew diagonal matrix.

An n×m matrix A for which

|aii| >m∑

j 6=i

|aij| for each i = 1, . . . , n (3.1)

is said to be row diagonally dominant; one for which |ajj| >∑n

i 6=j |aij| for eachj = 1, . . . , m is said to be column diagonally dominant. (Some authors referto this as strict diagonal dominance and use “diagonal dominance” withoutqualification to allow the possibility that the inequalities in the definitions

3.1 Basic Definitions and Notation 53

are not strict.) Most interesting properties of such matrices hold whether thedominance is by row or by column. If A is symmetric, row and column di-agonal dominances are equivalent, so we refer to row or column diagonallydominant symmetric matrices without the qualification; that is, as just diag-onally dominant.

Matrices with Special Patterns of Zeroes

If all elements below the diagonal are 0, the matrix is called an upper triangularmatrix; and a lower triangular matrix is defined similarly. If all elements of acolumn or row of a triangular matrix are zero, we still refer to the matrix astriangular, although sometimes we speak of its form as trapezoidal. Anotherform called trapezoidal is one in which there are more columns than rows,and the additional columns are possibly nonzero. The four general forms oftriangular or trapezoidal matrices are shown below, using an intuitive notationwith X and 0 to indicate the pattern.

X X X

0 X X

0 0 X

X X X

0 X X

0 0 0

X X X

0 X X

0 0 X

0 0 0

X X X X

0 X X X

0 0 X X

In this notation, X indicates that the element is possibly not zero. It doesnot mean each element is the same. In some cases, X and 0 may indicate“submatrices”, which we discuss in the section on partitioned matrices.

If all elements are 0 except ai,i+ckfor some small number of integers ck,

the matrix is called a band matrix (or banded matrix). In many applications,ck ∈ {−wl,−wl + 1, . . . ,−1, 0, 1, . . . , wu − 1, wu}. In such a case, wl is calledthe lower band width and wu is called the upper band width. These patternedmatrices arise in time series and other stochastic process models as well as insolutions of differential equations, and so they are very important in certainapplications. Although it is often the case that interesting band matrices aresymmetric, or at least have the same number of codiagonals that are nonzero,neither of these conditions always occurs in applications of band matrices. Ifall elements below the principal skew diagonal elements of a matrix are 0, thematrix is called a skew upper triangular matrix. A common form of Hankelmatrix, for example, is the skew upper triangular matrix (see page 334). Noticethat the various terms defined here, such as triangular and band, also applyto nonsquare matrices.

Band matrices occur often in numerical solutions of partial differentialequations. A band matrix with lower and upper band widths of 1 is a tridi-agonal matrix. If all diagonal elements and all elements ai,i±1 are nonzero, atridiagonal matrix is called a “matrix of type 2”. The inverse of a covariancematrix that occurs in common stationary time series models is a matrix oftype 2 (see page 334).

54 3 Basic Properties of Matrices

Using the intuitive notation of X and 0 as above, a band matrix may bewritten as

X X 0 · · · 0 0

X X X · · · 0 0

0 X X · · · 0 0

. . .. . .

0 0 0 · · · X X

.

Computational methods for matrices may be more efficient if the patterns aretaken into account.

A matrix is in upper Hessenberg form, and is called a Hessenberg matrix, ifit is upper triangular except for the first subdiagonal, which may be nonzero.That is, aij = 0 for i > j + 1:

X X X · · · X X

X X X · · · X X

0 X X · · · X X

0 0 X · · · X X...

.... . .

......

0 0 0 · · · X X

.

A symmetric matrix that is in Hessenberg form is necessarily tridiagonal.Hessenberg matrices arise in some methods for computing eigenvalues (see

Chapter 7).Many matrices of interest are sparse; that is, they have a large propor-

tion of elements that are 0. The matrices discussed above are generally notconsidered sparse. (“A large proportion” is subjective, but generally meansmore than 75%, and in many interesting cases is well over 95%.) Efficient andaccurate computations often require that the sparsity of a matrix be accom-modated explicitly.

3.1.1 Matrix Shaping Operators

In order to perform certain operations on matrices and vectors, it is oftenuseful first to reshape a matrix. The most common reshaping operation isthe transpose, which we define in this section. Sometimes we may need torearrange the elements of a matrix or form a vector into a special matrix. Inthis section, we define three operators for doing this.

Transpose

The transpose of a matrix is the matrix whose ith row is the ith column of theoriginal matrix and whose jth column is the jth row of the original matrix. Weuse a superscript “T” to denote the transpose of a matrix; thus, if A = (aij),then

3.1 Basic Definitions and Notation 55

AT = (aji). (3.2)

(In other literature, the transpose is often denoted by a prime, as in A′ =(aji) = AT.)

If the elements of the matrix are from the field of complex numbers, theconjugate transpose, also called the adjoint, is more useful than the transpose.(“Adjoint” is also used to denote another type of matrix, so we will generallyavoid using that term. This meaning of the word is the origin of the otherterm for a Hermitian matrix, a “self-adjoint matrix”.) We use a superscript“H” to denote the conjugate transpose of a matrix; thus, if A = (aij), thenAH = (aji). We also use a similar notation for vectors. If the elements of Aare all real, then AH = AT. (The conjugate transpose is often denoted by anasterisk, as in A∗ = (aji) = AH. This notation is more common if a prime isused to denote the transpose. We sometimes use the notation A∗ to denote ag2 inverse of the matrix A; see page 117.)

If (and only if) A is symmetric, A = AT; if (and only if) A is skew sym-metric, AT = −A; and if (and only if) A is Hermitian, A = AH.

Diagonal Matrices and Diagonal Vectors: diag(·) and vecdiag(·)

A square diagonal matrix can be specified by the diag(·) constructor functionthat operates on a vector and forms a diagonal matrix with the elements ofthe vector along the diagonal:

diag((d1, d2, . . . , dn)

)=

d1 0 · · · 00 d2 · · · 0

. . .

0 0 · · · dn

. (3.3)

(Notice that the argument of diag is a vector; that is why there are two setsof parentheses in the expression above, although sometimes we omit one setwithout loss of clarity.) The diag function defined here is a mapping IRn 7→IRn×n. Later we will extend this definition slightly.

A very important diagonal matrix has all 1s along the diagonal. If it hasn diagonal elements, it is denoted by In; so In = diag(1n). This is called theidentity matrix of order n. The size is often omitted, and we call it the identitymatrix, and denote it by I.

The vecdiag(·) function forms a vector from the principal diagonal elementsof a matrix. If A is an n×m matrix, and k = min(n, m),

vecdiag(A) = (a11, . . . , akk). (3.4)

The vecdiag function defined here is a mapping IRn×m 7→ IRmin(n,m).Sometimes we overload diag(·) to allow its argument to be a matrix, and

in that case, it is the same as vecdiag(·). Both the R and Matlab computingsystems, for example, use this overloading; that is, they each provide a singlefunction (called diag in each case).

56 3 Basic Properties of Matrices

Forming a Vector from the Elements of a Matrix: vec(·) andvech(·)

It is sometimes useful to consider the elements of a matrix to be elements ofa single vector. The most common way this is done is to string the columnsof the matrix end-to-end into a vector. The vec(·) function does this:

vec(A) = (aT1 , aT

2 , . . . , aTm), (3.5)

where a1, a2, . . . , am are the column vectors of the matrix A. The vec functionis also sometimes called the “pack” function. (A note on the notation: theright side of equation (3.5) is the notation for a column vector with elementsaT

i ; see Chapter 1.) The vec function is a mapping IRn×m 7→ IRnm.For a symmetric matrix A with elements aij, the “vech” function stacks

the unique elements into a vector:

vech(A) = (a11, a21, . . . , am1, a22, . . . , am2, . . . , amm). (3.6)

There are other ways that the unique elements could be stacked that wouldbe simpler and perhaps more useful (see the discussion of symmetric storagemode on page 474), but equation (3.6) is the standard definition of vech(·).The vech function is a mapping IRn×n 7→ IRn(n+1)/2.

3.1.2 Partitioned Matrices

We often find it useful to partition a matrix into submatrices; for example,in many applications in data analysis, it is often convenient to work withsubmatrices of various types representing different subsets of the data.

We usually denote the submatrices with capital letters with subscriptsindicating the relative positions of the submatrices. Hence, we may write

A =

[A11 A12

A21 A22

], (3.7)

where the matrices A11 and A12 have the same number of rows, A21 andA22 have the same number of rows, A11 and A21 have the same number ofcolumns, and A12 and A22 have the same number of columns. Of course, thesubmatrices in a partitioned matrix may be denoted by different letters. Also,for clarity, sometimes we use a vertical bar to indicate a partition:

A = [ B |C ].

The vertical bar is used just for clarity and has no special meaning in thisrepresentation.

The term “submatrix” is also used to refer to a matrix formed from agiven matrix by deleting various rows and columns of the given matrix. Inthis terminology, B is a submatrix of A if for each element bij there is an akl

3.1 Basic Definitions and Notation 57

with k ≥ i and l ≥ j such that bij = akl; that is, the rows and/or columns ofthe submatrix are not necessarily contiguous in the original matrix. This kindof subsetting is often done in data analysis, for example, in variable selectionin linear regression analysis.

A square submatrix whose principal diagonal elements are elements of theprincipal diagonal of the given matrix is called a principal submatrix. If A11 inthe example above is square, it is a principal submatrix, and if A22 is square,it is also a principal submatrix. Sometimes the term “principal submatrix” isrestricted to square submatrices. If a matrix is diagonally dominant, then itis clear that any principal submatrix of it is also diagonally dominant.

A principal submatrix that contains the (1, 1) element and whose rowsand columns are contiguous in the original matrix is called a leading principalsubmatrix. If A11 is square, it is a leading principal submatrix in the exampleabove.

Partitioned matrices may have useful patterns. A “block diagonal” matrixis one of the form

X 0 · · · 00 X · · · 0

. . .

0 0 · · · X

,

where 0 represents a submatrix with all zeros and X represents a generalsubmatrix with at least some nonzeros.

The diag(·) function previously introduced for a vector is also defined fora list of matrices:

diag(A1, A2, . . . , Ak)

denotes the block diagonal matrix with submatrices A1, A2, . . . , Ak along thediagonal and zeros elsewhere. A matrix formed in this way is sometimes calleda direct sum of A1, A2, . . . , Ak, and the operation is denoted by ⊕:

A1 ⊕ · · · ⊕Ak = diag(A1, . . . , Ak). (3.8)

Although the direct sum is a binary operation, we are justified in definingit for a list of matrices because the operation is clearly associative.

The Ai may be of different sizes and they may not be square, although inmost applications the matrices are square (and some authors define the directsum only for square matrices).

We will define vector spaces of matrices below and then recall the definitionof a direct sum of vector spaces (page 18), which is different from the directsum defined above in terms of diag(·).

Transposes of Partitioned Matrices

The transpose of a partitioned matrix is formed in the obvious way; for ex-ample,

58 3 Basic Properties of Matrices

[A11 A12 A13

A21 A22 A23

]T=

AT

11 AT21

AT12 AT

22

AT13 AT

23

. (3.9)

3.1.3 Matrix Addition

The sum of two matrices of the same shape is the matrix whose elementsare the sums of the corresponding elements of the addends. As in the case ofvector addition, we overload the usual symbols for the operations on the realsto signify the corresponding operations on matrices when the operations aredefined; hence, addition of matrices is also indicated by “+”, as with scalaraddition and vector addition. We assume throughout that writing a sum ofmatrices A+B implies that they are of the same shape; that is, that they areconformable for addition.

The “+” operator can also mean addition of a scalar to a matrix, as inA + a, where A is a matrix and a is a scalar. Although this meaning of “+”is generally not used in mathematical treatments of matrices, in this bookwe use it to mean the addition of the scalar to each element of the matrix,resulting in a matrix of the same shape. This meaning is consistent with thesemantics of modern computer languages such as Fortran 90/95 and R.

The addition of two n×m matrices or the addition of a scalar to an n×mmatrix requires nm scalar additions.

The matrix additive identity is a matrix with all elements zero. We some-times denote such a matrix with n rows and m columns as 0n×m, or just as 0.We may denote a square additive identity as 0n.

The Transpose of the Sum of Matrices

The transpose of the sum of two matrices is the sum of the transposes:

(A + B)T = AT + BT. (3.10)

The sum of two symmetric matrices is therefore symmetric.

Rank Ordering Matrices

There are several possible ways to form a rank ordering of matrices of thesame shape, but no complete ordering is entirely satisfactory. If all of theelements of the matrix A are positive, we write

A > 0; (3.11)

if all of the elements are nonnegative, we write

A ≥ 0. (3.12)

3.1 Basic Definitions and Notation 59

The terms “positive” and “nonnegative” and these symbols are not to beconfused with the terms “positive definite” and “nonnegative definite” andsimilar symbols for important classes of matrices having different properties(which we will introduce in equation (3.66) and discuss further in Section 8.3.)

Vector Spaces of Matrices

Having defined scalar multiplication and matrix addition (for conformablematrices), we can define a vector space of n ×m matrices as any set that isclosed with respect to those operations. The individual operations of scalarmultiplication and matrix addition allow us to define an axpy operation onthe matrices, as in equation (2.1) on page 12. Closure of this space impliesthat it must contain the additive identity, just as we saw on page 13). Thematrix additive identity is the 0 matrix.

As with any vector space, we have the concepts of linear independence,generating set or spanning set, basis set, essentially disjoint spaces, and directsums of matrix vector spaces (as in equation (2.10), which is different fromthe direct sum of matrices defined in terms of diag(·) as in equation (3.8)).

An important vector space of matrices is IRn×m. For matrices X, Y ∈IRn×m and a ∈ IR, the axpy operation is aX + Y .

If n ≥ m, a set of nm n×m matrices whose columns consist of all combina-tions of a set of n n-vectors that span IRn is a basis set for IRn×m. If n < m,we can likewise form a basis set for IRn×m or for subspaces of IRn×m in asimilar way. If {B1, . . . , Bk} is a basis set for IRn×m, then any n ×m matrix

can be represented as∑k

i=1 ciBi. Subsets of a basis set generate subspaces ofIRn×m.

Because the sum of two symmetric matrices is symmetric, and a scalarmultiple of a symmetric matrix is likewise symmetric, we have a vector spaceof the n × n symmetric matrices. This is clearly a subspace of the vectorspace IRn×n. All vectors in any basis for this vector space must be symmetric.Using a process similar to our development of a basis for a general vectorspace of matrices, we see that there are n(n + 1)/2 matrices in the basis (seeExercise 3.1).

3.1.4 Scalar-Valued Operators on Square Matrices:The Trace

There are several useful mappings from matrices to real numbers; that is, fromIRn×m to IR. Some important ones are norms, which are similar to vectornorms and which we will consider later. In this section and the next, wedefine two scalar-valued operators, the trace and the determinant, that applyto square matrices.

60 3 Basic Properties of Matrices

The Trace: tr(·)

The sum of the diagonal elements of a square matrix is called the trace of thematrix. We use the notation “tr(A)” to denote the trace of the matrix A:

tr(A) =∑

i

aii. (3.13)

The Trace of the Transpose of Square Matrices

From the definition, we see

tr(A) = tr(AT). (3.14)

The Trace of Scalar Products of Square Matrices

For a scalar c and an n× n matrix A,

tr(cA) = c tr(A).

This follows immediately from the definition because for tr(cA) each diagonalelement is multiplied by c.

The Trace of Partitioned Square Matrices

If the square matrix A is partitioned such that the diagonal blocks are squaresubmatrices, that is,

A =

[A11 A12

A21 A22

], (3.15)

where A11 and A22 are square, then from the definition, we see that

tr(A) = tr(A11) + tr(A22). (3.16)

The Trace of the Sum of Square Matrices

If A and B are square matrices of the same order, a useful (and obvious)property of the trace is

tr(A + B) = tr(A) + tr(B). (3.17)

3.1.5 Scalar-Valued Operators on Square Matrices:The Determinant

The determinant, like the trace, is a mapping from IRn×n to IR. Althoughit may not be obvious from the definition below, the determinant has far-reaching applications in matrix theory.

3.1 Basic Definitions and Notation 61

The Determinant: det(·)

For an n × n (square) matrix A, consider the product a1j1 · · ·anjn, where

πj = (j1, . . . , jn) is one of the n! permutations of the integers from 1 to n.Define a permutation to be even or odd according to the number of times that asmaller element follows a larger one in the permutation. (For example, (1, 3, 2)is an odd permutation, and (3, 1, 2) and (1, 2, 3) are even permutations.) Letσ(πj) = 1 if πj = (j1, . . . , jn) is an even permutation, and let σ(πj) = −1otherwise. Then the determinant of A, denoted by det(A), is defined by

det(A) =∑

all permutations

σ(πj)a1j1 · · ·anjn. (3.18)

Notation and Simple Properties of the Determinant

The determinant is also sometimes written as |A|.I prefer the notation det(A), because of the possible confusion between

|A| and the absolute value of some quantity. The latter notation, however, isrecommended by its compactness, and I do use it in expressions such as thePDF of the multivariate normal distribution (see equation (9.1)) that involvenonnegative definite matrices (see page 82 for the definition). The determinantof a matrix may be negative, and sometimes, as in measuring volumes (seepage 68 for simple areas and page 181 for special volumes called Jacobians), weneed to specify the absolute value of the determinant, so we need somethingof the form |det(A)|.

The definition of the determinant is not as daunting as it may appearat first glance. Many properties become obvious when we realize that σ(·) isalways ±1, and it can be built up by elementary exchanges of adjacent ele-ments. For example, consider σ(3, 2, 1). There are three elementary exchangesbeginning with the natural ordering:

(1, 2, 3)→ (2, 1, 3)→ (2, 3, 1)→ (3, 2, 1);

hence, σ(3, 2, 1) = (−1)3 = −1.If πj consists of the interchange of exactly two elements in (1, . . . , n), say

elements p and q with p < q, then there are q − p elements before p thatare larger than p, and there are q − p − 1 elements between q and p in thepermutation each with exactly one larger element preceding it. The totalnumber is 2q − 2p + 1, which is an odd number. Therefore, if πj consists ofthe interchange of exactly two elements, then σ(πj) = −1.

If the integers 1, . . . , m occur sequentially in a given permutation and arefollowed by m + 1, . . . , n which also occur sequentially in the permutation,they can be considered separately:

σ(j1, . . . , jn) = σ(j1, . . . , jm)σ(jm+1 , . . . , jn). (3.19)

62 3 Basic Properties of Matrices

Furthermore, we see that the product a1j1 · · ·anjnhas exactly one factor from

each unique row-column pair. These observations facilitate the derivation ofvarious properties of the determinant (although the details are sometimesquite tedious).

We see immediately from the definition that the determinant of an upperor lower triangular matrix (or a diagonal matrix) is merely the product of thediagonal elements (because in each term of equation (3.18) there is a 0, exceptin the term in which the subscripts on each factor are the same).

Minors, Cofactors, and Adjugate Matrices

Consider the 2× 2 matrix

A =

[a11 a12

a21 a22

].

From the definition of the determinant, we see that

det(A) = a11a22 − a12a21. (3.20)

Now let A be a 3× 3 matrix:

A =

a11 a12 a13

a21 a22 a23

a31 a32 a33

.

In the definition of the determinant, consider all of the terms in which theelements of the first row of A appear. With some manipulation of those terms,we can express the determinant in terms of determinants of submatrices as

det(A) = a11(−1)1+1det

([a22 a23

a32 a33

])

+ a12(−1)1+2det

([a21 a23

a31 a33

])

+ a13(−1)1+3det

([a21 a22

a31 a32

]).

(3.21)

Notice that this is the same form as in equation (3.20):

det(A) = a11(1)det(a22) + a12(−1)det(a21).

The manipulation in equation (3.21) of the terms in the determinant couldbe carried out with other rows of A.

The determinants of the 2 × 2 submatrices in equation (3.21) are calledminors or complementary minors of the associated element. The definition

3.1 Basic Definitions and Notation 63

can be extended to (n−1)×(n−1) submatrices of an n×n matrix, for n ≥ 2.We denote the minor associated with the aij element as

det(A−(i)(j)

), (3.22)

in which A−(i)(j) denotes the submatrix that is formed from A by removing

the ith row and the jth column. The sign associated with the minor corre-sponding to aij is (−1)i+j . The minor together with its appropriate sign iscalled the cofactor of the associated element; that is, the cofactor of aij is(−1)i+jdet

(A−(i)(j)

). We denote the cofactor of aij as a(ij):

a(ij) = (−1)i+jdet(A−(i)(j)

). (3.23)

Notice that both minors and cofactors are scalars.The manipulations leading to equation (3.21), though somewhat tedious,

can be carried out for a square matrix of any size larger than 1×1, and minorsand cofactors are defined as above. An expression such as in equation (3.21)is called an expansion in minors or an expansion in cofactors.

The extension of the expansion (3.21) to an expression involving a sumof signed products of complementary minors arising from (n − 1) × (n − 1)submatrices of an n× n matrix A is

det(A) =n∑

j=1

aij(−1)i+jdet(A−(i)(j)

)

=n∑

j=1

aija(ij), (3.24)

or, over the rows,

det(A) =n∑

i=1

aija(ij). (3.25)

These expressions are called Laplace expansions. Each determinant det(A−(i)(j)

)

can likewise be expressed recursively in a similar expansion.Expressions (3.24) and (3.25) are special cases of a more general Laplace

expansion based on an extension of the concept of a complementary minorof an element to that of a complementary minor of a minor. The derivationof the general Laplace expansion is straightforward but rather tedious (seeHarville, 1997, for example, for the details).

Laplace expansions could be used to compute the determinant, but themain value of these expansions is in proving properties of determinants. Forexample, from the special Laplace expansion (3.24) or (3.25), we can quicklysee that the determinant of a matrix with two rows that are the same is zero.We see this by recursively expanding all of the minors until we have only 2×2matrices consisting of a duplicated row. The determinant of such a matrix is0, so the expansion is 0.

64 3 Basic Properties of Matrices

The expansion in equation (3.24) has an interesting property: if instead ofthe elements aij from the ith row we use elements from a different row, saythe kth row, the sum is zero. That is, for k 6= i,

n∑

j=1

akj(−1)i+jdet(A−(i)(j)

)=

n∑

j=1

akja(ij)

= 0. (3.26)

This is true because such an expansion is exactly the same as an expansion forthe determinant of a matrix whose kth row has been replaced by its ith row;that is, a matrix with two identical rows. The determinant of such a matrixis 0, as we saw above.

A certain matrix formed from the cofactors has some interesting properties.We define the matrix here but defer further discussion. The adjugate of then× n matrix A is defined as

adj(A) = (a(ji)), (3.27)

which is an n × n matrix of the cofactors of the elements of the transposedmatrix. (The adjugate is also called the adjoint or sometimes “classical ad-joint”, but as we noted above, the term adjoint may also mean the conjugatetranspose. To distinguish it from the conjugate transpose, the adjugate is alsosometimes called the “classical adjoint”. We will generally avoid using theterm “adjoint”.) Note the reversal of the subscripts; that is,

adj(A) = (a(ij))T.

The adjugate has an interesting property involving matrix multiplication(which we will define below in Section 3.2) and the identity matrix:

A adj(A) = adj(A)A = det(A)I. (3.28)

To see this, consider the (i, j)th element of A adj(A). By the definition ofthe multiplication of A and adj(A), that element is

∑k aik(adj(A))kj. Now,

noting the reversal of the subscripts in adj(A) in equation (3.27), and usingequations (3.24) and (3.26), we have

∑

k

aik(adj(A))kj =

{det(A) if i = j0 if i 6= j;

that is, A adj(A) = det(A)I.The adjugate has a number of other useful properties, some of which we

will encounter later, as in equation (3.137).

The Determinant of the Transpose of Square Matrices

One important property we see immediately from a manipulation of the defi-nition of the determinant is

det(A) = det(AT). (3.29)

3.1 Basic Definitions and Notation 65

The Determinant of Scalar Products of Square Matrices

For a scalar c and an n× n matrix A,

det(cA) = cndet(A). (3.30)

This follows immediately from the definition because, for det(cA), each factorin each term of equation (3.18) is multiplied by c.

The Determinant of an Upper (or Lower) Triangular Matrix

If A is an n × n upper (or lower) triangular matrix, then

det(A) =

n∏

i=1

aii. (3.31)

This follows immediately from the definition. It can be generalized, as in thenext section.

The Determinant of Certain Partitioned Square Matrices

Determinants of square partitioned matrices that are block diagonal or upperor lower block triangular depend only on the diagonal partitions:

det(A) = det

([A11 00 A22

])= det

([A11 0A21 A22

])= det

([A11 A12

0 A22

])

= det(A11)det(A22).

(3.32)

We can see this by considering the individual terms in the determinant, equa-tion (3.18). Suppose the full matrix is n × n, and A11 is m ×m. Then A22

is (n − m) × (n − m), A21 is (n − m) × m, and A12 is m × (n − m). Inequation (3.18), any addend for which (j1, . . . , jm) is not a permutation of theintegers 1, . . . , m contains a factor aij that is in a 0 diagonal block, and hencethe addend is 0. The determinant consists only of those addends for which(j1, . . . , jm) is a permutation of the integers 1, . . . , m, and hence (jm+1 , . . . , jn)is a permutation of the integers m + 1, . . . , n,

det(A) =∑∑

σ(j1, . . . , jm, jm+1, . . . , jn)a1j1 · · ·amjmam+1,jn

· · ·anjn,

where the first sum is taken over all permutations that keep the first m integerstogether while maintaining a fixed ordering for the integers m + 1 through n,and the second sum is taken over all permutations of the integers from m + 1through n while maintaining a fixed ordering of the integers from 1 to m.Now, using equation (3.19), we therefore have for A of this special form

66 3 Basic Properties of Matrices

det(A) =∑∑

σ(j1, . . . , jm, jm+1, . . . , jn)a1j1 · · ·amjmam+1,jm+1

· · ·anjn

=∑

σ(j1, . . . , jm)a1j1 · · ·amjm

∑σ(jm+1 , . . . , jn)am+1,jm+1

· · ·anjn

= det(A11)det(A22),

which is equation (3.32). We use this result to give an expression for thedeterminant of more general partitioned matrices in Section 3.4.2.

Another useful partitioned matrix of the form of equation (3.15) has A11 =0 and A21 = −I:

A =

[0 A12

−I A22

].

In this case, using equation (3.24), we get

det(A) = ((−1)n+1+1(−1))ndet(A12)

= (−1)n(n+3)det(A12)

= det(A12). (3.33)

We will consider determinants of a more general partitioning in Sec-tion 3.4.2, beginning on page 110.

The Determinant of the Sum of Square Matrices

Occasionally it is of interest to consider the determinant of the sum of squarematrices. We note in general that

det(A + B) 6= det(A) + det(B),

which we can see easily by an example. (Consider matrices in IR2×2, for ex-

ample, and let A = I and B =

[−1 0

0 0

].)

In some cases, however, simplified expressions for the determinant of asum can be developed. We consider one in the next section.

A Diagonal Expansion of the Determinant

A particular sum of matrices whose determinant is of interest is one in whicha diagonal matrix D is added to a square matrix A, that is, det(A+D). (Sucha determinant arises in eigenanalysis, for example, as we see in Section 3.8.2.)

For evaluating the determinant det(A+D), we can develop another expan-sion of the determinant by restricting our choice of minors to determinants ofmatrices formed by deleting the same rows and columns and then continuingto delete rows and columns recursively from the resulting matrices. The ex-pansion is a polynomial in the elements of D; and for our purposes later, thatis the most useful form.

3.1 Basic Definitions and Notation 67

Before considering the details, let us develop some additional notation.The matrix formed by deleting the same row and column of A is denotedA−(i)(i) as above (following equation (3.22)). In the current context, however,it is more convenient to adopt the notation A(i1,...,ik) to represent the matrixformed from rows i1, . . . , ik and columns i1, . . . , ik from a given matrix A.That is, the notation A(i1,...,ik) indicates the rows and columns kept ratherthan those deleted; and furthermore, in this notation, the indexes of the rowsand columns are the same. We denote the determinant of this k × k matrixin the obvious way, det(A(i1,...,ik)). Because the principal diagonal elementsof this matrix are principal diagonal elements of A, we call det(A(i1,...,ik)) aprincipal minor of A.

Now consider det(A + D) for the 2× 2 case:

det

([a11 + d1 a12

a21 a22 + d2

]).

Expanding this, we have

det(A + D) = (a11 + d1)(a22 + d2)− a12a21

= det

([a11 a12

a21 a22

])+ d1d2 + a22d1 + a11d2

= det(A(1,2)) + d1d2 + a22d1 + a11d2.

Of course, det(A(1,2)) = det(A), but we are writing it this way to develop thepattern. Now, for the 3× 3 case, we have

det(A + D) = det(A(1,2,3))

+det(A(2,3))d1 + det(A(1,3))d2 + det(A(1,2))d3

+ a33d1d2 + a22d1d3 + a11d2d3

+ d1d2d3. (3.34)

In the applications of interest, the elements of the diagonal matrix D may bea single variable: d, say. In this case, the expression simplifies to

det(A + D) = det(A(1,2,3)) +∑

i 6=j

det(A(i,j))d +∑

i

ai,id2 + d3. (3.35)

Carefully continuing in this way for an n×n matrix, either as in equation (3.34)for n variables or as in equation (3.35) for a single variable, we can make useof a Laplace expansion to evaluate the determinant.

Consider the expansion in a single variable because that will prove mostuseful. The pattern persists; the constant term is |A|, the coefficient of thefirst-degree term is the sum of the (n − 1)-order principal minors, and, atthe other end, the coefficient of the (n − 1)th-degree term is the sum of the

68 3 Basic Properties of Matrices

first-order principal minors (that is, just the diagonal elements), and finallythe coefficient of the nth-degree term is 1.

This kind of representation is called a diagonal expansion of the determi-nant because the coefficients are principal minors. It has occasional use formatrices with large patterns of zeros, but its main application is in analysisof eigenvalues, which we consider in Section 3.8.2.

Computing the Determinant

For an arbitrary matrix, the determinant is rather difficult to compute. Themethod for computing a determinant is not the one that would arise directlyfrom the definition or even from a Laplace expansion. The more efficient meth-ods involve first factoring the matrix, as we discuss in later sections.

The determinant is not very often directly useful, but although it maynot be obvious from its definition, the determinant, along with minors, co-factors, and adjoint matrices, is very useful in discovering and proving prop-erties of matrices. The determinant is used extensively in eigenanalysis (seeSection 3.8).

A Geometrical Perspective of the Determinant

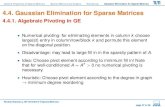

In Section 2.2, we discussed a useful geometric interpretation of vectors ina linear space with a Cartesian coordinate system. The elements of a vec-tor correspond to measurements along the respective axes of the coordinatesystem. When working with several vectors, or with a matrix in which thecolumns (or rows) are associated with vectors, we may designate a vectorxi as xi = (xi1, . . . , xid). A set of d linearly independent d-vectors define aparallelotope in d dimensions. For example, in a two-dimensional space, thelinearly independent 2-vectors x1 and x2 define a parallelogram, as shown inFigure 3.1.

The area of this parallelogram is the base times the height, bh, where, inthis case, b is the length of the vector x1, and h is the length of x2 times thesine of the angle θ. Thus, making use of equation (2.45) on page 36 for thecosine of the angle, we have

area = bh

= ‖x1‖‖x2‖ sin(θ)

= ‖x1‖‖x2‖√

1−( 〈x1, x2〉‖x1‖‖x2‖

)2

=√‖x1‖2‖x2‖2 − (〈x1, x2〉)2

=√

(x211 + x2

12)(x221 + x2

22)− (x11x21 − x12x22)2

= |x11x22 − x12x21|= |det(X)|, (3.36)

3.2 Multiplication of Matrices 69

x2

x1

θ

a

h

b

e1

e2

Figure 3.1. Volume (Area) of Region Determined by x1 and x2

where x1 = (x11, x12), x2 = (x21, x22), and

X = [x1 | x2]

=

[x11 x21

x12 x22

].

Although we will not go through the details here, this equivalence of avolume of a parallelotope that has a vertex at the origin and the absolutevalue of the determinant of a square matrix whose columns correspond to thevectors that form the sides of the parallelotope extends to higher dimensions.

In making a change of variables in integrals, as in equation (4.37) onpage 181, we use the absolute value of the determinant of the Jacobian as avolume element. Another instance of the interpretation of the determinant asa volume is in the generalized variance, discussed on page 318.

3.2 Multiplication of Matrices and

Multiplication of Vectors and Matrices

The elements of a vector or matrix are elements of a field, and most matrixand vector operations are defined in terms of the two operations of the field.Of course, in this book, the field of most interest is the field of real numbers.

3.2.1 Matrix Multiplication (Cayley)

There are various kinds of multiplication of matrices that may be useful. Themost common kind of multiplication is Cayley multiplication. If the numberof columns of the matrix A, with elements aij, and the number of rows of the

70 3 Basic Properties of Matrices

matrix B, with elements bij, are equal, then the (Cayley) product of A and Bis defined as the matrix C with elements

cij =∑

k

aikbkj. (3.37)

This is the most common type of matrix product, and we refer to it by theunqualified phrase “matrix multiplication”.

Cayley matrix multiplication is indicated by juxtaposition, with no inter-vening symbol for the operation: C = AB.

If the matrix A is n×m and the matrix B is m× p, the product C = ABis n× p:

C = A B

[ ]

n×p

=

[ ]

n×m

[ ]m×p

.

Cayley matrix multiplication is a mapping,

IRn×m × IRm×p 7→ IRn×p.

The multiplication of an n × m matrix and an m × p matrix requiresnmp scalar multiplications and np(m − 1) scalar additions. Here, as alwaysin numerical analysis, we must remember that the definition of an operation,such as matrix multiplication, does not necessarily define a good algorithmfor evaluating the operation.

It is obvious that while the product AB may be well-defined, the productBA is defined only if n = p; that is, if the matrices AB and BA are square.We assume throughout that writing a product of matrices AB implies thatthe number of columns of the first matrix is the same as the number of rows ofthe second; that is, they are conformable for multiplication in the order given.

It is easy to see from the definition of matrix multiplication (3.37) thatin general, even for square matrices, AB 6= BA. It is also obvious that if ABexists, then BTAT exists and, in fact,

BTAT = (AB)T. (3.38)

The product of symmetric matrices is not, in general, symmetric. If (but notonly if) A and B are symmetric, then AB = (BA)T.

Because matrix multiplication is not commutative, we often use the terms“premultiply” and “postmultiply” and the corresponding nominal forms ofthese terms. Thus, in the product AB, we may say B is premultiplied by A,or, equivalently, A is postmultiplied by B.

Although matrix multiplication is not commutative, it is associative; thatis, if the matrices are conformable,

A(BC) = (AB)C. (3.39)

3.2 Multiplication of Matrices 71

It is also distributive over addition; that is,

A(B + C) = AB + AC (3.40)

and(B + C)A = BA + CA. (3.41)

These properties are obvious from the definition of matrix multiplication.(Note that left-sided distribution is not the same as right-sided distributionbecause the multiplication is not commutative.)

An n×n matrix consisting of 1s along the diagonal and 0s everywhere else isa multiplicative identity for the set of n×n matrices and Cayley multiplication.Such a matrix is called the identity matrix of order n, and is denoted by In,or just by I. The columns of the identity matrix are unit vectors.

The identity matrix is a multiplicative identity for any matrix so long asthe matrices are conformable for the multiplication. If A is n×m, then

InA = AIm = A.

Powers of Square Matrices

For a square matrix A, its product with itself is defined, and so we will use thenotation A2 to mean the Cayley product AA, with similar meanings for Ak

for a positive integer k. As with the analogous scalar case, Ak for a negativeinteger may or may not exist, and when it exists, it has a meaning for Cayleymultiplication similar to the meaning in ordinary scalar multiplication. Wewill consider these issues later (in Section 3.3.3).

For an n × n matrix A, if Ak exists for negative integral values of k, wedefine A0 by

A0 = In. (3.42)

For a diagonal matrix D = diag ((d1, . . . , dn)), we have

Dk = diag((dk

1 , . . . , dkn)). (3.43)

Matrix Polynomials

Polynomials in square matrices are similar to the more familiar polynomialsin scalars. We may consider

p(A) = b0I + b1A + · · · bkAk.

The value of this polynomial is a matrix.The theory of polynomials in general holds, and in particular, we have the

useful factorizations of monomials: for any positive integer k,

I −Ak = (I −A)(I + A + · · ·Ak−1), (3.44)

and for an odd positive integer k,

I + Ak = (I + A)(I −A + · · ·Ak−1). (3.45)

72 3 Basic Properties of Matrices

3.2.2 Multiplication of Matrices with Special Patterns

Various properties of matrices may or may not be preserved under matrixmultiplication. We have seen already that the product of symmetric matricesis not in general symmetric.

Many of the various patterns of zeroes in matrices discussed on page 53 arepreserved under matrix multiplication. Assume A and B are square matricesof the same number of rows.

• If A and B are diagonal, AB is diagonal;• if A and B are upper triangular, AB is upper triangular;• if A and B are lower triangular, AB is lower triangular;• if A is upper triangular and B is lower triangular, in general, none of

AB, BA, ATA, BTB, AAT, and BBT is triangular.

Each of these statements can be easily proven using the definition of multi-plication in equation (3.37).

The products of banded matrices are generally banded with a wider band-width. If the bandwidth is too great, obviously the matrix can no longer becalled banded.

Multiplication of Partitioned Matrices

Multiplication and other operations with partitioned matrices are carried outwith their submatrices in the obvious way. Thus, assuming the submatricesare conformable for multiplication,

[A11 A12

A21 A22

] [B11 B12

B21 B22

]=

[A11B11 + A12B21 A11B12 + A12B22

A21B11 + A22B21 A21B12 + A22B22

].

It is clear that the product of conformable block diagonal matrices is blockdiagonal.

Sometimes a matrix may be partitioned such that one partition is just asingle column or row, that is, a vector or the transpose of a vector. In thatcase, we may use a notation such as

[X y]

or[X | y],

where X is a matrix and y is a vector. We develop the notation in the obviousfashion; for example,

[X y]T [X y] =

[XTX XTyyTX yTy

]. (3.46)

3.2 Multiplication of Matrices 73

3.2.3 Elementary Operations on Matrices

Many common computations involving matrices can be performed as a se-quence of three simple types of operations on either the rows or the columnsof the matrix:

• the interchange of two rows (columns),• a scalar multiplication of a given row (column), and• the replacement of a given row (column) by the sum of that row

(columns) and a scalar multiple of another row (column); that is, anaxpy operation.

Such an operation on the rows of a matrix can be performed by premultipli-cation by a matrix in a standard form, and an operation on the columns ofa matrix can be performed by postmultiplication by a matrix in a standardform. To repeat:

• premultiplication: operation on rows;• postmultiplication: operation on columns.

The matrix used to perform the operation is called an elementary trans-formation matrix or elementary operator matrix. Such a matrix is the identitymatrix transformed by the corresponding operation performed on its unitrows, eT

p , or columns, ep.In actual computations, we do not form the elementary transformation

matrices explicitly, but their formulation allows us to discuss the operationsin a systematic way and better understand the properties of the operations.Products of any of these elementary operator matrices can be used to effectmore complicated transformations.

Operations on the rows are more common, and that is what we will dis-cuss here, although operations on columns are completely analogous. Thesetransformations of rows are called elementary row operations.

Interchange of Rows or Columns; Permutation Matrices

By first interchanging the rows or columns of a matrix, it may be possibleto partition the matrix in such a way that the partitions have interestingor desirable properties. Also, in the course of performing computations on amatrix, it is often desirable to interchange the rows or columns of the matrix.(This is an instance of “pivoting”, which will be discussed later, especiallyin Chapter 6.) In matrix computations, we almost never actually move datafrom one row or column to another; rather, the interchanges are effected bychanging the indexes to the data.

Interchanging two rows of a matrix can be accomplished by premultiply-ing the matrix by a matrix that is the identity with those same two rowsinterchanged; for example,

74 3 Basic Properties of Matrices

1 0 0 00 0 1 00 1 0 00 0 0 1

a11 a12 a13

a21 a22 a23

a31 a32 a33

a41 a42 a43

=

a11 a12 a13

a31 a32 a33

a21 a22 a23

a41 a42 a43

.

The first matrix in the expression above is called an elementary permutationmatrix. It is the identity matrix with its second and third rows (or columns)interchanged. An elementary permutation matrix, which is the identity withthe pth and qth rows interchanged, is denoted by Epq. That is, Epq is theidentity, except the pth row is eT

q and the qth row is eTp . Note that Epq = Eqp.

Thus, for example, if the given matrix is 4×m, to interchange the second andthird rows, we use

E23 = E32 =

1 0 0 00 0 1 00 1 0 00 0 0 1

.

It is easy to see from the definition that an elementary permutation matrixis symmetric. Note that the notation Epq does not indicate the order of theelementary permutation matrix; that must be specified in the context.

Premultiplying a matrix A by a (conformable) Epq results in an inter-change of the pth and qth rows of A as we see above. Any permutation of rowsof A can be accomplished by successive premultiplications by elementary per-mutation matrices. Note that the order of multiplication matters. Althougha given permutation can be accomplished by different elementary permuta-tions, the number of elementary permutations that effect a given permutationis always either even or odd; that is, if an odd number of elementary per-mutations results in a given permutation, any other sequence of elementarypermutations to yield the given permutation is also odd in number. Any givenpermutation can be effected by successive interchanges of adjacent rows.

Postmultiplying a matrix A by a (conformable) Epq results in an inter-change of the pth and qth columns of A:

a11 a12 a13

a21 a22 a23

a31 a32 a33

a41 a42 a43

1 0 00 0 10 1 0

=

a11 a13 a12

a21 a23 a22

a31 a33 a32

a41 a43 a42

.

Note thatA = EpqEpqA = AEpqEpq; (3.47)

that is, as an operator, an elementary permutation matrix is its own inverseoperator: EpqEpq = I.

Because all of the elements of a permutation matrix are 0 or 1, the traceof an n × n elementary permutation matrix is n− 2.

The product of elementary permutation matrices is also a permutationmatrix in the sense that it permutes several rows or columns. For example,

3.2 Multiplication of Matrices 75

premultiplying A by the matrix Q = EpqEqr will yield a matrix whose pth rowis the rth row of the original A, whose qth row is the pth row of A, and whoserth row is the qth row of A. We often use the notation Eπ to denote a moregeneral permutation matrix. This expression will usually be used generically,but sometimes we will specify the permutation, π.

A general permutation matrix (that is, a product of elementary permuta-tion matrices) is not necessarily symmetric, but its transpose is also a per-mutation matrix. It is not necessarily its own inverse, but its permutationscan be reversed by a permutation matrix formed by products of elementarypermutation matrices in the opposite order; that is,

ETπ Eπ = I.

As a prelude to other matrix operations, we often permute both rows andcolumns, so we often have a representation such as

B = Eπ1AEπ2

, (3.48)

where Eπ1is a permutation matrix to permute the rows and Eπ2

is a permu-tation matrix to permute the columns. We use these kinds of operations toarrive at the important equation (3.105) on page 93, and combine these oper-ations with others to yield equation (3.119) on page 99. These equations areused to determine the number of linearly independent rows and columns andto represent the matrix in a form with a maximal set of linearly independentrows and columns clearly identified.

The Vec-Permutation Matrix

A special permutation matrix is the matrix that transforms the vector vec(A)into vec(AT). If A is n×m, the matrix Knm that does this is nm× nm. Wehave

vec(AT) = Knmvec(A). (3.49)

The matrix Knm is called the nm vec-permutation matrix.

Scalar Row or Column Multiplication

Often, numerical computations with matrices are more accurate if the rowshave roughly equal norms. For this and other reasons, we often transform amatrix by multiplying one of its rows by a scalar. This transformation can alsobe performed by premultiplication by an elementary transformation matrix.For multiplication of the pth row by the scalar, the elementary transformationmatrix, which is denoted by Ep(a), is the identity matrix in which the pth

diagonal element has been replaced by a. Thus, for example, if the givenmatrix is 4×m, to multiply the second row by a, we use

76 3 Basic Properties of Matrices

E2(a) =

1 0 0 00 a 0 00 0 1 00 0 0 1

.

Postmultiplication of a given matrix by the multiplier matrix Ep(a) resultsin the multiplication of the pth column by the scalar. For this, Ep(a) is a squarematrix of order equal to the number of columns of the given matrix.

Note that the notation Ep(a) does not indicate the number of rows andcolumns. This must be specified in the context.

Note that, if a 6= 0,A = Ep(1/a)Ep(a)A, (3.50)

that is, as an operator, the inverse operator is a row multiplication matrix onthe same row and with the reciprocal as the multiplier.

Axpy Row or Column Transformations

The other elementary operation is an axpy on two rows and a replacement ofone of those rows with the result

ap ← aaq + ap.

This operation also can be effected by premultiplication by a matrix formedfrom the identity matrix by inserting the scalar in the (p, q) position. Such amatrix is denoted by Epq(a). Thus, for example, if the given matrix is 4×m,to add a times the third row to the second row, we use

E23(a) =

1 0 0 00 1 a 00 0 1 00 0 0 1

.

Premultiplication of a matrix A by such a matrix,

Epq(a)A, (3.51)

yields a matrix whose pth row is a times the qth row plus the original row.Given the 4× 3 matrix A = (aij), we have

E23(a)A =

a11 a12 a13

a21 + aa31 a22 + aa32 a23 + aa33

a31 a32 a33

a41 a42 a43

.

Postmultiplication of a matrix A by an axpy operator matrix,

AEpq(a),

3.2 Multiplication of Matrices 77

yields a matrix whose qth column is a times the pth column plus the originalcolumn. For this, Epq(a) is a square matrix of order equal to the number ofcolumns of the given matrix. Note that the column that is changed correspondsto the second subscript in Epq(a).

Note thatA = Epq(−a)Epq(a)A; (3.52)

that is, as an operator, the inverse operator is the same axpy elementaryoperator matrix with the negative of the multiplier.

A common use of axpy operator matrices is to form a matrix with zerosin all positions of a given column below a given position in the column. Theseoperations usually follow an operation by a scalar row multiplier matrix thatputs a 1 in the position of interest. For example, given an n × m matrix Awith aij 6= 0, to put a 1 in the (i, j) position and 0s in all positions of the jth

column below the ith row, we form the product

Emi(−amj) · · ·Ei+1,i(−ai+1,j)Ei(1/aij)A. (3.53)

This process is called Gaussian elimination.Gaussian elimination is often performed sequentially down the diagonal

elements of a matrix. If at some point aii = 0, the operations of equation (3.53)cannot be performed. In that case, we may first interchange the ith row withthe kth row, where k > i and aki 6= 0. Such an interchange is called pivoting.We will discuss pivoting in more detail on page 229 in Chapter 6.

To form a matrix with zeros in all positions of a given column except one,we use additional matrices for the rows above the given element:

Emi(−amj) · · ·Ei+1,i(−ai+1,j) · · ·Ei−1,i(−ai−1,j) · · ·E1i(−a1j)Ei(1/aij)A.

We can likewise zero out all elements in the ith row except the one in the(ij)th position by similar postmultiplications.

These elementary transformations are the basic operations in Gaussianelimination, which is discussed in Sections 5.6 and 6.2.1.

Elementary Operator Matrices: Summary of Notation

Because we have introduced various notation for elementary operator matri-ces, it may be worthwhile to review the notation. The notation is useful andI will use it from time to time, but unfortunately, there is no general form forthe notation. I will generally use an “E” as the root symbol for the matrix,but the specific type is indicated by various other symbols.

Referring back to the listing of the types of operations on page 73, we havethe various elementary operator matrices:

• Epq: the interchange of rows p and q (Epq is the same as Eqp)Eπ: a general permutation of rows, where π denotes a permutation

• Ep(a): multiplication of row p by a.

78 3 Basic Properties of Matrices

• Epq(a): the replacement of row p by the sum of row p and a times row q.

Recall that these operations are effected by premultiplication. The same kindsof operations on the columns are effected by postmultiplication.

Determinants of Elementary Operator Matrices

The determinant of an elementary permutation matrix Epq has only one termin the sum that defines the determinant (equation (3.18), page 61), and thatterm is 1 times σ evaluated at the permutation that exchanges p and q. Aswe have seen (page 61), this is an odd permutation; hence, for an elementarypermutation matrix Epq,

det(Epq) = −1. (3.54)

Because all terms in det(EpqA) are exactly the same terms as in det(A)but with one different permutation in each term, we have

det(EpqA) = −det(A).

More generally, if A and Eπ are n × n matrices, and Eπ is any permutationmatrix (that is, any product of Epq matrices), then det(EπA) is either det(A)or −det(A) because all terms in det(EπA) are exactly the same as the termsin det(A) but possibly with different signs because the permutations are dif-ferent. In fact, the differences in the permutations are exactly the same as thepermutation of 1, . . . , n in Eπ; hence,

det(EπA) = det(Eπ) det(A).

(In equation (3.60) below, we will see that this equation holds more generally.)The determinant of an elementary row multiplication matrix Ep(a) is

det(Ep(a)) = a. (3.55)

If A and Ep(a) are n× n matrices, then

det(Ep(a)A) = adet(A),

as we see from the definition of the determinant, equation (3.18).The determinant of an elementary axpy matrix Epq(a) is 1,

det(Epq(a)) = 1, (3.56)

because the term consisting of the product of the diagonals is the only termin the determinant.

Now consider det(Epq(a)A) for an n×n matrix A. Expansion in the minors(equation (3.24)) along the pth row yields

3.2 Multiplication of Matrices 79

det(Epq(a)A) =

n∑

j=1

(apj + aaqj)(−1)p+jdet(A(ij))

=

n∑

j=1

apj(−1)p+jdet(A(ij)) + a

n∑

j=1

aqj(−1)p+jdet(A(ij)).

From equation (3.26) on page 64, we see that the second term is 0, and sincethe first term is just the determinant of A, we have

det(Epq(a)A) = det(A). (3.57)

3.2.4 The Trace of a Cayley Product that Is Square

A useful property of the trace for the matrices A and B that are conformablefor the multiplications AB and BA is

tr(AB) = tr(BA). (3.58)

This is obvious from the definitions of matrix multiplication and the trace.Note that A and B may not be square (so the trace is not defined for them),but if they are conformable for the multiplications, then both AB and BAare square.

Because of the associativity of matrix multiplication, this relation can beextended as

tr(ABC) = tr(BCA) = tr(CAB) (3.59)

for matrices A, B, and C that are conformable for the multiplications indi-cated. Notice that the individual matrices need not be square. This fact isvery useful in working with quadratic forms, as in equation (3.68).

3.2.5 The Determinant of a Cayley Product of Square Matrices

An important property of the determinant is

det(AB) = det(A) det(B) (3.60)

if A and B are square matrices conformable for multiplication. We see this byfirst forming

det

([I A0 I

] [A 0−I B

])= det

([0 AB−I B

])(3.61)

and then observing from equation (3.33) that the right-hand side is det(AB).Now consider the left-hand side. The matrix that is the first factor on theleft-hand side is a product of elementary axpy transformation matrices; thatis, it is a matrix that when postmultiplied by another matrix merely addsmultiples of rows in the lower part of the matrix to rows in the upper part of

80 3 Basic Properties of Matrices

the matrix. If A and B are n × n (and so the identities are likewise n × n),the full matrix is the product:

[I A0 I

]= E1,n+1(a11) · · ·E1,2n(a1n)E2,n+1(a21) · · ·E2,2n(a2,n) · · ·En,2n(ann).

Hence, applying equation (3.57) recursively, we have

det

([I A0 I

] [A 0−I B

])= det

([A 0−I B

]),

and from equation (3.32) we have

det

([A 0−I B

])= det(A)det(B),

and so finally we have equation (3.60).From equation (3.60), we see that if A and B are square matrices con-

formable for multiplication, then

det(AB) = det(BA). (3.62)

(Recall, in general, even in the case of square matrices, AB 6= BA.) Thisequation is to be contrasted with equation (3.58), tr(AB) = tr(BA), whichdoes not even require that the matrices be square. A simple counterexamplefor nonsquare matrices is det(xxT) 6= det(xTx), where x is a vector withat least two elements. (Here, think of the vector as an n × 1 matrix. Thiscounterexample can be seen in various ways. One way is to use a fact that wewill encounter on page 105, and observe that det(xxT) = 0 for any x with atleast two elements.)

3.2.6 Multiplication of Matrices and Vectors

It is often convenient to think of a vector as a matrix with only one ele-ment in one of its dimensions. This provides for an immediate extension ofthe definitions of transpose and matrix multiplication to include vectors aseither or both factors. In this scheme, we follow the convention that a vectorcorresponds to a column; that is, if x is a vector and A is a matrix, Ax orxTA may be well-defined, but neither xA nor AxT would represent anything,except in the case when all dimensions are 1. (In some computer systems formatrix algebra, these conventions are not enforced; see, for example the Rcode in Figure 12.4 on page 491.) The alternative notation xTy we introducedearlier for the dot product or inner product, 〈x, y〉, of the vectors x and y isconsistent with this paradigm.

Vectors and matrices are fundamentally different kinds of mathematicalobjects. In general, it is not relevant to say that a vector is a “column” or

3.2 Multiplication of Matrices 81

a “row”; it is merely a one-dimensional (or rank 1) object. We will continueto write vectors as x = (x1, . . . , xn), but this does not imply that the vectoris a “row vector”. Matrices with just one row or one column are differentobjects from vectors. We represent a matrix with one row in a form such asY = [y11 . . . y1n], and we represent a matrix with one column in a form such

as Z =

z11

...zm1

or as Z = [z11 . . . zm1]

T.

(Compare the notation in equations (1.1) and (1.2) on page 4.)

The Matrix/Vector Product as a Linear Combination

If we represent the vectors formed by the columns of an n × m matrix Aas a1, . . . , am, the matrix/vector product Ax is a linear combination of thesecolumns of A:

Ax =

m∑

i=1

xiai. (3.63)

(Here, each xi is a scalar, and each ai is a vector.)Given the equation Ax = b, we have b ∈ span(A); that is, the n-vector b

is in the k-dimensional column space of A, where k ≤ m.

3.2.7 Outer Products

The outer product of the vectors x and y is the matrix

xyT. (3.64)

Note that the definition of the outer product does not require the vectors tobe of equal length. Note also that while the inner product is commutative,the outer product is not commutative (although it does have the propertyxyT = (yxT)T).

A very common outer product is of a vector with itself:

xxT.

The outer product of a vector with itself is obviously a symmetric matrix.We should again note some subtleties of differences in the types of objects

that result from operations. If A and B are matrices conformable for theoperation, the product ATB is a matrix even if both A and B are n × 1 andso the result is 1 × 1. For the vectors x and y and matrix C, however, xTyand xTCy are scalars; hence, the dot product and a quadratic form are notthe same as the result of a matrix multiplication. The dot product is a scalar,and the result of a matrix multiplication is a matrix. The outer product ofvectors is a matrix, even if both vectors have only one element. Nevertheless,as we have mentioned before, we will treat a one by one matrix or a vectorwith only one element as a scalar whenever it is convenient to do so.

82 3 Basic Properties of Matrices

3.2.8 Bilinear and Quadratic Forms; Definiteness

A variation of the vector dot product, xTAy, is called a bilinear form, andthe special bilinear form xTAx is called a quadratic form. Although in thedefinition of quadratic form we do not require A to be symmetric— becausefor a given value of x and a given value of the quadratic form xTAx there is aunique symmetric matrix As such that xTAsx = xTAx —we generally workonly with symmetric matrices in dealing with quadratic forms. (The matrixAs is 1

2(A + AT); see Exercise 3.3.) Quadratic forms correspond to sums of

squares and hence play an important role in statistical applications.

Nonnegative Definite and Positive Definite Matrices

A symmetric matrix A such that for any (conformable and real) vector x thequadratic form xTAx is nonnegative, that is,

xTAx ≥ 0, (3.65)

is called a nonnegative definite matrix. We denote the fact that A is nonneg-ative definite by

A � 0.

(Note that we consider 0n×n to be nonnegative definite.)A symmetric matrix A such that for any (conformable) vector x 6= 0 the

quadratic formxTAx > 0 (3.66)

is called a positive definite matrix. We denote the fact that A is positivedefinite by

A � 0.

(Recall that A ≥ 0 and A > 0 mean, respectively, that all elements of A arenonnegative and positive.) When A and B are symmetric matrices of the sameorder, we write A � B to mean A − B � 0 and A � B to mean A − B � 0.Nonnegative and positive definite matrices are very important in applications.We will encounter them from time to time in this chapter, and then we willdiscuss more of their properties in Section 8.3.

In this book we use the terms “nonnegative definite” and “positive defi-nite” only for symmetric matrices. In other literature, these terms may be usedmore generally; that is, for any (square) matrix that satisfies (3.65) or (3.66).

The Trace of Inner and Outer Products

The invariance of the trace to permutations of the factors in a product (equa-tion (3.58)) is particularly useful in working with bilinear and quadratic forms.Let A be an n×m matrix, x be an n-vector, and y be an m-vector. Because

3.2 Multiplication of Matrices 83

the bilinear form is a scalar (or a 1×1 matrix), and because of the invariance,we have the very useful fact

xTAy = tr(xTAy)

= tr(AyxT). (3.67)

A common instance is when A is square and x = y. We have for the quadraticform the equality

xTAx = tr(AxxT). (3.68)

In equation (3.68), if A is the identity I, we have that the inner product ofa vector with itself is the trace of the outer product of the vector with itself,that is,

xTx = tr(xxT). (3.69)

Also, by letting A be the identity in equation (3.68), we have an alternativeway of showing that for a given vector x and any scalar a, the norm ‖x− a‖is minimized when a = x:

(x− a)T(x − a) = tr(xcxTc ) + n(a− x)2. (3.70)

(Here, “x” denotes the mean of the elements in x. Compare this with equa-tion (2.61) on page 44.)

3.2.9 Anisometric Spaces

In Section 2.1, we considered various properties of vectors that depend onthe inner product, such as orthogonality of two vectors, norms of a vector,angles between two vectors, and distances between two vectors. All of theseproperties and measures are invariant to the orientation of the vectors; thespace is isometric with respect to a Cartesian coordinate system. Noting thatthe inner product is the bilinear form xTIy, we have a heuristic generalizationto an anisometric space. Suppose, for example, that the scales of the coordi-nates differ; say, a given distance along one axis in the natural units of theaxis is equivalent (in some sense depending on the application) to twice thatdistance along another axis, again measured in the natural units of the axis.The properties derived from the inner product, such as a norm and a metric,may correspond to the application better if we use a bilinear form in whichthe matrix reflects the different effective distances along the coordinate axes.A diagonal matrix whose entries have relative values corresponding to theinverses of the relative scales of the axes may be more useful. Instead of xTy,we may use xTDy, where D is this diagonal matrix.

Rather than differences in scales being just in the directions of the co-ordinate axes, more generally we may think of anisometries being measuredby general (but perhaps symmetric) matrices. (The covariance and correlationmatrices defined on page 316 come to mind. Any such matrix to be used in this

84 3 Basic Properties of Matrices

context should be positive definite because we will generalize the dot prod-uct, which is necessarily nonnegative, in terms of a quadratic form.) A bilinearform xTAy may correspond more closely to the properties of the applicationthan the standard inner product.

We define orthogonality of two vectors x and y with respect to A by

xTAy = 0. (3.71)

In this case, we say x and y are A-conjugate.The L2 norm of a vector is the square root of the quadratic form of the

vector with respect to the identity matrix. A generalization of the L2 vectornorm, called an elliptic norm or a conjugate norm, is defined for the vectorx as the square root of the quadratic form xTAx for any symmetric positivedefinite matrix A. It is sometimes denoted by ‖x‖A:

‖x‖A =√

xTAx. (3.72)

It is easy to see that‖x‖A

satisfies the definition of a norm given on page 24. If A is a diagonal matrixwith elements wi ≥ 0, the elliptic norm is the weighted L2 norm of equa-tion (2.31).

The elliptic norm yields an elliptic metric in the usual way of defining ametric in terms of a norm. The distance between the vectors x and y withrespect to A is

√(x− y)TA(x− y). It is easy to see that this satisfies the

definition of a metric given on page 30.A metric that is widely useful in statistical applications is the Mahalanobis

distance, which uses a covariance matrix as the scale for a given space. (Thesample covariance matrix is defined in equation (8.70) on page 316.) If S isthe covariance matrix, the Mahalanobis distance, with respect to that matrix,between the vectors x and y is

√(x− y)TS−1(x− y). (3.73)

3.2.10 Other Kinds of Matrix Multiplication

The most common kind of product of two matrices is the Cayley product,and when we speak of matrix multiplication without qualification, we meanthe Cayley product. Three other types of matrix multiplication that are use-ful are Hadamard multiplication, Kronecker multiplication, and inner productmultiplication.

The Hadamard Product

Hadamard multiplication is defined for matrices of the same shape as the mul-tiplication of each element of one matrix by the corresponding element of the

3.2 Multiplication of Matrices 85

other matrix. Hadamard multiplication immediately inherits the commutativ-ity, associativity, and distribution over addition of the ordinary multiplicationof the underlying field of scalars. Hadamard multiplication is also called arraymultiplication and element-wise multiplication. Hadamard matrix multiplica-tion is a mapping

IRn×m × IRn×m 7→ IRn×m.

The identity for Hadamard multiplication is the matrix of appropriateshape whose elements are all 1s.

The Kronecker Product

Kronecker multiplication, denoted by ⊗, is defined for any two matrices An×m

and Bp×q as

A⊗B =

a11B . . . a1mB... . . .

...an1B . . . anmB

.

The Kronecker product of A and B is np × mq; that is, Kronecker matrixmultiplication is a mapping

IRn×m × IRp×q 7→ IRnp×mq.

The Kronecker product is also called the “right direct product” or justdirect product. (A left direct product is a Kronecker product with the factorsreversed. In some of the earlier literature, “Kronecker product” was used tomean a left direct product.) Note the similarity of the Kronecker product ofmatrices with the direct product of sets, defined on page 5, in the sense thatthe result is formed from ordered pairs of elements from the two operands.

Kronecker multiplication is not commutative, but it is associative and itis distributive over addition, as we will see below. (Again, this parallels thedirect product of sets.)

The identity for Kronecker multiplication is the 1 × 1 matrix with theelement 1; that is, it is the same as the scalar 1.

We can understand the properties of the Kronecker product by expressingthe (i, j) element of A⊗ B in terms of the elements of A and B,

(A⊗ B)i,j = A[i/p]+1, [j/q]+1Bi−p[i/p], j−q[i/q], (3.74)

where [·] is the greatest integer function.Some additional properties of Kronecker products that are immediate re-

sults of the definition are, assuming the matrices are conformable for theindicated operations,

86 3 Basic Properties of Matrices

(aA) ⊗ (bB) = ab(A ⊗B)

= (abA) ⊗B

= A ⊗ (abB), for scalars a, b, (3.75)

(A + B) ⊗ (C) = A ⊗C + B ⊗ C, (3.76)

(A⊗ B) ⊗C = A ⊗ (B ⊗ C), (3.77)

(A⊗ B)T = AT ⊗ BT, (3.78)

(A ⊗B)(C ⊗D) = AC ⊗ BD. (3.79)

These properties are all easy to see by using equation (3.74) to express the(i, j) element of the matrix on either side of the equation, taking into accountthe size of the matrices involved. For example, in the first equation, if A isn×m and B is p× q, the (i, j) element on the left-hand side is

aA[i/p]+1, [j/q]+1bBi−p[i/p], j−q[i/q]

and that on the right-hand side is

abA[i/p]+1, [j/q]+1Bi−p[i/p], j−q[i/q].

They are all this easy! Hence, they are Exercise 3.6.The determinant of the Kronecker product of two square matrices An×n

and Bm×m has a simple relationship to the determinants of the individualmatrices:

det(A ⊗B) = det(A)mdet(B)n . (3.80)

The proof of this, like many facts about determinants, is straightforward butinvolves tedious manipulation of cofactors. The manipulations in this case canbe facilitated by using the vec-permutation matrix. See Harville (1997) for adetailed formal proof.

Another property of the Kronecker product of square matrices is

tr(A⊗ B) = tr(A)tr(B). (3.81)

This is true because the trace of the product is merely the sum of all possibleproducts of the diagonal elements of the individual matrices.

The Kronecker product and the vec function often find uses in the sameapplication. For example, an n×m normal random matrix X with parametersM , Σ, and Ψ can be expressed in terms of an ordinary np-variate normalrandom variable Y = vec(X) with parameters vec(M) and Σ⊗Ψ . (We discussmatrix random variables briefly on page 185. For a fuller discussion, the readeris referred to a text on matrix random variables such as Carmeli, 1983.)

3.2 Multiplication of Matrices 87

A relationship between the vec function and Kronecker multiplication is

vec(ABC) = (CT ⊗A)vec(B) (3.82)

for matrices A, B, and C that are conformable for the multiplication indicated.

The Inner Product of Matrices

A product of two matrices of the same shape is defined as the sum of thedot products of the vectors formed from the columns of one matrix withvectors formed from the corresponding columns of the other matrix; that is,if a1, . . . , am are the columns of A and b1, . . . , bm are the columns of B, thenthe inner product of A and B, denoted 〈A, B〉, is

〈A, B〉 =

m∑

j=1

aTj bj. (3.83)

Similarly as for vectors (page 23), the inner product is sometimes called a “dotproduct”, and the notation A ·B is sometimes used to denote the matrix innerproduct. (I generally try to avoid use of the term dot product for matricesbecause the term may be used differently by different people. In Matlab, forexample, “dot product”, implemented in the dot function, can refer either to1×m matrix consisting of the individual terms in the sum in equation (3.83),or to the n×1 matrix consisting of the the dot products of the vectors formedfrom the rows of A and B. In the NumPy linear algebra package, the dot

function implements Cayley multiplication! This is probably because someoneworking with Python realized the obvious fact that the defining equation ofCayley multiplication, equation (3.37) on page 70, is actually the dot productof the vector formed from the elements in the ith row in the first matrix andthe vector formed from the elements in the jth column in the first matrix.)

For conformable matrices A, B, and C, we can easily confirm that thisproduct satisfies the general properties of an inner product listed on page 23: