180701 DS IMP Questions and Solution - 2015


180701 – Distributed System IMP Questions

Prepared by: Dipak Ramoliya, Darshan Institute of Engineering & Technology, Rajkot

Darshan Institute of Engineering and Technology

Subject: Distributed System (180701)

Question Bank - 2015

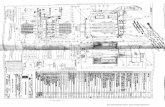

| Sr. | Unit | Question | Marks in past exams (Winter 14, Summer 14, Winter 13, Winter 12) | Total |
|---|---|---|---|---|
| 1 | 7 | What is process migration? List the steps for it. Explain any one in detail. What is the advantage of process migration? | 7, 4, 7, 7 | 25 |
| 2 | 3 | What is ordered message delivery? Discuss different types of message ordering. OR What is ordered message delivery? Compare the various ordering semantics for message-passing. Explain how each of these semantics is implemented. | 7, 7, 7 | 21 |
| 3 | 8 | What is consistency? Discuss the various consistency models used in DSM system. Explain any one. | 7, 7, 7 | 21 |
| 4 | 7 | What are the various issues while designing load balancing algorithm? Explain any one in detail. | 7, 7, 7 | 21 |
| 5 | 4 | What is RMI? What are the main features of Java RMI? Discuss the various components and the process of RMI execution. OR What is the significance of RMI in distributed systems? Explain the process of RMI execution. | 7, 7, 7 | 21 |
| 6 | 5 | Enumerate the various issues in clock synchronization. Classify the clock synchronization algorithms and explain Berkeley algorithm with an example. | 7, 7, 7 | 21 |
| 7 | 5 | What is a deadlock? List the four necessary and sufficient conditions for a deadlock to occur. And discuss various deadlock prevention strategies. | 7, 4, 7 | 18 |
| 8 | 9 | What is a name server? What is namespace? Explain the name resolution. | 7, 8 | 15 |
| 9 | 2 | Why are network system protocols unsuitable for Distributed Systems? Explain any one communication protocol for Distributed System. OR Why are traditional network protocols not suitable for distributed systems? Explain VMTP protocol used for distributed system. | 7, 7 | 14 |
| 10 | 3 | What are the desirable features of good Message Passing system? Explain each briefly. | 7, 7 | 14 |
| 11 | 8 | Define thrashing in DSM. Also explain methods for solving thrashing problem in DSM. | 7, 7 | 14 |
| 12 | 5 | Why is mutual exclusion more complex in distributed systems? Categorize and compare mutual exclusion algorithms. | 7, 7 | 14 |


1) What is process migration? List the steps for it. Explain any one in detail. What is the advantage of process migration?

Process Migration:

Process migration is the relocation of a process from its current location to another node.

It may be migrated either before it starts executing on its source node or during the course of its execution.

The former is known as non-preemptive process migration and,

The latter is known as preemptive process migration.

Preemptive process migration is costlier than non-preemptive process migration.

The steps to be taken care of during process migration are as follows:

Selection of process that should be migrated.

Selection of the destination node to which the selected process should be migrated.

Actual transfer of the selected process to the destination node.

Once we identify the process to be migrated and where it is migrated, the next step is to carry out process migration.

Figure: Process Migration Mechanism

The major steps involved in process migration are:

Freezing process on the source node

Starting process on the destination node

Transporting the process address space to the destination node

Forwarding the messages addressed to the migrated process

Advantages of process migration

Balancing the load:

o It reduces the average response time of processes.

o It speeds up individual jobs.

o It gains higher throughput.

Moving the process closer to the resources it is using:

o It utilizes resources effectively.

o It reduces network traffic.

A copy of a process can be moved (replicated) to another node to improve system reliability.

A process dealing with sensitive data may be moved to a secure machine (or just to a machine holding the data) to improve security.

Freezing and Restarting of process

Freezing blocks the execution of the migrant process and postpones all external communication:

o Immediate blocking - when the process is not executing a system call

o Postponed blocking - when the process is executing certain system calls

Waits for I/O operations:

o Wait for fast I/O to complete - disk I/O

o Arrange to gracefully resume slow I/O operations at the destination - terminal I/O, network communication

Takes a “snapshot” of the process state:

o Relocatable information - register contents, program counter, etc.

o Open files information - names, identifiers, access modes, current positions of file pointers, etc.

Transfers the process state to the destination.

Restarts the process on destination node.

2) What is ordered message delivery? Discuss different types of message ordering. OR What is ordered message delivery? Compare the various ordering semantics for message-passing. Explain how each of these semantics is implemented.

Message Ordering

In many-to-one communication, multiple senders send messages to a single receiver.

A selective receiver specifies a unique sender, i.e. the message transfer takes place only from that sender.

A non-selective receiver specifies a set of senders from which the message transfer can take place.

Ordered message delivery ensures that messages are delivered in an order that is acceptable to the application.

The various semantics for the Message ordering are as below.

Figure: Types of Message Ordering

To explain the various ordering mechanisms, we use the following notation:

S for Sender Process

R for Receiver Process

T for Time stamp included in the message


M for Message

Time increases from top to bottom along the Y-axis.

Absolute Ordering

Absolute ordering ensures that all messages are delivered to all receiver processes in the exact order in which they were sent.

This semantic can be implemented by using a global timestamp embedded in the message as an ID.

Each machine in the group has its clock synchronized with the system clock.

The receiver machine kernel saves all messages from one process into a queue.

Periodically, the messages from the receiver buffer queue are delivered to the receiver process. Other messages remain in the queue for later access.

Figure: Absolute Ordering

Here, S1 sends a message to R1 and R2 at time t1, followed by S2 sending a message to R1 and R2 at time t2.

The two timestamps t1 and t2 attached to the messages are such that t1 < t2.

Based on absolute ordering semantics, R1 and R2 receive the messages m1 and m2 and order them as m1 followed by m2.
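The queue-based implementation described above can be sketched as follows. This is only an illustration, assuming timestamps come from perfectly synchronized clocks; the class name `AbsoluteOrderReceiver` and the `deliver_until` cutoff are hypothetical and do not come from any particular system.

```python
import heapq


class AbsoluteOrderReceiver:
    """Buffers incoming messages and delivers them in global-timestamp order."""

    def __init__(self):
        # Min-heap of (timestamp, sender, message); smallest timestamp first.
        self._queue = []

    def receive(self, timestamp, sender, message):
        """Called by the kernel when a message arrives from the network."""
        heapq.heappush(self._queue, (timestamp, sender, message))

    def deliver_until(self, cutoff):
        """Deliver every buffered message with timestamp <= cutoff, oldest first.

        Messages with later timestamps stay in the queue for later delivery.
        """
        delivered = []
        while self._queue and self._queue[0][0] <= cutoff:
            _, _, message = heapq.heappop(self._queue)
            delivered.append(message)
        return delivered


r1, r2 = AbsoluteOrderReceiver(), AbsoluteOrderReceiver()
# The network hands m2 to R2 before m1; the timestamps t1=1 < t2=2 restore the order.
r1.receive(1, "S1", "m1")
r1.receive(2, "S2", "m2")
r2.receive(2, "S2", "m2")
r2.receive(1, "S1", "m1")
order_r1 = r1.deliver_until(cutoff=2)
order_r2 = r2.deliver_until(cutoff=2)
```

Both receivers end up delivering m1 before m2, regardless of arrival order.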

Consistent Ordering

The absolute ordering technique needs a globally synchronized clock, which is practically not feasible.

S1 sends a message to R1 and R2 at time t1, followed by S2 sending a message to R1 and R2 at time t2.

The two timestamps, t1 and t2, attached to the messages are such that t1 < t2.

Under consistent ordering semantics, R1 and R2 both receive the messages in the same order: either m1 followed by m2 at both receivers, or m2 followed by m1 at both receivers.

Figure: Consistent Ordering


The ABCAST protocol of the ISIS system is a distributed algorithm, which implements the consistent-ordering semantics.

ABCAST implements the sequencer method as given below:

The sender uses an incremental counter to assign a sequence number to the message and multicasts it.

Each member receives the message and returns a proposed sequence number to the sender.

Member i calculates its proposed sequence number from the function: max(Fmax, Pmax) + 1 + i/N

Where Fmax is the largest final sequence number mutually agreed upon by the group (Noted by all members).

Pmax is the largest proposed sequence number, and N is the total number of members of the multicast group.

The sender picks the largest proposed sequence number from all the messages received and sends it as the final sequence number (commit message) to all member of the group.

The i/N term, which each member includes when calculating its proposed sequence number, makes every proposed sequence number unique.

Once each member receives the commit message, it attaches the final sequence number to the message.

Application programs now receive committed messages with the final sequence numbers in the correct order.
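The sequence-number negotiation above can be sketched as below. It is a minimal illustration, assuming members are numbered 0 to N-1 and that each member's Fmax and Pmax values are already known; the function names and the sample values are hypothetical.

```python
def proposed_sequence_number(i, n, f_max, p_max):
    """Proposal of member i: max(Fmax, Pmax) + 1 + i/N."""
    return max(f_max, p_max) + 1 + i / n


def negotiate_final_sequence_number(members, n):
    """Collect every member's proposal; the sender commits the largest one.

    `members` maps member id -> (Fmax, Pmax) as seen by that member.
    Returns (final sequence number, list of proposals ordered by member id).
    """
    proposals = [
        proposed_sequence_number(i, n, f_max, p_max)
        for i, (f_max, p_max) in sorted(members.items())
    ]
    return max(proposals), proposals


# Hypothetical group of N = 4 members that all agree on Fmax = 3.
members = {0: (3, 3), 1: (3, 5), 2: (3, 4), 3: (3, 3)}
final, proposals = negotiate_final_sequence_number(members, n=4)
```

Because i/N is different for every member and always less than 1, two members can never propose the same number, even when their Fmax and Pmax values are identical (members 0 and 3 in the sample).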

Causal Ordering

Causal ordering gives better performance than the other techniques.

This semantic ensures that the two messages are delivered to all receivers in correct order if they are causally related to each other.

Figure: Causal Ordering

S1 sends a message to R1, R2, and R3 at time t1 followed by S2 sending the message to R1, R2, and R3 at time t2.

The two timestamps, t1 and t2, attached to the messages are such that t1< t2.

After receiving message m1, R1 sends a message m3 to R2 and R3.

Based on causal ordering semantics, m1 and m3 are causally related to each other.

Hence, they need to be ordered at R1 and R2, but there is no ordering required for messages at R3.


3) What is consistency? Discuss the various consistency models used in DSM system. Explain any one.

A memory consistency model defines how soon updates to shared memory become visible to all the processes running on different machines.

Consistency affects the ease with which correct and efficient shared memory programs can be written.

Several consistency models are proposed in literature, such as strict, sequential, causal, PRAM, processor, weak, release, and entry consistency models.

To explain the various consistency models, we have used a special notation. Processes are represented as P1, P2 etc.

The symbols used are W(x) a and R(y) b which means write to x with value a and read from y which returns a value b. The initial values of all variables are assumed to be 0.

Figure: Classification of Consistency model

Strict consistency

Strict consistency is the strongest form of consistency model.

A shared memory system supports strict consistency model if the value read is the last value written irrespective of the location of processes performing the read or write operations.

When the memory is strictly consistent, all writes are instantaneously visible to all processes and an absolute global time order is maintained.

For example, P1 writes the value 5 to location x. Later, when P2 reads x, it sees the value 5. This implies strictly consistent memory.

Figure: Strict Consistency Model


Sequential consistency

A shared memory system is sequentially consistent when all processors observe the same ordering of reads and writes, with the operations of each individual processor appearing in the sequence in which that processor issued them.

This consistency model can be implemented on a DSM that replicates writable pages by ensuring that no memory operation is started until the earlier ones have been completed.

The memory is sequentially consistent even though the first read done by P2 returns the initial value 0 instead of the new value 5.

This consistency model does not guarantee that a read returns the value just written, but it ensures that all processes see the references in the same order.

Figure: Sequential consistency

Causal consistency

The causal consistency model represents a weakening of sequential consistency for better concurrency.

A causally related operation is the one which has influenced another operation.

If two processes simultaneously write two variables, they are not causally related.

Suppose process P1 writes a variable x and P2 reads x and then writes y.

The operations performed by P2, the read of x and the write of y, are potentially causally related, because the value written to y may depend on the value read from x.

The writes W(x)2 and W(x)4 are concurrent, and hence it is not necessary that all processes see them in the same order.

Figure: Causal Consistency

PRAM consistency

Pipelined Random Access Memory (PRAM) consistency model provides weaker consistency semantics as compared to the consistency models described so far.

This consistency model is simple, easy to implement, and provides good performance.

The sequence of events shown is allowed with PRAM consistency but not with other stronger models like strict, sequential, and causal.

Figure: PRAM Consistency

Processor consistency

Processor consistency model adheres to the PRAM consistency, but with a constraint on memory coherence. Memory coherence implies that, for any memory location, all processes agree on the same order of all write operations to that location.

There is no need to show the change in memory done by every memory write operation to other processes.

Weak consistency

All DSM systems that support the weak consistency model use a special variable called the synchronization variable.

The weak consistency model requires that memory be made consistent only on synchronization accesses, which are divided into 'acquire' and 'release' pairs.

The three properties of the weak consistency model are:

o Accesses to synchronization variables are sequentially consistent.

o Access to a synchronization variable is allowed only when all previous writes have completed everywhere.

o No read or write data access is allowed until all previous accesses to synchronization variables have been performed.

Release consistency (RC)

The RC mechanism tells the system whether a process is entering or exiting a critical section, so the system can decide which of the two operations to perform when a synchronization variable is accessed by a process.

Release consistency can also be implemented using a synchronization mechanism called a barrier, which defines the end of execution of a group of concurrently executing processes.

When all processes reach the barrier, the shared variables are synchronized and then all processes resume execution.

The acquire and release operations of different critical sections or barriers can occur independently of each other.

Entry consistency (EC)

EC requires the programmer to use acquire and release at the start and end of each critical section, respectively.

EC is similar to lazy release consistency (LRC) but is more relaxed; shared data is explicitly associated with synchronization primitives and is made consistent when such an operation is performed.


Scope consistency (ScC)

ScC provides high performance like EC and good programmability like LRC.

In ScC, a scope is a limited view of memory with respect to which memory-references are performed.

All critical sections guarded by the same lock comprise a scope.

4) What are the various issues while designing load balancing algorithm? Explain any one in detail.

There are various issues involved in designing a good load-balancing algorithm. These are as follows:

Load estimation policies

Process transfer policies

State information exchange policies

Location policies

Priority assignment policies

Migration limitation policies

Load estimation policies

The various methods adopted for load estimation are based on the number of processes running on the machine and capturing CPU busy-time.

The load estimation policies are classified as follows:

Figure: Load estimation policies (measuring the number of processes running on a machine; capturing CPU busy-time)

Measuring the number of processes running on a machine

o One way to measure the load is to count the number of processes running on the machine.

o However, a machine can have many processes running, such as mail, news, window managers, etc.

o Load can be taken as equal to the number of processes running or ready to run.

o Every running or runnable process puts some load on the CPU, even when it is a background process.

o For example, some daemons wake up periodically to see whether a specific task has to be executed; otherwise they go back to sleep. These daemons put only a small load on the system.

Capturing CPU busy-time

o CPU utilization can be measured by allowing a timer to interrupt the machine periodically to observe the CPU state and find the fraction of idle time.

o Timer interrupts are disabled while the kernel is executing critical code.

o If the kernel was blocking the last active process, the timer will not interrupt until the kernel has finished the operation and entered an idle loop.

o In this case, the main problem is the underestimation of CPU usage.

o In practice, we also need to account for the overheads of the processor allocation algorithm, such as measuring CPU load, collecting this information, and moving processes around.
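The two load estimates described above can be sketched as below. This is only an illustration: the process state names and the representation of timer samples as booleans are assumptions made for the example.

```python
def load_by_process_count(process_states):
    """Load = number of processes that are running or ready to run."""
    return sum(1 for state in process_states if state in ("running", "ready"))


def cpu_utilization(tick_samples):
    """Estimate utilization from periodic timer samples.

    Each sample is True if the CPU was busy when the timer fired.
    Utilization is 1 minus the fraction of idle samples.
    """
    samples = list(tick_samples)
    idle = sum(1 for busy in samples if not busy)
    return 1 - idle / len(samples)


# A sleeping daemon does not count towards the process-count load.
count_load = load_by_process_count(["running", "ready", "sleeping", "ready"])
# Three busy samples out of four.
utilization = cpu_utilization([True, True, False, True])
```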

Process Transfer policy

Process transfer policies are used to decide the time when a process should be transferred.

It is based on a threshold, which is a limiting value and it decides whether a new process ready for execution should be executed locally or transferred to a lightly loaded node.

The threshold level can be decided statically or dynamically and can be either a single or a two level threshold policy.

Figure: Process Transfer Policies

Single level threshold

o The threshold policy evolved as a single threshold value.

o A node accepts a new process as long as its load is below the threshold; otherwise it rejects the process as well as the request for remote execution.

o The use of a single threshold value may lead to useless process transfers, leading to instability in scheduling decisions.

Two level threshold policy

o A two level threshold policy is preferred to avoid instability.

o It has two threshold levels: the high mark and the low mark.

o The load state of a node can be divided into three regions:

o Overloaded (above the high mark)

o Normal (above the low mark and below the high mark)

o Under-loaded (below the low mark)
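The three load regions of the two level threshold policy can be expressed as a small classifier. This is a sketch under stated assumptions: the function names are hypothetical, and the rule that only an overloaded node transfers a new local process is one common reading of the policy, not something stated in the text above.

```python
def load_state(load, low_mark, high_mark):
    """Classify a node's load into one of the three regions."""
    if load > high_mark:
        return "overloaded"
    if load < low_mark:
        return "under-loaded"
    return "normal"


def should_transfer_new_process(load, low_mark, high_mark):
    """Assumed rule: only an overloaded node sends a new local process away."""
    return load_state(load, low_mark, high_mark) == "overloaded"
```

With a low mark of 3 and a high mark of 8, a node with load 5 is in the normal region and neither sends away nor attracts processes, which is what damps the instability of the single threshold policy.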

Location policies

The location policy is used to select the destination node where the process can be executed.

The location policies are classified as below.

Threshold policy

o The destination node is selected at random and a check is made to verify whether the remote process transfer would overload that node.

o If not, the process transfer is carried out; else another node is selected at random and probed.

Shortest location policy

o Distinct nodes are chosen at random and each of these nodes is polled to check for load.

o The node with the lowest load value is selected as the destination node.

o Once selected, the destination node has to execute the process irrespective of its state at the time the process arrives.

Bidding location policy

o Each node is assigned two roles, namely the manager and the contractor.

o A manager is a node having a process which needs a location to execute, and a contractor is a node which can accept remote processes.

o The same node can take both roles, and none of the nodes can be only a manager or only a contractor.

Pairing policy

o The pairing policy focuses on load balancing between a pair of nodes.

o Two nodes which have a large difference in load are paired together temporarily.

o Load balancing is carried out between the nodes belonging to the same pair by migrating processes from the heavily loaded node to the lightly loaded node.
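The threshold and shortest location policies can be sketched as below. This is a minimal illustration, assuming node loads are available in a dictionary and that probing a node simply reads its load; the function names, the `probe_limit` and `poll_limit` parameters, and the fallback of returning `None` are assumptions.

```python
import random


def threshold_location(loads, threshold, probe_limit, rng):
    """Probe random nodes one at a time; pick the first that would not be overloaded.

    A node qualifies if accepting one more process keeps its load within the threshold.
    Returns None when no probed node qualifies (assumed: the process then runs locally).
    """
    for node in rng.sample(sorted(loads), k=min(probe_limit, len(loads))):
        if loads[node] + 1 <= threshold:
            return node
    return None


def shortest_location(loads, poll_limit, rng):
    """Poll `poll_limit` distinct random nodes and return the least loaded one."""
    polled = rng.sample(sorted(loads), k=min(poll_limit, len(loads)))
    return min(polled, key=lambda node: loads[node])


loads = {"A": 7, "B": 2, "C": 5, "D": 9}
rng = random.Random(0)
chosen_by_threshold = threshold_location(loads, threshold=3, probe_limit=4, rng=rng)
chosen_by_shortest = shortest_location(loads, poll_limit=4, rng=rng)
```

When every node is probed, both policies pick B here. With a smaller poll limit the shortest policy may miss the globally least loaded node, which is the price paid for fewer polling messages.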

Priority assignment policies

For process migration in a distributed system, a priority assignment policy for scheduling local and remote processes at a node is necessary.

Selfish priority assignment policy

o Local processes are given higher priority than remote processes.

o Of the three policies, the selfish priority assignment policy gives the worst response-time performance for remote processes, but it is the best for local processes.

Altruistic priority assignment policy

o The converse of the selfish policy: remote processes are given higher priority than local processes.

o This policy has the best response-time performance. Under this policy, remote processes incur lower delay than local processes, even though local processes are the principal workload at each node and remote processes are a secondary workload.

Intermediate priority assignment policy

o The priority of a process depends on the number of local processes and the number of remote processes at a specific node.

o Local processes are given higher priority if the number of local processes is greater than the number of remote processes; otherwise remote processes are given higher priority.

o The performance of the intermediate priority assignment policy falls between that of the selfish and altruistic priority assignment policies.
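The three priority rules can be summarized in one small function. This is a sketch only; the function name and the string labels are hypothetical.

```python
def higher_priority_class(policy, n_local, n_remote):
    """Return which class of processes ('local' or 'remote') gets higher priority."""
    if policy == "selfish":
        return "local"
    if policy == "altruistic":
        return "remote"
    if policy == "intermediate":
        # Local processes win only when they outnumber the remote ones.
        return "local" if n_local > n_remote else "remote"
    raise ValueError(f"unknown policy: {policy}")
```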

Migration limitation policies

In a distributed system supporting process migration, it is important to decide the number of times a process is allowed to migrate.

Uncontrolled

o A remote process arriving at a node is treated as a local process.

o The process can be migrated any number of times, but this may result in system instability.

Controlled

o Local and remote processes are treated differently.

o A migration count is used to limit the number of times a process can be migrated, with the upper limit fixed at 1, so that a process can be migrated only once.

o The upper limit can be set to a value greater than 1 for long processes, which may be allowed to migrate more times.
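A migration count can be sketched as below. The `Process` class and `try_migrate` function are hypothetical names for illustration; passing `limit=None` is an assumed way of modelling the uncontrolled policy.

```python
from dataclasses import dataclass


@dataclass
class Process:
    pid: int
    migrations: int = 0  # how many times this process has been migrated so far


def try_migrate(process, limit=1):
    """Migrate the process if its migration count is below the limit.

    limit=1 models the controlled policy; limit=None models the uncontrolled policy.
    Returns True if the migration is allowed.
    """
    if limit is not None and process.migrations >= limit:
        return False
    process.migrations += 1
    return True
```

A long-running process could be given a larger limit, for example `try_migrate(p, limit=3)`.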

5) What is RMI? What are the main features of Java RMI? Discuss the various components and the process of RMI execution. OR What is the significance of RMI in distributed systems? Explain the process of RMI execution.

RMI

In Remote Method Invocation (RMI), the server manages objects and clients invoke the methods of those objects remotely.

The RMI technique sends the request as a message to the server, which executes the method of the object and returns the result message to the client.

Method invocations between objects in the same process are called local invocations, while those between objects in different processes are called remote invocations.

Two fundamental concepts: Remote Object Reference and Remote Interface

Each process contains objects. Those that can receive remote invocations are called remote objects (B, F); the others can receive only local invocations.

An object needs to know the remote object reference of an object in another process in order to invoke its methods. Such invocations are called remote method invocations.

Every remote object has a remote interface that specifies which of its methods can be invoked remotely.

Remote Object References

Used for accessing the remote object

An identifier that is valid throughout a distributed system

Can be passed as arguments

Remote Interfaces

Specify which methods can be invoked remotely

Give the name, arguments, and return type of each method

An Interface Definition Language (IDL) is used for defining remote interfaces

Main Features of Java RMI

Java RMI has several advantages over traditional RPC systems because it is part of Java's object oriented approach.

Object Oriented: RMI can pass full objects as arguments and return values, not just predefined data types. This means that you can pass complex types, such as a standard Java hashtable object, as a single argument.

Mobile Behavior: RMI can move behavior (class implementations) from client to server and server to client. For example, you can define an interface for examining employee expense reports to see whether they conform to current company policy.

Design Patterns: Passing objects lets you use the full power of object oriented technology in distributed computing, such as two- and three-tier systems.

Safe and Secure: RMI uses built-in Java security mechanisms that allow your system to be safe when users download implementations.

Easy to Write/Easy to Use: RMI makes it simple to write remote Java servers and Java clients that access those servers. A remote interface is an actual Java interface. A server has roughly three lines of code to declare itself a server, and otherwise is like any other Java object. This simplicity makes it easy to write servers for full-scale distributed object systems quickly, and to rapidly bring up prototypes and early versions of software for testing and evaluation. And because RMI programs are easy to write they are also easy to maintain.

Connects to Existing/Legacy Systems: RMI interacts with existing systems through Java's native method interface JNI. Using RMI and JNI you can write your client in Java and use your existing server implementation.

Write Once, Run Anywhere: RMI is part of Java's "Write Once, Run Anywhere" approach. Any RMI based system is 100% portable to any Java Virtual Machine, as is an RMI/JDBC system. If you use RMI/JNI to interact with an existing system, the code written using JNI will compile and run with any Java virtual machine.

Distributed Garbage Collection: RMI uses its distributed garbage collection feature to collect remote server objects that are no longer referenced by any clients in the network.

Parallel Computing: RMI is multi-threaded, allowing your servers to exploit Java threads for better concurrent processing of client requests.


Process of RMI execution

Communication module: The communication modules in the client and the server processes cooperate using a request-reply (RR) protocol. The format of the request and reply messages is very similar to that of RPC messages.

Remote reference module: It is responsible for translating between local and remote object references and creating remote object references. It uses a remote object table which maps local to remote object references. This module is called by the components of RMI software for marshaling and unmarshaling remote object references. When a request message arrives, the table is used to locate the object to be invoked.

RMI software: This is the middleware layer and consists of the following:

1) Proxy:

o It makes RMI transparent to the client and forwards the message to the remote object.

o It marshals the arguments, unmarshals the result, and also sends and receives messages for the client.

o There is one proxy for each remote object for which a process holds a remote reference.

2) Dispatcher:

o A server has one dispatcher and one skeleton for each class which represents a remote object.

o The dispatcher receives the request message from the communication module, selects the appropriate method in the skeleton, and passes on the request message.

3) Skeleton:

o It implements the methods in the remote interface.

o It unmarshals the arguments in the request message and invokes the corresponding method in the remote object.

o After the invocation is complete, it marshals the result, along with any exceptions, into the reply message that is sent to the proxy's method.

An interface compiler automatically generates the classes for the proxy, dispatcher, and skeleton.

Server and Client Programs

o The server program contains the classes for the dispatchers and skeletons, together with the remote objects it supports.

o An initialization section creates and initializes at least one object hosted by the server.

o The other remote objects are created later, when requests come from the client.

o The client program contains the classes of the proxies for all the remote objects that it will invoke.

o The client looks up remote object references using a binder.

The Binder

o An object A requests the remote object reference for object B.

o The binder in a distributed system is a service which maintains a table that maps textual names to remote object references.

o Servers use this service to register their remote objects by name, and clients use it to look them up.
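The roles of the proxy, dispatcher, skeleton, remote object table, and binder can be illustrated with a small in-process simulation. This is not Java RMI and not its API: it is a language-neutral sketch in which every class name (`Binder`, `Server`, `Proxy`, `Account`) is hypothetical, JSON stands in for marshalling, and a direct function call stands in for the communication module.

```python
import json


class Binder:
    """Maps textual names to remote object references."""

    def __init__(self):
        self._table = {}

    def bind(self, name, ref):
        # Used by servers to register a remote object by name.
        self._table[name] = ref

    def lookup(self, name):
        # Used by clients to obtain a remote object reference.
        return self._table[name]


class Account:
    """A hypothetical remote object hosted by the server."""

    def __init__(self, balance):
        self.balance = balance

    def deposit(self, amount):
        self.balance += amount
        return self.balance


class Server:
    def __init__(self):
        # Remote object table: remote reference -> local object.
        self._objects = {}

    def export(self, ref, obj):
        self._objects[ref] = obj

    def handle(self, request_text):
        """Dispatcher and skeleton: unmarshal, select the method, invoke, marshal the reply."""
        request = json.loads(request_text)
        try:
            target = self._objects[request["ref"]]
            method = getattr(target, request["method"])
            reply = {"result": method(*request["args"])}
        except Exception as exc:  # exceptions travel back in the reply message
            reply = {"error": str(exc)}
        return json.dumps(reply)


class Proxy:
    """Client-side stand-in that makes the remote call look like a local one."""

    def __init__(self, ref, transport):
        self._ref = ref
        self._transport = transport  # assumed: any callable that carries a request and returns a reply

    def invoke(self, method, *args):
        request = json.dumps({"ref": self._ref, "method": method, "args": list(args)})
        reply = json.loads(self._transport(request))
        if "error" in reply:
            raise RuntimeError(reply["error"])
        return reply["result"]


server = Server()
server.export("account-1", Account(100))
binder = Binder()
binder.bind("savings", "account-1")

proxy = Proxy(binder.lookup("savings"), transport=server.handle)
new_balance = proxy.invoke("deposit", 50)
```

In a real deployment the transport would be a network connection between two processes; here `server.handle` is called directly so that the flow of a single invocation can be followed end to end.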

Figure: Locating Remote Objects


6) Enumerate the various issues in clock synchronization. Classify the clock synchronization algorithms and explain Berkeley algorithm with an example.

Various issues in clock synchronization:

Consider a distributed online reservation system in which the last available seat may get booked from multiple nodes if their local clocks are not synchronized.

Synchronized clocks enable measuring the duration of distributed activities which start on one node and terminate on another node. For example, there may be a need to calculate the time taken to transmit a message from one node to another.

As shown in the figure, an event that occurred after another event may nevertheless be assigned an earlier time.

In the figure, the newly modified ‘output.c’ will not be re-compiled by the ‘make’ program because of a slightly slower clock on the editor’s machine.

Figure: Problem with Unsynchronized clocks

For correctness, all distributed applications need the clocks on the nodes to be synchronized with each other.

Clock synchronization algorithms are broadly classified as centralized and distributed.

Centralized Algorithm

In centralized clock synchronization algorithms, one node has a real-time receiver. This node is usually called the time server node, and its clock time is regarded as correct and used as the reference time.

Goal of the algorithm is to keep the clocks of all other nodes synchronized.

1) Passive Time server Centralized Algorithm.

In this method, each node periodically sends a message to the time server.

When the time server receives the message, it quickly responds with a message ‘time = T’, where T is the current time in the clock of the time server.

Let us assume that the client node sends the request message at time T0 and receives the reply at time T1.

Since T0 and T1 are measured using the same clock, the best estimate of the time required for the propagation of the message ‘time = T’ from the time server node to the client node is (T1 - T0)/2.

When the reply is received at the client node, its clock is readjusted to T + (T1 - T0)/2.

The above estimate is not very accurate. Several methods have been proposed to obtain a better estimate, as follows:

a) In this method, it is assumed that the approximate time taken by the time server to handle the interrupt and process the request message is known, and is equal to l.

The estimate of the time taken for the propagation of the message is then (T1 - T0 - l)/2.

Therefore, in this method, when the reply is received the clock is readjusted to T + (T1 - T0 - l)/2.

b) Cristian method.

In this method several measurements of T1-T0 are made, and those measurements for which T1-T0 exceeds some threshold value are considered to be unreliable and discarded.

The average of remaining measurements is then calculated and half of the value is used as a value to be added to T.
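The estimates used by the passive time server methods can be written out as below. This is a sketch under stated assumptions: all times are plain numbers in the same unit, the function names are hypothetical, and the sample values are made up for illustration.

```python
def adjusted_time(server_time, t0, t1, handling_time=0.0):
    """New client clock value: T + (T1 - T0 - l) / 2.

    With handling_time = 0 this is the basic estimate T + (T1 - T0) / 2.
    """
    return server_time + (t1 - t0 - handling_time) / 2


def averaged_propagation_time(round_trips, threshold):
    """Cristian's refinement: drop unreliable measurements, then halve the average.

    Measurements of T1 - T0 above the threshold are discarded.
    """
    reliable = [rt for rt in round_trips if rt <= threshold]
    if not reliable:
        raise ValueError("no reliable measurement below the threshold")
    return (sum(reliable) / len(reliable)) / 2


# Request sent at T0 = 10.0, reply 'time = 100.0' received at T1 = 10.5.
basic = adjusted_time(100.0, t0=10.0, t1=10.5)
with_handling = adjusted_time(100.0, t0=10.0, t1=10.5, handling_time=0.25)
# The 4.0 measurement exceeds the threshold and is ignored.
offset = averaged_propagation_time([0.5, 0.25, 4.0, 0.75], threshold=1.0)
```

Discarding the slow round trip keeps a single congested exchange from inflating the value added to T.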

2) Active Time Server Centralized Algorithm.

A) In the active time server method, the time server periodically broadcasts its clock time (‘time = T’).

All other nodes receive the broadcast message and use the clock time in the message for correcting their own clocks.

If a broadcast message reaches a node a little late due to, say, a communication link failure, the clock of that node will be readjusted to an incorrect value.

B) Another active time server algorithm is the Berkeley algorithm.

This method avoids using readings from unreliable clocks, whose values would otherwise distort the computed time.

Here a coordinator computer is chosen as master.

The master periodically polls the slaves whose clocks are to be synchronized to the master.

The slaves send their clock values to the master.

The master observes the transmission delays and estimates their local clock times.

Once this is done, it takes a fault-tolerant average of all the times including its own, and ignores those that are far outside of the range of the rest.

Figure: Berkeley Algorithm.

The master then sends each slave the amount (positive or negative) that it should adjust its clock.

As shown in figure (a), at 3:00 the time daemon tells the other machines its time and asks for theirs.

In figure (b), they respond with how far ahead of or behind the time daemon they are.

In figure (c), the time daemon computes the average and tells each machine how to adjust its clock.
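A minimal sketch of the master's computation in the Berkeley algorithm follows. The notes do not say how "far outside the range of the rest" is decided, so the rule used here (ignore any clock further than `max_deviation` from the median) is an assumption, as are the function name and the sample clock values in the usage note.

```python
from statistics import median

def berkeley_adjustments(master_time, slave_times, max_deviation):
    """Return (average, adjustments); adjustments[0] is for the master itself.

    Times are plain numbers (for example, minutes since midnight) that the
    master has already corrected for transmission delay.
    """
    clocks = [master_time] + list(slave_times)
    reference = median(clocks)
    # Fault-tolerant average: clocks far from the rest do not contribute.
    trusted = [c for c in clocks if abs(c - reference) <= max_deviation]
    if not trusted:
        raise ValueError("no clock is close enough to the median")
    average = sum(trusted) / len(trusted)
    # Every machine, including an ignored one, is told how much to adjust.
    return average, [average - c for c in clocks]
```

For hypothetical clocks of 3:00 (master), 2:50 and 3:25, expressed as 180, 170 and 205 minutes, the average is 185 (3:05) and the adjustments are +5, +15 and -20 minutes.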

Distributed Algorithms.

Here each node of the system is equipped with a real-time receiver so that each node’s clock can be independently synchronized with real time.

Multiple real time clocks are normally used for this purpose.

1) Global Averaging Distributed Algorithms.

In this approach, the clock process at each node broadcasts its local clock time in the form of a special “resync” message when its local time equals T0+iR for some integer i.

Here T0 is a fixed time in the past agreed upon by all nodes, and R is a system parameter that depends on such factors as the total number of nodes in the system, the maximum allowable drift rate, and so on.

Each node collects the resync messages broadcast by the other nodes and, from the times at which they arrive, estimates the skew of its clock with respect to each of them.

Two commonly used algorithms are described below:

A) The simplest algorithm is to take the average of the estimated skews and use it as the correction for the local clock.

To limit the impact of faulty clocks on the average value, the estimated skew with respect to each node is compared against a threshold.

Skews greater than the threshold are set to zero before computing the average of the estimated skews.

B) Each node limits the impact of faulty clocks by first discarding the m highest and m lowest estimated skews.

It then calculates the average of the remaining skews, which is used as the correction for the local clock.
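The two averaging rules can be sketched as follows. The function names are hypothetical, and comparing the magnitude of a skew against the threshold is an assumption, since the notes do not say how negative skews are treated.

```python
def correction_with_threshold(skews, threshold):
    """Algorithm A: skews beyond the threshold count as zero in the average."""
    limited = [s if abs(s) <= threshold else 0.0 for s in skews]
    return sum(limited) / len(limited)

def correction_trimmed(skews, m):
    """Algorithm B: drop the m highest and m lowest skews, average the rest."""
    if len(skews) <= 2 * m:
        raise ValueError("need more than 2*m estimated skews")
    ordered = sorted(skews)
    remaining = ordered[m:len(ordered) - m]
    return sum(remaining) / len(remaining)
```

In both cases the returned value is the correction applied to the local clock.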

2) Localized Averaging Distributed Algorithms.

The global averaging algorithms do not scale well because they require the network to support a broadcast facility and they generate a large amount of network traffic.

In the localized averaging approach, the nodes of a distributed system are logically arranged in some kind of pattern, such as a ring or a grid.

Periodically, each node exchanges its clock time with its neighbors in the ring, grid, or other structure and then sets its clock time to the average of its own clock time and the clock times of its neighbors.
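As a rough illustration of localized averaging, the sketch below performs one exchange round on a logical ring in which each node has exactly two neighbors. The function name and the synchronous "all nodes update at once" model are simplifying assumptions.

```python
def ring_averaging_round(clocks):
    """One round on a ring: each node averages its clock with both neighbors."""
    n = len(clocks)
    return [
        # clocks[i - 1] wraps to the last node for i == 0; (i + 1) % n wraps forward.
        (clocks[i - 1] + clocks[i] + clocks[(i + 1) % n]) / 3
        for i in range(n)
    ]
```

Repeating the round narrows the spread between the largest and smallest clock values without any node having to broadcast to the whole system.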

7) What is a deadlock? List the four necessary and sufficient conditions for a deadlock to occur. And Discuss various deadlock prevention strategies.

A deadlock is a fundamental problem in distributed systems.

A process may request resources in any order, which may not be known a priori, and a process can request a resource while holding others.

If the sequence of the allocations of resources to the processes is not controlled, deadlocks can occur.

A deadlock is a state where a set of processes request resources that are held by other processes in the set.

Processes need access to resources in a reasonable order. Suppose a process holds resource A and requests resource B, while at the same time another process holds resource B and requests resource A. Both are blocked and remain so. A set of processes is said to be deadlocked if each process in the set is waiting for an event that only another process in the set can cause.

Deadlocks in distributed systems are similar to deadlocks in single processor systems, but only worse. This is how they differ:

They are harder to avoid, prevent, or even detect.

They are hard to cure when tracked down because all relevant information is scattered over many machines.

Distributed deadlocks are classified into communication deadlocks and resource deadlocks, as explained below.

Communication deadlocks

This type of deadlock occurs among a set of processes when they are blocked, waiting for messages from other processes in the set in order to start execution.

There are no messages in transit between them.

For this reason, none of the processes will ever receive a message. Hence, all processes in the set are deadlocked.

A typical example is that of processes competing for buffers for send/receive operations.

Resource deadlocks

This type of deadlock occurs when two or more processes wait permanently for resources held by each other.

Examples include exclusive access to I/O devices, files, locks, and other resources.

Four conditions must hold for a deadlock to occur:

Exclusive use – when a process accesses a resource, it is granted exclusive use of that resource.

Hold and wait – a process is allowed to hold onto some resources while it is waiting for other resources.

No preemption – a process cannot preempt or take away the resources held by another process.

Cyclical wait – there is a circular chain of waiting processes, each waiting for a resource held by the next process in the chain.

Deadlock prevention

Mutual exclusion, hold-and-wait, no pre-emption and circular wait are the necessary and sufficient conditions for a deadlock to occur. If we do not allow any one of the four conditions to occur, then there is no chance of a deadlock.

Based on this idea, the three important deadlock prevention methods are:

o Collective requests (denies the hold-and-wait condition)

o Ordered requests (denies the circular-wait condition)

o Pre-emption (denies the no-pre-emption condition)

1) Collective Requests

This method denies the hold-and-wait condition by ensuring that whenever a process requests a resource, it does not hold any other resources.

A process must collect all its resources before it commences execution.

Any one of the following resource allocation policies can be used:

o Either all the requested resources are allocated, or the process waits.

o Holding and requesting is not allowed: a process that holds some resources must release them and then re-request the necessary resources.

2) Ordered Requests

o In this method, which denies the circular-wait condition, each resource type is assigned a unique global number to ensure a total ordering of all resource types.

o The resource allocation policy is such that a process can request a resource at any time, but the process must not request a resource with a number lower than the number of any of the resources that it is already holding.

o The ordering of requests is based on the usage pattern of the resources.

o For example, if a tape drive is usually needed before a printer, the tape drive can be assigned a lower number than the printer.

o A limitation of this algorithm is that this natural numbering may not be suitable for all resources.

o Once the ordering is decided, it is coded into the programs and will stay for a long time. Reordering will require reprogramming of several jobs.

o Reordering will be essential when new resources are added to the system.

o In spite of these limitations, the method of ordered requests is one of the most efficient methods for handling deadlocks.
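The ordered-requests policy can be illustrated with ordinary locks. The sketch below is an assumption-laden example, not a prescribed implementation: the class name, the resource names, and their numbers are hypothetical, and local `threading.Lock` objects stand in for distributed resources.

```python
import threading

class OrderedResources:
    """Resources that may only be requested in ascending global number."""

    def __init__(self, numbering):
        # numbering maps a resource name to its unique global number.
        self.numbering = dict(numbering)
        self.locks = {name: threading.Lock() for name in self.numbering}

    def may_request(self, held, requested):
        """Policy check: the requested number must not be lower than any held one."""
        return all(self.numbering[requested] >= self.numbering[h] for h in held)

    def acquire_all(self, names):
        """Acquire the named resources in ascending number order and return that order."""
        order = sorted(set(names), key=lambda name: self.numbering[name])
        for name in order:
            self.locks[name].acquire()
        return order

    def release_all(self, names):
        for name in set(names):
            self.locks[name].release()
```

Because every process acquires in the same global order, no cycle of waiting processes can form, which is exactly the circular-wait condition being denied.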

3) Pre-emption

A pre-emptable resource is a resource whose state can be saved and restored later.

The resource can be taken away temporarily from the process to which it is currently allocated without disturbing the computation performed.

Typical examples of pre-emptable resources are CPU registers and main memory.

8) What is a name server? What is namespace? Explain the name resolution.

Name Server

A name server is a process that maintains information about named objects and enables users to access this information.

It also binds the object’s name to its properties (such as location).

Multiple name servers are used to manage the name space.

Each name server stores information about a subset of the objects in the distributed system.

The authoritative name servers store the information about the objects, and the naming service maintains these names.

The authoritative name servers maintain information as follows:

The root node stores information only about the location of the name servers that branch out from the root: D1, D2, and D3.

Domain D1 node stores information about domains D4 and D5.

Domain D3 node stores information about domains D6, D7 and D8.

It is evident that the amount of configuration data to be maintained by name servers at various levels in the hierarchy is directly proportional to the degree of branching of the namespace tree.

Hence, a hierarchical naming convention with multiple levels is better suited for a large number of objects rather than a flat namespace.

Figure: Name servers in hierarchical namespace

Name Space

A name space is defined as the set of names within a distributed system that complies with the naming convention. It can be classified as a flat namespace or a partitioned namespace.

A Context is an environment within which a name is valid. The same object can be named using abbreviation/alias, absolute/relative name, generic/multicast name, descriptive/attribute based name, or source routing name.

Types of Name Space are:

o Flat Namespace

o Partitioned Namespace

Name Resolution

Name resolution is the process of mapping an object’s name to its properties, such as its location.

This process is carried out at the authoritative name servers because the object’s properties are stored and maintained in that name server.

The initial step of the name resolution process is to locate the authoritative name server of the objects, followed by invoking operations for either reading or updating the object’s properties.

The name resolution process works as follows:

A client contacts the name agent.

The name agent contacts a known name server to locate the object.

If the object is not located there, this known server contacts other name servers.

The name servers are treated as objects, and they identify each other with system-oriented low-level names.

In a partitioned namespace, the name resolution process involves traversing the context chain until the specific authoritative name server is encountered.

As shown in the figure below, the name is first interpreted in the context with which it is associated. This interpretation returns one of two possible values:

Returns the name of the authoritative name server of the named object and the name resolution process ends.

Returns the new context to interpret the name and the process of name resolution continues till the authoritative name server for that object is encountered.
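The context-by-context interpretation can be sketched as a loop. Everything concrete here is hypothetical: the function name, the `/`-separated name syntax, and the table format in which each context maps a name component either to a new context or to an authoritative name server.

```python
def resolve(name, contexts, start_context="root"):
    """Follow the context chain until an authoritative name server is returned.

    contexts maps a context name to a table: component -> (kind, value),
    where kind is "context" (continue there) or "server" (resolution ends).
    """
    context = start_context
    for component in name.strip("/").split("/"):
        try:
            kind, value = contexts[context][component]
        except KeyError:
            raise LookupError(f"cannot interpret {component!r} in context {context!r}") from None
        if kind == "server":
            return value          # authoritative name server found
        context = value           # a new context: keep interpreting
    raise LookupError(f"no authoritative name server found for {name!r}")
```

For example, with `contexts = {"root": {"D1": ("context", "D1")}, "D1": {"D4": ("server", "ns-D4")}}`, the call `resolve("D1/D4/file", contexts)` passes through context D1 and returns `"ns-D4"`.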

9) Why network system protocols are unsuitable for Distributed Systems? Explain any one communication protocol for Distributed System. OR Why traditional network protocols are not suitable for distributed systems? Explain VMTP protocol used for distributed system.

Traditional protocols do not support distributed systems, which have the following requirements:

Transparency: Communication protocols for distributed systems should use location-independent identifiers for processes, which should not change even if the process migrates to another node.

Client-server based communication: Client requests the server to perform a specific task. The server executes the task and responds with a reply. Hence, protocols for distributed systems must be connectionless with features to support request/response behavior.

Group communication: Protocols should support group communication facility so that messages can be selectively sent to a group or to the entire system by defining multicast or broadcast address respectively.

Security: Protocols must support flexible encryption mechanism, which can be used if a network path is not trustworthy.

Network management: Protocols must be capable of handling dynamic changes in network configuration.

Scalability: Protocols should be scalable to support both LAN and WAN technologies.

Versatile message transfer protocol (VMTP):

VMTP supports request/response behavior.

It provides transparency, group communication, a selective retransmission mechanism, and rate-based flow control.

It supports execution of non-idempotent operations and conditional delivery for real-time communication.

This connectionless protocol provides end-to-end datagram delivery.

As shown in the figure, a message is divided into segments of up to 16 KB, each of which is split into 512-byte blocks.

Each packet consists of data and a 32-bit delivery mask field, which indicates the blocks of the segment that are present in the packet.

Figure: Packet Transmission
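To see how a delivery mask can drive selective retransmission, consider the sketch below. It assumes that one mask bit stands for one 512-byte block, so that a 32-bit mask covers a 16 KB segment (32 x 512 bytes). The helper names and the choice of bit 0 for the first block are illustrative assumptions and are not taken from the protocol specification.

```python
BLOCK_SIZE = 512          # bytes per block (assumed)
BLOCKS_PER_SEGMENT = 32   # one mask bit per block: 32 * 512 bytes = 16 KB

def delivery_mask(block_indices):
    """Build a 32-bit mask with one bit set for each block carried in a packet."""
    mask = 0
    for index in block_indices:
        if not 0 <= index < BLOCKS_PER_SEGMENT:
            raise ValueError("block index out of range")
        mask |= 1 << index
    return mask

def missing_blocks(segment_length, received_masks):
    """Return the block indices the receiver still needs for a segment."""
    needed = -(-segment_length // BLOCK_SIZE)   # ceiling division
    received = 0
    for mask in received_masks:
        received |= mask
    return [i for i in range(needed) if not received & (1 << i)]
```

The receiver can report the missing indices so that only those blocks are retransmitted, rather than the whole segment.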

The VMTP request-response behavior is depicted in figure 2-22. This technique provides feedback when the transmission rate is high.

Rate-based flow control mechanism: VMTP uses a burst protocol and sends blocks of packets to the receiver with spaced inter-packet gaps.

This technique helps in matching data transmission and reception speeds.

Support for non-idempotent operations: an example is a money transfer from a bank account. The server does not respond to such a request by executing it more than once.

Conditional delivery for real-time communication: a message should be delivered only if the server can process it immediately.

10) What are the desirable features of good Message Passing system? Explain each briefly.

The major functions of the message-passing system include efficient message delivery and availability of communication progress information. The message passing model has gained wide use in the field of parallel computing, as it offers various advantages.

Hardware match: The message-passing model fits well on parallel supercomputers and clusters of workstations, which are composed of separate processors connected by a communication network.

Functionality: Message passing offers a full set of functions for expressing parallel algorithms, providing control that is not found in data-parallel and compiler-based models.

Performance: Effective use of modern CPUs requires management of the memory hierarchy, especially the cache. Message passing achieves this by giving programmers explicit control of data locality.

Uniform semantics: Uniform semantics are used for both send and receive operations and for local as well as remote processes.

Efficiency: An optimization technique for efficient message passing is to avoid the repeated costs of setting up and terminating a connection for multiple message transfers between the same processes.

Reliability: A good message-passing system should cope with failures and guarantee message delivery. The techniques used to ensure reliability include handling lost and duplicate messages.

Acknowledgements and retransmissions based on a time-out mechanism are used to handle lost messages. A sequence number is assigned to each message so that duplicate messages can be detected and handled.

Correctness: A message-passing system supports group communication, i.e. it allows a message to be sent to multiple or all receivers, or to be received from one or more senders. Correctness is related to IPC protocols and is often required for group communication.

Flexibility: The message-passing system should be flexible enough to cater to the varied requirements of the application in terms of reliability. The correctness requirement and IPC primitives like synchronous or asynchronous send/receive are used to control the flow of messages between the sender and the receiver.

Portability: It should be possible to construct a new IPC facility on another system by reusing the basic design of the existing message-passing system. The application should be designed using high level primitives to hide the heterogeneous nature of the network.

Security: A good message-passing system should provide secure end-to-end communication. Only authenticated users are allowed to access a message in transit. The steps necessary for secure communication include authentication of the receiver by the sender, authentication of the sender by the receiver, and encryption of the message prior to sending it over the network.
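The reliability feature above (acknowledgements, time-out based retransmission, and sequence numbers for duplicates) can be sketched as a small simulation. The class and function names are hypothetical, and the list `ack_lost` merely simulates which acknowledgements fail to reach the sender before its timer expires.

```python
class Receiver:
    """Delivers each sequence number once and acknowledges every copy."""

    def __init__(self):
        self.seen = set()
        self.delivered = []

    def receive(self, seq, payload):
        if seq not in self.seen:          # a repeated seq is a duplicate: not delivered again
            self.seen.add(seq)
            self.delivered.append(payload)
        return ("ACK", seq)               # acknowledge duplicates too

def send_with_retries(receiver, seq, payload, ack_lost, max_attempts=5):
    """Retransmit until an acknowledgement arrives; return the attempts used.

    ack_lost holds one boolean per attempt: True means the acknowledgement
    was lost, so the sender times out and retransmits.
    """
    for attempt, lost in zip(range(1, max_attempts + 1), ack_lost):
        receiver.receive(seq, payload)
        if not lost:
            return attempt
    raise TimeoutError("no acknowledgement received")
```

Even when the message is sent three times because two acknowledgements are lost, the application at the receiver sees it only once.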

11) Define thrashing in DSM. Also explain methods for solving thrashing problem in DSM.

Thrashing occurs when the system spends a large amount of time transferring shared data blocks from one node to another.

It is a serious performance problem with DSM systems that allow data blocks to migrate from one node to another.

Thrashing may occur in the following situations:

o When interleaved data accesses made by processes on two or more nodes cause a data block to move back and forth from one node to another in quick succession (a ping-pong effect).

o When blocks with read-only permissions are repeatedly invalidated soon after they are replicated.

Such situations indicate poor (node) locality in references. If not properly handled, thrashing degrades system performance considerably.

The following methods may be used to solve the thrashing problem in DSM systems.

1) Providing application-controlled locks.

Locking data to prevent other nodes from accessing it for a short period of time can reduce thrashing.

An application-controlled lock can be associated with each data block to implement this method.

2) Nailing a block to a node for a minimum amount of time.

A block is not allowed to be taken away from a node until a minimum amount of time t has elapsed after its allocation to that node.

The time t can either be fixed statically or be tuned dynamically on the basis of access patterns.

The main drawback of this scheme is that it is very difficult to choose the appropriate value for the time.

If the value is fixed statically, it is liable to be inappropriate in many cases.

Therefore, tuning the value of t dynamically is the preferred approach in this case; the value of t for a block can be decided based on past access patterns of the block.
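The nailing rule can be sketched as a check made whenever another node asks for the block. The class name and the explicit `now` arguments (used instead of a real clock so that the behavior is easy to follow) are assumptions for illustration.

```python
class NailedBlock:
    """A shared block that stays on its node for at least min_hold time units."""

    def __init__(self, owner, now, min_hold):
        self.owner = owner
        self.allocated_at = now
        self.min_hold = min_hold      # the time t; may be retuned between migrations

    def request_migration(self, new_owner, now):
        """Move the block only if t has elapsed since its allocation."""
        if now - self.allocated_at < self.min_hold:
            return False              # still nailed: the request is refused
        self.owner = new_owner
        self.allocated_at = now       # the nailing period restarts on the new node
        return True
```

A dynamic scheme could adjust `min_hold` after each migration based on how often the block was recently requested.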

3) Tailoring the coherence algorithm to the shared data usage pattern.

Thrashing can also be minimized by using different coherence protocols for shared data having different characteristics.

For example, the coherence protocol used in Munin for write-shared variables avoids the false sharing problem, which ultimately results in the avoidance of thrashing.

12) Why mutual exclusion is more complex in distributed systems? Categorize and compare mutual exclusion algorithms.

In distributed systems, Mutual Exclusion is more complex due to the following reasons:

No shared memory

Delays in propagation of information which are unpredictable

Synchronization issues with clocks

Need for ordering of events

Several algorithms are used to implement critical sections and mutual exclusion in distributed systems:

Centralized algorithm

Distributed algorithm

Token Ring algorithm

A brief comparison of the centralized, distributed, and token ring mutual exclusion algorithms is shown in the table.

| Algorithm | Messages per entry/exit | Delay before entry (in message times) | Problems |
|---|---|---|---|
| Centralized | 3 | 2 | Coordinator crash |
| Distributed | 2(n - 1) | 2(n - 1) | Crash of any process |
| Token Ring | 1 to ∞ | 0 to (n - 1) | Lost token, process crash |
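The figure of 3 messages per entry/exit for the centralized algorithm corresponds to one request, one grant, and one release. The sketch below counts these messages for a coordinator with a FIFO queue; the class and method names are hypothetical, and message passing is simulated by direct method calls.

```python
from collections import deque

class Coordinator:
    """Grants the critical section to one process at a time."""

    def __init__(self):
        self.holder = None
        self.waiting = deque()
        self.messages = 0

    def request(self, pid):
        self.messages += 1                # REQUEST from the process
        if self.holder is None:
            self.holder = pid
            self.messages += 1            # GRANT sent immediately
            return True
        self.waiting.append(pid)          # no reply yet: the process stays blocked
        return False

    def release(self, pid):
        if pid != self.holder:
            raise ValueError("only the holder can release")
        self.messages += 1                # RELEASE from the process
        self.holder = None
        if self.waiting:
            self.holder = self.waiting.popleft()
            self.messages += 1            # deferred GRANT to the next process
        return self.holder
```

Two processes entering and leaving the critical section once each generate six messages in total, and a crash of the coordinator stops all progress, which is the problem listed in the table.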

The basic design principle is that building fault handling into algorithms for distributed systems is hard.

Also, crash recovery is subtle and introduces an overhead in normal operation.