
Int Jr of Advanced Computer Engineering and Architecture, Vol. 1, No. 1, June 2011
Copyright Mind Reader Publications, www.ijacea.yolasite.com

The Applications and Simulation of Adaptive Filter in Speech Enhancement

Soni Changlani, Dr. M.K. Gupta

Assistant Professor, Department of Electronics and Communication Engineering, Lakshmi Narain College of Technology, Bhopal

Department of Electronics and Communication Engineering, Maulana Azad National Institute of Technology, Bhopal

Abstract

In many noise cancellation applications the signal characteristics can change quite fast, which calls for adaptive algorithms that converge rapidly. Least mean square (LMS) and normalized LMS (NLMS) adaptive filters have been used in a wide range of signal processing applications because of their computational simplicity and ease of implementation. The recursive least squares (RLS) algorithm has established itself as the “ultimate” adaptive filtering algorithm, in the sense that it exhibits the best convergence behavior. Unfortunately, practical implementations of this algorithm are often associated with high computational complexity and/or poor numerical properties. In this paper we implement and compare these classical adaptive filters for attenuating noise in speech signals. For each algorithm, the optimum filter order has also been determined experimentally. The objective of noise cancellation is to produce an estimate of the noise signal, subtract it from the noisy signal, and hence obtain a noise-free signal. We simulate the adaptive noise canceller in MATLAB.

Keywords: noise canceller; adaptive algorithm; LMS; NLMS; RLS

Soni Changlani 96

1 Introduction

Linear filtering is required in a variety of applications. A filter will be optimal only if it is designed with some knowledge of the input data. If this information is not known, adaptive filters [1] are used. An adaptive filter is essentially a digital filter with self-adjusting characteristics: it adapts automatically to changes in its input signals. In adaptive filtering, the adjustable filter parameters are optimized, and the optimization criterion should take into account both filter performance and realizability. Adaptive algorithms are used to adjust the coefficients of the digital filter. Common algorithms that have found widespread application are the least mean square (LMS), the recursive least squares (RLS), and the Kalman filter [3] algorithms. The RLS algorithm is one of the most popular adaptive algorithms. Compared with the LMS algorithm, it offers a superior convergence rate, especially for highly correlated input signals; the price paid for this is increased computational complexity.
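To make the idea of coefficient adjustment concrete, here is a minimal sketch of the LMS update in one common form, w(n+1) = w(n) + μ e(n) x(n), written in plain Python. It is an illustration only, not code from this paper: the function name lms_step, the step size μ = 0.05 and the two-tap test system h are our own assumptions.

```python
import random


def lms_step(w, x, d, mu):
    """One LMS iteration: w(n+1) = w(n) + mu * e(n) * x(n) (illustrative sketch).

    w  : current filter coefficients
    x  : tap-input vector, newest sample first (same length as w)
    d  : desired response at this time step
    mu : step size (assumed small and positive)
    Returns the updated coefficients and the error e(n) = d(n) - y(n).
    """
    y = sum(wi * xi for wi, xi in zip(w, x))               # filter output
    e = d - y                                              # estimation error
    w_new = [wi + mu * e * xi for wi, xi in zip(w, x)]     # stochastic-gradient step
    return w_new, e


# Usage: learn a hypothetical 2-tap system h = [0.5, -0.3] from noise-free data.
random.seed(0)
h = [0.5, -0.3]
w = [0.0, 0.0]
x_prev = 0.0
for _ in range(2000):
    x_now = random.gauss(0.0, 1.0)
    x = [x_now, x_prev]
    d = h[0] * x_now + h[1] * x_prev
    w, e = lms_step(w, x, d, mu=0.05)
    x_prev = x_now
print("learned coefficients:", [round(c, 3) for c in w])
```

The per-sample cost of this update grows only linearly with the number of weights, in contrast to the roughly quadratic growth of RLS.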

2 RLS Adaptive Algorithm

The RLS (recursive least squares) algorithm is another algorithm for determining the coefficients of an adaptive filter. In contrast to the LMS algorithm, the RLS algorithm uses information from all past input samples (not only from the current tap-input samples) to estimate the autocorrelation matrix of the input vector, or more precisely its inverse. The RLS algorithm is a recursive form of the least squares (LS) algorithm. It is recursive because the coefficients at time n are found by updating the coefficients at time n-1 using the new input data, whereas the LS algorithm is a block-update algorithm in which the coefficients are computed from scratch at each sample time. To decrease the influence of input samples from the far past, each sample is given a weighting factor. This weighting factor is introduced in the cost function.

$$\varepsilon(n) = \sum_{i=1}^{n} \lambda^{\,n-i}\,\lvert e(i)\rvert^{2} \qquad (1)$$

where e(i) is the difference between the desired response and the output produced by the filter, and λ is a special weighting factor known as the forgetting factor. The performance of RLS-type algorithms in terms of convergence rate, tracking, misadjustment, and stability depends on the forgetting factor. The classical RLS algorithm uses a constant forgetting factor and therefore has to trade these criteria off against one another. When the forgetting factor is very close to one, the algorithm achieves low misadjustment and good stability, but its tracking capability is reduced. A smaller forgetting factor improves tracking but increases the misadjustment, and it can affect the stability of the algorithm. The forgetting factor is intended to ensure that data in the distant past are “forgotten”, so that the filter can follow the statistical variations of the observable data when it operates in a nonstationary environment. λ is a positive constant close to, but less than, 1, and the convergence rate of the RLS algorithm also depends on it.
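The role of λ in equation (1) can be checked numerically. The sketch below, using hypothetical helper names and a made-up error sequence, evaluates the weighted cost both directly and through the one-sample recursion ε(n) = λ ε(n-1) + e²(n), which mirrors the sample-by-sample structure that RLS exploits. It also prints the commonly quoted effective memory 1/(1 - λ); this becomes unbounded for λ = 1, the value used in the simulation of Section 4, meaning every past sample is weighted equally.

```python
import math


def weighted_cost_direct(errors, lam):
    """Eq. (1) evaluated directly: sum_{i=1..n} lam**(n-i) * e(i)**2."""
    n = len(errors)
    return sum(lam ** (n - i) * errors[i - 1] ** 2 for i in range(1, n + 1))


def weighted_cost_recursive(errors, lam):
    """Same cost built sample by sample: eps(n) = lam * eps(n-1) + e(n)**2."""
    cost = 0.0
    for e in errors:
        cost = lam * cost + e * e
    return cost


errors = [1.0, -0.5, 0.25, 2.0]     # made-up error sequence e(1)..e(4)
for lam in (1.0, 0.99, 0.9):
    direct = weighted_cost_direct(errors, lam)
    recursive = weighted_cost_recursive(errors, lam)
    # Approximate memory length: samples older than ~1/(1 - lam) carry little weight.
    memory = math.inf if lam == 1.0 else 1.0 / (1.0 - lam)
    print(f"lam={lam}: direct={direct:.4f}, recursive={recursive:.4f}, memory ~ {memory:.0f} samples")
```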

3 Adaptive Noise Cancellation

The basic idea of an adaptive noise cancellation algorithm is to pass the noisy signal through a filter that tends to suppress the noise while leaving the signal unchanged. The process is adaptive, which means it does not require a priori knowledge of the signal or noise characteristics: the technique adaptively adjusts a set of filter coefficients so as to remove the noise from the noisy signal. To realize adaptive noise cancellation, we use two inputs and an adaptive filter. One input is the signal corrupted by noise (the primary input, s(n) + N0(n)); the other input contains noise that is related in some way to the noise in the primary input but contains nothing related to the signal (the noise reference input, N1(n)). The noise reference input passes through the adaptive filter, which produces an output y(n) that is as close a replica as possible of N0(n). During this process the filter continuously readjusts itself to minimize the error between N0(n) and y(n). The output y(n) is then subtracted from the primary input to produce the system output e = s + N0 - y, which is the denoised signal. Assume that s, N0, N1 and y are statistically stationary and have zero means, and that s is uncorrelated with N0 and N1 while N1 is correlated with N0. The expectation of the squared output is then:

$$E[e^{2}] = E[s^{2}] + E[(N_0 - y)^{2}] \qquad (2)$$

Because E[s²] does not depend on the filter coefficients, adjusting the filter so that E[e²] is minimized also minimizes E[(N0 - y)²]. The system output e can therefore serve as the error signal for the adaptive filter.
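The step from the signal model to equation (2) can be written out explicitly. This sketch assumes, as stated above, that s has zero mean and is uncorrelated with N0 and with every reference sample N1 that the filter combines into y (treating the filter coefficients as fixed or slowly varying), so the cross term vanishes:

$$
\begin{aligned}
e &= s + (N_0 - y),\\
E[e^{2}] &= E[s^{2}] + 2\,E[s\,(N_0 - y)] + E[(N_0 - y)^{2}],\\
E[s\,(N_0 - y)] &= E[s\,N_0] - E[s\,y] = 0,\\
\Rightarrow\quad E[e^{2}] &= E[s^{2}] + E[(N_0 - y)^{2}].
\end{aligned}
$$

Since only the second term depends on the filter, min E[e²] = E[s²] + min E[(N0 - y)²]; in the ideal case y = N0 the output reduces to e = s.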

Fig. 1: Adaptive noise canceller (primary input d(n) = s(n) + N0(n); reference input x(n) = N1(n) drives the adaptive filter, whose output y(n) is subtracted from d(n) to give the output e(n)).


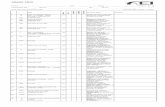

4 Simulation And Results:

This section describes the main parts of the MATLAB program and the results. The program can be divided into four parts. First, the MATLAB function randn(N,1) is used to generate the noise x(k), which has zero mean and unit power, where N is the number of samples. Second, the information bearing signal is generated as a sine wave of 0.055 cycles/sample; the noise picked up by the secondary microphone is the input to the RLS adaptive filter. Third, the noise that corrupts the sine wave is generated as a low-pass filtered version of (and therefore correlated with) this noise; the sum of the filtered noise and the information bearing signal is the desired signal for the adaptive filter. Fourth, the RLS adaptive filter parameters are set and initialized: filter order N = 32 and exponential weighting factor lam = 1.
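The four parts described above can be combined into a short end-to-end sketch. It is written in plain Python rather than MATLAB and is not the authors' program: random.gauss stands in for randn, and the sample count (1000), the random seed, the initialization P(0) = I/δ with δ = 0.01, and the low-pass filter (a hypothetical 4-point moving average, since the text does not specify the filter) are our own assumptions. The sine frequency of 0.055 cycles/sample, the filter order of 32 and λ = 1 follow the text. The update is the standard RLS recursion (gain vector, a priori error, weight update, inverse-correlation update) as presented in textbooks such as [2].

```python
import math
import random


def rls_anc(x, d, order=32, lam=1.0, delta=0.01):
    """RLS adaptive noise canceller (illustrative sketch, not the paper's code).

    x     : reference noise fed to the adaptive filter (secondary microphone)
    d     : desired/primary signal = information signal + filtered noise
    order : number of filter weights
    lam   : exponential weighting (forgetting) factor
    delta : regularization, P(0) = I / delta (assumed value)
    Returns the error signal e(n) = d(n) - y(n), i.e. the enhanced signal,
    and the final weight vector.
    """
    w = [0.0] * order
    P = [[1.0 / delta if i == j else 0.0 for j in range(order)] for i in range(order)]
    taps = [0.0] * order                     # tap-input vector, newest sample first
    errors = []
    for xn, dn in zip(x, d):
        taps = [xn] + taps[:-1]
        Px = [sum(P[i][j] * taps[j] for j in range(order)) for i in range(order)]
        denom = lam + sum(t * p for t, p in zip(taps, Px))
        k = [p / denom for p in Px]                        # gain vector
        e = dn - sum(wi * t for wi, t in zip(w, taps))     # a priori error
        w = [wi + ki * e for wi, ki in zip(w, k)]          # weight update
        # P(n) = (P(n-1) - k x^T P(n-1)) / lam, using x^T P = (P x)^T for symmetric P
        P = [[(P[i][j] - k[i] * Px[j]) / lam for j in range(order)] for i in range(order)]
        errors.append(e)
    return errors, w


random.seed(1)
n_samples = 1000
noise = [random.gauss(0.0, 1.0) for _ in range(n_samples)]   # plays the role of randn(N,1)
signal = [math.sin(2 * math.pi * 0.055 * k) for k in range(n_samples)]
lowpass = [0.25, 0.25, 0.25, 0.25]   # hypothetical low-pass FIR (4-point moving average)
filtered = [sum(lowpass[m] * noise[k - m] for m in range(len(lowpass)) if k >= m)
            for k in range(n_samples)]
desired = [s + v for s, v in zip(signal, filtered)]

enhanced, weights = rls_anc(noise, desired, order=32, lam=1.0)

# Residual noise power before and after cancellation, skipping the start-up transient.
start = 200
before = sum((desired[k] - signal[k]) ** 2 for k in range(start, n_samples)) / (n_samples - start)
after = sum((enhanced[k] - signal[k]) ** 2 for k in range(start, n_samples)) / (n_samples - start)
print(f"residual noise power: before {before:.4f}, after {after:.4f}")
print("first four weights:", [round(c, 3) for c in weights[:4]])
```

Because λ = 1 weights all past samples equally, the first four weights should settle near the moving-average coefficients and the error signal e(n) should approach the sine wave. With nonstationary noise a λ slightly below 1 would be needed, at the cost of higher misadjustment, as discussed in Section 2.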

Fig. 2: The information bearing signal


Fig. 3: Noise picked up by the secondary microphone

Fig. 4: Input to the adaptive filter (desired input = signal + filtered noise)



Fig. 5: Response of the adaptive filter (magnitude vs. normalized frequency; curves: adaptive filter response and required filter response)

Fig. 6: Information signal and error signal (original information bearing signal and the error signal)

5 Conclusion

Simulation results for filter length N = 32 and forgetting factor λ = 1 show that, when speech enhancement is performed with the RLS algorithm, the filter order should be taken high. The RLS algorithm converges quickly, but its computational complexity grows roughly with the square of the number of weights, and the algorithm can also become unstable when the number of weights is large.


References


[1] E. C. Ifeachor, B. W. Jervis, "Digital Signal Processing: A Practical Approach", 2nd edition, Pearson Education. ISBN 81-7808-609-3.

[2] S. Haykin, "Adaptive Filter Theory", 4th edition, Pearson Education. ISBN 978-81-317-0869-9.

[3] B. Widrow, S. D. Stearns, "Adaptive Signal Processing", 3rd impression, Pearson Education, 2009. ISBN 978-81-317-0532-2.

[4] Y. He, H. He, L. Li, Y. Wu, "The Applications and Simulation of Adaptive Filter in Noise Canceling", International Conference on Computer Science and Software Engineering, 2008, pp. 1-4.

[5] Gnitecki, Z. Moussavi, H. Pasterkamp, "Recursive Least Squares Adaptive Noise Cancellation Filtering for Heart Sound Reduction in Lung Sounds Recordings", IEEE Proc., 2003, pp. 2416-2419.

[6] J. Ondracka, R. Oravec, J. Kadlec, E. Cocherová, "Simulation of RLS and LMS Algorithms for Adaptive Noise Cancellation in MATLAB".

[7] M. Honig, D. Messerschmitt, "Adaptive Filters: Structures, Algorithms and Applications", Kluwer Academic Publishers, 1984, pp. 1061-1064. ISBN-13: 978-0898381634.