-,2& A * 0 4,%-1+-*-%' * '+9* 4-,1on-demand.gputechconf.com/gtc/2013/poster/pdf/P0166_ramses.pdf ·...

Transcript of -,2& A * 0 4,%-1+-*-%' * '+9* 4-,1on-demand.gputechconf.com/gtc/2013/poster/pdf/P0166_ramses.pdf ·...

Category: Computational physiCsposter

Cy08contact name

claudio Gheller: [email protected]

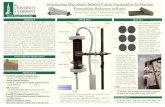

RAMSES on the GPU: Accelera4ng Cosmological Simula4ons

Claudio Gheller (ETH-‐CSCS), Romain Teyssier (University of Zurich), Alistair Hart (CRAY)

Numerical simula,ons represent one of the most effec,ve tools to study and to solve astrophysical problems. Thanks to the enormous technological progress in the recent years, the available supercomputers allow now to study the details of complex processes, like galaxy forma,on or the evou,on of the large scale structure of the universe. Sophis,cated numerical codes can exploit the most advanced HPC architectures to simulate such phenomena. RAMSES is a prime example of such codes.

RAMSES (R.Teyssier, A&A, 385, 2002) is a Fortran 90 code, designed for the study of a number astrophysical problems on different scales (e.g. star forma,on, galaxy dynamics, large scale structure of the universe evolu,on), trea,ng at the same ,me various components (dark energy, dark maQer, baryonic maQer, photons) and including a variety of physical processes (gravity, magnetohydrodynamics, chemical reac,ons, star forma,on, supernova and AGN feedback, etc.).

The most relevant characteris,cs of RAMSES are: 1. High accuracy in describing the baryonic maQer behavior (hydrodynamics approach

based on various Godunov schema). 2. High spa,al resolu,on, thanks to the exploita,on of the Adap,ve Mesh Refinement

(AMR) approach. 3. High performance, thanks to the exploita,on of an hybrid MPI + OpenMP approach.

AMR is the key for the high quality of RAMSES results. It defines the geometry of the computa,onal mesh where MHD equa,ons are solved. RAMSES’ AMR is based on a Graded Octree: Fully Threaded Tree (Khokhlov, J.Comput.Physics, 143, 98). Cartesian mesh refined on a cell by cell basis. Each cell (in 3D) can be refined in octs: small grid of 8 cells Each oct has 17 pointers (arrays of indexes) to: • 1 parent cell; • 6 neighboring parent cells; • 8 children octs; • 2 linked list indices. Pointers allow to navigate the tree. An effec4ve management of the AMR structure is crucial for performance and for ge[ng an efficient parallelism.

MPI domain decomposi4on adopts the Peano-‐Hilbert curve

This work was developed in the framework of: WP8-‐PRACE-‐2IP 7th Framework EU funded project (h@p://www.prace-‐ri.eu/, grant agreement number: RI-‐283493)

HP2C project -‐ Swiss Pla_orm for High-‐Performance and High-‐ProducFvity CompuFng (h@p://www.hp2c.ch/)

TWO MAIN RAMSES’ COMPONENTS MOVED TO THE GPU

1. ATON Radia4ve Transfer & Ioniza4on module (D.Aubert, R.Teyssier, MNRAS, 397, 2008)

Example of decoupled CPU-‐GPU computa,on: solve radia,ve transfer for reioniza,on calcula,on on the GPU, keeping hydro on CPU Main steps: • Solve hydrodynamics step on the CPU • CPU (host) sends hydro data to the GPU, • the host asks to the GPU to compute the

radia4on transport via finite differences… • …then the chemistry… • …and finally the cooling. • Host starts the next 4me step Implemented adop,ng the CUDA programming model.

2. Hydrodynamic Solver

• The Hydro kernel is the most relevant algorithmic component of RAMSES.

• Hydro data are calculated on the AMR mesh.

• GPU parallel efficiency depends cri4cally on data access in the AMR structure.

The Hydro kernel is begin implemented on the GPU with a direc4ve based program-‐ming model, using the OpenACC API (hQp://www.openacc-‐standard.org)

Two different aiempts… (A and B)

Use case: ioniza,on of a uniform medium by a point source (643 cells computa,onal mesh benchmark).

CPU version sec Speed-‐up 1 core 247 145.3 8 cores 34 20 16 cores 18 10.5

GPU version sec 1 GPU 1.7

H I frac,on, cut through the simula,on volume at coordinate z = 0 at ,me t = 500 Myr

System used for the tests: TODI CRAY XK7 system @ CSCS hip://user.cscs.ch/hardware/todi_cray_xk7

272 nodes; 16-‐core AMD Opteron CPU per node; 32 GB DDR3 memory; 1 NVIDIA Tesla K20X GPU per node; 6 GB of GDDR5 memory per GPU.

Solu4on B: (implementa4on in progress…)

Re-‐arrange data in regular rectangular patches before entering the Hydro solver. GPU solves one patch at a 4me, exploi4ng regularity and locality of data organiza4on. Asynchronous data copy is exploited to overlap computa4on to data copy: • The first patch is copied on the GPU. • GPU solves it. • At the same ,me, the control go back to the

CPU that starts copying the next patch. • Copy 4me is hidden.

Solu4on A: • Copy data on the GPU (AMR mesh). • Solve the hydro equa4ons on the GPU. • Copy the results (only) back to the CPU. Async opera4ons in this case are not possible

Use case: Sedov blast wave, 1283 mesh. Limited performance gain. Main reasons: • Irregular data distribu4on (AMR tree-‐

like organiza4on). • Size of transferred data (host-‐device). • Low flops per byte ra4o. OpenACC Nvect Ttot sec. Tacc sec. Ttrnsf sec. Speed-‐up OFF -‐ 1 Pe 10 94.54 ON 512 55.83 38.22 9.2 2.01 ON 1024 45.66 29.27 9.2 2.67 ON 2048 42.08 25.36 9.2 3.07 ON 4096 41.32 23.2 9.2 3.29 ON 8192 41.19 23.15 9.2 3.30

Total, GPU compu,ng and GPU-‐CPU data transfer ,me for the RAMSES hydro solver as a func,on of Nvect, a memory management parameter.